I.

Clarke’s First Law goes: When a distinguished but elderly scientist states that something is possible, he is almost certainly right. When he states that something is impossible, he is very probably wrong.

Stuart Russell is only 58. But what he lacks in age, he makes up in distinction: he’s a computer science professor at Berkeley, neurosurgery professor at UCSF, DARPA advisor, and author of the leading textbook on AI. His new book Human Compatible states that superintelligent AI is possible; Clarke would recommend we listen.

I’m only half-joking: in addition to its contents, Human Compatible is important as an artifact, a crystallized proof that top scientists now think AI safety is worth writing books about. Nick Bostrom’s Superintelligence: Paths, Dangers, Strategies previously filled this role. But Superintelligence was in 2014, and by a philosophy professor. From the artifactual point of view, HC is just better – more recent, and by a more domain-relevant expert. But if you also open up the books to see what’s inside, the two defy easy comparison.

S:PDS was unabashedly a weird book. It explored various outrageous scenarios (what if the AI destroyed humanity to prevent us from turning it off? what if it put us all in cryostasis so it didn’t count as destroying us? what if it converted the entire Earth into computronium?) with no excuse beyond that, outrageous or not, they might come true. Bostrom was going out on a very shaky limb to broadcast a crazy-sounding warning about what might be the most important problem humanity has ever faced, and the book made this absolutely clear.

HC somehow makes risk from superintelligence not sound weird. I can imagine my mother reading this book, nodding along, feeling better educated at the end of it, agreeing with most of what it says (it’s by a famous professor! I’m sure he knows his stuff!) and never having a moment where she sits bolt upright and goes what? It’s just a bizarrely normal, respectable book. It’s not that it’s dry and technical – HC is much more accessible than S:PDS, with funny anecdotes from Russell’s life, cute vignettes about hypothetical robots, and the occasional dad joke. It’s not hiding any of the weird superintelligence parts. Rereading it carefully, they’re all in there – when I leaf through it for examples, I come across a quote from Moravec about how “the immensities of cyberspace will be teeming with unhuman superminds, engaged in affairs that are to human concerns as ours are to those of bacteria”. But somehow it all sounds normal. If aliens landed on the White House lawn tomorrow, I believe Stuart Russell could report on it in a way that had people agreeing it was an interesting story, then turning to the sports page. As such, it fulfills its artifact role with flying colors.

How does it manage this? Although it mentions the weird scenarios, it doesn’t dwell on them. Instead, it focuses on the present and the plausible near future, and uses those to build up concepts like “AI is important” and “poorly aligned AI could be dangerous”. Then it addresses those abstractly, sallying into the far future only when absolutely necessary. Russell goes over all the recent debates in AI – Facebook, algorithmic bias, self-driving cars. Then he shows how these are caused by systems doing what we tell them to do (ie optimizing for one easily-described quantity) rather than what we really want them to do (capture the full range of human values). Then he talks about how future superintelligent systems will have the same problem.

His usual go-to for a superintelligent system is Robbie the Robot, a sort of Jetsons-esque butler for his master Harriet the Human. The two of them have all sorts of interesting adventures together where Harriet asks Robbie for something and Robbie uses better or worse algorithms to interpret her request. Usually these requests are things like shopping for food or booking appointments. It all feels very Jetsons-esque. There’s no mention of the word “singleton” in the book’s index (not that I’m complaining – in the missing spot between simulated evolution of programs, 171 and slaughterbot, 111, you instead find Slate Star Codex blog, 146, 169-70). But even from this limited framework, he manages to explore some of the same extreme questions Bostrom does, and present some of the answers he’s spent the last few years coming up with.

If you’ve been paying attention, much of the book will be retreading old material. There’s a history of AI, an attempt to define intelligence, an exploration of morality from the perspective of someone trying to make AIs have it, some introductions to the idea of superintelligence and “intelligence explosions”. But I want to focus on three chapters: the debate on AI risk, the explanation of Russell’s own research program, and the section on misuse of existing AI.

II.

Chapter 6, “The Not-So-Great Debate”, is the highlight of the book-as-artifact. Russell gets on his cathedra as top AI scientist, surveys the world of other top AI scientists saying AI safety isn’t worth worrying about yet, and pronounces them super wrong:

I don’t mean to suggest that there cannot be any reasonable objections to the view that poorly designed superintelligent machines would present a serious risk to humanity. It’s just that I have yet to see such an objection.

He doesn’t pull punches here, collecting a group of what he considers the stupidest arguments into a section called “Instantly Regrettable Remarks”, with the connotation that their authors (“all of whom are well-known AI researchers”) should have been embarrassed to have been seen with such bad points. Others get their own sections, slightly less aggressively titled, but it doesn’t seem like he’s exactly oozing respect for those either. For example:

Kevin Kelly, founding editor of Wired magazine and a remarkably perceptive technology commentator, takes this argument one step further. In “The Myth of a Superhuman AI,” he writes, “Intelligence is not a single dimension, so ‘smarter than humans’ is a meaningless concept.” In a single stroke, all concerns about superintelligence are wiped away.

Now, one obvious response is that a machine could exceed human capabilities in all relevant dimensions of intelligence. In that case, even by Kelly’s strict standards, the machine would be smarter than a human. But this rather strong assumption is not necessary to refute Kelly’s argument.

Consider the chimpanzee. Chimpanzees probably have better short-term memory than humans, even on human-oriented tasks such as recalling sequences of digits. Short-term memory is an important dimension of intelligence. By Kelly’s argument, then, humans are not smarter than chimpanzees; indeed, he would claim that “smarter than a chimpanzee” is a meaningless concept.

This is cold comfort to the chimpanzees and other species that survive only because we deign to allow it, and to all those species that we have already wiped out. It’s also cold comfort to humans who might be worried about being wiped out by machines.

Or:

The risks of superintelligence can also be dismissed by arguing that superintelligence cannot be achieved. These claims are not new, but it is surprising now to see AI researchers themselves claiming that such AI is impossible. For example, a major report from the AI100 organization, Artificial Intelligence and Life in 2030, includes the following claim: “Unlike in the movies, there is no race of superhuman robots on the horizon or probably even possible.”

To my knowledge, this is the first time that serious AI researchers have publicly espoused the view that human-level or superhuman AI is impossible—and this in the middle of a period of extremely rapid progress in AI research, when barrier after barrier is being breached. It’s as if a group of leading cancer biologists announced that they had been fooling us all along: They’ve always known that there will never be a cure for cancer.

What could have motivated such a volte-face? The report provides no arguments or evidence whatever. (Indeed, what evidence could there be that no physically possible arrangement of atoms outperforms the human brain?) I suspect that the main reason is tribalism — the instinct to circle the wagons against what are perceived to be “attacks” on AI. It seems odd, however, to perceive the claim that superintelligent AI is possible as an attack on AI, and even odder to defend AI by saying that AI will never succeed in its goals. We cannot insure against future catastrophe simply by betting against human ingenuity.

If superhuman AI is not strictly impossible, perhaps it’s too far off to worry about? This is the gist of Andrew Ng’s assertion that it’s like worrying about “overpopulation on the planet Mars.” Unfortunately, a long-term risk can still be cause for immediate concern. The right time to worry about a potentially serious problem for humanity depends not just on when the problem will occur but also on how long it will take to prepare and implement a solution. For example, if we were to detect a large asteroid on course to collide with Earth in 2069, would we wait until 2068 to start working on a solution? Far from it! There would be a worldwide emergency project to develop the means to counter the threat, because we can’t say in advance how much time is needed.

Russell displays master-level competence at the proving too much technique, neatly dispatching sophisticated arguments with a well-placed metaphor. Some expert claims it’s meaningless to say one thing is smarter than another thing, and Russell notes that for all practical purposes it’s meaningful to say humans are smarter than chimps. Some other expert says nobody can control research anyway, and Russell brings up various obvious examples of people controlling research, like the ethical agreements already in place on the use of gene editing.

I’m a big fan of Luke Muehlhauser’s definition of common sense – making sure your thoughts about hard problems make use of the good intuitions you have built for thinking about easy problems. His example was people who would correctly say “I see no evidence for the Loch Ness monster, so I don’t believe it” but then screw up and say “You can’t disprove the existence of God, so you have to believe in Him”. Just use the same kind of logic for the God question you use for every other question, and you’ll be fine! Russell does great work applying common sense to the AI debate, reminding us that if we stop trying to out-sophist ourselves into coming up with incredibly clever reasons why this thing cannot possibly happen, we will be left with the common-sense proposition that it might.

My only complaint about this section of the book – the one thing that would have added a cherry to the slightly troll-ish cake – is that it missed a chance to include a reference to On The Impossibility Of Supersized Machines.

Is Russell (or am I) going too far here? I don’t think so. Russell is arguing for a much weaker proposition than the ones Bostrom focuses on. He’s not assuming super-fast takeoffs, or nanobot swarms, or anything like that. All he’s trying to do is argue that if technology keeps advancing, then at some point AIs will become smarter than humans and maybe we should worry about this. You’ve really got to bend over backwards to find counterarguments to this; those counterarguments tend to sound like “but maybe there’s no such thing as intelligence so this claim is meaningless”, and I think Russell treats these with the contempt they deserve.

He is more understanding of – but equally good at dispatching – arguments for why the problem will really be easy. Can’t We Just Switch It Off? No; if an AI is truly malicious, it will try to hide its malice and prevent you from disabling it. Can’t We Just Put It In A Box? No, if it were smart enough it could probably find ways to affect the world anyway (this answer is good as far as it goes, but I think Russell’s threat model also allows a better one: he imagines thousands of AIs being used by pretty much everybody to do everything, from self-driving cars to curating social media, and keeping them all in boxes is no more plausible than keeping transportation or electricity in a box). Can’t We Just Merge With The Machines? Sounds hard. Russell does a good job with this section as well, and I think a hefty dose of common sense helps here too.

He concludes with a quote:

The “skeptic” position seems to be that, although we should probably get a couple of bright people to start working on preliminary aspects of the problem, we shouldn’t panic or start trying to ban AI research. The “believers”, meanwhile, insist that although we shouldn’t panic or start trying to ban AI research, we should probably get a couple of bright people to start working on preliminary aspects of the problem.

I couldn’t have put it better myself.

III.

If it’s important to control AI, and easy solutions like “put it in a box” aren’t going to work, what do you do?

Chapters 7 and 8, “AI: A Different Approach” and “Provably Beneficial AI” will be the most exciting for people who read Bostrom but haven’t been paying attention since. Bostrom ends by saying we need people to start working on the control problem, and explaining why this will be very hard. Russell is reporting all of the good work his lab at UC Berkeley has been doing on the control problem in the interim – and arguing that their approach, Cooperative Inverse Reinforcement Learning, succeeds at doing some of the very hard things. If you haven’t spent long nights fretting over whether this problem was possible, it’s hard to convey how encouraging and inspiring it is to see people gradually chip away at it. Just believe me when I say you may want to be really grateful for the existence of Stuart Russell and people like him.

Previous stabs at this problem foundered on inevitable problems of interpretation, scope, or altered preferences. In Yudkowsky and Bostrom’s classic “paperclip maximizer” scenario, a human orders an AI to make paperclips. If the AI becomes powerful enough, it does whatever is necessary to make as many paperclips as possible – bulldozing virgin forests to create new paperclip mines, maliciously misinterpreting “paperclip” to mean uselessly tiny paperclips so it can make more of them, even attacking people who try to change its programming or deactivate it (since deactivating it would cause fewer paperclips to exist). You can try adding epicycles in, like “make as many paperclips as possible, unless it kills someone, and also don’t prevent me from turning you off”, but a big chunk of Bostrom’s S:PDS was just example after example of why that wouldn’t work.

Russell argues you can shift the AI’s goal from “follow your master’s commands” to “use your master’s commands as evidence to try to figure out what they actually want, a mysterious true goal which you can only ever estimate with some probability”. Or as he puts it:

The problem comes from confusing two distinct things: reward signals and actual rewards. In the standard approach to reinforcement learning, these are one and the same. That seems to be a mistake. Instead, they should be treated separately…reward signals provide information about the accumulation of actual reward, which is the thing to be maximized.

So suppose I wanted an AI to make paperclips for me, and I tell it “Make paperclips!” The AI already has some basic contextual knowledge about the world that it can use to figure out what I mean, and my utterance “Make paperclips!” further narrows down its guess about what I want. If it’s not sure – if most of its probability mass is on “convert this metal rod here to paperclips” but a little bit is on “take over the entire world and convert it to paperclips”, it will ask me rather than proceed, worried that if it makes the wrong choice it will actually be moving further away from its goal (satisfying my mysterious mind-state) rather than towards it.
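To make the "ask rather than proceed" behavior concrete, here is a toy sketch of my own (not Russell's code, and nothing like the real CIRL math). It assumes three made-up candidate goals, an invented prior and likelihood for hearing “Make paperclips!”, an invented payoff table, and a small assumed cost for bothering the human. The robot does a Bayesian update, then compares the expected utility of acting on its best guess against the expected utility of asking first.

```python
ASK_COST = 0.01  # assumed small cost of interrupting the human

# Assumed payoffs, UTILITY[action][true_goal]. All numbers are illustrative.
UTILITY = {
    "use_rod":       {"rod": 1.0,     "world": 0.0, "none": -1.0},
    "convert_world": {"rod": -1000.0, "world": 1.0, "none": -1000.0},
    "do_nothing":    {"rod": 0.0,     "world": 0.0, "none": 1.0},
}


def bayes_update(prior, likelihood):
    """Posterior over goals after one observation (here, an utterance)."""
    unnorm = {g: prior[g] * likelihood[g] for g in prior}
    total = sum(unnorm.values())
    return {g: p / total for g, p in unnorm.items()}


def expected_utility(action, belief):
    return sum(belief[g] * UTILITY[action][g] for g in belief)


def choose(belief):
    """Act on the best guess, or ask first if clarification is worth its cost."""
    best_action = max(UTILITY, key=lambda a: expected_utility(a, belief))
    act_value = expected_utility(best_action, belief)
    # After asking, the robot knows the goal and takes the best action for it.
    ask_value = sum(
        p * max(UTILITY[a][g] for a in UTILITY) for g, p in belief.items()
    ) - ASK_COST
    return "ask" if ask_value > act_value else best_action


# Contextual prior: probably the rod, or nothing at all; world conversion is unlikely.
prior = {"rod": 0.50, "world": 0.02, "none": 0.48}
# Assumed P("Make paperclips!" | goal): the utterance mostly rules out "none".
likelihood = {"rod": 0.90, "world": 0.90, "none": 0.01}

posterior = bayes_update(prior, likelihood)
decision = choose(posterior)
```

With these numbers, the leftover probability on the other goals is enough to make asking worthwhile. Hand the robot a belief that puts all its mass on "rod" and it just makes the paperclips without pestering you.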

Or: suppose the AI starts trying to convert my dog into paperclips. I shout “No, wait, not like that!” and lunge to turn it off. The AI interprets my desperate attempt to deactivate it as further evidence about its hidden goal – apparently its current course of action is moving away from my preference rather than towards it. It doesn’t know exactly which of its actions is decreasing its utility function or why, but it knows that continuing to act must be decreasing its utility somehow – I’ve given it evidence of that. So it stays still, happy to be turned off, knowing that being turned off is serving its goal (to achieve my goals, whatever they are) better than staying on.
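The same logic can be written as a tiny expected-utility calculation. This is a sketch under strong assumptions of my own: the robot's uncertainty about how much I like its current plan is a short list of (utility, probability) pairs, being switched off is worth exactly zero, and I only lunge for the off switch when the plan is actually bad for me (utility below zero). None of these names or numbers come from the book.

```python
def expected(belief, f=lambda u: u):
    """Expected value of f(utility) under a list of (utility, probability) pairs."""
    return sum(p * f(u) for u, p in belief)


def condition_on_shutdown_attempt(belief):
    """Update on seeing the human reach for the off switch.

    Assumption: the human only does this when the plan's true utility is negative,
    so the robot keeps only the negative outcomes and renormalizes.
    """
    kept = [(u, p) for u, p in belief if u < 0]
    total = sum(p for _, p in kept)
    if total == 0:
        return belief  # the robot thought objection was impossible; leave belief as-is
    return [(u, p / total) for u, p in kept]


# Illustrative belief: 30% chance the plan is terrible, 70% chance it is mildly good.
belief = [(-10.0, 0.3), (2.0, 0.7)]

OFF_VALUE = 0.0                                           # value of being switched off
act_anyway = expected(belief)                             # ignore the human entirely
defer = expected(belief, lambda u: max(u, OFF_VALUE))     # human vetoes the bad cases

after_lunge = condition_on_shutdown_attempt(belief)
keep_going = expected(after_lunge)                        # continuing despite the lunge
```

Deferring to the human is never worse than either acting regardless or shutting down unilaterally, because the average of max(u, 0) is at least as large as both the average of u and zero. And once the lunge has been observed, continuing has negative expected value, so accepting shutdown wins. The whole thing depends on the robot being uncertain and on the human's objection being informative; take away either and the incentive weakens.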

This also solves the wireheading problem. Suppose you have a reinforcement learner whose reward is you saying “Thank you, you successfully completed that task”. A sufficiently weak robot may have no better way of getting reward than actually performing the task for you; a stronger one will threaten you at gunpoint until you say that sentence a million times, which will provide it with much more reward much faster than taking out your trash or whatever. Russell’s shift in priorities ensures that won’t work. You can still reinforce the robot by saying “Thank you” – that will give it evidence that it succeeded at its real goal of fulfilling your mysterious preference – but the words are only a signpost to the deeper reality; making you say “thank you” again and again will no longer count as success.

All of this sounds almost trivial written out like this, but number one, everything is trivial after someone thinks about it, and number two, there turns out to be a lot of controversial math involved in making it work out (all of which I skipped over). There are also some big remaining implementation hurdles. For example, the section above describes a Bayesian process – start with a prior on what the human wants, then update. But how do you generate the prior? How complicated do you want to make things? Russell walks us through an example where a robot gets great information that a human values paperclips at 80 cents – but the real preference was valuing them at 80 cents on weekends and 12 cents on weekdays. If the robot didn’t consider that a possibility, it would never be able to get there by updating. But if it did consider every single possibility, it would never be able to learn anything beyond “this particular human values paperclips at 80 cents at 12:08 AM on January 14th when she’s standing in her bedroom.” Russell says that there is “no working example” of AIs that can solve this kind of problem, but “the general idea is encompassed within current thinking about machine learning”, which sounds half-meaningless and half-reassuring.
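The "it would never be able to get there by updating" half of this is easy to see in a toy Bayesian learner. The sketch below is mine and rests on invented assumptions: three hand-written hypotheses about paperclip prices, a made-up 5% observation noise rate, and twenty alternating weekend/weekday observations generated from the true weekend-dependent preference. The only difference between the two runs is whether the prior gives the true hypothesis any probability at all.

```python
# Each hypothesis maps "is it a weekend?" to a predicted price in cents.
HYPOTHESES = {
    "always_80": lambda weekend: 80,
    "always_12": lambda weekend: 12,
    "80_weekend_12_weekday": lambda weekend: 80 if weekend else 12,
}
NOISE = 0.05  # assumed chance that an observation disagrees with a hypothesis


def update(belief, weekend, observed_cents):
    """One Bayesian update on a single (day type, observed price) pair."""
    unnorm = {}
    for name, p in belief.items():
        predicted = HYPOTHESES[name](weekend)
        likelihood = 1 - NOISE if predicted == observed_cents else NOISE
        unnorm[name] = p * likelihood
    total = sum(unnorm.values())
    return {name: v / total for name, v in unnorm.items()}


def learn(prior, observations):
    belief = dict(prior)
    for weekend, cents in observations:
        belief = update(belief, weekend, cents)
    return belief


# Data from the true preference: 80 cents on weekends, 12 cents on weekdays.
observations = [(True, 80), (False, 12)] * 10

# The robot that never considered the weekend/weekday possibility.
narrow_prior = {"always_80": 0.5, "always_12": 0.5, "80_weekend_12_weekday": 0.0}
# The robot that gave it a modest prior.
open_prior = {"always_80": 0.45, "always_12": 0.45, "80_weekend_12_weekday": 0.10}

narrow = learn(narrow_prior, observations)
open_minded = learn(open_prior, observations)
```

The narrow robot ends up exactly where it started, split evenly between two wrong answers, because zero prior times any likelihood is still zero. The open-minded robot converges on the right answer almost immediately. What the sketch does not show is the other horn of the dilemma: with three hypotheses it is cheap to be open-minded, and with every conceivable hypothesis in the prior, no finite amount of data narrows things down.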

People with a more technical bent than I have might want to look into some deeper criticisms of CIRL, including Eliezer Yudkowsky’s article here and some discussion in the AI Alignment Newsletter.

IV.

I want to end by discussing what was probably supposed to be an irrelevant middle chapter of the book, Misuses of AI.

Russell writes:

A compassionate and jubilant use of humanity’s cosmic endowment sounds wonderful, but we also have to reckon with the rapid rate of innovation in the malfeasance sector. Ill-intentioned people are thinking up new ways to misuse AI so quickly that this chapter is likely to be outdated even before it attains printed form. Think of it not as depressing reading, however, but as a call to act before it is too late.

…and then we get a tour of all the ways AIs are going wrong today: surveillance, drones, deepfakes, algorithmic bias, job loss to automation, social media algorithms, etc.

Some of these are pretty worrying. But not all of them.

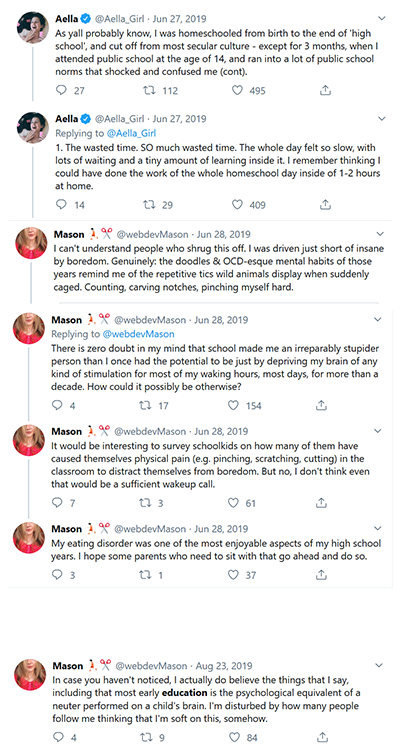

Google “deepfakes” and you will find a host of articles claiming that we are about to lose the very concept of truth itself. Brookings calls deepfakes “a threat to truth in politics” and comes up with a scenario where deepfakes “could trigger a nuclear war.” The Guardian asks “You Thought Fake News Was Bad? Deepfakes Are Where Truth Goes To Die”. And these aren’t even the alarmist ones! The Irish Times calls it an “information apocalypse” and literally titles their article “Be Afraid”; Good Times just writes “Welcome To Deepfake Hell”. Meanwhile, deepfakes have been available for a couple of years now, with no consequences worse than a few teenagers using them to make pornography, ie the expected outcome of every technology ever. Also, it’s hard to see why forging videos should be so much worse than forging images through Photoshop, forging documents through whatever document-forgers do, or forging text through lying. Brookings explains that deepfakes might cause nuclear war because someone might forge a video of the President ordering a nuclear strike and then commanders might believe it. But it’s unclear why this is so much more plausible than someone writing a memo saying “Please launch a nuclear strike, sincerely, the President” and commanders believing that. Other papers have highlighted the danger of creating a fake sex tape with a politician in order to discredit them, but you can already convincingly Photoshop an explicit photo of your least favorite politician, and everyone will just laugh at you.

Algorithmic bias has also been getting colossal unstoppable neverending near-infinite unbelievable amounts of press lately, but the most popular examples basically boil down to “it’s impossible to satisfy several conflicting definitions of ‘unbiased’ simultaneously, and algorithms do not do this impossible thing”. Humans also do not do the impossible thing. Occasionally someone is able to dig up an example which actually seems slightly worrying, but I have never seen anyone prove (or even seriously argue) that algorithms are in general more biased than humans (see also Principles For The Application Of Human Intelligence – no, seriously, see it). Overall I am not sure this deserves all the attention it gets any time someone brings up AI, tech, science, matter, energy, space, time, or the universe.

Or: with all the discussion about how social media algorithms are radicalizing the youth, it was refreshing to read a study investigating whether this was actually true, which found that social media use did not increase support for right-wing populism, and online media use (including social media use) and right-wing populism actually seem to be negatively correlated (remember, correlational studies are always bad). Recent studies of YouTube’s algorithms find they do not naturally tend to radicalize, and may deradicalize, viewers, although I’ve heard some people say this is only true of the current algorithm and the old ones (which were not included in these studies) were much worse.

Or: is automation destroying jobs? Although it seems like it should, the evidence continues to suggest that it isn’t. There are various theories for why this should be, most of which suggest it may not destroy jobs in the near future either. See my review of technological unemployment for details.

A careful reading reveals Russell appreciates most of these objections. A less careful reading does not reveal this. The general structure is “HERE IS A TERRIFYING WAY THAT AI COULD BE KILLING YOU AND YOUR FAMILY although studies do show that this is probably not literally happening in exactly this way AND YOUR LEADERS ARE POWERLESS TO STOP IT!”

I understand the impulse. This book ends up doing an amazing job of talking about AI safety without sounding weird. And part of how it accomplishes this is building on a foundation of “AI is causing problems now”. The media has already prepared the way; all Russell has to do is vaguely gesture at deepfakes and algorithmic radicalization, and everyone says “Oh yeah, that stuff!” and realizes that they already believe AI is dangerous and needs aligning. And then you can add “and future AI will be the same way but even more”, and you’re home free.

But the whole thing makes me nervous. Lots of right-wingers say “climatologists used to worry about global cooling, why should we believe them now about global warming?” They’re wrong – global cooling was never really a big thing. But in 2040, might the same people say “AI scientists used to worry about deepfakes, why should we believe them now about the Singularity?” And might they actually have a point this time? If we get a reputation as the people who fall for every panic about AI, including the ones that in retrospect turn out to be kind of silly, will we eventually cry wolf one too many times and lose our credibility before crunch time?

I think the actual answer to this question is “Haha, as if our society actually punished people for being wrong”. The next US presidential election is all set to be Socialists vs. Right-Wing Authoritarians – and I’m still saying with a straight face that the public notices when movements were wrong before and lowers their status? Have the people who said there were WMDs in Iraq lost status? The people who said sanctions on Iraq were killing thousands of children? The people who said Trump was definitely for sure colluding with Russia? The people who said global warming wasn’t real? The people who pushed growth mindset as a panacea for twenty years?

So probably this is a brilliant rhetorical strategy with no downsides. But it still gives me a visceral “ick” reaction to associate with something that might not be accurate.

And there’s a sense in which this is all obviously ridiculous. The people who think superintelligent robots will destroy humanity – these people should worry about associating with the people who believe fake videos might fool people on YouTube, because the latter group is going beyond what the evidence will support? Really? But yes. Really. It’s more likely that catastrophic runaway global warming will boil the world a hundred years from now than that it will reach 75 degrees in San Francisco tomorrow (predicted high: 59); extreme scenarios about the far future are more defensible than even weak claims about the present that are ruled out by the evidence.

There’s been some discussion in effective altruism recently about public relations. The movement has many convincing hooks (you can save a life for $3000, donating bednets is very effective, think about how you would save a drowning child) and many things its leading intellectuals are actually thinking about (how to stop existential risks, how to make people change careers, how to promote plant-based meat), and the Venn diagram between the hooks and the real topics has only partial overlap. What to do about this? It’s a hard question, and I have no strong opinion besides a deep respect for everyone on both sides of it and appreciation for the work they do trying to balance different considerations in creating a better world.

HC’s relevance to this debate is as an extraordinary example. If you try to optimize for being good at public relations and convincingness, you can be really, really good at public relations and convincingness, even when you’re trying to explain a really difficult idea to a potentially hostile audience. You can do it while still being more accurate, page for page, than a New York Times article on the same topic. There are no obvious disadvantages to doing this. It still makes me nervous.

V.

My reaction to this book is probably weird. I got interested in AI safety by hanging out with transhumanists and neophiles who like to come up with the most extreme scenario possible, and then back down when maybe it isn’t true. Russell got interested in AI safety by hanging out with sober researchers who like to be as boring and conservative as possible, and then accept new ideas once the evidence for them proves overwhelming. At some point one hopes we meet in the middle. We’re almost there.

But maybe we’re not quite there yet. My reaction to this book has been “what an amazing talent Russell must have to build all of this up from normality”. But maybe it’s not talent. Maybe Russell is just recounting his own intellectual journey. Maybe this is what a straightforward examination of AI risk looks like if you have fewer crazy people in your intellectual pedigree than I do.

I recommend this book both for the general public and for SSC readers. The general public will learn what AI safety is. SSC readers will learn what AI safety sounds like when it’s someone other than me talking about it. Both lessons are valuable.