I.

Evolutionary psychology is famous for having lots of stories that make sense but are hard to test. Psychiatry is famous for having mountains of experimental data but no idea what’s going on. Maybe if you added them together, they might make one healthy scientific field? Enter Evolutionary Psychopathology: A Unified Approach by psychology professor Marco del Giudice. It starts by presenting the theory of “life history strategies”. Then it uses the theory – along with a toolbox of evolutionary and genetic ideas – to shed new light on psychiatric conditions.

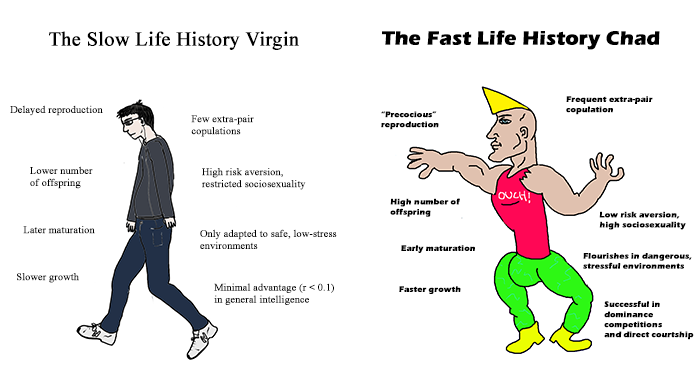

Some organisms have lots of low-effort offspring. Others have a few high-effort offspring. This was the basis of the old r/K selection theory. Although the details of that theory have come under challenge, the basic insight remains. A fish will lay 10,000 eggs, then go off and do something else. 9,990 will get eaten by sharks, but that still leaves enough for there to be plenty of fish in the sea. But an elephant will spend two years pregnant, three years nursing, and ten years doing at least some level of parenting, all to produce a single big, well-socialized, and high-prospect-of-life-success calf. These are two different ways of doing reproduction. In keeping with the usual evolutionary practice, del Giudice calls the fish strategy “fast” and the elephant strategy “slow”.

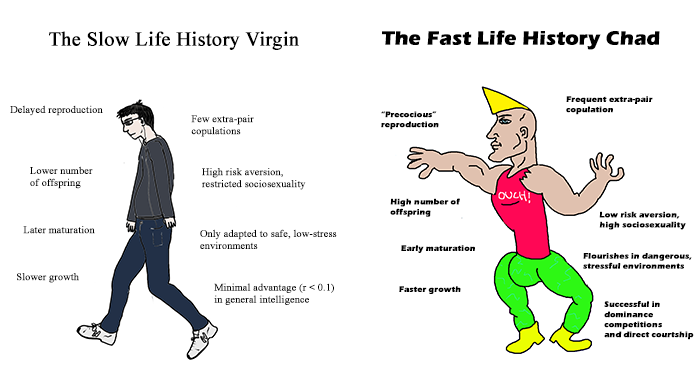

To oversimplify: fast strategies (think “live fast, die young”) are well-adapted for unpredictable dangerous environments. Each organism has a pretty good chance of randomly dying in some unavoidable way before adulthood; the species survives by sheer numbers. Fast organisms should grow up as quickly as possible in order to maximize the chance of reaching reproductive age before they unpredictably die. They should mate with anybody around, to maximize the chance of mating before they unpredictably die. They should ignore their offspring, since they expect most offspring to unpredictably die, and since they have too many to take care of anyway. They should be willing to take risks, since the downside (death without reproducing) is already their default expectation, and the upside (becoming one of the few individuals to give birth to the 10,000 offspring of the next generation) is high.

Slow strategies are well-adapted for safer environments, or predictable complex environments whose intricacies can be mastered with enough time and effort. Slow strategy animals may take a long time to grow up, since they need to achieve mastery before leaving their parents. They might be very picky maters, since they have all the time in the world to choose, will only have a few children each, and need to make sure each of those children has the best genes possible. They should work hard to raise their offspring, since each individual child represents a substantial part of the prospects of their genetic line. They should avoid risks, since the downside (death without reproducing) would be catastrophically worse than default, and the upside (giving birth to a few offspring of the next generation) is what they should expect anyway.

Del Giudice asks: what if life history strategies differ not just across species, but across individuals of the same species? What if this theory applied within the human population?

In line with animal research on pace-of-life syndromes, human research has shown that impulsivity, risk-taking, and sensation seeking, are systematically associated with fast life history traits such as early intercourse, early childbearing in females, unrestricted sociosexuality, larger numbers of sexual partners, reduced long-term mating orientation, and increased mortality. Future discounting and heightened mating competition reduce the benefits of reciprocal long-term relationships; in motivational terms, affiliation and reciprocity are downregulated, whereas status seeking and aggression are upregulated. The resulting behavioral pattern is marked by exploitative and socially antagonistic tendencies; these tendencies may be expressed in different forms in males and females, for example through physical versus relational aggression (Belsky et al 1991; Borowsky et al 2009; Brezina et al 2009; Chen & Vazsonyi 2011; Copping et al 2013a, 2013b, 2014a; Curry et al 2008; Dunkel & Decker 2010 […]

And:

Disgust sensitivity is another dimension of individual differences with links to the fast-slow continuum. To begin, high disgust sensitivity is broadly associated with measures of risk aversion. Moral and sexual disgust correlate with higher agreeableness, conscientiousness, and honesty-humility; and sexual disgust specifically predicts restricted sociosexuality (Al-Shawaf et al 2015; Sparks et al 2018; Tybur et al 2009, 2015; Tybur & de Vries 2013). These findings suggest that the disgust system is implicated in the regulation of life-history-related behaviors. In particular, sexual and moral disgust show the most consistent pattern of correlations with other indicators of slow strategies.

Romantic attachment styles have wide ranging influences on sexuality, mating, and couple stability, but their relations with life history strategies are somewhat complex. Secure attachment styles are consistently associated with slow life history traits (eg Chisholm 1999b; Chisholm et al 2005; Del Giudice 2009a). Avoidance predicts unrestricted sociosexuality, reduced long-term orientation, and low commitment to partners (Brennan & Shaver 1995; Jackson & Kirkpatrick 2007; Templehof & Allen 2008). Given the central role of pair bonding in long-term parental investment, avoidant attachment – which, on average, is higher in men – can be generally interpreted as a mediator of reduced parenting effort. However, some inconsistent findings indicate that avoidance may capture multiple functional mechanisms. High levels of romantic avoidance are found both in people with very early sexual debut and in those who delay intercourse (Gentzler & Kearns, 2004); this suggests that, at least for some people, avoidant attachment may actually reflect a partial downregulation of the mating system, consistent with slower life history strategies.

And:

At a higher level of abstraction, the behavioral correlates of life history strategies can be framed within the five-factor model of personality. Among the Big Five, agreeableness and conscientiousness show the most consistent pattern of associations with slow traits such as restricted sociosexuality, long-term mating orientation, couple stability, secure attachment to parents in infancy and romantic partners in adulthood, reduced sex drive, low impulsivity, and risk aversion across domains (eg Baams et al 2004; Banai & Pavela 2015; Bourdage et al 2007; DeYoung 2001; Holtzman & Strube 2013; Jonason et al 2013 […] Some researchers working in a life history perspective have argued that the general factor of personality should be regarded as the core personality correlate of slow strategies.

Del Giudice suggests that these traits, and predisposition to fast vs. slow life history in general, are caused by a gene * environment interaction. The genetic predisposition is straightforward enough. The environmental aspect is more interesting.

There has been some research on the thrifty phenotype hypothesis: if you’re undernourished while in the womb, you’ll be at higher risk of obesity later on. Some mumble “epigenetic” mumble “mechanism” looks around, says “We seem to be in a low-food environment, better design the brain and body to gorge on food when it’s available and store lots of it as fat”, then somehow modulates the relevant genes to make it happen.

Del Giudice seems to imply that a similar epigenetic mechanism “looks around” at the world during the first few years of life to try to figure out if you’re living in the sort of unpredictable dangerous environment that needs a fast strategy, or the sort of safe, masterable environment that needs a slow strategy. Depending on your genetic predisposition and the observable features of the environment, this mechanism “makes a decision” to “lock” you into a faster or slower strategy, setting your personality traits more toward one side or the other.

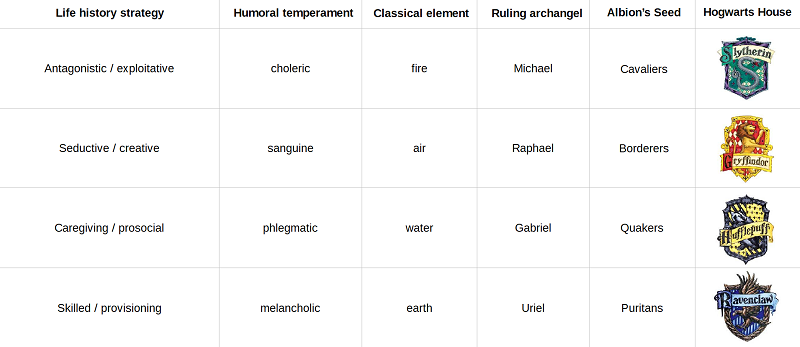

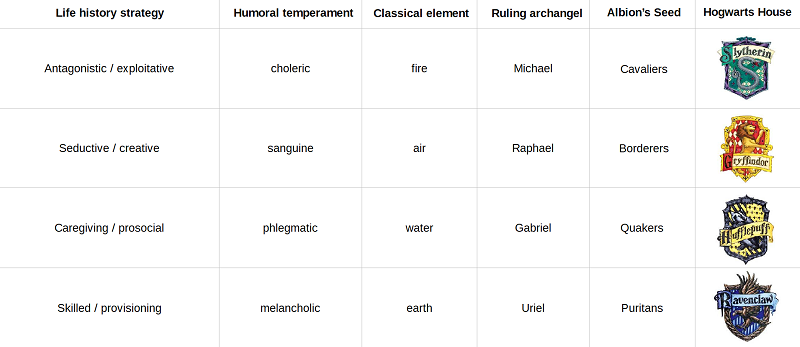

He further subdivides fast vs. slow life history into four different “life history strategies”.

The antagonistic/exploitative strategy is a fast strategy that focuses on getting ahead by defecting against other people. Because it expects a short and noisy life without the kind of predictable iterated games that build reciprocity, it throws all this away and focuses on getting ahead quick. A person who has been optimized for an antagonistic/exploitative strategy will be charming, good at some superficial social tasks, and have no sense of ethics – ie the perfect con man. Antagonistic/exploitative people will have opportunities to reproduce through outright rape, through promising partners commitment and then not providing it, through status in criminal communities, or through things in the general category of hiring prostitutes when both parties are too drunk to use birth control. These people do not have to be criminals; they can also be the most cutthroat businessmen, lawyers, and politicians. Jumping ahead to the psychiatry connection, the extreme version of this strategy is probably antisocial personality disorder.

The creative/seductive strategy is a fast strategy that focuses on getting ahead through sexual selection, ie optimizing for being really sexy. Because it expects a short and noisy life, it focuses on raw sex appeal (which peaks in the late teens and early twenties) as opposed to things like social status or ability to care for children (which peak later in maturity). A person who has been optimized for a creative/seductive strategy will be attractive, artistic, flirtatious, and popular – eg the typical rock star or starlet. They will also have traits that support these skills, which for complicated reasons include being very emotional. Creative/seductive people will have opportunities to reproduce through making other people infatuated with them; if they are lucky, they can seduce a high-status high-resource person who can help take care of the children. The most extreme version of this strategy is probably borderline personality disorder.

The prosocial/caregiving strategy is a slow strategy that focuses on being a responsible pillar of the community who everybody likes. Because it expects a slow and stable life, it focuses on lasting relationships and cultivating a good reputation that will serve it well in iterated games. A person who has been optimized for a prosocial/caregiving strategy will be dependable, friendly, honest, and conformist – eg the typical model citizen. Prosocial/caregiving people will have opportunities to reproduce by marrying their high school sweetheart, living in a suburban tract house, and having 2.4 children who go to state college. The most extreme version of this strategy is probably being a normie.

The skilled/provisioning strategy is a slow strategy that focuses on being good at specific useful tasks. Because it expects a slow and stable life, it focuses on gaining abilities that may take years to bear any fruit. A person who is optimized for a skilled/provisioning strategy will be intelligent, good at learning, and a little bit obsessive. They may not invest as much in friendliness or seductiveness; once they succeed at their chosen path, they will get social status through being indispensable for the continued functioning of the community, and they will have opportunities to reproduce because of their high status and obvious ability to provide for the children. The most extreme version of this strategy is probably high-functioning autism.

This division into life strategies is a seductive idea. I mean, literally, it’s a seductive idea, ie in terms of memetic evolution, we may worry it is optimized for a creative/seductive strategy for reproduction, rather than the boring autistic “is actually true” strategy. The following is not a figure from Del Giudice’s book, but maybe it should be:

There’s a lot of debate these days about how we should treat research that fits our existing beliefs too closely. I remember Richard Dawkins (or maybe some other atheist) once argued we should be suspicious of religion because it was too normal. When you really look at the world, you get all kinds of crazy stuff like quantum physics and time dilation, but when you just pretend to look at the world, you get things like a loving Father, good vs. evil, and ritual purification – very human things, things a schoolchild could understand. Atheists and believers have since had many debates over whether religion is too ordinary or sufficiently strange, but I haven’t heard either side deny the fundamental insight that science should do something other than flatter our existing categories for making sense of the world.

On the other hand, the first thermometer no doubt recorded that it was colder in winter than in summer. And if someone had criticized physicists, saying “You claim to have a new ‘objective’ way of looking at temperature, but really all you’re doing is justifying your old prejudices that the year is divided into nice clear human-observable parts, and summer is hot and winter is cold” – then that person would be a moron.

This kind of thing keeps coming up, from Klein vs. Harris on the science of race to Jussim on stereotype accuracy. I certainly can’t resolve it here, so I want to just acknowledge the difficulty and move on. If it helps, I don’t think Del Giudice wants to argue these are objectively the only four possible life strategies and that they are perfect Platonic categories, just that these are a good way to think of some of the different ways that organisms (including humans) can pursue their goal of reproduction.

II.

Psychiatry is hard to analyze from an evolutionary perspective. From an evolutionary perspective, it shouldn’t even exist. Most psychiatric disorders are at least somewhat genetic, and most psychiatric disorders decrease reproductive fitness. Biologists have equations that can calculate how likely it is that maladaptive genes can stay in the population for certain amounts of time, and these equations say, all else being equal, that psychiatric disorders should not be possible. Apparently all else isn’t equal, but people have had a lot of trouble figuring out exactly what that means. A good example of this kind of thing is Greg Cochran’s theory that homosexuality must be caused by some kind of infection; he does not see another way it could remain a human behavior without being selected into oblivion.
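
To make the "selected into oblivion" worry concrete, here is a minimal sketch of the simplest textbook version of such an equation: one generation of selection against a single harmful variant in a haploid model. This is an illustration only, not a model from the book. The fitness cost `s`, the starting frequency, and the function names are all hypothetical, and the equations biologists actually use also account for mutation, dominance, and drift.

```python
def next_frequency(q, s):
    """Frequency of a harmful variant after one generation of selection.

    q: current frequency of the variant (between 0 and 1)
    s: fitness cost, i.e. carriers leave (1 - s) times as many offspring
    Assumes a haploid population with no new mutation and no drift.
    """
    return q * (1 - s) / (1 - s * q)


def generations_until(q0, s, threshold):
    """Count generations until the variant's frequency falls to the threshold."""
    q, generations = q0, 0
    while q > threshold:
        q = next_frequency(q, s)
        generations += 1
    return generations


# Hypothetical numbers: a variant carried by 1% of people with a 10% fitness cost.
print(generations_until(q0=0.01, s=0.1, threshold=0.0001))
```

Under these assumptions even a modest fitness cost shrinks the variant's frequency every generation, which is why the persistence of heritable, fitness-reducing disorders needs some further explanation.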

Del Giudice does the best he can within this framework. He tries to sort psychiatric conditions into a few categories based on possible evolutionary mechanisms.

First, there are conditions that are plausibly good evolutionary strategies, and people just don’t like them. For example, nymphomania is unfortunate from a personal and societal perspective, but one can imagine the evolutionary logic checks out.

Second, there are conditions which might be adaptive in some situations, but don’t work now. For example, antisocial traits might be well-suited to environments with minimal law enforcement and poor reputational mechanisms for keeping people in check; now they will just land you in jail.

Third, there are conditions which are extreme levels of traits which it’s good to have a little of. For example, a little anxiety is certainly useful to prevent people from poking lions with sticks just to see what will happen. Imagine (as a really silly toy model) that two genes A and B determine anxiety, and the optimal anxiety level is 10. Alice has gene A = 8 and gene B = 2. Bob has gene A = 2 and gene B = 8. Both of them are happy well-adjusted individuals who engage in the locally optimal level of lion-poking. But if they reproduce, their child may inherit gene A = 8 and gene B = 8 for a total of 16, much more anxious than is optimal. This child might get diagnosed with an anxiety disorder, but it’s just a natural consequence of having genes for various levels of anxiety floating around in the population.
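
The toy model above is small enough to enumerate. The sketch below assumes, purely for illustration, that the child takes its copy of gene A from one parent and its copy of gene B from one parent, each choice independent and equally likely; real inheritance is messier, and the function name is made up for this example.

```python
from collections import Counter
from itertools import product

OPTIMAL = 10  # optimal anxiety level in the toy model

alice = {"A": 8, "B": 2}
bob = {"A": 2, "B": 8}


def child_totals(parent1, parent2):
    """Count the anxiety totals over every way a child can inherit A and B.

    Assumption: gene A comes from one parent and gene B from one parent,
    with all four combinations equally likely.
    """
    totals = Counter()
    for a_source, b_source in product((parent1, parent2), repeat=2):
        totals[a_source["A"] + b_source["B"]] += 1
    return totals


totals = child_totals(alice, bob)
for total, count in sorted(totals.items()):
    share = count / sum(totals.values())
    print(f"anxiety {total:>2}: {share:.0%} of children (optimum is {OPTIMAL})")
```

Under these assumptions half the children land on the optimum of 10, a quarter end up at 16 (too anxious), and a quarter end up at 4 (enthusiastic lion-pokers), even though both parents are exactly at the optimum.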

Fourth, there are conditions which are the failure modes of traits which it’s good to have a little of. For example, psychiatrists have long categorized certain common traits into “schizotypy”, a cluster of characteristics more common in the relatives of schizophrenics and in people at risk of developing schizophrenia themselves. These traits are not psychotic in and of themselves and do not decrease fitness, nor is schizophrenia necessarily just the far end of this distribution. But schizotypal traits are one necessary ingredient of getting schizophrenia; schizophrenia is some kind of failure mode only possible with enough schizotypy. If schizotypal traits do some other good thing, they can stick around in the population, and this will look a lot like “schizophrenia is genetic”.

How can we determine which of these categories any given psychiatric disorder falls into?

One way is through what is called taxometrics – the study of to what degree mental disorders are just the far end of a normal distribution of traits. Some disorders are clearly this way; for example, if you quantify and graph everybody’s anxiety levels, they will form a bell curve, and the people diagnosed with anxiety disorders will just be the ones on the far right tail. Are any disorders not this way? This is a hard question, though schizophrenia is a promising candidate.

Another way is through measuring the correlation of disorders with mutational load. Some people end up with more mutations (and so a generically less functional genome) than others. The most common cause of this is being the child of an older father, since that gives mutations more time to accumulate in sperm cells. Other people seem to have higher mutational load for other, unclear reasons, which can be measured through facial asymmetry and the presence of minor physical abnormalities (like weirdly-shaped ears). If a particular psychiatric disorder is more common in people with increased mutational load, that suggests it isn’t just a functional adaptation but some kind of failure mode of something or other. Schizophrenia and low-functioning autism are both linked to higher mutational load.

Another way is by trying to figure out what aspect of evolutionary strategy matches the occurrence of the disorder. Developmental psychologists talk about various life stages, each of which brings new challenges. For example, adrenarche (age 6-8) marks “the transition from early to middle childhood”, when “behavioral plasticity and heightened social learning go hand in hand with the expression of new genetic influences on psychological traits such as aggression, prosocial behavior, and cognitive skills” and children receive social feedback “about their attractiveness and competitive ability”. More obviously, puberty marks the expression of still other genetic influences and the time at which young people start seriously thinking about sex. So if various evolutionary adaptations to deal with mating suddenly become active around puberty, and some mental disorder always starts at puberty, that provides some evidence that the mental disorder might be related to an evolutionary adaptation for dealing with mating. Or, since a staple of evo psych is that men and women pursue different reproductive strategies, if some psychiatric disease is twice as common in women (eg depression) or five times as common in men (eg autism), then that suggests it’s correlated with some strategy or trait that one sex uses more than the other.

This is where Del Giudice ties in the life history framework. If some psychiatric disease is more common in people who otherwise seem to be pursuing some life strategy, then maybe it’s related to that strategy. Either it’s another name for that strategy, or it’s another name for an extreme version of that strategy, or it’s a failure mode of that strategy, or it’s associated with some trait or adaptation which that strategy uses more than others do. By determining the association of disorders with certain life strategies, we can figure out what adaptive trait they’re piggybacking on, and from there we can reverse engineer them and try to figure out what went wrong.

This is a much more well-thought-out and orderly way of thinking about psychiatric disease than anything I’ve ever seen anyone else try. How does it work?

Unclear. Psychiatric disorders really resist being put into this framework. For example, some psychiatric disorders have a u-shaped curve regarding childhood quality – they are more common both in people with unusually deprived childhoods and people with unusually good childhoods. Many anorexics are remarkably high-functioning, so much so that even the average clinical psychiatrist takes note, but others are kind of a mess. Autism is classically associated with very low IQ and with bodily asymmetries that indicate high mutational load, but a lot of autistics have higher-than-normal IQ and minimal bodily asymmetry. Schizophrenia often starts in a very specific window between ages 18 and 25, which sounds promising for a developmental link, but a few cases will start at age 5, or age 50, or pretty much whenever. Everything is like this. What is a rational, order-loving evolutionary psychologist supposed to do?

Del Giudice bites the bullet and says that most of our diagnostic categories conflate different conditions. The unusually high-functioning anorexics have a different disease than the unusually low-functioning ones. Low IQ autism with bodily asymmetries has a different evolutionary explanation than high IQ autism without. In some cases, he is able to marshal a lot of evidence for distinct clinical entities. For example, most cases of OCD start in adulthood, but one-third begin in early childhood instead. These early OCD cases are much more likely to be male, more likely to have high conscientiousness, more likely to co-occur with autistic traits, and have a different set of obsessions focusing on symmetry, order, and religion (my own OCD started in very early childhood and I feel called out by this description). Del Giudice says these are two different conditions, one of which is associated with pathogen defense and one of which is associated with a slow life strategy.

Deep down, psychiatrists know that we have not really subdivided the space of mental disorders very well. Every year a new study comes out purporting to have discovered the three types of depression, or the four types of depression, or the five types of depression, or some other number of types of depression that some scientist thinks she has discovered. Often these are given explanatory power, like “number three is the one that doesn’t respond to SSRIs”, or “1 and 2 are biological; 3, 4, and 5 are caused by life events”. All of these seem equally plausible, so given that they all say different things I tend to ignore all of them. So when del Giudice puts depression under his spotlight and finds it subdivides into many different subdisorders, this is entirely fair. Maybe we should be concerned if he didn’t find that.

But part of me is still concerned. If evo psych correctly predicted the characteristics of the psychiatric disorders we observe, then we would count that as theoretical confirmation. Instead, it only works after you replace the psychiatric disorders we observe with another, more subtle set right on the threshold of observation. The more you’re allowed to diverge from our usual understanding, the more chance you have to fudge your results; the more different disorders you can divide things into, the more options you have for overfitting. Del Giudice’s new schema may well be accurate; it just makes it hard to check his accuracy.

On the other hand, reality has a surprising amount of detail. Every previous attempt to make sense of psychopathology has failed. But psychopathology has to make sense. So it must make sense in some complicated way. If you see what looks like a totally random squiggle on a piece of paper, then probably the equation that describes it really is going to have a lot of variables, and you shouldn’t criticize a many-variable equation as “overfitting”. There is a part of me that thinks this book is a beautiful example of what solving a complicated field would look like. You take all the complications, and you explain them by layering a bunch of different simple and reasonable things on top of one another. The psychiatry parts of Evolutionary Psychopathology: A Unified Approach do this. I don’t know if it’s all just epicycles, but it’s a heck of a good try.

I would encourage anyone with an interest in mental health and a tolerance for dense journal-style writing to read the psychiatry parts of this book. Whether or not the hypotheses are right, in the process of defending them it calls in such a wide array of evidence, from so many weird studies that nobody else would have any reason to think about, that it serves as a fantastic survey of the field from an unusual perspective. If you’ve ever wanted to know how many depressed people are reproducing (surprisingly many! about 90 – 100% as many as non-depressed people!) or what the IQ of ADHD people is (0.6 standard deviations below average; the people most of you see are probably from a high-functioning subtype) or how schizophrenia varies with latitude (triples as you move from the equator to the poles, but after adjusting for this darker-skinned people seem to have more, suggesting a possible connection with Vitamin D), this is the book for you.

III.

I want to discuss some political and social implications of this work. These are my speculations only; del Giudice is not to blame.

We believe that an abusive or deprived childhood can negatively affect people’s life chances. So far, we’ve cashed this out entirely in terms of brain damage. Children’s developing brains “can’t deal with the trauma” and so become “broken” in ways that make them a less functional adult. Life history theory offers a different explanation. Nothing is “broken”. Deprived children have just looked around, seen what the world is like, and rewired themselves accordingly on some deep epigenetic level.

I was reading this at the same time as the studies on preschool, and I couldn’t help noticing how well they fit together. The preschool studies were surprising because we expected them to improve children’s intelligence. Instead, they improved everything else. Why? This would make sense if the safe environment of preschool wasn’t “fixing” their “broken” brains, but pushing them to follow a slower life strategy. Stay in school. Don’t commit crimes. Don’t have kids while you’re still a teenager. This is exactly what we expect a push towards slow life strategies to do.

Life strategies even predict the “fade-out/fade-in” nature of the effects; the theory specifies that although aspects of life strategy may be set early on, they only “activate” at the appropriate developmental period. From page 93: “The social feedback that children receive in this phase [middle childhood]…may feed into the regulation of puberty timing and shape behavioral strategies in adolescence.”

Society has done a lot to try to help disadvantaged children. A lot of research has been gloomy about the downstream effects; none of it raised anybody’s IQ, there are still lots of poor people around, income inequality continues to increase. But maybe we’re just looking in the wrong place.

On a related note: a lot of intelligent, responsible, basically decent young men complain of romantic failure. Although the media has tried hard to make this look like some kind of horrifying desire to rape everybody because they believe they are entitled to whatever and whoever they want, the basic complaint is more prosaic: “I try to be a nice guy who contributes to society and respects others; how come I’m a miserable 25-year-old virgin, whereas every bully and jerk and frat bro I know is able to get a semi-infinite supply of sex partners whom they seduce, abuse, and dump?” This complaint isn’t imaginary; studies have shown that criminals are more likely to have lost their virginity earlier, that boys with more aggressive and dishonest behaviors have earlier age of first sexual intercourse, and that women find men with dark triad traits more attractive. I used to work in a psychiatric hospital that served primarily adolescents with a history of violence or legal issues; most of them had had multiple sexual encounters by age fifteen; only half of MIT students in their late teens and early 20s have had sex at all.

Del Giudice’s work offers a framework by which to understand these statistics. Most MIT students are probably pursuing slow life strategies; most violent adolescents in psych hospitals are probably pursuing fast ones. Fast strategies activate a suite of traits designed for having sex earlier; slow life strategies activate a suite of traits designed for preventing early sex. There’s a certain logical leap here where you have to explain how, if an individual is trying very hard to have teenage sex, his mumble epigenetic mumble mechanism can somehow prevent this. But millions of very vocal people’s lived experiences argue that it can. The good news for these people is that they are adapted for a life strategy which in the past has consistently resulted in reproduction at some point. Maybe when they graduate with a prestigious MIT degree, they will get enough money and status to attract a high-quality slow-strategy mate, who can bear high-quality slow-strategy kids who produce many surviving grandchildren. I don’t know. This hasn’t happened to me yet. Maybe I should have gone to MIT.

Finally, the people who like to say that various things “serve as a justification for oppression” are going to have a field day with this one. Although del Giudice is too scientific to assign any moral weight to his life history strategies, it’s not that hard to import it.

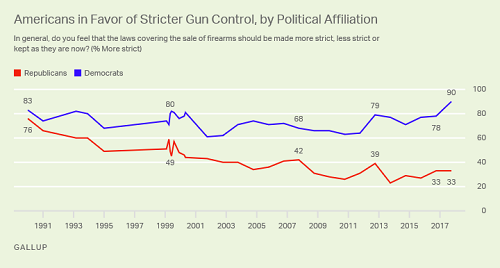

(source)

Life strategies run the risk of reifying some of our negative judgments. If criminals are pursuing a hard-coded antagonistic-exploitative strategy, that doesn’t look good for rehabilitation. Likewise, if some people are pursuing creative-seductive strategies, that provides new force to the warning to avoid promiscuous floozies and stick to your own social class. In the extreme version of this, you could imagine a populism that claims to be fighting for the decent middle-class slow-strategy segment of the population against an antagonistic/exploitative underclass. The creative/seductive people are on thin ice – maybe they should start producing art that looks like something.

(it doesn’t help that this theory is distantly related to an earlier theory proposed by Canadian psychologist John Rushton, who added that black people are racially predisposed to fast strategies and Asians to slow strategies, with white people somewhere in the middle. Del Giudice mentions Rushton just enough that nobody can accuse him of deliberately covering up his existence, then hastily moves on.)

But aside from the psychological compellingness, this doesn’t make a lot of sense. We already know that antagonistic and exploitative people exist in the world. All that life history theory does is exactly what progressives want to do: provide an explanation that links these qualities to childhood deprivation, or to dangerous environments where they may be the only rational choice. Sure, you would have to handwave away the genetic aspect, but you’re going to have to be handwaving away some genetics to make this kind of thing work no matter what, and life history theory makes this easier rather than harder. It also provides some testable hypotheses about what aspects of childhood deprivation we might want to target, and what kind of effects we might expect such interventions to have.

Apart from all this, I find life history strategy theory sort of reassuring. Until now, atheists have been denied the comfort of knowing God has a plan for them. Sure, they could know that evolution had a plan for them, but that plan was just “watch dispassionately to see whether they live or die, then adjust gene frequencies in the next generation accordingly”. In life history strategy theory, evolution – or at least your mumble epigenetic mumble mechanism – actually has a plan for you. Now we can be evangelical atheists who have a personal relationship with evolution. It’s pretty neat.

And I come at this from the perspective of someone who has failed at many things despite trying very hard, and also succeeded at others without even trying. This has been a pretty formative experience for me, and it’s seductive to be able to think of all of it as part of a plan. Literally seductive, in the sense of memetic evolution. Like that Hogwarts chart.

Read this book at your own risk; its theories will start creeping into everything you think.