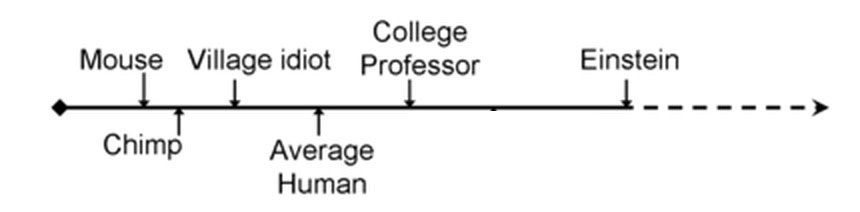

Eliezer Yudkowsky argues that forecasters err in expecting artificial intelligence progress to look like this:

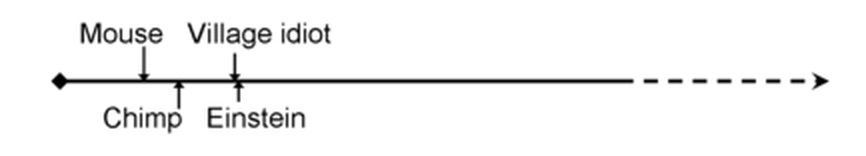

…when in fact it will probably look like this:

That is, we naturally think there’s a pretty big intellectual difference between mice and chimps, and a pretty big intellectual difference between normal people and Einstein, and implicitly treat these as about equal in degree. But in any objective terms we choose – amount of evolutionary work it took to generate the difference, number of neurons, measurable difference in brain structure, performance on various tasks, etc – the gap between mice and chimps is immense, and the difference between an average Joe and Einstein trivial in comparison. So we should be wary of timelines where AI reaches mouse level in 2020, chimp level in 2030, Joe-level in 2040, and Einstein level in 2050. If AI reaches the mouse level in 2020 and chimp level in 2030, for all we know it could reach Joe level on January 1st, 2040 and Einstein level on January 2nd of the same year. This would be pretty disorienting and (if the AI is poorly aligned) dangerous.

I found this argument really convincing when I first heard it, and I thought the data backed it up. For example, in my Superintelligence FAQ, I wrote:

In 1997, the best computer Go program in the world, Handtalk, won NT$250,000 for performing a previously impossible feat – beating an 11 year old child (with an 11-stone handicap penalizing the child and favoring the computer!) As late as September 2015, no computer had ever beaten any professional Go player in a fair game. Then in March 2016, a Go program beat 18-time world champion Lee Sedol 4-1 in a five game match. Go programs had gone from “dumber than heavily-handicapped children” to “smarter than any human in the world” in twenty years, and “from never won a professional game” to “overwhelming world champion” in six months.

But Katja Grace takes a broader perspective and finds the opposite. For example, she finds that chess programs improved gradually from “beating the worst human players” to “beating the best human players” over fifty years or so, ie the entire amount of time computers have existed:

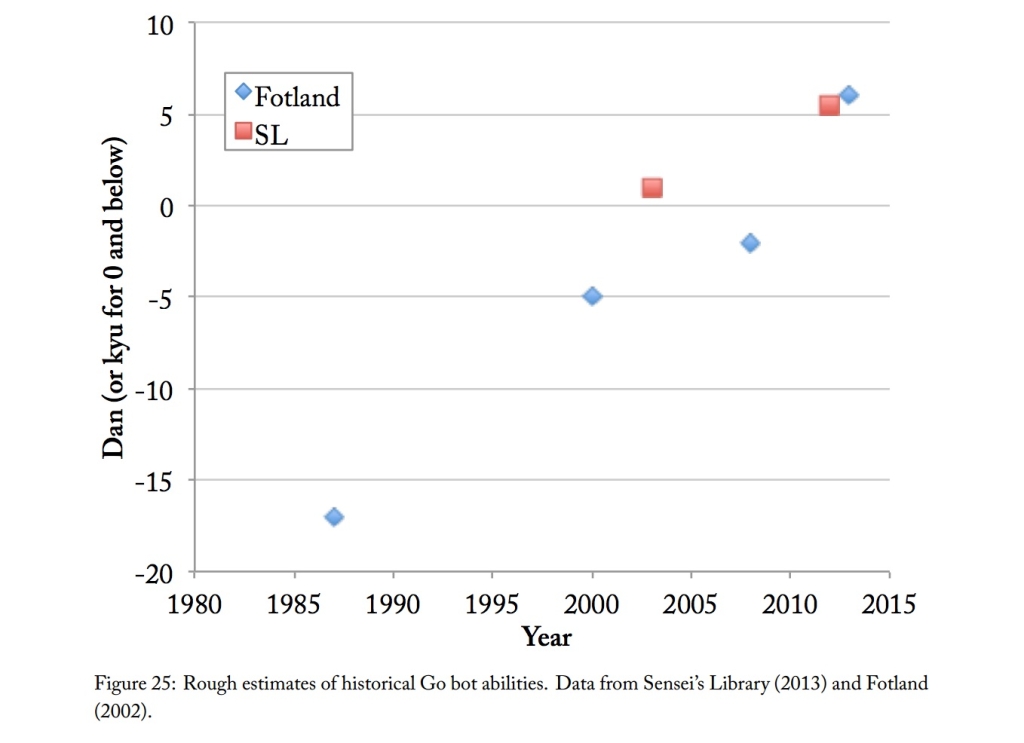

AlphaGo represented a pretty big leap in Go ability, but before that, Go engines improved pretty gradually too (see the original AI Impacts post for discussion of the Go ranking system on the vertical axis):

There’s a lot more on Katja’s page, overall very convincing. In field after field, computers have taken decades to go from the mediocre-human level to the genius-human level. So how can one reconcile the common-sense force of Eliezer’s argument with the empirical force of Katja’s contrary data?

Theory 1: Mutational Load

Katja has her own theory:

The brains of humans are nearly identical, by comparison to the brains of other animals or to other possible brains that could exist. This might suggest that the engineering effort required to move across the human range of intelligences is quite small, compared to the engineering effort required to move from very sub-human to human-level intelligence…However, we should not be surprised to find meaningful variation in the cognitive performance regardless of the difficulty of improving the human brain. This makes it difficult to infer much from the observed variations.

Why should we not be surprised? De novo deleterious mutations are introduced into the genome with each generation, and the prevalence of such mutations is determined by the balance of mutation rates and negative selection. If de novo mutations significantly impact cognitive performance, then there must necessarily be significant selection for higher intelligence–and hence behaviorally relevant differences in intelligence. This balance is determined entirely by the mutation rate, the strength of selection for intelligence, and the negative impact of the average mutation.

You can often make a machine worse by breaking a random piece, but this does not mean that the machine was easy to design or that you can make the machine better by adding a random piece. Similarly, levels of variation of cognitive performance in humans may tell us very little about the difficulty of making a human-level intelligence smarter.

I’m usually a fan of using mutational load to explain stuff. But here I worry there’s too much left unexplained. Sure, the explanation for variation in human intelligence is whatever it is. And there’s however much mutational load there is. But that doesn’t address the fundamental disparity: isn’t the difference between a mouse and Joe Average still immeasurably greater than the difference between Joe Average and Einstein?

Theory 2: Purpose-Built Hardware

Mice can’t play chess (citation needed). So talking about “playing chess at the mouse level” might require more philosophical groundwork than we’ve been giving it so far.

Might the worst human chess players play chess pretty close to as badly as is even possible? I’ve certainly seen people who don’t even seem to be looking one move ahead very well, which is sort of like an upper bound for chess badness. Even though the human brain is the most complex object in the known universe, noble in reason, infinite in faculties, like an angel in apprehension, etc, etc, it seems like maybe not 100% of that capacity is being used in a guy who gets fools-mated on his second move.

We can compare to human prowess at mental arithmetic. We know that, below the hood, the brain is solving really complicated differential equations in milliseconds every time it catches a ball. Above the hood, most people can’t multiply two two-digit numbers in their head. Likewise, in principle the brain has 2.5 petabytes worth of memory storage; in practice I can’t always remember my sixteen-digit credit card number.

Imagine a kid who has an amazing $5000 gaming computer, but her parents have locked it so she can only play Minecraft. She needs a calculator for her homework, but she can’t access the one on her computer, so she builds one out of Minecraft blocks. The gaming computer can have as many gigahertz as you want; she’s still only going to be able to do calculations at a couple of measly operations per second. Maybe our brains are so purpose-built for swinging through trees or whatever that it takes an equivalent amount of emulation to get them to play chess competently.

In that case, mice just wouldn’t have the emulated more-general-purpose computer. People who are bad at chess would be able to emulate a chess-playing computer very laboriously and inefficiently. And people who are good at chess would be able to bring some significant fraction of their full most-complex-object-in-the-known-universe powers to bear. There are some anecdotal reports from chessmasters that suggest something like this – descriptions of just “seeing” patterns on the chessboard as complex objects, in the same way that the dots on a pointillist painting naturally resolve into a tree or a French lady or whatever.

This would also make sense in the context of calculation prodigies – those kids who can multiply ten digit numbers in their heads really easily. Everybody has to have the capacity to do this. But some people are better at accessing that capacity than others.

But it doesn’t make sense in the context of self-driving cars! If there was ever a task that used our purpose-built, no-emulation-needed native architecture, it would be driving: recognizing objects in a field and coordinating movements to and away from them. But my impression of self-driving car progress is that it’s been stalled for a while at a level better than the worst human drivers, but worse than the best human drivers. It’ll have preventable accidents every so often – not as many as a drunk person or an untrained kid would, but more than we would expect of a competent adult. This suggests a really wide range of human ability even in native-architecture-suited tasks.

Theory 3: Widely Varying Sub-Abilities

I think self-driving cars are already much better than humans at certain tasks – estimating differences, having split-second reflexes, not getting lost. But they’re also much worse than humans at others – I think adapting to weird conditions, like ice on the road or animals running out onto the street. So maybe it’s not that computers spend much time in a general “human-level range”, so much as being superhuman on some tasks, and subhuman on other tasks, and generally averaging out to somewhere inside natural human variation.

In the same way, long after Deep Blue beat Kasparov there were parts of chess that humans could do better than computers, “anti-computer” strategies that humans could play to maximize their advantage, and human + computer “cyborg” teams that could do better than either kind of player alone.

This sort of thing is no doubt true. But I still find it surprising that the average of “way superhuman on some things” and “way subhuman on other things” averages within the range of human variability so often. This seems as surprising as ever.

Theory 1.1: Humans Are Light-Years Beyond Every Other Animal, So Even A Tiny Range Of Human Variation Is Relatively Large

Or maybe the first graph representing the naive perspective is right, Eliezer’s graph representing a more reasonable perspective is wrong, and the range of human variability is immense. Maybe the difference between Einstein and Joe Average is the same (or bigger than!) the difference between Joe Average and a mouse.

That is, imagine a Zoological IQ in which mice score 10, chimps score 20, and Einstein scores 200. Now we can apply Katja’s insight: that humans can have very wide variation in their abilities thanks to mutational load. But because Einstein is so far beyond lower animals, there’s a wide range for humans to be worse than Einstein in which they’re still better than chimps. Maybe Joe Average scores 100, and the village idiot scores 50. This preserves our intuition that even the village idiot is vastly smarter than a chimp, let alone a mouse. But it also means that most of computational progress will occur within the human range. If it takes you five years from starting your project, to being as smart as a chimp, then even granting linear progress it could still take you fifty more before you’re smarter than Einstein.

This seems to explain all the data very well. It’s just shocking that humans are so far beyond any other animal, and their internal variation so important.

Maybe the closest real thing we have to zoological IQ is encephalization quotient, a measure that relates brain size to body size in various complicated ways that sometimes predict how smart the animal is. We find that mice have an EQ of 0.5, chimps of 2.5, and humans of 7.5.

I don’t know whether to think about this in relative terms (chimps are a factor of five smarter than mice, but humans only a factor of three greater than chimps, so the mouse-chimp difference is bigger than the chimp-human difference) or in absolute terms (chimps are 2 units bigger than mice, but humans are five units bigger than chimps, so the chimp-human difference is bigger than the mouse-chimp difference).

Brain size variation within humans is surprisingly large. Just within a sample of 46 adult European-American men, it ranged from 1050 to 1500 cm^3m; there are further differences by race and gender. The difference from the largest to smallest brain is about the same as the difference between the smallest brain and a chimp (275 – 500 cm^3); since chimps weight a bit less than humans, we should probably give them some bonus points. Overall, using brain size as some kind of very weak Fermi calculation proxy measure for intelligence (see here), it looks like maybe the difference between Einstein and the village idiot equals the difference between the idiot and the chimp?

But most mutations that decrease brain function will do so in ways other than decreasing brain size; they will just make brains less efficient per unit mass. So probably looking at variation in brain size underestimates the amount of variation in intelligence. Is it underestimating it enough that the Einstein – Joe difference ends up equivalent to the Joe – mouse difference? I don’t know. But so far I don’t have anything to say it isn’t, except a feeling along the lines of “that can’t possibly be true, can it?”

But why not? Look at all the animals in the world, and the majority of the variation in size is within the group “whales”. The absolute size difference between a bacterium and an elephant is less than the size difference between Balaenoptera musculus brevicauda and Balaenoptera musculus musculus – ie the Indian Ocean Blue Whale and the Atlantic Ocean Blue Whale. Once evolution finds a niche where greater size is possible and desirable, and figures out how to make animals scalable, it can go from the horse-like ancestor of whales to actual whales in a couple million years. Maybe what whales are to size, humans are to brainpower.

Stephen Hsu calculates that a certain kind of genetic engineering, carried to its logical conclusion, could create humans “a hundred standard deviations above average” in intelligence, ie IQ 1000 or so. This sounds absurd on the face of it, like a nutritional supplement so good at helping you grow big and strong that you ended up five light years tall, with a grip strength that could crush whole star systems. But if we assume he’s just straightforwardly right, and that Nature did something of about this level to chimps – then there might be enough space for the intra-human variation to be as big as the mouse-chimp-Joe variation.

How does this relate to our original concern – how fast we expect AI to progress?

The good news is that linear progress in AI would take a long time to cross the newly-vast expanse of the human level in domains like “common sense”, “scientific planning”, and “political acumen”, the same way it took a long time to cross it in chess.

The bad news is that if evolution was able to make humans so many orders of magnitude more intelligent in so short a time, then intelligence really is easy to scale up. Once you’ve got a certain level of general intelligence, you can just crank it up arbitrarily far by adding more inputs. Consider by analogy the hydrogen bomb – it’s very hard to invent, but once you’ve invented it you can make a much bigger hydrogen bomb just by adding more hydrogen.

This doesn’t match existing AI progress, where it takes a lot of work to make a better chess engine or self-driving car. Maybe it will match future AI progress, after some critical mass is reached. Or maybe it’s totally on the wrong track. I’m just having trouble thinking of any other explanation for why the human level could be so big.

As noted, it appears that human intelligence seems to filter basically everything abstract through language processing, spatial modeling, and heuristics. That we can manage systems of any complexity through this method is quite remarkable. It will be interesting to see how a “general intelligence” AI will develop, whether it will work in layers of increasing abstraction like our brains do, or if it will have a separate capacity to design and access custom-built direct-access algorithmic functions like Alpha Go does. An intelligence like that would be quite remarkable, and quite alien.

Imo this discussion is missing the most important aspect, energy efficiency.

Human brains are ridiculously efficient compared to our hardware. It’s why I’m not worried about AI.

The size of smallest transistors (2D structures or 2.5D at best) is about 150nm, all things considered, especially spacing between transistors (this not the same thing as process size, which refers the smallest ‘mark’ you can make).

To contrast, the diameter of a ribosome – a full-fledged molecular factory – is 20nm. I think it’s a good illustration of how much silicon hardware sucks compared to biology – you can only do so much with software.

The more hypothetical part – which I find plausible – is how animal thinking works. Cell insides are small enough that the Second Law of Thermodynamics – a statistical law – no longer applies directly, but only on average. Operating on that scale allows reversible computing – which would sort-of explain how sudden insight works – you are not aware of how did you figure a solution to something because information about computation process is gone – reversed, leaving only the answer + some unavoidable heat waste.

If the part about reversible computation is true, there’s ZERO AI risk with both current and foreseeable hardware – trying to emulate reversible computation with a non-reversible one leads to an exponential blowup in energy usage. It could well be that a non-reversible human brain would use as much energy as a large city.

That doesn’t mean I’m skeptical about AI in general – it could well be that ~99% of human jobs are reproducible and simple enough they can be done even with an inferior silicon hardware.

I’m actually pretty inclined to believe your Theory 2: Purpose Built Hardware provides most or all of the answer. It’s exactly as you say, humans have custom “hardware” (wetware?) to handle complex things like spatial perception, proprioception, navigation, etc. Self driving cars have some overlap with our skills, in regards to object detection and physics prediction, and to the degree that it’s optimized for just those things, it performs as well or better than we do. There are still algorithms we have in our repertoire that give us edges in other ways, such as the ability to ascribe agency to other drivers and project likely behaviors onto them, or first-hand knowledge of how varying conditions affect friction, visibility, etc. These aren’t free, these are acquired from a lifetime of learning first to control our bodies as infants, and then a succession of experiences that shape our heuristic into a general world model. By the time we come of age and go through driver training, that model includes tons of “extras” like “some people are jerks” and “ice is slippery” that, while not learned for the purpose of driving, can nonetheless apply in that domain.

So I guess it would be wrong to say that it’s custom hardware so much as custom training. The AI that steer your self-driving cars have many benefits in that regard, such as being able to share the heuristic knowledge between multiple AIs and cut out a lot of the re-learning process for each successive generation. But it’s still lacking in some basic training that humans get by virtue of growing up around other humans, and interacting with them in lots of other ways long before we ever start driving. I suspect the “secret sauce” that is missing from allowing self-driving cars to truly surpass even the most skilled human driver lies in some of those common experiences of being human that we take for granted to a degree that we fail to recognize it is even a learned skill.

What I think will prove interesting over time is the ways in which autonomous vehicles behavior over time will adapt as society reaches a critical mass of self-driving-vehicle adoption. I.e. at some point “understanding how other human motivations work and how it affects their cars on the road” will not be a relevant heuristic, and instead the vehicles will be learning from the behaviors of other AI (and/or from the networked internal communication between cars). I suspect what “traffic” looks like at that point will be something distinctly different from today, and more organic in structure.

In any case, self-driving-car Utopia can’t get here soon enough, as far as I’m concerned. I’m tired of dealing with all the worst parts of humanity vis-a-vis traffic on a daily basis during my commute. I, for one, welcome our new robotic car overlords.

Here’s a different challenge: what would it take for a computer program to invent a game that people want to play?

I realize this is vague. How many people? How long would the game need to remain popular to count? Maybe it would be enough if people organize tournaments for the new game.

Eh, the answer is sociality, and to some extent play. You’ve got to have complex things that are trying to figure out (and sometimes fool) other complex things, to have intelligence. A new person is like a whole game unto themselves; “learning” a new person is like learning a whole new game, like Chess, on the fly. So you multiply that by all the pairwise interactions in an ancestral environment tribe (so learning a bunch of people is like learning Chess1, Chess2, etc.) in terms of roughly the numbers of such a tribe (what’s the memory number, like 20 people or something?), then you might have something like actual intelligence popping up.

That’s really what you’ve got to get an AI to do, in order to be anything other than an idiot savant with a bloated specialization in some particular form of pattern recognition. Intelligence is more than pattern recognition or learning, it’s also negotiation, which involves play and modelling, not just of the “opponent” but also of the world you’re both embedded in.

Re. the Mouse to Joe/Einstein thing, why not look at it the other way round? Maybe the reason why the diff. between Joe and Einstein seems great is because it really is great, and the measurement that’s telling us that it isn’t is using the wrong parameters.

The problem with all of this speculation, is that we don’t have any idea how scaleable intelligence is outside of its observed range. Perhaps its huge but it also might be quite incremental. Most growth curves follow a sigmoid pattern and we could easily be anywhere on that curve (we could even be at the top of it). If we’re near the bottom or middle, then AI may overtake us in milliseconds if we’re near the top, it may be a lot of work just to bring AI level with us. That’s assuming we use a human-like intelligence architecture. If we use a different one to ours, it’s growth curve could look very different and top out either way above or below ours on general intelligence tasks.

For instance someone mentioned working memory as a bottleneck. This is certainly true in explaining within human difference, but we don’t have a good sense of how large gains it would produce outside of the observed range. For instance, 64 bit processors have significant advances over 32 bit processors, but most people seem to think that a 128 bit processor would not confer many practical advantages. Similarly, we presumably are somewhat constrained by the physical brain sizes we can fit through the birth canal, but we don’t actually know how much extra advantage you would get with a doubling. Obviously having integrated non-neuronal technology integrated into artificial brains would confer an advantage, but again it’s hard to know in advance how much of an advantage that will be compared with humans who have access to those tools (either as currently using computer interfaces) or a more direct brain interface.

Computers playing chess and playing Go were not solved by creating a general intelligence.

I can beat the transistors off Deep Blue and AlphaGo- at Go and Chess, respectively.

Making a computer that can play Go and Chess is possible- and I can beat it at Tic-Tac-Toe, or Monopoly, or Iterated Prisoner’s Dilemma, or some other game that it hasn’t been designed for.

When you can design a computer that learns the rules and victory conditions of arbitrary games and it can occasionally get in the money at poker tournaments, then I will believe that computers might be approaching human-level at the skill of ‘game playing’, and are a force imminently to be watched in the field of software design.

You might be interested to know that neural networks are now able to generalize at least to learning the rules & victory conditions of arbitrary Atari games, even the same instance of the neural network learning multiple games in a row. Also that a deep reinforcement learning system beats professionals at no limit heads-up poker.

And neither of those networks is general purpose.

I would bet a thousand dollars to a hundred that neither of those learning networks could regularly beat me at Monopoly, and even money that neither would be any good at gin rummy, spades, or Go.

/General purpose/ is a very hard thing to do.

You might be interested in General Game Playing, where programs first are given the rules of the game, and then play. I don’t know how good the current crop is, but they used to be able to beat me at certain games.

Are they able to beat competent humans at Chess, Go, or even checkers?

The chess and go data seem awfully noisy, and I suspect that they conflate two different AI modalities: pre-neural machine learning and post-neural machine learning. It’s entirely possible that they were valid for most of the expert system/rule-based/deep-cutoff era of AI, but deep learning techniques are likely to improve super-linearly–especially in well-constrained domains of knowledge and performance. And the deep learning stuff is only about a decade old. If you were to plot the deep learning systems, they go from “plays go worse than a planarium” to “beats Lee Sedol” in an alarmingly short amount of time.

EDIT: Moved this comment here because this is really where it belongs, not on the Open Thread.

There’s an interesting little anecdote over at this blog (run by one of our commenters) and if it’s accurate as to the state of play in machine recognition, then my level of scepticism about AI threat has been racheted up another couple of notches.

Because this is not intelligence in any form, this is about as intelligent as a photocopier. It’s not recognising the image of a panda because, unlike a human who has general models for recognising “panda” and “gibbon” and so could identify the second image as a panda even if they had never seen the first image, the machine plainly isn’t doing this.

It’s not abstracting out a platonic form, if you will, of “panda” from all the images of pandas shown to it; it’s doing the same thing as a photocopier reproducing what is put on the platen to be copied: scanning the image and keeping it in memory, with a lot of other scanned images, to compare with a new image shown to it. So of course it’s being thrown off by the coloured pixels: the first image has no coloured pixels, so it’s a different image. Why it comes out with “gibbon” – well, maybe the images of gibbons it has been trained on had similar colours to the pixelation, so it’s making the ‘best guess’.

It’s Chinese Room activity at its finest, and if Searle’s thought-experiment was meant to show you don’t need consciousness, I think it shows that machine “intelligence” is anything but intelligence as we define it.

And that’s why I’m sceptical of AI threat qua “independent actor with its own goals”; I think it highly unlikely we’ll get that. What we will get are Big Dumb Machines that work by being so much faster in processing and having so much more memory and having so much more speed of recall and ability to brute-force pattern match than humans. And we’ll fool ourselves that Alexa and Cortana are really intelligent (because we so badly want them to be intelligent), and we’ll use them to make recommendations and base policy and governing and economic decisions on those recommendations, and then we’ll go running off a cliff and splat at the bottom because these are not conscious or intelligent entities that we have entrusted with so much power, they’re Huge Stupid Fast Photocopiers.

Maybe this isn’t the latest word in machine learning or neural networks or what have you, but if it’s anyway recent I think the worry about “soon mouse-level, then human-level, and the four minutes after that superhuman and beyond!” is very much misplaced; human-level or anything like it looks a long, long way away (“human-level” as in “can recognise, by identifying the notes and checking them against pieces held in memory, that the notes of a piece of music being played are dah-dah-dah-dum that’s A major etc etc etc okay that’s the Mendelssohn Fourth” and not “pattern-match snippet of music to other snippets in memory and if it isn’t identical on an oscilloscope, or however the heck the thing works, to one particular recording that it’s sampled then it’s completely lost as to what it is”).

This does not follow. Neural-net based recognition does build a general model. It has to, it cannot work this way:

The models simply aren’t big enough to work this way. But just because you’ve trained a model to recognize things by features doesn’t mean you’ve picked the correct features; if all the pictures of pandas in the training set are strictly black and white, maybe the model over-weights the lack of color in the picture.

I would submit that if you are training your model to recognise features of “black” and “white” for pandas, and not “has round ears on the top of its head, a snout, and claws” then you are not alone training it on the wrong features, you are not training it at all. If the “model” the neural net is creating is “the salient features of an image of a panda are that the image be in black and white, not colour”, then it is so far from anything that can reasonably be called human intelligence, it’s not intelligent in any meaningful form at all. It is not building a general model of what a panda is, it is building a model of “images of pandas are always in black and white, so an image in black and white is a panda, an image with colour is not a panda”, without any idea in the world what a “panda” is.

But if it works to sort out ten (black and white) images of a panda from fifty images of various animals, then we tell ourselves “Aha, we’ve taught it to recognise a panda!” when we have done nothing of the kind, and even more erroneously “Aha, now it knows what a panda is!”. Throwing in a few colour images of pandas isn’t any kind of correction for the underlying problem; now all the machine has ‘learned’ is that some images are in colour as well. It still has no model for “panda”, so give it a line art image and it’ll be stumped. And it certainly has not learned “a panda is an animal belonging to the bear family and can be identified by these anatomical features”, which even small human children can learn.

Deiseach, if I’m correctly interpreting the conclusions you’ve drawn, I can say with confidence that you’re really misunderstanding. Machine learning systems can absolutely recognize pandas in a fairly general way, not just panda photos it’s already seen, and without any gimmes like the panda images being exactly the black and white ones. I think I see where you went wrong — the paper that’s linked from plover.com is publication-worthy precisely because it’s a very surprising result. They did a systematic search for changes that would cause the system to fail, and then systematically sought out the minimal version to cause failure — that’s what they mean when they say it’s an adversarial example. It’s not at all typical for such a small change to cause failure that way. This is the equivalent of an optical illusion — it’s just that what works as an optical illusion for that system isn’t at all the same as what would work for a human, and so to us it seems ridiculous that the example in question would cause failure.

This is made more confusing by the fact that the other example cited by plover.com, Shazam, really is a very dumb, photocopier-like program, with no machine learning involved at all as far as I know — it looks for a very specific fingerprint associated with a very specific recording, and can’t generalize at all (although it does some smart things with compensating for noise, different sound systems, and volume variation).

Machine learning has actually gotten as good as humans at recognizing the subject of a photo, possibly even better.

Does that clear things up? It’s possible I’m misunderstanding what conclusions you’ve drawn, in which case never mind ;).

It is rude to call Shazam a “very dumb, photocopier-like program”. Containing machine learned is not the only way that a program can be not dumb. The ideas behind Shazam are actually quite subtle, and are far beyond what current deep learning would come up with just given raw data. There is a recent tendency to disregard anything that is not “machine learning”, and I think your post is a good example of this.

I think Deiseach is mostly right in her doubts about how Pandas are being recognized. If the program can be tricked in certain ways, it strongly suggests that it is failing to have the same kind of understanding that we have. If machine learning sees patterns of black and yellow bars as a school bus, then it is missing something. It is not acceptable to claim that the program is right, and that we are mistaken. Current recognition rates are impressive, but the evidence is that they are not looking at the same kinds of things that we are looking at. This mismatch will hurt them later.

I’m certainly not intending to be rude to Shazam. I’m a software developer with some experience in both music and signal processing, and I’d be extremely proud if I’d conceived and implemented Shazam. I only mean that it’s dumb in the precise, “photocopier-like” way that Deiseach mentions: it’s looking for the fingerprint of a specific recording, not generalizing in any way over the musical qualities of a composition. And in this way, it’s extremely different from how eg image recognition works, which does generalize over characteristics of the image, rather than looking for the fingerprint of a particular file.

Deep learning works on the premiss that it is extracting some features, and matching against those features. Shazam works in the same way, the only difference is that they are explicit about how they extract features, rather than having a learning algorithm extract them. Choosing to move everything to frequency space, and to select just the loudest signals is one way of selecting features. Counting the number of features that overlap is another way of comparing features. A machine learned system might, in a similar way, extract a set of archetypes, and determine a set of weights for each archetype. Of course, machine learned models are not usually described in terms of archetypes, but they can be thought of in that way, especially when they can be used generatively.

A machine learning algorithm is generalizing when it is used in recognition, it is looking for features that it has previously chosen. I suppose in future, we might have networks that carry out learning the instance to be classified, and then use those second level weights as input to a previously learned classifier. I have not seen this done, probably because it would be equivalent to something simpler, or because it makes no sense.

They’re certainly different. It may just be a matter of semantics; to me they seem importantly different, and to you they seem unimportantly different. I’m happy to leave it there; it wasn’t the main point I was trying to make anyhow.

In the past, nature (or what have you) has sometimes selected for better intelligence. I’m curious how the author believes this selection may take place in the future, if ever.

In the first paragraph, you use “easy” to refer to millions of years of optimization by evolution. In the second, you use “a lot of work” to refer to decades of work by small teams of humans. Maybe the variation/work-required-to-reproduce-that-variation between humans is small relative to the scale that evolution operates on, but large relative to the scale that humans operate on.

The obvious rejoinder is that mice and chimps seem to be closer to bad humans than bad humans are to world champions on many tasks…but I’m not sure that’s actually true. Has a chimp ever played a game of Chess or Go? Or learned a language? Or competed seriously with a below-average human on any intelligence task?

Maybe Eliezer’s picture is more-or-less accurate, but the scale is just much vaster than we think.

This is very misleading. You make it sound like these were random children, but in fact they were dan-level players (https://en.wikipedia.org/wiki/Computer_Go#History) who had been studying Go seriously for years. Similarly, in Go (and most things?), the gap between “random professional” and “world champion” is much narrower than the gap between “random professional” and “random human” (or even “amateur human”); perhaps a few hundred elo points compared to a few thousand elo points (https://en.wikipedia.org/wiki/Go_ranks_and_ratings).

If an AI was coming to get me, I would just spritz it with some orange juice and be done with it.

Seriously, though, I don’t see how it’s reasonable to think of any AI as “mouse-like”, or almost chimp-like (in some decades), or almost almost human-like (in some more decades). What AI has ever done anything with intent? What AI has ever been self-sufficient?

The fact that they can perform a couple tasks better than some humans is a trivial feat. Rocks are super good at sitting still. Flies are super good at smelling things. Should I be afraid of rocks and flies?

If someone told me, “Over the last 50 years flies have become exponentially better at smelling XYZ,” I would reply, “Huh…curious.” If someone then told me, “Humans engineered them to do it!” I would reply, “Oh…less curious.”

And yet, the fly is more threatening than the AI. The fly is a hearty thing; it can replicate and evolve according to the pressures of its environment. Spritz it with orange juice and it gets super psyched!

I might be inclined to find the AI threatening if it could act with intention, self-sustain, and replicate. The AI would be decidedly scarier than the rock, if it could do these things.

Anyway, I don’t mean for this comment to be disrespectful — killer blog, first time participating here! — but I wanted to inject into the discussion a little appreciation for what it takes to make it in this universe.

I’ll be sure to take your advice if the US government ever sends an autonomous weapon after me. 😛

I think you’re confusing hardware with software here.

The average supercomputer has hardware that’s highly specialized and nonthreatening (unless the hardware has some reason to be made threatening.) Far less dangerous than even a fly’s body… well, unless a battery explodes.

But the supercomputer’s software is already much more dangerous and useful than a fly’s mind, which can seek food and halfheartedly avoid flyswatters and that’s about it. It can be turned to a far wider variety of tasks than any fly – it can predict the weather, analyse language, play the stock market, trawl through databases to identify persons of interest or target demographics for an ad campaign … still not that dangerous except in the wrong circumstances, though.

The hardware probably isn’t going to get much more dangerous, at least not for cutting-edge AI. They’re always going to be in massive server farms. But the software is going to get more dangerous over time; maybe even comparable to, or more dangerous than, the human mind.

And once it’s acting with well-reasoned intentions, becoming self-sustaining and self-replicating (humans already know how to make computers) isn’t far behind.

There’s a potential equivocation here between classifying AIs as dangerous agents and classifying them as dangerous tools. There’s a fantastic case to be made for our current, non-general AIs being very dangerous tools in the wrong hands. It’s a much weaker case to try and paint them as dangerous agents.

I would just be wary of that with your argument.

How well did Einstein drive?

… TIL.

“But that doesn’t address the fundamental disparity: isn’t the difference between a mouse and Joe Average still immeasurably greater than the difference between Joe Average and Einstein?”

Not necessarily. I’d say it’s just immeasurable. The fact that you put these things on a number line does not make it sensible to put them on a number line.

I can’t help but think of this line: “The crucial cognitive structures of the mind are working memory, a bottleneck that is fixed, limited and easily overloaded, and long-term memory, a storehouse that is almost unlimited.”

Pragmatic Education

If our reasoning powers are dominated by one or two bottlenecks, then widening those bottlenecks could have a huge effect on our reasoning powers, without requiring much change to the rest of the brain. So people with brains that were in most respects highly similar could vary greatly in their reasoning powers, just because of a better working memory.

Of course, an AI could be built with however large a working memory you liked. It would be relatively easy to improve any specific bottleneck with the next version.

Alternate theory: Human individual variation in intelligence is large enough that it overlaps the average value of other species. A troop of chimps can survive in a jungle, a troop of village idiots left to their own devices will probably starve in a month.

Conversely, this theory suggests that there are some exceptionally intelligent chimps who approach average human intelligence. Maybe not quite, because it’s harder to improve something rather than break it, but they might perhaps get within a few standard deviations from it. This would explain the occasional chimp who invents new tools or manages to learn sign language.

I wouldn’t bet on a troop of Einsteins, or equivalent, to do much better than our village idiots. In fact, i wouldn’t bet against them doing worse. Intelligence is all well and good, but knowledge is probably equally, if not more so, important.

Human chess-playing ability runs from “has never seen a chessboard before” to “grandmaster”. By definition, any progress up until you surpass human grandmasters falls somewhere within this range – even the decades where computers couldn’t play chess at all technically fell within the human range of chess-playing ability.

I’m not sure about the villiage idiot, but grandmaster level is about where people start playing professionally.

Chess computers went from barely being able to compete in state tournaments (Chess 4.5, ’76) to master level (Cray Blitz in ’81, Belle in ’82) in five or six years, then to grandmaster level (HiTech and Deep Thought, ’88) in six or seven years. By 96-97, Deep Blue was about on par with the world champion, so that’s another decade. At some point over the decade after that, it became clear that the best chess computers were strictly superhuman; it was now impressive for a world champion to merely draw with one, and even that became more and more fleeting over time. And now, two decades after Deep Blue, the idea that a human could beat the best chess computers is laughable.

That’s about 8-15 years from “chess computers are as good as human professionals” to “chess computers are better than every human on earth”. Does that really sound like a wide band of human exceptionalism?

Depends how impressive you think being a professional in a niche field is. If a hypothetical baseball-playing robot went from AA to World Series MVP in 20 years, I wouldn’t find that nearly as impressive as being able to make one as good as a AA ballplayer in the first place.

If we’re trying to compare something to humans generally, using the average human is probably wisest. The average human can be okay at chess, but they’ll never be a grandmaster, even with intense training – chess AIs were “superhuman” in the 70s, if you’re talking about normal humans and not those with freakish chess skillz.

That’s rather the point, is it not?

It seems like a lot of your intelligence touchstones are binary. You can either do them or you can’t.

You can come up with dummy metrics that sum the number of tasks something is able to do, but that doesn’t tell you how “close” two groups are in intelligence.

There are some tasks that operate on a sliding scale. At what elo can you play chess, etc. But what we really want to know is whether leaps in intelligence translate into being able to do new tasks no one can really do yet. For example it is easy to imagine that we develop chess AI to 9001 elo but it the same AI recipe still sucks at driving.

Those gaps are quite different. The evolutionary work for example contains requirements for birth canal size and available daily energy intake. A too-powerful brain on a vegetable diet would simply starve animals to death.

AI as designed by humans on the other hand may scale quite different because human engineering often allows for dump stats. The system is optimized towards a few goals while others are just externalized. Neutral networks don’t need to reproduce. They don’t need to self-repair. They don’t need to supply themselves with constant energy, they can just be turned off and booted up again. Humans will supply everything they need to exist.

So if we measure difference in intelligence based on evolutionary steps it takes vs. how much hardware and advances in algorithms it takes then you’re getting different results. Do we have to factor in the billions spent on researching the latest semiconductor technology? And how do we account that most ANNs don’t even run on specialized ANN-hardware because the field is moving so fast that it rarely makes sense to build ASICs, especially for training instead of inference?

There are so many confounding factors in both chess progress and autonomous car progress. Autonomous cars are constrained by economics, we want them to run on cheap, embedded hardware and not on a supercomputer to beat the world’s best human driver. Chess computers on the other hand contain a lot of hand-crafted algorithms, something that is bottlenecked by humans writing task-specific code. That’s not generalizable intelligence. And even if you pair such hand-crafted rules with a hypothetical scalable ANN then the system may show more limited scaling behavior than whatever specific subtask the ANN is assigned to.

And this issue generalizes, most current ANN uses rely on manually designed scaffolding. And ANNs don’t even scale arbitrarily (yet). As hardware gets faster new research gets cranked out that shows what is necessary to structure those deeper networks for efficient training. Which means the structuring is still hand-crafted by humans for a specific scale level and for specific tasks.

So “progress” is measured by the combining many different inputs where most inputs will yield diminishing returns. And all practical implementations are cheating a lot by making some of those inputs irrelevant.

This is another confounding factor. Each human needs to be trained from a fairly low level (some batteries included). On the other hand ANNs can be copied. Once a single copy has been improved in a development setting it can be rolled out to millions of cars and the whole fleet will improve. That’s like everyone getting Einstein brain transplants.

I don’t think this actually happens very often. It’s just that it is very noticeable when it does. I think there are far more things that fall out of that range. I think we often don’t even think about human and machine being substitutable for tasks where there is a clear winner, so we focus on the few cases where we can even think about substitution:

* information storage (humans have been outperformed by technology on this since the invention of writing)

* information retrieval (humans have been outperformed on this clearly since google and in some forms since indexing)

* arithmetic

* applying skills and information from one ad-hoc unstructured domain to another (humans still greatly outperform machines here)

* theory of mind (humans still outperform greatly)

* running a switchboard (very fast transition from human to machine winner)

* delivering a short message between cities (clearly machines have won this for a long time)

I could go on, but I think the vast majority of tasks have a clear winner between man and machine, with a few tasks that combine skills from both lists or which the technology is still developing falling in the middle. It’s really quite a short list of specific things where we can even have the man versus machine fight with a straight face.

We also redefine those tasks as the established use changes. For example running a switchboard also involved voice recognition, i.e. taking the request of who you want to be connected to. This was substituted with dialing systems. Which in a way are dumber but more machine-friendly. Only now we have voice recognition could enough that we could go back to the old operator-style calling.

Cars also can’t reproduce, horses can. We just didn’t value that part that much once industrialization allowed us to externalize the production. Hypothetically, if cars had been invented in medival times they would have been extravagant royal carriages crafted by hundreds of blacksmiths, clockmakers etc.

The commoners would still have used horses because they’re a lot easier to make. All you need is two horses.

Some of the tasks here strike me as a bit weird, because it’s hard to draw the line between a somehow distinct machine and a tool being operated by a human. It’s sort of like saying: “Machines have always been better at knocking fruit off of high branches than humans have been, because look, here’s a stick you can use to whack at it,” and then trying to use that to demonstrate machine intelligence and sophistication at solving some problems. Writing seems to fall under the same category, as does the human-driven car and arithmetic. The obvious distinction one can try to draw is between automatic and non-automatic tasks, where the switchboard and Google handle things automatically, but all of our “automatic” systems still have to have a human at the helm telling them when and how to get started. If we accept this task-comparison as a standard of thought, then it’s very hard to cordon off the computer and say “this, now this is machine intelligence, while everything else was just tools of varying efficiency!” Instead, the computer just looks like an extraordinarily sophisticated tool, so much so that it inspires thoughts of intelligence, but which doesn’t have any of the real meat of it. The computer can beat people at information storage, retrieval, arithmetic, and even chess, but all that just means that it’s a really, really good tool. After all, the stick can beat the human at some tasks.

The next problem is to try and figure out what intelligence is if it has nothing to do with tool-oriented tasks. Potentially there could be no such thing as intelligence, and instead we could find there are only tool-oriented tasks, but I’m personally suspicious of that claim.

Part of the answer may be that the tasks you’re talking about (playing chess, driving cars) are not general goals achievable by a myriad of aims, but are specific constrained tasks that have been optimized for the human level.

Chess is a game that’s pretty well aimed at the human level. Mice can’t play it at all. You might be able to train chimps to not make illegal moves, but even that seems hard enough. Bad human players are close to as bad as you can be while still playing legal moves. And at the highest levels a lot of games are draws, so I think it’s quite plausible that the best human players aren’t *too far* away from ‘perfect’ play, which in chess means playing moves that (in the worst case) force a draw.

Cars and roads are likewise optimized for humans. Superhuman reflexes are helpful on the track, but they don’t buy you much driving around town; certainly not enough to make up for the occasional lapse in vision perception or whatever.

Basically the model I’m suggesting is that, in activities that are optimized for humans, there are often rapidly diminishing returns to performance above human level on specific subtasks, while subhuman performance can be very deleterious. That’s because the tasks are designed under the assumption that people will perform the component parts at around the human level, and so there’s no point in rewarding ability above this level, and you can safely assume human-level ability. Thus it’s not too surprising that narrow AIs progress slowly through human ability space.

Of course there are exceptions on other human tasks, particularly ones that reward particular subskills far above human level. I would imagine that a counter strike AI would progress rather rapidly from “running around in circles” to “instantly headshotting any human player” fairly quickly.

I do need to say, the title of this post is fantastic 🙂

Before I read on, wasn’t eliezer obviously talking about general intelligence?

Whenever I see debris in the street (empty plastic bag? raccoon carcass? fallen chunk of rock?), I wonder how a self-driving car would handle that situation.

@ Kaj Sotala

Rigidly following all speed limits seems a perilous strategy.

It is a great strategy if everyone else is also doing it. Its terrible if everyone else is doing 1.5x the speed limit.

Wait, what?

The “short” period of time we are taking about here is longer than humans have existed as a species. And that’s not taking into account the engineering work to develop the preconditions, namely DNA, sexual selection, mammals, and the entire ecosystem that created the perfect game theoretic conditions where evolving intelligence makes sense.

I think there’s an assumption in there somewhere that evolution is massively inefficient and that more goal-directed human engineering can outperform it by orders of magnitude.

That assumption has proven true in certain domains. In other domains, not so much. It’s certainly one hell of an assumption to apply to intelligence.

On the other hand, if you decrease the time between generations by orders of magnitude you can accomplish that speedup without changing anything else at all.

What do you mean by “without changing anything else at all?” Presumably a human engineering effort to develop AI is not going to literally simulate the entire evolutionary history of the human species, and if one tried, it’d run orders of magnitude slower than the real thing, not faster.

Right. My point was, if you can iterate faster than 15 years or so, then the system evolves faster than humans do. Iterate once an hour and you’re evolving 130000 times as fast as humans, for example, plus whatever gains you realize from targeting mutations / faster mutation rate / stronger selection pressure / etc.

Yeah, but that assumes that your iteration process is as good at producing intelligence as human evolution was.

One way of looking at human evolution is “wow, so inefficient, new generation every 15 years, easy to outperform”.

Another way is “wow, the Earth is basically a giant super-computer simulating a complex evolutionary algorithm (DNA), competing / cooperating in a completely open-ended game (survival in a world with a fully-realized physics engine), and even with the computational resources of an entire planet, it still took millions of years to evolve human-level intelligence”.

In other words, in order to run an evolutionary simulation on human-created hardware that runs faster than the Earth-as-supercomputer, your simulation is going to need to be a lot simpler than the actual process that led to humans was, by an order of magnitude that has a lot of zeros attached to it. Maybe that’s possible, maybe that’s not. And even if it is possible, to actually implement it, you have to understand the evolution of human intelligence well enough to know which parts of the simulation you can safely discard.

On a technical note, EQ actually has a serious flaw – it uses brain size as a proxy for number of neurons, and those in turn as a proxy for the connectivity. There was a recent paper on this (http://www.pnas.org/content/113/26/7255.abstract) showing that even though smart birds like corvids have physically small brains, those brains are jam-packed with neurons, reaching primate-like total numbers.

As for the “smart at one thing, dumb at others”, this is basically the description of most “smart” non-mammals, especially if you also exclude birds. Crocodilians and monitor lizards are amazingly/terrifyingly intelligent in specific contexts, yet dunces in others. Using bait to catch food, understanding locking mechanisms (even if they lack the dexterity to manipulate them), anticipating prey behavior, coordinated pack hunting, etc., yet their communication abilities range from modest to minimal, and they’re largely uninterested in applying their minds to anything that doesn’t end with a meal or injuring a keeper. The same is likely true of cephalopods, though I don’t have direct experience with them like I do with crocodilians and other reptiles.

I’m also skeptical of the “Humans are so brilliant” angle, thought not in the stereotypical “but animals are smart too”, but rather the opposite. Even dealing with less intelligent animals than crocs and monitors, it’s amazing what can be accomplished with little more than a good memory, diverse stimuli, communication, and classical and operant conditioning. For all our vaunted intelligence, how much of what most humans do (or what we do on a day-to-day basis) really requires explanations beyond those factors, possibly with imitation thrown in? Are we *really* that smart, or are we just chimps with great memories and who are good enough at generalizing their operant conditioning to find rules which apply universally (i.e. rationality), then apply them all over the place until we get a banana / a program to compile / a Nature paper? I once heard it said that genius isn’t a matter of information stored or processing speed, but the ability to draw connections; can we think of Einstein as just a chimp who generalized the right rules in the right way at the right time to the right problem, a chimp could form more associations between rules and experiences than the others? We’ve created a system which amplifies our abilities through recorded knowledge, detailed communication, a special language for these universal rules (math), and which has positive feedback (new tools -> new info -> new discoveries -> new tools…), but in the absence of that community, amplification, & feedback, how smart are we? Are we only 5% smarter than an Austalopithecus, but that crucial 5% was the tipping point allowing us to create a feedback system? After all, we spent the vast majority of our species lifespan in a state only barely more advanced that modern chimp troops.

Perhaps it’s like flight. TONS of animals (and plant seeds) glide in some manner or another, and some can add power (just not enough). But once you cross the threshold into being capable of powered, steady, level flight (which has only happened 4 times out of the numerous gliders), the benefits are immense and a huge adaptive radiation follows. Archaeopteryx wasn’t that much worse, muscularly or aerodynamically, that some of the poorly-flying modern birds, but that last extra gram of muscle, that last 2% increase in lift:drag ratio makes all the difference. Perhaps we’re simply on the “winning”* side of that last inch?

*results pending.

You beat me to it, Cerastes :).

Brain size has only a loose correlation to neuron count, and neuron count correlates directly to information processing and data manipulation.

As far as AI goes, I’ve always believed the hockey stick jump in AI development as the inevitable conclusion:

You can give a machine the equivalent of a trillion neurons. It’ll take a while (and lots of programming) to get those “neurons” to function the way organic neurons do.. but when that is achieved, the AI will become super-intelligent (approximately 10x human) in relatively short order.. much faster than a human takes to develop. Electrical signals travel through our brain at about 200 miles per hour, or have about a 100 millisecond delay from signal activation to completion. In our hypothetical trillion “neuron” computer, using the same pathway lengths that exist in the human brain, that same signal would travel at about 175,000 mph or have a delay of 3 nanoseconds from activation to completion.

In a nutshell, it may take a human about 20 years to fully develop cognition, but computers operate about 3 millions times faster than humans.. so once the programming is complete, it would take a computer about 4 minutes to reach that same level of development, but with 10x the amount of neurons a human has.. or becomes “super intelligent.” The question I have is what happens in the next 4 minutes.. 😉

One important thing that seems to be missing from this, is the huge difference in training between biological intelligence and artificial intelligence. At the moment AI can do really well, often better than humans, if tons of labeled samples exist. Once this training is done, we can just copy the coefficients to generate a clone of the AI. We can’t do that with humans. Children are not born knowing everything that there parents knew at conception. But on other hand, humans are much better at learning from a tiny set of examples. Show a human a single picture of a pangolin and he will be able to recognize pangolins in completely different pictures including drawings.

And on other issue is energy efficiency. The brain is extremely energy efficient, it uses around 20 watt and even that was only possible because it also enabled more efficient use of food via cooking. Current AI often uses large amounts of power, especially for training and CMOS semiconductor technology is close to its limits.

I think once you get to full mouse level intelligence it is like easy to scale that up to beyond human intelligence, however, we are far apart from that. Just because AI has started to become really useful on some tasks, that doesn’t mean that we have made much progress toward general AI as these specialized tasks still put constrains on the tasks that are very helpful for AI, but not all required for human intelligence.

So a general distinction about AI that’s being missed here: there are two ways we might think ai will improve suddenly at some point. First, maybe once we figure out how to do it we can just throw a bunch of extra hardware at the problem to cross from regular smart to super smart.

In practice, this would probably just give the regular (exponential) curve we see here for things like chess – we’re assuming at the point we get the algorithmic improvement we still have a bunch of boring tricks we can add. In practice, I suspect by the time we’re anywhere close to AGI we’ve already long since done all the easy tricks like adding hardware, so improvement will remain gradual.

But, there’s another method which doesn’t generalize, which is that agi might be able to improve itself. This has no analogy in the chess scenario (a computer good at playing chess isn’t good at programming a computer to play chess). It doesn’t have an equivalent anywhere short of agi. And that’s the version that could plausibly lead to a foom scenario.

Interestingly, it only leads to the food scenario once the ai is comparable in ability to top ai experts. So we’d have a gradual curve all the way up to “ai can match top human players”, and then a sudden jump to being a hundred times smarter over a day or two.

Thank you. Though there’s another issue: as I said elsewhere, people are talking about human-level AGI as if it hasn’t already passed – by assumption – the only visible barriers to absorbing a superhuman amount of knowledge.

I would add my 0.25 cents. It is wrong to compare mice brain and Einstein’s brain, as most what makes Einstein = Einstein is not its hardware, but its training. In case of mice, there is no much training. I mean that if Einstein’s brain was born 10 000 ago he would not be able to create the relativity theory. Einstein is the product of the long evolution of billion’s of brains, which created language, physiscs and even invented Lorentz equation. So Einstein’s brain is equal to billion human brains and the gap between chimp and him is much wider, when it is typically presented by the EY’s graph.

Off-topic: Your comment about “my 0.25 cents” piqued my interest. Is this a phrase borrowed from another language/culture, or a joke about inflation/having an eighth as much to offer/etc.?

On-topic: Yes, training makes a big deal. I suspect animals are a lot closer to humans than we realize in terms of raw intelligence, they just can’t be trained nearly as well.

In fact, I copied my comment from the Facebook, where I replied to another comment, which started with “My 5 cents..” – so I was joking about commenting on a comment.

Current success with neural nets shows the importance of the large datasets, and humans have enormous dataset from their childhood. May be we just don’t have correct dataset to train animals. ))

I tentatively think you’re just wrong about how driving works. It takes training to be able to apply our ‘natural’ brainpower to the task. I suspect the worst adult human drivers can’t apply it consistently, for one reason or another.

(It also seems odd to say that the societal equivalent of Scaramucci’s tenure has been doing anything “for a while.” But it would still surprise me if it got stuck within the range of people who can actually apply their intelligence to the task.)

I don’t think Eliezer’s argument is common sense. It’s actually pretty contrarian. Common sense says that Einstein is substantially more intellectually capable than a mouse, a chimp, or a village idiot. I think I might agree.

“Amount of evolutionary work it took to generate the difference, number of neurons, measurable difference in brain structure” — these are all inputs, not outputs. So they might not be a good measure of intelligence — akin to trying to value a corporation based on its annual expenses.

As for the last one, performance on various tasks, I’d argue that Einstein is able to handily outperform a mouse, a chimp, and a village idiot on a broad range of tasks.

If this is true, how is Einstein able to do so much more with similar inputs? I’m not sure.

Maybe Einstein isn’t a genius due to hardware, he’s a genius due to software. If you strand Einstein on an island and he grows up as a feral child, he isn’t going to be able to invent relativity. The village idiot can’t run the latest software (he can’t do calculus for example) and thus the range of intellectual tasks he’s capable of performing is underwhelming.

Yes, much of the variance in intellectual output between humans seems to be explained by genes rather than environment. But that doesn’t mean software is unimportant. Consider a world where all the world’s software is open source, but some computers aren’t very good at running it. Most variance between computers will be accounted for by hardware, but it would be a mistake to conclude that we can just ignore software.

Instead of just thinking in terms of amount of evolutionary work, try thinking in terms of amount of computational work period. You could think of biological evolution as doing very slow computation to optimize the genome of an individual species. You could think of a human society as performing much faster computation, distributed among human brains, which develops intellectual tools like calculus. Maybe there is some crazy ratio like 100 yrs of human society computation=1 million years of evolutionary computation or something like that.

But why does this matter? We don’t care how Einstein got smart, we’re only comparing his intelligence to other things.

It could matter. This partly depends on what scientific information is available – the AI is allowed to do what you would do in its place.

«“Amount of evolutionary work it took to generate the difference, number of neurons, measurable difference in brain structure” — these are all inputs, not outputs.»

Sure, obviously that’s true. But it is also the case that metrics of outputs have their own problems, and some cannot be applied transversely, so measuring inputs is also important. This is why most companies demand employees to pass a card when they arrive and leave. Measuring inputs also helps you evaluating your subject. If an animal has only a couple of neurons, you can rule out any high intelligence. Likewise, if an employee never shows up to work, you can infer his outputs are nil as well.

Is it possible that the problem where dealing with is that animals just don’t have intelligence, at least as we define it for people, and people do. In other words that humans have certain fundamental ways of thinking that animals just don’t and that therefore the range of humans can be very large and take sometime to surpass because some humans really do have almost no intelligence (which is still way better than none) and some have a lot. Obviously this couldn’t be true for all aspects of intelligence but might be for some.

It’s actually pretty easy to set up a compelling case for “intelligence” for which this exact model applies, although it does mean that we have to toss out a lot of complex cognitive tasks as being fundamentally “unintelligent.”

Let’s say that “intelligence” is precisely the innate skill required in order to successfully relate “real” phenomena, in all their varying forms and indistinct nature, to abstract structures like language. That is, all beings with eyes can look at trees, but only intelligent ones will declare that they are trees as a separate category from grasses, herbs, and shrubs. Carrying this further, more intelligent individuals will identify separate species of trees, and perhaps the most will identify groups of similar species, like oaks and pines. This definition decently matches our innate intuitions about intelligence, in that we regard people who are able to describe things which are already out there in the world in comprehensible terms as being very intelligent. (To echo Neil Gaiman’s comments on the late Douglas Adams, a genius is someone who sees the world in a different way than regular people do and can make them see it in the same way – to communicate it effectively.)

So, does this link up well to your criteria? It neatly separates out all other animals, barring possibly some extremely high-intelligence ones like dolphins and chimps and Alex the African Gray Parrot, as being unintelligent, and further establishes certain humans as exhibiting little intelligence. To this extent, it fits those criteria perfectly. Further, it takes all tasks which computers have surpassed humans at and categorizes them as inherently unintelligent: rather, it was those humans who programmed the computers who demonstrated actual intelligence by binding down real-world phenomena into some form of code. An interesting side-effect of this is that very few human activities actually count as demonstrating intelligence at all, which does prove problematic.

The limitation of using this definition is simply that it doesn’t deal at all with the various other mental skills which we regard humans highly for: good memory, fast calculation, hand-eye coordination, etc. Although they might be different in kind from what we called intelligence, a good number of them seem to go hand-in-hand with it. It also gives us trouble in separating things like octopi, which seem pretty clever but don’t have a lot of word skills, from things as dumb as pillbugs. These aren’t knockdown critiques, though, but places the theory could be expanded.

Either way, I think that answers your question. Yes, it is possible, but we might not have a totally clear answer without a lot more work.

I’m not sure about that. Current neural network systems are able to recognize quite abstract classes, such as chairs and mammals, and I don’t think it is correct to ascribe that level of “intelligence” to the intelligence of the programmers – they are undoubtedly very clever, but the actual implementation is about linear algebra and mathematics, and the training simply consists of showing the network a huge number of examples – not in itself a task requiring a lot of cognition.

An interesting side-effect of this is that very few human activities actually count as demonstrating intelligence at all, which does prove problematic.

I disagree. Just because we are intelligent, doesn’t mean intelligence is needed for everything we do.

Neural networks are lovely, aren’t they? In fact, it’s the very idea of how to use them and the intellectual work needed to get them to the state where they can be tuned is significant. The “intelligent” work here is how to get from linear algebra to a working and trainable AI. After the first breakthrough, subsequent work is less difficult, but there’s some real creative labor in figuring out what examples to give. For example, check out Google’s pictures of what their visual AI – probably the best on Earth – does to various images when it’s tuned to things that aren’t in them. It’s a great little lesson on how some excellent computer scientists think about the training process, and it’s more than just force-feeding the thing examples!

It’s fine to disagree on that point, and in fact I’m very sympathetic to the opinion, but it is a problem that needs a thorough solution. The traditional position is that intelligence is needed for many tasks outside of just assigning abstract classes, and throwing out the traditional position takes extra work. Otherwise, the argument remains vulnerable to the “but everyone knows” counterpoint, and that’s no good. (The “but everyone knows” counterpoint isn’t worth getting rid of, either, in part because it lets you defend typical use of words against people who insist on using them in strange ways.)

I didn’t mean to belittle the work people do on neural networks. But I think it is clear that e.g. AlphaGO’s skill does not stem from its programmers’ skill at playing GO. The systems are in fact capable of constructing its own, new abstractions to fit the problem.

And the efficacy of a sewing machine has nothing to do with its designer’s ability to do needlework. I’m afraid I must have put my points in a confusing manner for you to have interpreted “the ‘intelligent’ work here is how to get from linear algebra to a working and trainable AI” as having anything to do with skill at Go rather than skill at transforming the complexities of games into (relatively) complete machine solutions.

The machines, I’m afraid, do not construct abstractions. They are given abstractions and return abstractions according to an internal calculus, in the same way that mathematics does not construct abstractions but simply takes in abstractions and returns them. Humans understand the abstractions on both ends.

Yudkowsky’s argument might not be in-congruent with the data. If the intelligence gap between humans and animals is large, but the gap between the least and most intelligent humans is small in comparison and we expect the intelligence of AI to follow an exponential curve through these samples, then it follows that, when the AI is developing over the range of humans, its progress looks linear. This is for the same reason as when you zoom in on any smooth curve it eventually looks straight.

The first plot you showed looks like linear regression applied to a data set of computer capabilities in which an exponential could have fit quite well, considering the clustering of low success computers in the 1950’s. Has a Chi-squared test been done to see if a linear model actually fits the data better?

The second plot also looks quite bereft of data, enough that I could draw a straight line through it and you mention that alphago’s recent success would be an uptick on that plot.

I suppose most readers are aware of this, but IQ is a seriously misleading way to measure absolute mental capability. By definition, average human IQ is 100 and the standard deviation is 15. This means that a negative IQ is not only possible, but that a similar number of people have a negative IQ as have an IQ over 200. (Six sigmas correspond to about three per million, according to manufacturing process literature). In any case, IQ doesn’t let you claim that Einstein (hypothetically with an IQ of 200) is “twice as smart” as the average, such a term would be just meaningless.

Which I think is the whole confusion behind the posting, we don’t have any good ways of comparing mental capability on an absolute scale.

Anyway, I did a visualization for my machine learning class (on the blackboard only, sorry, not preserved for posterity), showing a rough picture of the development of various technologies (natural language processing, self-driving cars, chess, algebra and calculus, etc) and the y-axis having categories of “works at all”, “worse than human”, “better than some humans”, and “better than any human” – with the (admittedly not very well founded) conclusion that going from better than some to better than all often goes quite quickly. YMMV.

Another way to compare is by the number of neurons in artificial and biological systems. With the caveat that an artificial neuron is not particularly comparable to a biological neuron, it still reflects the capability of an organism or an AI system to solve complex tasks. See the figure in http://www.deeplearningbook.org/contents/intro.html, figure 1.11, page 27, where a linear extrapolation indicates that we will be building artificial networks with a comparable complexity to the human brain around 2056.

Very cool figure, thanks for the link. 🙂

No; 4.5 sigmas correspond to about three per million; you’re supposed to leave a 1.5 sigma radius safety buffer for the process mean to shift around in.

6 sigmas, with no shift, is about 1 per billion.

Right, thanks for the clarification. Still, this leaves us with perhaps a couple of persons with an IQ above 200, and the same number with a negative IQ, statistically speaking. (Which is a statement that probably shouldn’t be taken literally, IQ tests are only valid for a certain range, and it’s probably not meaningful to talk about IQ too far from the mean.)

I’ve also seen statements that indicate that IQ is only beneficial up to a point, and that after that, differences in IQ no longer reflects work performance or other correlates. Is this a defect of the IQ tests, perhaps due to their limited ranges?

>Or maybe the first graph representing the naive perspective is right, Eliezer’s graph representing a more reasonable perspective is wrong, and the range of human variability is immense.

How is Eliezer’s perspective more reasonable? There doesn’t seem to be any evidence in favor of it.