[I am not a neuroscientist and apologize in advance for any errors in this article. A recent study came out contradicting some of the claims mentioned here. See here for study, this comment for some discussion, and entry #20 on my Mistakes page.]

Hey, let’s review the literature on adult neurogenesis! This’ll be really fun, promise.

Gage’s Neurogenesis In The Adult Brain, published in the Journal Of Neuroscience and cited 834 times, begins:

A milestone is marked in our understanding of the brain with the recent acceptance, contrary to early dogma, that the adult nervous system can generate new neurons. One could wonder how this dogma originally came about, particularly because all organisms have some cells that continue to divide, adding to the size of the organism and repairing damage. All mammals have replicating cells in many organs and in some cases, notably the blood, skin, and gut, stem cells have been shown to exist throughout life, contributing to rapid cell replacement. Furthermore, insects, fish, and amphibia can replicate neural cells throughout life. An exception to this rule of self-repair and continued growth was thought to be the mammalian brain and spinal cord. In fact, because we knew that microglia, astrocytes, and oligodendrocytes all normally divide in the adult and respond to injury by dividing, it was only neurons that were considered to be refractory to replication. Now we know that this long accepted limitation is not completely true

Subsequent investigation has found adult neurogenesis in all sorts of brain regions. Wikipedia notes that “In humans, new neurons are continually born throughout adulthood in two regions of the brain: the subgranular zone and the striatum”, but adds that “some authors (particularly Elizabeth Gould) have suggested that adult neurogenesis may also occur in regions within the brain not generally associated with neurogenesis including the neocortex”, and there’s also some research pointing to the cerebellum.

Some research has looked at the exact mechanism by which neurogenesis takes place; for example, in a paper in Nature cited 1581 times, Song et al determine that astroglia have an important role in promoting neurogenesis from FGF-2-dependent stem cells. Other research has tried to determine the rate; for example, Cameron et al (1609 citations) find that there is “a substantial pool of immature granule neurons” that may generate as many as 250,000 new cells per month. Still other research looks at the chemical regulators – a study by Lie et al, cited 1312 times, finds that Wnt3 signaling is involved.

(which is making you more nervous – the fact that I keep emphasizing how many citations these studies have, or the fact that one of the principal investigators is named “Lie”?)

But the most exciting research has been the work identifying the many important roles that neurogenesis plays in the adult brain – roles vital in understanding learning, memory, and disease.

Snyder et al (775 citations) find “a new role for adult neurogenesis in the formation and/or consolidation of long-term, hippocampus-dependent, spatial memories.” Dupret et al go further and find that “spatial relational memory requires hippocampal adult neurogenesis”. Aimone et al (633 citations) find “a possible role” for adult neurogenesis in explaining the “temporal clusters of long-term episodic memories seen in some human psychological studies”. And Jessberger et al (506 citations) find a role in object recognition memory as well.

In terms of learning, one of the major studies was Gould et al in Nature Neuroscience (2207 citations) finding that Learning Enhances Adult Neurogenesis In The Hippocampal Formation. Lledo et al (1288 citations) find that neurogenesis plays a part in explaining the brain’s amazing plasticity, and is “highly modulated, revealing a plastic mechanism by which the brain’s performance can be optimized for a given environment”. Clemenson et al (17 citations) find that “from mice to humans”, environmental enrichment improves neurogenesis, and this “may one day lead us to a way to enrich our own lives and enhance performance on hippocampal behaviors”.

But I’ve always been most interested in the link with depression. In 2000, Jacobs et al published Adult Brain Neurogenesis And Psychiatry: A Novel Theory Of Depression (961 citations). It’s important enough that I want to quote the whole abstract:

Neurogenesis (the birth of new neurons) continues postnatally and into adulthood in the brains of many animal species, including humans. This is particularly prominent in the dentate gyrus of the hippocampal formation. One of the factors that potently suppresses adult neurogenesis is stress, probably due to increased glucocorticoid release. Complementing this, we have recently found that increasing brain levels of serotonin enhance the basal rate of dentate gyrus neurogenesis. These and other data have led us to propose the following theory regarding clinical depression. Stress-induced decreases in dentate gyrus neurogenesis are an important causal factor in precipitating episodes of depression. Reciprocally, therapeutic interventions for depression that increase serotonergic neurotransmission act at least in part by augmenting dentate gyrus neurogenesis and thereby promoting recovery from depression. Thus, we hypothesize that the waning and waxing of neurogenesis in the hippocampal formation are important causal factors, respectively, in the precipitation of, and recovery from, episodes of clinical depression.

This theory got a boost from studies like Duman et al (522 citations), which found that antidepressant drugs like SSRIs upregulated neurogenesis – could this be their mechanism of action? And Ernst et al (327 citations) find that “there is evidence to support the hypothesis that exercise alleviates MDD and that several mechanisms exist that could mediate this effect through adult neurogenesis” – ie the antidepressant effects of exercise seem to work this way too. Electroconvulsive therapy, the most effective known treatment for depression? Works by promoting adult neurogenesis, at least according to Schloesser et al.

Is there anything that doesn’t have important neurogenesis-related effects? It would seem there is not. Sex, for example, “promotes adult neurogenesis in the hippocampus, despite an initial elevation in stress hormones” according to Leuner et al (124 citations). Drug addiction is modulated by neurogenesis. We need rock n’ roll to complete the triad, so here’s Music Facilitates The Neurogenesis, Regeneration, and Repair of Neurons.

A study in Nature Neuroscience that garnered over 3000 citations found that running increased neurogenesis. The popular science press was quick to notice. A slew of exercise-neurogenesis studies spawned articles like Psychology Today’s More Proof That Aerobic Exercise Can Make Your Brain Bigger. Dr. Perlmutter (“Empowering Neurologist!”) has a video about how you can Grow New Brain Cells Through Exercise. After this the pop sci world might have gotten a little carried away, until neurogenesis controls everything and is controlled by everything in turn. Slimland (of course there’s a site called Slimland) has a How To Grow New Brain Cells And Stimulate Neurogenesis page, suggesting you can “set yourself free and start flying” by removing toxins, eating a ketogenic diet, and meditating. Naturalstacks.com boasts 11 Proven Ways To Generate More Brain Cells, Improve Memory, And Boost Mood, which advises…really? Do you really want to know what it advises? Come on.

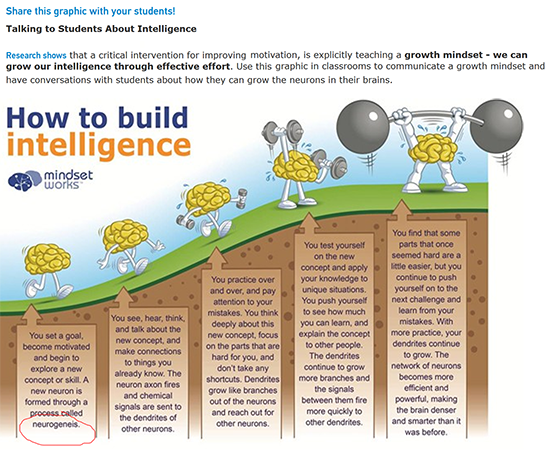

Also, growth mindset. Of course growth mindset. Carol Dweck’s Mindsetworks helpfully provides an infographic for teachers, urging them to tell their students that each time they set a goal or become motivated to learn a new skill, “a new neuron is formed through a process called neurogeneis [sic]”.

So it’s no surprise that researchers in the area are calling adult neurogenesis “one of the most exciting and rapidly evolving areas of research in the field of neuroscience”.

II.

Fun fact: there’s no such thing as adult neurogenesis in humans.

At least, this is the conclusion of Sorrells et al, who have a new and impressive study in Nature. They look at “59 post-mortem and post-operative slices of the human hippocampus” and find “that recruitment of young neurons to the primate hippocampus decreases rapidly during the first years of life, and that neurogenesis in the dentate gyrus does not continue, or is extremely rare, in adult humans.” Also, the subgranular zone, the supposed part of the brain where neurogenesis begins, isn’t even a real structure.

I am not a neuroscientist and am unqualified to evaluate it. But the Neuroskeptic blog, which I tend to trust in issues like this, thinks it’s legit and has been saying this for years. Ed Yong from The Atlantic has a really excellent review of the finding that interviews a lot of the major players on both sides and which I highly recommend. Both of these reinforce my feeling that the current study makes a really strong case.

I was kind of floored when I saw this, in a way that I hope I was able to replicate in you by preceding this with the literature review above. How do you get so many highly-cited papers speaking so confidently about every little sub-sub-detail of a phenomenon, if the phenomenon never existed in the first place?

As far as I can tell, this was entirely innocent, well-intentioned, and understandable. It happened like this:

Adult neurogenesis was discovered in rats. This was so surprising, and such a violation of established doctrine, that it quickly became one of the most-investigated areas in neuroscience. Hundreds of studies were done on rats to nail down every little detail of the process.

The work was extended to many other mammals, to the point where it seemed inevitable that it must be true of humans as well. This was difficult to test because the relevant studies involve dissecting brains, and there aren’t that many human brain specimens available with the necessary level of preservation. After a lot of work, a few people got a couple of brains, did some very complicated and contamination-prone tests, and found evidence of adult neurogenesis. This encouraged everyone to assume that the things they had discovered about rat neurogenesis were probably true in humans as well, even though they could never prove them directly because of the difficulty of human experimentation. Later some other researchers tried to replicate the complicated and contamination-prone tests and couldn’t find adult neurogenesis in humans, but everyone assumed they had just messed up some aspect of the complicated testing process.

And to complicate matters, everyone in the new study has been very careful to say they can’t prove with certainty that zero adult neurogenesis occurs – just that the levels are so low and hard to detect that they can’t possibly matter. Looking back on some past studies, it seems that “so low and hard to detect that they can’t possibly matter” was actually within their confidence intervals. So it may be that some team found some extremely tiny and irrelevant population of immature neurons in the brain, gave a confidence level that included that number, and then everyone just assumed we were talking about levels similar to the ones we saw in rats.

With real scientists taking not-entirely-sufficient care to distinguish rat from human results, the popular press felt licensed to totally ignore the distinction (did you even notice which of the studies in Part I were done on which species? Don’t worry, nobody else did either).

Meanwhile, synaptogenesis – the growth of new synapses from existing nerve cells – was getting linked to depression and all kinds of other things in a lot of interesting studies. When people started talking about neurogenesis’ role in depression, psychiatrists like me who have trouble keeping words ending with -genesis separate just sort of nodded and said “Oh, yeah, I heard about that” and didn’t give it the sort of scrutiny it deserved.

(I wonder if this is young-earth creationists’ problem too)

So it’s not like any one person made a spectacular mistake anywhere along the lines. Most of the studies done were in rats, and 100% correct. A few studies were done in humans, and may have gotten the wrong answer in a very difficult domain, while also hedging their bets and admitting they were trying something hard. It was only on a structural, field-wide level that all of this came together into people just assuming that adult human neurogenesis had to happen and be important.

…or at least, that’s the optimistic take on it. But I can’t help thinking – antidepressants work in humans, which suggests that the people who found neurogenesis was necessary for antidepressant effects must have just been plain wrong. And if exercise has antidepressant effects in humans, then the claim that those effects are neurogenesis-mediated must be wrong too. And, uh, humans form spatial and temporal memories, so unless we do this by a totally different mechanism than the ones rats use, people must have been wrong when they said neurogenesis was involved in that. ECT? Works in humans. Brain plasticity? Happens in humans. So maybe it would be better to say that the original claim that adult neurogenesis happens in humans seems innocent and understandable – but if the new study is true, that suggests that a lot of the followup claims must have been imaginary. Anything that focuses on a process that happens in humans and says “neurogenesis causes this” must not only be wrong to extend the results to humans, but must be under strong suspicion of being wrong even about rats, unless rat brains and human brains accomplish the same basic tasks through totally different mechanisms (eg antidepressants work on rats but for different reasons than in humans).

We know many scientific studies are false. But we usually find this out one-at-a-time. This – again, assuming the new study is true, which it might not be – is a massacre. It offers an unusually good chance for reflection.

And looking over the brutal aftermath, I’m struck by how prosocial a lot of the felled studies are. Neurogenesis shows you should exercise more! Neurogenesis shows antidepressants work! Neurogenesis shows we need more enriched environments! Neurogenesis proves growth mindset! I’m in favor of exercise and antidepressants and enriched environments, but this emphasizes how if we want to believe something, it will accrete a protective layer of positive studies whether it’s true or not.

I’m also struck by how many of the offending studies begin by repeating how dogmatic past neuroscientists were for not recognizing the existence of adult neurogenesis sooner. Remember Gage’s review above:

A milestone is marked in our understanding of the brain with the recent acceptance, contrary to early dogma, that the adult nervous system can generate new neurons. One could wonder how this dogma originally came about…

Or from Neurogenesis In Adult CNS: From Denial To Opportunities And Challenges For Therapy:

The discovery of neurogenesis and neural stem cells (NSC) in the adult CNS has overturned a long‐standing and deep‐routed “dogma” in neuroscience, established at the beginning of the 20th century. This dogma lasted for almost 90 years and died hard when NSC were finally isolated from the adult mouse brain. The scepticism in accepting adult neurogenesis has now turned into a rush to find applications to alleviate or cure the devastating diseases that affect the CNS.

From Adult Human Neurogenesis: From Microscopy To Magnetic Resonance Imaging:

The discovery of adult neurogenesis crushed the century-old dogma that no new neurons are formed in the mammalian brain after birth. However, this finding and its acceptance by the scientific community did not happen without hurdles. At the beginning of the last century, based on detailed observations of the brain anatomy reported by Santiago Ramon y Cajal and others, it was established that the human nervous system develops in utero (Colucci-D’Amato et al., 2006). In adult brains, it was thought, no more neurons could be generated, as the brain is grossly incapable of regenerating after damage (for a more detailed historical report see Watts et al., 2005; Whitman and Greer, 2009). This dogma was deeply entrenched in the Neuroscience community, and Altman’s (1962) discovery of newborn cells in well-defined areas of the adult rodent brain was largely ignored.

I’m bolding the word “dogma” because for some reason every article in this field uses it like a verbal tic. University of Washington’s “Neuroscience For Kids” page feels compelled to use the word even though they don’t expect their readers to know what it means:

The dogma (a set of beliefs or ideas that is commonly accepted to be true) that nerve cells in the adult brain, once damaged or dead, do not replace themselves is being challenged. Research indicates that at least one part of the brain in adults maintains its ability to make nerve cells.

I think Patient Zero in this use-of-the-word-dogma epidemic might be Neurogenesis In The Adult Brain: Death Of A Dogma, (880 citations) whose abstract says:

For over 100 years a central assumption in the field of neuroscience has been that new neurons are not added to the adult mammalian brain. This perspective examines the origins of this dogma, its perseverance in the face of contradictory evidence, and its final collapse. The acceptance of adult neurogenesis may be part of a contemporary paradigm shift in our view of the plasticity and stability of the adult brain.

The dogma-concern isn’t totally wrong. Previous neuroscientists thought there wasn’t neurogenesis in rats, and there is. That was a legitimate mistake and one worth examining. But is it possible that the reaction to that mistake – a field-wide obsession with talking about how dogmatic you had to be to miss the obvious evidence of mammalian neurogenesis, and a desire never to repeat that mistake – contributed to the less-than-stellar effort to make sure neurogenesis was happening in humans? Heuristics work until they don’t. Those who fail to learn from history are doomed to repeat it, but those who learn too much from history are doomed to make the exact opposite mistake and accuse anyone who urges restraint of “failing to learn from history” and “dogmatism”. From the Virtues of Rationality:

The Way is a precise Art. Do not walk to the truth, but dance. On each and every step of that dance your foot comes down in exactly the right spot. Each piece of evidence shifts your beliefs by exactly the right amount, neither more nor less.

Or maybe I’m just grasping for straws. But I feel like I have to grasp for something. I have nowhere near as much expertise as the actual neuroscientists writing about this result (and there are many). I’m sure I’ve made some inexcusable mistakes somewhere in this process. Perhaps I am missing some colossal flaw in the new study, and wrongly slandering dozens of neuroscientists doing great work.

But the reason I feel compelled to dabble in this subject anyway is that I don’t feel like anyone else is conveying the level of absolute terror we should be feeling right now. As far as I can tell, this is the most troubling outbreak of the replication crisis so far. And it didn’t happen in a field like social psychology which everyone already knows is kind of iffy. It happened in neuroscience, with dramatic knock-on effects on medicine, psychology, and psychiatry.

I feel like every couple of months we get a result that could best be summed up as “no matter how bad you thought things were, they’re actually worse”. And then things turn out to be even worse than that. We can’t just become 100% certain things are arbitrarily bad – that would be making the same mistake as the neuroscientists who were overly eager to reject the no-neurogenesis dogma. But that means we always have to be ready for disappointment.

From the Neuroskeptic article:

So what does this all mean? Sorrells et al. conclude by speculating, provocatively, that our lack of adult hippocampal neurogenesis may actually be part of what makes us human:

“Interestingly, a lack of neurogenesis in the hippocampus has been suggested for aquatic mammals (dolphins, porpoises and whales), species known for their large brains, longevity and complex behaviour.”

This hypothesis seems pretty wild to me. But it’s no wilder than some of the other theories that have long surrounded adult neurogenesis

Our total inability to ever change or get better in any way is what separates us from the animals. Inspiring!

Disturbing indeed.

I’m weirdly attracted on a philosophical level by the idea that memory formation, antidepressant action, and plasticity happen in humans by totally different mechanisms than they happen in rats. Philosophically, there does seem to be a whole lot of something different happening in our brains than in rats’, and this is a potential candidate for that something.

It needs a whole lot of actual evidence to be a scientific theory, though – lacking that was the problem with the theory of human adult neurogenesis in the first place.

Memory formation and plasticity I could almost believe, but antidepressant action? It’s just a coincidence that the same chemical that treats depression in humans does it in rats too?

Hmm, I’m suddenly realizing just how little I know about animal models of depression. How do we know how what antidepressants do in animals compares to what they do in humans? As Wikipedia points out, “It is difficult to develop an animal model that perfectly reproduces the symptoms of depression in patients.” Off the top of my head, maybe they help neurogenesis in animals but do something else in humans?

Well, antidepressant drugs do a bunch of other things, not only increase neurogenesis.

It seems plausible that their method of action is the same in rats and humans, but it’s not neurogenesis; and increased neurogenesis is just a (possibly positive) side effect that happens in rats.

How do you know a rat is depressed? By observing its behavior? Can you also tell when a rat is happy?

If yes, why do you put any trust in happiness surveys instead of demanding that they should be based on observing human behavior that correlates with happiness?

yes – and it’s absolutely worth remembering here that rodent models of depression are not the same as depressed rats. Things like the sucrose preference test etc. are supposed to model aspects of depression, but there are already well-established differences.

Example: The forced swim test is considered a model of antidepressant efficacy. Drugs that treat depression in humans tend to cause rodents to swim more and float less. But it’s clearly not the same thing as depression, since you see the effect very shortly after administering the antidepressant (like, 30 minutes), whereas in humans it takes longer for antidepressants to work.

Usually a scale of 1-to-10 survey, but Amish rats score unusually high.

this stuff is part of things like the Hamilton Depression Rating Scale. It has subjective emotions but also asks about insomnia, early waking, appetite, physical slowness of movement, and so on.

Find me depressed rats, and we’ll talk! We have to assume that the forced-swim test or any other rodent model actually produces the same pathology as MDD in humans, which I’ve never believed. Plenty of drugs do great in rodent models but fail phase III trials as antidepressants. It’s honestly easier for me to believe that the small number of drug targets shared by standard antidepressants happen to have something to do with certain motor behaviors in rodents. Hell, serotonin does something with movement even in C. elegans.

Well, this was recently posted on the subreddit, saying neurogenesis in the adult amygdala happens in neurotypicals but not in autistics: http://www.pnas.org/content/115/14/3710

So maybe one set of studies happened to use neurotypical subjects and the one at the end happened to use autistic subjects? (If the same is true of the other brain regions they studied as of the amygdala)

It’s plausible to me that there’s something different about the brain of people who volunteer for a brain study than from the general population.

It would be too much “just so” for there to be a different difference between NT and ASD people who volunteer than NT and ASD people who don’t, but it wouldn’t surprise me if severely autistic people were more likely to be actively encouraged to participate in brain studies, and for the “autistic” group to be closer to “people who don’t volunteer for brain studies” than the Neurotypical group.

If early studies were on people who volunteered for brain studies, and the failure to replicate was done on a different selection, things point to a thing which causes neurogenesis and also causes volunteering for brain studies.

Or maybe I’m writing stories about too few data points that I don’t even really understand.

@deciusbrutus: The one I linked used brains from dead people, including children as young as 2; so for many of the subjects any volunteer bias would be on the part of the subjects’ family members rather than the subjects themselves.

The new iconoclastic study was done on a mixture of preserved tissue sections from cadavers across a range of ages as well as on biopsy sections from those undergoing operations with seizures and also a longitudinal study on macaques, so it does not strike me as likely to suffer from volunteer bias in either direction (although sample sizes for each age weren’t exactly huge either at the end of the day….). The seizure population is quite likely psychiatrically/neurologically biased and it’s possible that signal loss from degradation or preservative action in the cadaver samples may have simply rendered their technique too insensitive, but they did at least do a positive control with samples from newborns and found signs of neurogenesis there. The macaque results honestly struck me as the most compelling (but I also haven’t read the paper carefully and don’t have a good grasp of the limitations of their techniques or the extent/generality of the validation of the reporters they are using for neurogenesis in humans).

While the paper did do a good job of presenting complementary approaches that agreed with their conclusions, I would still wait for independent replication….there’s something a little ironic about sounding the alarm about a fresh replication crisis on the basis of a single new study, however striking (which is not to say that the dissonance should not be cause for concern).

Hm, if I’m remembering my brain regions correctly, isn’t the amygdala a portion of the brain that developed earlier in evolutionary history? Mice for instance do have their own amygdalas. Maybe one explanation is the areas of the human brain that do have neurogenesis are the older structures? Could be a potential explanation for why it seems so much clearer that important amounts of adult neurogenesis happen in animals but seem to be much harder to find in humans.

Or maybe all of this will get falsified in a year.

Ultimately we don’t have a strong reason yet to suspect that differing levels of neurogenesis actually are a significant indicator of anything. If I’m reading the chart on your link right comparing neuron totals between neurotypicals and autism spectrum individuals, that data looks rather noisy and the gap between the groups looks like it often overlaps until the late 30s (which seems like a long time after behavioral differences are noticeable). If you run significance tests on 100 different variables, you should expect about 5 with p < .05 and about 1 with p < .01 from chance alone. So I’m not ready to jump on the bandwagon for any claims to have found an interesting correlation yet.
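To put rough numbers on the multiple-comparisons worry in the comment above, here is a minimal sketch. It assumes every null hypothesis is true and that the 100 tests are independent, which real measurements across brain regions probably are not; the function names are made up for illustration.

```python
# Sketch: how many "significant" results pure noise produces.
# Assumption: all nulls are true and the tests are independent.

def expected_false_positives(n_tests: int, alpha: float) -> float:
    """Average number of tests that cross the threshold by chance."""
    return n_tests * alpha


def prob_at_least_one(n_tests: int, alpha: float) -> float:
    """Chance that at least one test crosses the threshold by chance."""
    return 1.0 - (1.0 - alpha) ** n_tests


if __name__ == "__main__":
    n_tests = 100
    for alpha in (0.05, 0.01):
        expected = expected_false_positives(n_tests, alpha)
        at_least_one = prob_at_least_one(n_tests, alpha)
        print(f"alpha={alpha}: expect {expected:.1f} hits, "
              f"P(at least one)={at_least_one:.3f}")
```

Under these assumptions the averages match the comment (5 and 1), but "at least 1 with p < .01" is only about a 63% bet, not a sure thing, while at least one p < .05 result is close to guaranteed.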

Is neurogenesis necessary to ever change or learn or get better?

Is there maybe a situation where some environments promote neurogenesis and others don’t, and it just so happens that “being a lab rat” promotes it more than “being the kind of person who donates a well-preserved brain to a study” does?

I think it’s very very likely that any given human has a comparable or greater amount of learning than a lab rat. Unless they are literally taking coma patients, humans are in much more novel environments than lab rats, interact with more individuals, require more information processing and a wider array of skills.

I’d wager maybe brain damage would be a plausible factor for encouraging neurogenesis if we find that it doesn’t occur in the average human but can in mammals. We’re familiar with the idea of certain brain areas compensating for lesions in other areas, for instance.

The average human is encountering the same amount of novelty as they did yesterday, using the same number of skills, and interacting with the same number of people.

Is that also the case for a lab rat in a study? What enrichment behaviors do lab rats have available at the breeder’s location?

I don’t know about the absolute terror I should be feeling.

I mean what did neuroscience do for me lately?

There are two possibilities:

1) Neuroscience works. Then the troubling conclusion is that it doesn’t seem to produce actionable knowledge.

2) Neuroscience creates papers out of noise. Then we can expect things to get better as technology makes data less noisy.

The second possibility is to me the more optimistic of the two. And it seems to be closer to the truth.

Even the first one is a little bit sweeping. “Neuroscience” is not a monolith. Lots of other bits of neuroscience are producing lots of actionable knowledge, and even if adult neurogenesis isn’t true, that doesn’t discount the work of scientists developing improved MEG-based mapping techniques to help evaluate the patient-specific risk of cutting out something important for language use while performing a surgical intervention for chronic epilepsy. Which is admittedly not very helpful unless you or someone you love suffers from chronic epilepsy, but I think we can both agree that that kind of work is a definite net good. Most neuroscience doesn’t do much for you on a day-to-day basis, until something goes wrong with your thinkmeats.

Definitely the number of citations. Partly because in my field (mathematics), there is a famous person (Sophus Lie of Lie group fame) who pronounces that name like ‘lee’ (not ‘lie’), so I pronounced this name the same way when I read it. And partly because I'm not a kabbalist.

Yeah, but how many citations does it have?

Oh come on, everyone knows that articles that talk about how other articles that talk about how other articles are wrong are wrong are always right!

Why were scientists so sure for so long that adult neurogenesis doesn’t happen? Did they actually have the technical tools to be sure of a universal negative?

What do you make of The Brain that Changes Itself, which gives accounts of people making extraordinary increases in their capacity by slowly and stubbornly learning more accurate perception and new behavior? Are the stories false? Maybe it’s something, but not neurogenesis? How about dendrites?

From the article: “In adult brains, it was thought, no more neurons could be generated, as the brain is grossly incapable of regenerating after damage”

Synaptogenesis and plasticity in the connection strength of existing synapses, basically. But I think there’s good reason to be skeptical of all the results thus far, including the no neurogenesis one.

Also the stories in Doidge’s book are definitely not false. We know a great deal happens after injury, for example in adult onset blindness you have visual cortex being taken over (to an extent that is sometimes overemphasized) by auditory functions. There’s clearly something going on that causes this, and it could be any combination of neurogenesis, synaptogenesis, and changing strengths of existing connections.

*Caveat: I work in a related field but haven’t done anything on this in particular, and for all I know there have already been studies showing what the specific nature of the rewiring of visual cortex is in adult-onset blindness. It’s got to be one of the three causes I suggest, or something even weirder, though.

Minor quibble: The link attributed to “Washington University” should be “University of Washington”.

This reminds me of your old article How common are science failures? By my count, this meets your first 3 criteria for a science failure, and only time will tell if the 4th criterion is met. I never would have guessed we’d have a science failure in neuroscience of all places.

Neuroscience has a lot of fuzzy methods (fMRI, for instance) and bad practice (interpreting the aforementioned badly, for instance) going on. Small sample sizes are also extremely common; Ioannidis has written about this, too.

https://www.nature.com/articles/nrn3475

One scientist mentioned in a lecture (tried to google it, but I forgot her name) that the most rigorous neuroscience comes from the study of vision, since before studying vision in humans we already had a lot of rigorous base from studying monkey vision. Other parts, however, such as cognition, are more of a ‘wild west’ when it comes to methods. I don’t really know myself.

EDIT: The scientist was Nancy Kanwisher, didn’t find the lecture

if the rodent hippocampus is designed to regularly integrate new neurons into its network, it’s not shocking that disrupting that process disrupts hippocampus-dependent tasks like spatial learning. I don’t know a lot about how neurons die and new ones are integrated into the HC, but if you have neuronal death and the replacement neurons aren’t showing up, it’s not shocking that you’d see some kind of deficit. The conclusion you draw from that is not necessarily “rats and humans do spatial learning totally differently”, it could be “the ability to stop adult neurogenesis in rats gives us a subtle way to destabilize the networks underlying spatial learning.” It could even be that an increase above baseline of adult neurogenesis improves learning AND this is still true – I can think of two possible explanations: Some neurotrophic factor that is upregulated in adult neurogenesis has other beneficial non-neurogenesis effects, or it is indeed true that adult neurogenesis CONTRIBUTES to learning in rats, even if it is not the primary mechanism.

basically: it sucks that people didn’t look more rigorously for evidence in humans earlier on, but I don’t think the lack of adult neurogenesis in humans is that confusing.

Scott Alexander, I don’t know the best way to send you a message, so please forgive this off-topic but relevant-to-your-interests link in the middle of this comment thread: https://twitter.com/MondenRei/status/981811525164916738

How did Ramón y Cajal originally measure no adult neurogenesis in humans?

Are the very small modern claims within his error bars? What would have happened if he had applied his method to rats?

If he wouldn’t have detected neurogenesis there, then we can say that he was wrong to claim anything about humans. If he would have detected it but didn’t, why not? Did he not do the much easier rat experiments? Did he use some other model organism that happened to also have very low adult neurogenesis? (probably not, since he usually worked with rats)

No, this is typical. A study is only worth debunking if it is popular and has many replications and elaborations.

The consequences for other fields could have been debunked by just reading the effect sizes. It’s more like consequences for journalists covering those fields. The real problem is that those fields are full of journalists.

We know many scientific studies are false. But we usually find this out one-at-a-time. This – again, assuming the new study is true, which it might not be – is a massacre. It offers an unusually good chance for reflection.

If it’s true, it’s very bad, because this isn’t merely a replication crisis in a ‘soft’ science like sociology, or one where a psychological experiment on a bunch of college students in the 70s didn’t turn out the same way when done on a bunch of different students in the 90s.

This is proper ‘hard’ science that got its results from doing Real Science by getting physical specimens and cutting them up and dunking them in chemicals and looking at the resulting product with the aid of machinery.

So if they were seeing cells there that were not there, this is a matter of concern. Even worse if everyone then built their house of cards on shaky experimental results, taking the data for granted and spinning new gold out of the old straw. This is the vaunt of science: it is based on what is there. Not on what you hope or want or wish is there. If the [thing] is/is not there, then that is the evidence you have to go on, not “but it should be there/it should work this way”.

So if this is (at best) “well if something like this is in a lot of other animals, it should be in humans too” wishful thinking, we’re in a bad place. Assuming that the new study is right and all the preceding work is wrong, which again is something that has to be demonstrated as more than “one fluke result”.

Re: the use of dogma, I wonder if it’s not because it has religious connotations, and using it as a term of criticism about previous science is a kind of subtle put-down: “they behaved like a church, where once the official belief was established, everyone had to go along with it!” The temptation to regard oneself in the light of the new Galileo in one’s field, boldly overturning the established but false orthodoxies, must be present at least sometimes.

I don’t think it’s bad in the same way you do (see below), but one important clarification is that the vast majority of these results are not counting new cells directly, they are counting various things that are associated with new cells. The issue is more one of interpretation than of faulty measurement.

Also: I would say more than ‘at least sometimes’.

Deep learning has been around and popular in my field for at least half a decade now, technically longer. Yet it’s not uncommon to see new papers include some boilerplate in their introduction about how deep learning has revolutionized everything.

Anytime a new approach for some detail is proposed, it’s not uncommon to use language like ‘the traditional approach’ to describe something that’s been more-or-less default practice for…again, what, a few years?

I view the Galilean self-image as a low-level constant presence.

I’d be happy calling anything that has been commonly known in a field for less than 10 years “novel”. Seems about the right standard. I refuse to take seriously the idea that I should be super up to date on everything, even in my field – what’s true will still be true in 5 years, and if it isn’t true it at least stands a chance of being rejected. Time is a useful filter that way.

The ‘original’ big replication crisis of the past decade or so was in biomedicine (see Ioannidis, Why Most Published Research Findings are False, http://journals.plos.org/plosmedicine/article?id=10.1371/journal.pmed.0020124); psychology was just the second coming. Healthy skepticism is important across the board and particularly when dealing with fields that study such complex systems.

This shouldn’t come as a huge surprise, frankly. From my perspective there’s several nontrivial sources of systematic error that tend to scale as you go ‘up’ from physics:

1. System complexity/scale – Escalating rigorous quantitative frameworks that apply to simple underlying systems is often intractable as you go up in complexity, and controlled experiments become far more difficult to do due to issues with signal, baseline ability to quantitate, and the fact that some systems (as in psychology, economics, human biology) only operate on the scale of human life itself- i.e. you cannot isolate the system, iterate a controlled experiment 100 times and independently tune many variables in an easily verifiable way, ethical review creates major barriers for many sorts of experimental approach (preventing a control group from seeking treatment, difficulty of getting approval for experiments on higher primates) etc. Basically, it’s just harder on average to do clear experiments/studies and isolate effects.

2. Lag of rigorous model building and replication practices- the incentive structures for medical and applied sciences in particular tend to reward pursuit of the next big discovery and tool – rigorously dissecting the limitations of a technique, the mechanics of a system according to tractable but rigorous approximations of underlying principles (thermodynamics, physics, chemistry, etc.), repeating studies on a larger scale with more careful controls, etc. have been historically considered unappealing or irrelevant, severely undermining the tendency of many fields to develop real assurance of self-consistent foundations on which to systematically build.

3. Incentive for Exaggeration- Grant funding is extremely tight and competitive, tenure is extremely tight and competitive- p-hacking and other forms of cherrypicking and overstatement can become routine practice in some environments.

4. Distribution of expertise- The types more disposed to rigorous quantitation and associated logic tend to partition more heavily (as one would expect) into fundamental fields like physics- which also means that some of the statistical tools and perspectives that are very valuable for rigorous approaches across the board will tend to decrease in density as you move towards successively ‘softer’ fields.

In addition to this, ‘good’ scientific methodology with respect to maintenance of multiple hypotheses, careful and consistent usage of appropriate positive and negative controls, and rigorous flow of experimental logic is often not taught at all systematically and tends to be learned idiosyncratically from research mentors or advanced courses.

Heuristically psychology’s roots are more distal from the natural scientific mainstream and pure MDs* are less likely than the average bioscientist to have received a desirable robustness of training in rigorous scientific methodology, so larger issues on this end might be expected (you’ll find ill-supported conclusions or vague rationalizations in place of clear predictions in any field, of course ).

*For the natural sciences at least, PhD programs in the United States tend to expect appreciable undergraduate research and to select against applicants from the same school as the program; likewise, post-docs are typically done at a different university, and it is not as typical for universities to hire professors trained in their halls. Medical programs by contrast have less of an expectation of research, tend to prefer undergrads from their own schools, tend to prefer residents from their own MD programs, and likewise with hirings for medical faculty from their own vs. other residency programs.

The probability of receiving a diversity of environments and methodologies for research and avoidance of relevant bad habits would be expected to be greater for mature PhDs than MDs, as the system for the former generally has a specific commitment to avoiding ‘intellectual incest’; biomedicine has a greater proportion of the latter operating in a research environment and so may also be convolving flaws in institutional recruitment preferences into the field.

New heuristic: any knowledge that has not yet been converted into useful technology can and should be ignored by anyone who is not a direct specialist in that knowledge’s field.

That seems like a good heuristic, actually.

So by your heuristic, Scott (Not a neurologist) should not have written this post, and we (I’m not a neurologist, don’t know about others) should not have read it? I’m confused by the actual implications of what you are proposing. I think it’s normal and decent to have interest in things outside of your expertise, even at a theoretical level.

Maybe “treated as entertainment” rather than “ignored”? It’s normal (well, around here) and decent to argue about the science and technology of fictional worlds, but not to make important life or policy decisions based on it.

I think it’s interesting to read about dark matter and galactic rotation curves… but I guess I’ll know they’ve gotten it right when I can go down to the store and buy a dark matter wifi adapter.

To generalise – science can only be trusted if it can make reliable predictions. A science that makes predictions can sometimes be used to make things (like wi-fi routers), but not always, for instance in astronomy.

Hmm… I don’t think anyone claims that dark matter is 100% real. It’s one hypothesis that explains why Newtonian/Einsteinian/quantum mechanics (which most definitely has resulted in tangible products — everything from buildings to nuclear weapons) gives wonky results at the galactic scale.

There are a few options:

1) Newtonian/Einsteinian/quantum mechanics is flat-out wrong (this is very unlikely — bridges stand up, spacecraft arrive at their destinations, lasers work, etc.)

2) Newtonian/Einsteinian/quantum mechanics is right, but there’s a lot of matter out there (the so-called “dark matter”) that we can’t see for whatever reason.

3) Newtonian/Einsteinian/quantum mechanics is partially right — it’s a good approximation at anything less than galactic scale, but needs tweaking when you get to that level. Note that this is basically what happened with the Einsteinian and quantum modifications to Newtonian mechanics — Newtonian mechanics still works fine if you’re just building a bridge (no engineer bothers with Einsteinian or quantum effects when designing a bridge), but at very large or very small scale (say, you’re making a nuclear reactor or sending a spacecraft out of the solar system) it starts to give you numbers that are measurably off.

My gut leans toward 3), but a lot of people lean toward 2). As to why it matters, well, we now have lasers, computer chips, and many other things that would be considered impossible under straight Newtonian mechanics. Answering the “dark matter” question might seem far removed from practical applications, but so did the photoelectric effect and the discrepancy in the perihelion precession of Mercury.

You act amazed that a bunch of people jumped on a bandwagon–a particular bandwagon that easily dovetails with popular ideals about human potential. And that, from that bandwagon, they proceeded to speak with gentle scorn about the poor, unenlightened souls not aboard.

And it’s not just any bandwagon, it’s a Gotcha! bandwagon. It’s a bandwagon that is as respectable as the Socratic Method (“We just kept searching and asking questions…”) but as satisfying as Myth Busters.

Scientists are humans, first.

It’s been mentioned before how often Science is approached as a religion, with honor and reverence due to scientist-priests, who have been inducted into the hierarchies of the Code, and their words taken as a revelation of truth. In light of that, “dogma” is perfectly appropriate & accurate.

It can be disturbing to realize that we are all humans, even within the cloister walls.

eta: I don’t think you are actually all that amazed. That’s what makes you good at your profession–you have a fair grasp on human nature, even though it annoys you.

I’m really confused as to why everyone gets so upset about this. Beliefs, even apparently well-supported ones, are sometimes wrong. Human neurogenesis has only been dogma for less than a couple of decades. It might be wrong, although I think it’s very dangerous to assume it is based on one paper, but if so… it’s wrong. That’s how science works. Feature, not bug.

The correct epistemological takeaway is that you should be really, really willing to be uncertain about things, even ones that appear really well-supported. Sounds ok to me?

I think there’s a difference between neurologists disagreeing in academic papers or even macroeconomists being wrong when discussing monetary policy vs. something that (I) was taught in elementary school. By the time we get to the “teaching it to uncritical children” it shouldn’t be something about which there is significant doubt.

You were taught that adult humans can make new neurons in elementary school? Seriously? How old are you?

EDIT: That isn’t meant to sound quite so condescending – I just mean this is a finding that only really came out ~15 years ago in journals, and usually it takes > a decade to filter down to schools I would have thought. If it did move that quickly, then I absolutely agree that’s irresponsible.

Look at that infographic in the article. Talking to your students about growth mindset, includes the word “neurogenesis” in the first blurb.

I don’t think the cartoon brain is aimed at collegiate level.

Yeah ok, but this is a problem with marketing, like that’s a commercial product, isn’t it? We’re now talking about the fact that companies that peddle pseudoscience misuse published results, rather than a problem with the scientific method or whatever.

I realize “growth mindset” is a thing that Scott talks about a lot but I honestly have never heard of it outside this blog.

However I think I should concede the point that neurogenesis is/was overhyped. But… I don’t think it’s the end of the world.

That being said I just read the infographic in detail and now I want to stab myself in the brain to stop any accidental neurogenesis that might have resulted from reading it.

The funny thing is, it’s not even necessary to be inspiring. What matters from learning is the practical effects of learning; to the student, it doesn’t matter if this comes from neurogenesis, synaptogenesis, or balancing humors.

YES. SO MUCH THIS.

Here’s one (myth) you’ve probably heard: “Did you know every time you learn something you get a wrinkle in your brain?”

Nevermind a potentially useful fact or skill, study for the wrinkly brain!

Worse still, given the (slow) turnover in teaching corpora at that level, children will likely be seeing that infographic (or variants of it) for generations to come.

If we limited ourselves to only teaching things we really are certain about to school children, we would certainly shorten up the school day a lot. Lots and lots of reliable information that has a high probability of truth in physics and chemistry and biology. They could spend a lot of time on that, but, frankly, almost everything we know about those subjects (so far) is only moderately useful for the average person to know. Lots of good tools in maths. But then…. ??

And once you get past basic science, and into the really difficult subjects? the ones the average person would actually benefit from knowing something about ? We are right in the middle of a black hole of ignorance. We know some things, that are true some of the time, but we don’t really understand when they are true and why they aren’t always true. For instance, what could you say even about economics? ‘It’s real, real complicated, and we think we’ve figured out a few of the principles’? But economists are constantly being surprised by the real world, so better not even start. And economics is a tower of knowledge compared to the shifting sands of anthropology or sociology or psychology or political science. And anything you’ve got to say about medical care starts to look a little squiggly after you go past ‘buckle your seat belt!! don’t drink and drive!! don’t smoke!! go see your dentist twice a year!!’ So, that’s going to be a pretty brief lecture.

All the really interesting stuff– the really interesting and relevant stuff to the average school child– is yet to be firmed up enough to believe any of it is anything more than best guesses.

Teachers are as bad as journalists in massively overstating the confidence we should have in the information they are providing. And for the same reason of course. No one gains status points by depreciating the accuracy of the special information they can pass on to you.

I think some things in economics are true as a result of basic logic, like supply has to equal demand, why interest is usually positive, comparative advantage and the slope of demand curves. All kids should learn these things and then maybe we would have more sensible policy from the politicians. It would even be helpful for kids to be exposed to the debates between different economic systems so they can actually understand that there are usually arguments for and against so that when they encounter people selling one side of the argument, they actually know there is a counter argument.

By pure synchronicity this shows up in the Guardian today

Some new research out today

https://www.theguardian.com/science/2018/apr/05/humans-produce-new-brain-cells-throughout-their-lives-say-researchers

The fact that you have a lot of people actually looking at cells in human brains now at least means we will likely have a definitive answer soon, I hope.

So if this study is correct (which would be fun simply for laughing at all the previous “dogma of no neurogenesis” comments), how bad is that? As you point out, we already know anti-depressants work, exercise works, heck maybe even growth mindset works. But we discovered these through outcome measures, and theories for why we get outcomes can actually be harmful as they take our eye off the ball. Nutrition advice is full of this kind of problem (cholesterol must be causing heart attacks so therefore cut cholesterol! Fat must cause obesity so load up on carbs instead! etc). Neurogenesis if it does happen is only a process measure, not the actual outcomes we’re looking for. This is of course an application of avoiding the mental error that “x caused y” is perceived as being less likely than “x caused y because of process z.”

Ultimately this shouldn’t be seen as too shocking or terrible. Getting the “why an outcome happens” wrong is not really that serious as long as in treatments you keep your focus on the actual outcome. Who cares if antidepressants work via serotonin, via neurogenesis, via disrupting the alien implants that make you feel depressed, they work. We didn’t need a correct theory to discover them, we just needed trial and error and a results orientation. The medical science that matters is the science done to find what treatment produces what outcome, not the science that tries to explain why treatment A produces outcome X.

Just because neurogenesis doesn’t happen, doesn’t mean the human brain can’t do any of the things we thought it could do before–it just does them in different ways than was recently proposed.

Except maybe neuroscience*.

*joke

Can we all step back from the ledge here and, before we decide that literally everything is wrong, consider that we are talking about the results of one study? Shouldn’t we at least wait for some replication or confirmation before we get too attached to this new-old idea? Scott in particular seems ready to bet the farm on it, despite his own admission that it is not in his field. Are we really taking the first indication of groundbreaking results as an opportunity to castigate others for putting too much stock in the first indication of groundbreaking results? Does nobody else see the irony here?

My main concern is that I see a bunch of statements saying “This is the problem with science and/or journalism”, and it makes me feel that people are simply using this as an excuse to trot out their personal hobby horses. If, as sometimes happens, next month this study is found incorrect and someone finds bushels of newly generated neurons in adult brains, those people aren’t going to walk back any of their statements. In fact, they’ll still probably feel that their views had been confirmed, though they will have trouble stating exactly how.

In short, I think we shouldn’t have a conversation on “How could this have happened, and what we could do to prevent it” until we are sure that the “this” is actually a thing that happened. Otherwise, we are doing the exact same thing as someone who uses neurogenesis to explain a phenomenon when they aren’t sure whether it is actually occurring.

I’m confused. Observably people do change and get better… or is this disputed?

It’s… a joke.

Maybe neurogenesis in adults is associated with memorization (i.e. you literally form completely new neurons to learn something new) and less neurogenesis is associated with generalization and distributed representations (i.e. you can slightly refine existing representations to learn new concepts).

It’s worth noting that most deep learning NN used today don’t literally add new neurons during learning.

Apparently an article came out today saying adult neurogenesis has been discovered again in humans: https://twitter.com/bradcog/status/981971725838467072?s=21

I was about to post the same thing. Thank you for doing that.

http://www.cell.com/cell-stem-cell/fulltext/S1934-5909(18)30121-8

That’s the link to the full text of the journal. I’m not a Well Educated Enough Scientist to make sense of a lot of this, but it’s interesting to say the least.

Technically, there is no neurogenesis in ten year old rats.

My understanding is that “dogma” when used in biology is just a term of art meaning something like “commonly-accepted principle”, e.g. the central dogma of molecular biology. That’s how Crick meant it originally, if memory serves.

The line which jumps out at me is:

That was from one of the “adult neurogenesis is a thing” papers. But… google tells me there’s ~100B neurons in the adult brain. 250k/month sounds extremely rare, basically negligible and unlikely to have any observable macroscopic impact.

I’m no expert in neurogenesis, but this is a problem I see all the frickin’ time in bio papers and it drives me nuts: people completely ignore effect size. The paper will say “yup, this is definitely a real thing, p<0.001 and everything" and then you go look at it and the effect size is like 1%. So it would not surprise me at all if that's what's happening here.
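To make the p-value versus effect size point concrete, here is a minimal sketch, not taken from any paper discussed here. It assumes a plain two-sample z-test with equal group sizes and known unit variance; the function name `two_sample_p_value` and the sample numbers are made up for illustration. It shows how a tiny standardized effect can clear p<0.001 once the sample is large enough, while a much larger effect in a small sample does not.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_p_value(effect_size_sd: float, n_per_group: int) -> float:
    """Two-sided p-value for a two-sample z-test.

    effect_size_sd: difference between group means, in standard deviations.
    n_per_group: observations in each group (equal sizes, known variance assumed).
    """
    # Standard error of a difference of two means with unit variance is sqrt(2/n),
    # so the z statistic is effect / sqrt(2/n) = effect * sqrt(n/2).
    z = effect_size_sd * sqrt(n_per_group / 2)
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical scenarios: a tiny effect with a huge sample,
# and a moderate effect with a small sample.
p_tiny_effect_big_sample = two_sample_p_value(effect_size_sd=0.05, n_per_group=20_000)
p_big_effect_small_sample = two_sample_p_value(effect_size_sd=0.5, n_per_group=10)

print(f"effect 0.05 SD, n=20000 per group: p = {p_tiny_effect_big_sample:.2e}")
print(f"effect 0.50 SD, n=10 per group:    p = {p_big_effect_small_sample:.2f}")
```

Under these assumptions the first case is "highly significant" even though the difference is a twentieth of a standard deviation, so the p-value on its own says nothing about whether the effect is big enough to matter.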

That quote about 250000 new cells per month is actually about rat brains. Rat brains have fewer neurons than human brains, right?

Oh wow, I seriously underestimated that difference. According to wikipedia’s list of animals by number of neurons, brown rats have something like 200M neurons – on the order of 1/500 compared to a human! On that scale, we’d be talking about ~0.1% new neurons per month, so that’s about 2.4% over the entire lifespan of the rat (about 2 years). Macroscopic effects at that scale sound maybe-plausible.
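The back-of-the-envelope numbers above can be checked with a short sketch. It uses only the rough figures quoted in this thread (250,000 new cells per month, ~200M rat neurons, ~100B human neurons, a 2-year rat lifespan); the variable names are just for illustration, and the lifetime figure naively assumes a constant rate with no cell death.

```python
# Rough, order-of-magnitude figures quoted in the comments above.
NEW_CELLS_PER_MONTH = 250_000        # Cameron et al. estimate, measured in rats
RAT_NEURONS = 200_000_000            # ~200M, from the Wikipedia list mentioned above
HUMAN_NEURONS = 100_000_000_000      # ~100B, the figure quoted for the adult human brain
RAT_LIFESPAN_MONTHS = 24             # about 2 years


def monthly_fraction(new_cells: int, total_neurons: int) -> float:
    """Fraction of the total neuron count added per month."""
    return new_cells / total_neurons


ratio = HUMAN_NEURONS / RAT_NEURONS
rat_monthly = monthly_fraction(NEW_CELLS_PER_MONTH, RAT_NEURONS)
# Naive linear accumulation: constant rate, no cell death.
rat_lifetime = rat_monthly * RAT_LIFESPAN_MONTHS
human_monthly = monthly_fraction(NEW_CELLS_PER_MONTH, HUMAN_NEURONS)

print(f"human/rat neuron ratio: {ratio:.0f}")
print(f"rat: {rat_monthly:.3%} per month, {rat_lifetime:.1%} over {RAT_LIFESPAN_MONTHS} months")
print(f"same cell count in a human-sized brain: {human_monthly:.5%} per month")
```

Without rounding, the rat figures come out to 0.125% per month and 3% over two years, the same ballpark as the ~0.1% and 2.4% quoted above.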

But this is really happy news! You’re saying that neurology researchers have found that the brain of adult rats can grow new neurons, but the brain of adult humans can’t. This implies that the researchers will examine what causes this difference, and eventually develop a cure for aging in humans by causing their brain to grow new neurons.

Some day, we might live as long as rats!

Heh… I’m surprised that nobody mentioned correlation with the aquatic ape theory (I thought I learned about it from this blog) based on the quote: “Interestingly, a lack of neurogenesis in the hippocampus has been suggested for aquatic mammals (dolphins, porpoises and whales), species known for their large brains, longevity and complex behaviour.”

Please understand that I’m not being the slightest bit sarcastic, or trolling, when I say “This is how you got Trump”

Because “experts” who are failures at their jobs are why a large portion of the American electorate (justifiably) no longer trust the “experts”.

And no, the answer isn’t to try to hide from the masses how much of a total charlie foxtrot “science” now is, the answer is to raise our game to the point where we’re not seeing these kind of screwups.

And if we can’t, then we need to seriously consider that the ordinary people are right not to trust any experts

This is such a bizarre attitude to me. Science has always been wrong in massive ways about important things. It seems rather unlikely to me that it is in this particular case (the evidence against neurogenesis is hardly direct or ironclad). Asking for perfect knowledge is a mistake.

Yes. A lot of scientific ideas are going to be wrong, because it’s hard to study complex systems like the brain or immune system. If everything is working as it should, our models of how these systems work should get progressively less wrong and more useful over time, and science should generally be a lot better at coming up with correct explanations for things than people’s non-rigorous, non-quantitative intuitions.

That doesn’t mean there aren’t ways to improve science – there are! Statistical training could be better, and journals and funding agencies could do a better job enforcing statistical best practices. Publishing incentives could be changed towards reducing the file drawer effect. The press could change the way science is covered to better emphasize bodies of work over single studies and to avoid sensationalizing things.

And sure, this is a good cautionary tale of “what’s true for rats is not necessarily true for humans.” But…”we found this thing in rats and eventually we figured out it didn’t generalize to humans” is not in and of itself some kind of total crisis. We can maybe look back and say “we could have figured this out 10 years earlier if we’d taken x,y, and z steps” and that’s legit, but otoh we probably want a bunch of animal studies suggesting something is important before we use up too much valuable human brain tissue on it.

The nutrition reversal was what destroyed a lot of people’s faith in the “establishment”. The thesis that less fat and more carbs equalled healthy eating was heavily pushed as “settled science” by media and other authority figures (the food pyramid etc). Thing was, it was projected as a moral thing to eat less fat and you were “stupid” if you did eat fats. This experience has given many people the probably correct view that most authority figures don’t really have a better answer than people’s own intuition. Probably this is a good thing in the long run, because it makes society less fragile, but it does mean you have to tolerate some stupid behaviour like the anti-vaccers and the anti-GMOs.

Jesus. This actually is quite terrifying. I’ve always explained that it was neurogenesis that was one of the likely factors that promoted the effect of antidepressants, exercise, and bright light therapy. If the evidence of neurogenesis occurring is incredibly flimsy or suggestive of being totally meaningless, then this is a big come to jesus moment.

Zad, it shouldn’t be such a shock. Just because we don’t know the exact effects of antidepressants, exercises, etc, it doesn’t mean they are not good for you. Clearly they are. People love saying “this works because x, y and z”. It sounds more authoritative. But things are complicated. We know “this works” but we don’t know the “because x, y and z” part.

Ricardocruz, I never suggested that antidepressants don’t work. Your response seems like a bit of a strawman argument. I’m simply saying that it’s surprising that the evidence for neurogenesis may be incredibly weak and not supported at all (even though it’s been cited so many times and spread all over)

Synaptogenesis is real. Changing synaptic strengths is also real. Neurogenesis is possibly not real, but I wouldn’t write it off because of one indirect failure to replicate, and I wouldn’t get too upset even if it’s not.

well but neuroscience if anything is even more iffy than social psych! Vul et al., “Voodoo correlations”; Yarkoni and Braver “Cognitive neuroscience etc.”; a Poldrack paper that I don’t remember the title of, and more! Everywhere you look it seems that Neurosomething – above the usual arrogance in maintaining they can explain stuff better than psych, with less power and worse data – has huge, huge problems of QRPs, p-hacking, and statistical power that may be even worse than those in social psych. The reason why this comes as a surprise to many is the impenetrability of the brain as concept – and there is research on it! The “Neurosomething Nonsense Effect”:

https://www.sciencedirect.com/…/pii/S1364661308001563

That’s two you’ve completely blown me away on, in just a few months. This one, and your blog on Placebos. I swear you should be writing a column for the New York Times or equivalent. You could call it ‘Stuff You Know That’s Just Not True’

Minor nit. After I started seeing where you were going with this, I wanted to know how old the articles were that you were citing. Just to get an idea of how long it takes these days to catch a big error like this. I clicked on through and got a couple of dates: Gage’s article was 2002. Gross’s was 2000. I’m guessing that was about when the new scientific consensus developed. So, overturning the ‘new’ dogma this time (assuming it should be overturned) looks like it is not that far off from the 20-30 year time frame that I’ve seen popping up in the past for overturning scientific ‘dogma’. That’s in fields associated with hard science of course. Presumably overturning dogma in something like psychology or sociology or anthropology is a much more lengthy process. I’m a pessimist, but I’m guessing more like 200 years on those. Or possibly infinity. The only self-correction mechanism for wishful thinking I see in psychology, for instance, is the gradual expansion of biological science, so a very slow process indeed. And sociology is even more open loop than that.

I read somewhere once that that 20-30 year time frame is associated with the average length of a working scientist’s career. Something about most people getting their ideas locked in during college/graduate school. The only way you get a new perspective is when the old guy kicks the bucket and someone new gets hired. Bad news, if true. It might not be an ‘old knowledge’ problem so much as a commitment problem. Once you enlist on one side or another in a war, even a science war, the blinkers go on. Just another reason why no study should be considered ‘scientific’ if it is only examining one hypothesis vs the null hypothesis. Once a scientist (or anyone else) has come up with one theory they like, it’s all over for objectivity. No hope of that until he has come up with two theories he kind of likes… I saw a really excellent article about that (well, inadvertently about that) the other day: a Wikipedia article on the beneficial acclimation hypothesis (BAH), which was “falsified as a general rule by a series of multiple hypotheses”:

When you read the initial hypothesis, or at least when I did, my reaction was, ‘of course, obviously’. The counter-hypotheses were pretty compelling evidence to me, not only that BAH is wrong, but that the way we normally do science is wrong.

Test

Seems like the lede is buried in the very last Neuroskeptic quote. Rats have hippocampal neurogenesis but humans don’t? For all the hand-wringing that may occur over this, the discovery of that difference seems like it will ultimately swamp the little “oops” that, uh–heh–we got the whole “neurogenesis in humans” story wrong. (If we actually did, of course.)

I may just be misreading something, but it seems to me like your Part II here goes a lot further in its claims than any of the sources you link to. Well, okay, the Neuroskeptic posts make it sound like the author is pretty convinced by the no-human-adult-neurogenesis side of the argument, but the Atlantic article makes it sound much more like there are just a lot of conflicting results, with a lot of researchers who will argue vociferously for their preferred interpretation but who will also admit that when it comes down to it, there just isn’t enough data (or good enough experimental methodology) and more research is needed — which is a very common situation in science, even in the hard sciences, and isn’t any kind of crisis.

That is also the impression I get from this post by Jason Snyder, which Neuroskeptic described as “excellent.” Snyder says things like:

And his post has a section heading called “We need more studies of neurogenesis in humans,” describing all the complicated problems that could potentially have corrupted existing results (including the latest anti-neurogenesis ones). It definitely doesn’t sound like he thinks human adult neurogenesis is dead (if it were, we wouldn’t need more studies of it!).

Neuroskeptic sounds more convinced on that score, but I’m not clear on why. They write:

I don’t think this is deliberately misleading, but it is easy to misread. If you don’t click through to the Snyder post, it’s easy to infer from the flow of the paragraph that it provides more support for the sense that adult human neurogenesis is probably dead (in fact, it does the opposite). And then, if you ignore that sentence, the rest of the paragraph isn’t convincing at all — it amounts to “there are various studies reporting the opposite result, but there’s at least one that reports the same result,” which does not in itself inspire strong confidence in the result.

The other Neuroskeptic post, about the earlier paper, presents an interpretation like the one in your post (under “entirely innocent, well-intentioned, and understandable”) — but it’s an interpretation of that one paper, and how to reconcile it with one other paper. I don’t see anyone else proposing that interpretation as a resolution of the whole debate, and indeed, Snyder and the researchers quoted in the Atlantic article all seem to think the debate can only be truly resolved by more data and better methods.

I was surprised to see the post in Neuroskeptic and have it presented like this is a done deal. Like many folks on the outside of the field, I assumed that the case for neurogenesis was built on solid and fairly indisputable evidence. I don’t understand how we can “see” something that’s not there over so many years.

Based on no evidence at all, it feels very counterintuitive that rat brains would be capable of neurogenesis and human ones not. But I get that a lot of science is counterintuitive.

I just did some poking around at earlier studies and was struck by how small most of the sample sizes are and how many of them are rodent studies. I did find this one from 2011 that is at least looking at human tissue:

https://www.nature.com/articles/mp201126

I don’t know what consensus there is about whether neurogenesis in the human brain can be modeled from neuronal growth in the lab. Would love to hear from anyone who can speak to that.

Okay, Scott, I know you’re being sarky here, but this really gives me the willies. It reminds me suspiciously of what I ran into with racialism. The – ha ha – dogma was that human populations have no genetic distinctions, certainly not at the level of the brain. And when that turned out to be balls, a certain group leapt straight to the conclusion YOU SEE?? BLACK PEOPLE ARE CONGENITALLY INCAPABLE OF CIVILISATION! Which led the mainstream to go IF YOU DON’T THINK THAT EVOLUTION STOPPED 50,000 YEARS AGO, YOU MUST BELIEVE THAT BLACK PEOPLE ARE INCAPABLE OF CIVILISATION!!!!

…and there’s me going “Er, I think we need a little more work in Part 2”.

Just to hack on this, there seem to be some open questions:

Are we sure that adult neurogenesis is necessary for learning new stuff and changing? Rats grow neurons all the time and do the same thing over and over. Humans apparently don’t and learn new stuff all the time (yes, yes, rats figure out how to get out of a maze; guess what? so do humans) Could it be that learning and changing is more down to plasticity and synaptogenesis, which is well supported?

Or maybe neurogenesis sets the limit on the ‘absolute’ level of intelligence but not on how we use it? A kind of Sherlock Holmes thing – we have X to go around, be careful how you use it. And you can take some things out & put new things in – my field has changed rapidly so I’m learning data science & coding, while conversely I’ve lost much of what I did as an undergrad.

Anyway, let’s be careful about leaping one way or t’other.

“Are we sure that adult neurogenesis is necessary for learning new stuff and changing?”

Absolutely necessary. This is why no human has ever learned anything in all of history.

…or possibly it was a joke.

I iz German and all that. 🙂 Sorry about that, it’s just after some of the insanity I’d seen on people running away with genetics & IQ, I guess I’m a little sensitized.

Thanks for the great post.

The similarity is even deeper. The original “dogma” was pretty racist; at the least, heredity of intelligence was treated as indisputable and population-level differences were considered a given. Then it was “debunked” and “proven” to be a dogma, and we were ushered into the age of non-evolving perfectly equal postcapitalist humans. Then the evidence started to threaten this glorious new scientific paradigm, and all the humanitarian beliefs that were foolishly revamped to appear based on it, and now we’re here.

I want to be clear, this isn’t the first time a “harder” science has had this problem. Veritasium had a nice video on it a few months or years ago, talking about a physics result that definitely seemed to have found something, was published, was reconfirmed by an additional 11 studies, and then failed to replicate (with the experimenters’ knowledge of what they expected to see being the crippling source of bias). It was the false pentaquark discovery.

https://www.youtube.com/watch?v=42QuXLucH3Q (the pentaquark discussion starts at 6:50)

I don’t have absolute terror. But I think it’s worth exploring the idea that even though we have increased the numbers of people educated to be scientists by two, maybe three, orders of magnitude since the birth of Francis Bacon, the number of people capable of being scientists–that is, to both think scientifically, and to act conscientiously enough to perform science–has not increased by more than one order of magnitude.

Has any field taken seriously the concerns Feynman put forth in his cargo cult science talk? Certainly experimental physics has not. Not theoretical CS. Not theoretical physics. Not nanochem. It’s easier to see the mistakes of medicine and neuroscience because the vast majority of people care about illness and death (it happens to them), so these assumptions get more publicity. But the same problems of wrong assumptions leading to false or worse results are real in the physics and chem and EE and bio worlds.

Most scientists who read Feynman’s talk don’t spend even 30 minutes thinking about their own lab’s cargo cult science. They’re not paid to. They don’t get published for doing so. For years, we’ve suggested these externalities are why scientists don’t correct the errors. Certainly, scientists don’t publish if they spend their time reading back three-deep the references of the paper they’re interested in. So they assume their prof told them the relevant truths when they were grad students. Academia moves forward not with genius but with tiny work on the back of prior work.

But what if the externalities aren’t the real problem? What if those trained as scientists really don’t understand the errors? What if they aren’t conscientious and analytic enough to ask the right questions? Where would they learn it? We’ve taught p values rather than judgment. We’ve taught Bernoulli process statistics models rather than the ability to ask whether it even makes sense to treat the events in question as independent. We’ve long since stopped asking “what assumptions about rats aren’t true for humans? How would we know?”

Have we failed to teach this not because we haven’t tried, but because it’s not teachable? Maybe most people in science can be taught to be an exquisite robot, replicating an experiment, or even to design one. But maybe they aren’t competent to understand when an experiment is necessary or what assumptions need challenging. Ruthless honesty is not common. It seems to be far less common than possession of educable intellect.

My area is biophysics, much “harder” than neuroscience, but I nonetheless know of two cases similar to this one (but on a smaller scale) from my own experience:

a) For decades cytochrome c oxidase, a very core enzyme in the oxidative phosphorylation pathway (makes all oxidative metabolism possible), was thought to have only one metal atom. Then the x-ray structure came out and it was found unambiguously to have two metal atoms. This means that a vast literature on the enzyme (thousands of studies at least; I haven’t tried to count) that relied on this “fact” to interpret data is simply wrong. More generally, all understanding about how the enzyme worked was qualitatively wrong until the x-ray structure came out. This bad literature is still out there, with no warning in the papers themselves.

b) For about twenty years the measured turnover rate for HIV Reverse Transcriptase, the enzyme that replicates the genome of the AIDS virus and a primary target for anti-AIDS therapeutic drugs, was at least an order of magnitude too slow. (The earlier studies are low by factors of hundreds or thousands if I remember correctly.) This means that a core fact about a very important disease-related system was simply wrong. In this case the bad value was slowly corrected, but to this day the published value is probably (a bit) low. And again, all the older papers are still in the literature with no warning that they’re bad.

In the first case, the bad “facts” got into the literature because a couple of early studies were badly done, and no one wanted to take the time to replicate a “known” result. (Research funding is enormously competitive, and highly biased toward new results. To spend a year (maybe) of a grad student’s time replicating something is to risk publishing fewer papers, being judged unproductive by a review panel, or at least not pushing your career forward as fast as it might.) The bad facts became “dogma” within the field for lack of anything better, and persisted for a long time.