[Epistemic status: very uncertain. Not to be taken as medical advice. Talk to your doctor before deciding whether or not to get any tests.]

I.

There are many antidepressants in common use. With a few exceptions, none are globally better than any others. The conventional wisdom says patients should keep trying antidepressants until they find one that works for them. If we knew beforehand which antidepressants would work for which patients, it would save everyone a lot of time, money, and misery. This is the allure of pharmacogenomics, the new field of genetically-guided medication prescription.

Everybody has various different types of cytochrome enzymes which metabolize medication. Some of them play major roles in metabolizing antidepressants; usually it’s really complicated and several different enzymes can affect the same antidepressant at different stages. But sometimes one or another dominates; for example, Prozac is mostly metabolized by one enzyme called CYP2D6, and Zoloft is mostly metabolized by a different enzyme called CYP2C19.

Suppose (say the pharmacogenomicists) that my individual genetics code for a normal CYP2D6, but a hyperactive CYP2C19 that works ten times faster than usual. Then maybe Prozac would work normally for me, but every drop of Zoloft would get shredded by my enzymes before it can even get to my brain. A genetic test could tell my psychiatrist this, and then she would know to give me Prozac and not Zoloft. Some tests like this are already commercially available. Preliminary results look encouraging. As always, the key words are “preliminary” and “look”, and did I mention that these results were mostly produced by pharma companies pushing their products?

But let me dream for a just a second. There’s been this uneasy tension in psychopharmacology. Clinical psychiatrists give their patients antidepressants and see them get better. Then research psychiatrists do studies and show that antidepressant effect sizes are so small as to be practically unnoticeable. The clinicians say “Something must be wrong with your studies, we see our patients on antidepressants get much better all the time”. The researchers counter with “The plural of anecdote isn’t ‘data’, your intuitions deceive you, antidepressant effects are almost imperceptibly weak.” At this point we prescribe antidepressants anyway, because – what else are you going to do when someone comes into your office in tears and begs for help? – but we feel kind of bad about it.

Pharmacogenomics offers a way out of this conundrum. Suppose half of the time patients get antidepressants, their enzymes shred the medicine before it can even get to the brain, and there’s no effect. In the other half, the patients have normal enzymes, the medications reach the brain, and the patient gets better. Researchers would average together all these patients and conclude “Antidepressants have an effect, but on average it’s very small”. Clinicians would keep the patients who get good effects, keep switching drugs for the patients who get bad effects until they find something that works, and say “Eventually, most of my patients seem to have good effects from antidepressants”.

There’s a little bit of support for this in studies. STAR*D found that only 33% of patients improved on their first antidepressant, but that if you kept changing antidepressants, about 66% of patients would eventually find one that helped them improve. Gueorguieva & Mallinckrodt (2011) find something similar by modelling “growth trajectories” of antidepressants in previous studies. If it were true, it would be a big relief for everybody.

It might also mean that pharmacogenomic testing would solve the whole problem forever and lets everyone be on an antidepressant that works well for them. Such is the dream.

But pharmacogenomics still very young. And due to a complicated series of legal loopholes, it isn’t regulated by the FDA. I’m mostly in favor of more things avoiding FDA regulation, but it means the rest of us have to be much more vigilant.

A few days ago I got to talk to a representative of the company that makes GeneSight, the biggest name in pharmacogenomic testing. They sell a $2000 test which analyzes seven genes, then produces a report on which psychotropic medications you might do best or worst on. It’s exactly the sort of thing that would be great if it worked – so let’s look at it in more depth.

II.

GeneSight tests seven genes. Five are cytochrome enzymes like the ones discussed above. The other two are HTR2A, a serotonin receptor, and SLC6A4, a serotonin transporter. These are obvious and reasonable targets if you’re worried about serotonergic drugs. But is there evidence that they predict medication response?

GeneSight looks at the rs6313 SNP in HTR2A, which they say determines “side effects”. I think they’re thinking of Murphy et al (2003), who found that patients with the (C,C) genotype had worse side effects on Paxil. The study followed 122 patients on Paxil, of whom 41 were (C,C) and 81 were something else. 46% of the (C,C) patients hated Paxil so much they stopped taking it, compared to only 16% of the others (p = 0.001). There was no similar effect on a nonserotonergic drug, Remeron. This study is interesting, but it’s small and it’s never been replicated. The closest thing to replication is this study which focused on nausea, the most common Paxil side effect; it found the gene had no effect. This study looked at Prozac and found that the gene didn’t affect Prozac response, but it didn’t look at side effects and didn’t explain how it handled dropouts from the study. I am really surprised they’re including a gene here based on a small study from fifteen years ago that was never replicated.

They also look at SLC6A4, specifically the difference between the “long” versus “short” allele. This has been studied ad nauseum – which isn’t to say anyone has come to any conclusions. According to Fabbri, Di Girolamo, & Serretti, there are 25 studies saying the long allele of the gene is better, 9 studies saying the short allele is better, and 20 studies showing no difference. Two meta-analyses (1 n = 1435, 2 n = 5479) come out in favor of the long allele; two others (1 n = 4309, 2, n = 1914) fail to find any effect. But even the people who find the effect admit it’s pretty small – the Italian group estimates 3.2%. This would both explain why so many people miss it, and relieve us of the burden of caring about it at all.

The Carlat Report has a conspiracy theory that GeneSight really only uses the liver enzyme genes, but they add in a few serotonin-related genes so they can look cool; presumably there’s more of a “wow” factor in directly understanding the target receptors in the brain than in mucking around with liver enzymes. I like this theory. Certainly the results on both these genes are small enough and weak enough that it would be weird to make a commercial test out of them. The liver enzymes seem to be where it’s at. Let’s move on to those.

The Italian group that did the pharmacogenomics review mentioned above are not sanguine about liver enzymes. They write (as of 2012, presumably based on Genetic Polymorphisms Of Cytochrome P450 Enzymes And Antidepressant Metabolism“>this previous review):

Available data do not support a correlation between antidepressant plasma levels and response for most antidepressants (with the exception of TCAs) and this is probably linked to the lack of association between response and CYP450 genetic polymorphisms found by the most part of previous studies. In all facts, the first CYP2D6 and CYP2C19 genotyping test (AmpliChip) approved by the Food and Drug Administration has not been recommended by guidelines because of lack of evidence linking this test to clinical outcomes and cost-effectiveness studies.

What does it even mean to say that there’s no relationship between SSRI plasma level and therapeutic effect? Doesn’t the drug only work when it’s in your body? And shouldn’t the amount in your body determine the effective dose? The only people I’ve found who even begin to answer this question are Papakostas & Fava, who say that there are complicated individual factors determining how much SSRI makes it from the plasma to the CNS, and how much of it binds to the serotonin transporter versus other stuff. This would be a lot more reassuring if amount of SSRI bound to the serotonin transporter correlated with clinical effects, which studies seem very uncertain about. I’m not really sure how to fit this together with SSRIs having a dose-dependent effect, and I worry that somebody must be very confused. But taking all of this at face value, it doesn’t really look good for using cytochrome enzymes predicting response.

I talked to the GeneSight rep about this, and he agreed; their internal tests don’t show strong effects for any of the candidate genes alone, because they all interact with each other in complicated ways. It’s only when you look at all of them together, using the proprietary algorithm based off of their proprietary panel, that everything starts to come together.

This is possible, but given the poor results of everyone else in the field I think we should take it with a grain of salt.

III.

We might also want to zoom out and take a broader picture: should we expect these genes to matter?

It’s much easier to find the total effect of genetics than it is to find the effect of any individual gene; this is the principle behind twin studies and GCTAs. Tansey et al do a GCTA on antidepressant response and find that all the genetic variants tested, combined, explain 42% of individual differences in antidepressant response. Their methodology allowed them to break it down chromosome-by-chromosome, and they found that genetic effects were pretty evenly distributed across chromosomes, with longer chromosomes counting more. This is consistent with massively polygenic structure where there are hundreds of thousands of genes, each of small effects – much like height or IQ. But typically even the strongest IQ or height genes only explain about 1% of the variance. So an antidepressant response test containing only seven genes isn’t likely to do very much even if those genes are correctly chosen and well-understood.

SLC6A4 is a great example of this. It’s on chromosome 17. According to Tansey, chromosome 17 explains less than 1% of variance in antidepressant effect. So unless Tansey is very wrong, SLC6A4 must also explain less than 1% of the variance, which means it’s clinically useless. The other six genes on the test aren’t looking great either.

Does this mean that the GeneSight panel must be useless? I’m not sure. For one thing, the genetic structure of which antidepressant you respond to might be different from the structure of antidepressant response generally (though the study found similar structures to any-antidepressant response and SSRI-only response). For another, for complicated reasons sometimes exploiting variance is easier than predicting variance; I don’t understand this enough to be sure that this isn’t one of these cases, though it doesn’t look that way to me.

I don’t think this is a knock-down argument against anything. But I think it means we should take any claims that a seven (or ten, or fifty) gene panel can predict very much with another grain of salt.

IV.

But assuming that there are relatively few genes, and we figure out what they are, then we’re basically good, right? Wrong.

Warfarin is a drug used to prevent blood clots. It’s notorious among doctors for being finicky, confusing, difficult to dose, and making people to bleed to death if you get it wrong. This made it a very promising candidate for pharmacogenomics: what if we could predict everyone’s individualized optimal warfarin dose and take out the guesswork?

Early efforts showed promise. Much of the variability was traced to two genes, VKORC1 and CYP2C9. Companies created pharmacogenomic panels that could predict warfarin levels pretty well based off of those genes. Doctors were urged to set warfarin doses based on the results. Some initial studies looked positive. Caraco et al and Primohamed et al both found in randomized controlled trials with decent sample sizes that warfarin patients did better on the genetically-guided algorithm, p < 0.001. A 2014 meta-analysis looked at nine studies of the algorithm, over 2812 patients, and found that it didn’t work. Whether you used the genetic test or not didn’t affect number of blood clots, percent chance of having your blood within normal clotting parameters, or likelihood of major bleeding. There wasn’t even a marginally significant trend. Another 2015 meta-analysis found the same thing. Confusingly, a Chinese group did a third meta-analysis that did find advantages in some areas, but Chinese studies tend to use shady research practices, and besides, it’s two to one.

UpToDate, the canonical medical evidence aggregation site for doctors, concludes:

We suggest not using pharmacogenomic testing (ie, genotyping for polymorphisms that affect metabolism of warfarin and vitamin K-dependent coagulation factors) to guide initial dosing of the vitamin K antagonists (VKAs). Two meta-analyses of randomized trials (both involving approximately 3000 patients) found that dosing incorporating hepatic cytochrome P-450 2C9 (CYP2C9) or vitamin K epoxide reductase complex (VKORC1) genotype did not reduce rates of bleeding or thromboembolism.

I mention this to add another grain of salt. Warfarin is the perfect candidate for pharmacogenomics. It’s got a lot of really complicated interpersonal variation that often leads to disaster. We know this is due to only a few genes, and we know exactly which genes they are. We understand pretty much every aspect of its chemistry perfectly. Preliminary studies showed amazing effects.

And yet pharmacogenomic testing for warfarin basically doesn’t work. There are a few special cases where it can be helpful, and I think the guidelines say something like “if you have your patient’s genotype already for some reason, you might as well use it”. But overall the promise has failed to pan out.

Antidepressants are in a worse place than warfarin. We have only a vague idea how they work, only a vague idea what genes are involved, and plasma levels don’t even consistently correlate with function. It would be very strange if antidepressant testing worked where warfarin testing failed. But, of course, it’s not impossible, so let’s keep our grains of salt and keep going.

V.

Why didn’t the warfarin pharmacogenomics work? They had the genes right, didn’t they?

I’m not too sure what’s going on, but maybe it just didn’t work better than doctors titrating the dose the old-fashioned way. Warfarin is a blood thinner. You can take blood and check how thin it is, usually measured with a number called INR. Most warfarin users are aiming for an INR between 2 and 3. So suppose (to oversimplify) you give your patient a dose of 3 mg, and find that the INR is 1.7. It seems like maybe the patient needs a little more warfarin, so you increase the dose to 4 mg. You take the INR later and it’s 2.3, so you declare victory and move on.

Maybe if you had a high-tech genetic test you could read the microscopic letters of the code of life itself, run the results through a supercomputer, and determine from the outset that 4 mg was the optimal dose. But all it would do is save you a little time.

There’s something similar going on with depression. Starting dose of Prozac is supposedly 20 mg, but I sometimes start it as low as 10 to make sure people won’t have side effects. And maximum dose is 80 mg. So there’s almost an order of magnitude between the highest and lowest Prozac doses. Most people stay on 20 to 40, and that dose seems to work pretty well.

Suppose I have a patient with a mutation that slows down their metabolism of Prozac; they effectively get three times the dose I would expect. I start them on 10 mg, which to them is 30 mg, and they seem to be doing well. I increase to 20, which to them is 60, and they get a lot of side effects, so I back down to 10 mg. Now they’re on their equivalent of the optimal dose. How is this worse than a genetic test which warns me against using Prozac because they have mutant Prozac metabolism?

Or suppose I have a patient with a mutation that dectuples Prozac levels; now there’s no safe dose. I start them on 10 mg, and they immediately report terrible side effects. I say “Yikes”, stop the Prozac, and put them on Zoloft, which works fine. How is this worse than a genetic test which says Prozac is bad for this patient but Zoloft is good?

Or suppose I have a patient with a mutation that makes them an ultrarapid metabolizer; no matter how much Prozac I give them, zero percent ever reaches their brain. I start them on Prozac 10 mg, nothing happens, go up to 20, then 40, then 60, then 80, nothing happens, finally I say “Screw this” and switch them to Zoloft. Once again, how is this worse than the genetic test?

(again, all of this is pretending that dose correlates with plasma levels correlates with efficacy in a way that’s hard to prove, but presumably necessary for any of this to be meaningful at all)

I expect the last two situations to be very rare; few people have orders-of-magnitude differences in metabolism compared to the general population. Mostly it’s going to be people who I would expect to need 20 of Prozac actually needing 40, or vice versa. But nobody has the slightest idea how to dose SSRIs anyway and we usually just try every possible dose and stick with the one that works. So I’m confused how genetic testing is supposed to make people do better or worse, as opposed to just needing a little more or less of a medication whose dosing is so mysterious that nobody ever knows how much anyone needs anyway.

As far as I can tell, this is why they need those pharmacodynamic genes like HTR2A and SLC6A4. Those represent real differences between antidepressants and not just changes in dose which we would get to anyway. I mean, you could still just switch antidepressants if your first one doesn’t work. But this would admittedly be hard and some people might not do it. Everyone titrates doses!

This is a fourth grain of salt and another reason why I’m wary about this idea.

VI.

Despite my skepticism, there are several studies showing impressive effects from pharmacogenomic antidepressant tests. Now that we’ve established some reasons to be doubtful, let’s look at them more closely.

GeneSight lists eight studies on its website here. Of note, all eight were conducted by GeneSight; as far as I know no external group has ever independently replicated any of their claims. The GeneSight rep I talked to said they’re trying to get other scientists to look at it but haven’t been able to so far. That’s fair, but it’s also fair for me to point out that studies by pharma companies are far more likely to find their products effective than studies by anyone else (OR = 4.05). I’m not going to start a whole other section for this, but let’s call it a fifth grain of salt.

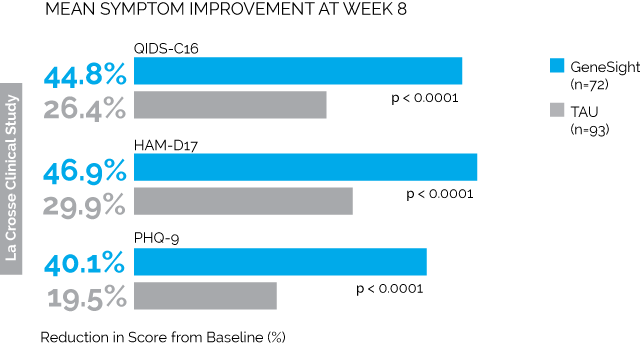

First is the LaCrosse Clinical Study. 114 depressed patients being treated at a clinic in Wisconsin received the GeneSight test, and the results were given to their psychiatrists, who presumably changed medications in accordance with the tests. Another 113 depressed patients got normal treatment without any genetic testing. The results were:

Taken from here, where you’ll find much more along the same lines.

All of the combinations of letters and numbers are different depression tests. The blue bars are the people who got genotyped. The grey bars are the people who didn’t. So we see that on every test, the people who got genotyped saw much greater improvement than the people who didn’t. The difference in remission was similarly impressive; by 8 weeks, 26% of the genotyped group were depression-free as per QIDS-C16 compared to only 13% of the control group (p = 0.03)

How can we nitpick these results? A couple of things come to mind.

Number one, the study wasn’t blinded. Everyone who was genotyped knew they were genotyped. Everyone who wasn’t genotyped knew they weren’t genotyped. I’m still not sure whether there’s a significant placebo effect in depression (Hróbjartsson and Gøtzsche say no!), but it’s at least worth worrying about.

Number two, the groups weren’t randomized. I have no idea why they didn’t randomize the groups, but they didn’t. The first hundred-odd people to come in got put in the control group. The second hundred-off people got put in the genotype group. In accordance with the prophecy, there are various confusing and inexplicable differences between the two groups. The control group had more previous medication trials (4.7 vs. 3.6, p = 0.02). The intervention group had higher QIDS scores at baseline (16 vs. 17.5, p = 0.003). They even had different CYP2D6 phenotypes (p = 0.03). On their own these differences don’t seem so bad, but they raise the question of why these groups were different at all and what other differences might be lurking.

Number three, the groups had very different numbers of dropouts. 42 people dropped out of the genotyped group, compared to 20 people from the control group. Dropouts made up about a quarter of the entire study population. The authors theorize that people were more likely to drop out of the genotype group than the control group because they’d promised to give the control group their genotypes at the end of the study, so they were sticking around to get their reward. But this means that people who were failing treatment were likely to drop out of the genotype group (making them look better) but stay in the control group (making them look worse). The authors do an analysis and say that this didn’t affect things, but it’s another crack in the study.

All of these are bad, but intuitively I don’t feel like any of them should have been able to produce as dramatic an effect as they actually found. But I do have one theory about how this might have happened. Remember, these are all people who are on antidepressants already but aren’t getting better. The intervention group’s doctors get genetic testing results saying what antidepressant is best for them; the control group’s doctors get nothing. So the intervention group’s doctors will probably switch their patients’ medication to the one the test says will be best, and the control group’s doctors might just leave them on the antidepressant that’s already not working. Indeed, we find that 77% of intervention group patients switched medications, compared to 44% of control group patients. So imagine if the genetic test didn’t work at all. 77% of intervention group patients at least switch off their antidepressant that definitely doesn’t work and onto one that might work; meanwhile, the control group mostly stays on the same old failed drugs.

Someone (maybe Carlat again?) mentioned how they should have controlled this study: give everyone a genetic test. Give the intervention group their own test results, and give the control group someone else’s test results. If people do better on their own results than on random results, then we’re getting somewhere.

Second is the Hamm Study, which is so similar to the above I’m not going to treat it separately.

Third is the Pine Rest Study. This one is, at least, randomized and single-blind. Single-blind means that the patients don’t know which group they’re in, but their doctors do; this is considered worse than double-blind (where neither patients nor doctors know) because the doctors’ subtle expectations could unconsciously influence the patients. But at least it’s something.

Unfortunately, the sample size was only 51 people, and the p-value for the main outcome was 0.28. They tried to salvage this with some subgroup analyses, but f**k that.

Fourth and fifth are two different meta-analyses of the above three studies, which is the lowest study-to-meta-analysis ratio I’ve ever seen. They find big effects, but “garbage in, garbage out”.

Sixth, there’s the Medco Study by Winner et al; I assume his name is a Big Pharma plot to make us associate positive feelings with him. This study is an attempt to prove cost-effectiveness. The GeneSight test costs $2000, but it might be worth it to insurers/governments if it makes people so much healthier that they spend less money on health care later. And indeed, it finds that GeneSight users spend $1036 less per year on medication than matched controls.

The details: they search health insurance databases for patients who were taking an psychiatric medication and then got GeneSight tests. Then they search the same databases for control patients for each; the control patients take the same psych med, have the same gender, are similar in age, and have the same primary psychiatric diagnosis. They end up with 2000 GeneSight patients and 10000 matched controls, whom they prove are definitely similar (even as a group) on the traits mentioned above. Then they follow all these people for a year and see how their medication spending changes.

The year of the study, the GeneSight patients spent on average $689 more on medications than they did the year before – unfortunate, but not entirely unexpected since apparently they’re pretty sick. The control patients spent on average $1725 more. So their medication costs increased much more than the GeneSight patients. That presumably suggests GeneSight was doing a good job treating their depression, thus keeping costs down.

The problem is, this study wasn’t randomized and so I see no reason to expect these groups to be comparable in any way. The groups were matched for sex, age, diagnosis, and one drug, but not on any other basis. And we have reason to think that they’re not the same – after all, one group consists of people who ordered a little-known $2000 genetic test. To me, that means they’re probably 1) rich, and 2) have psychiatrists who are really cutting-edge and into this kind of stuff. To be fair, I would expect both of those to drive up their costs, whereas in fact their costs were lower. But consider the possibility that rich people with good psychiatrists probably have less severe disease and are more likely to recover.

Here’s some more evidence for this: of the ~$1000 cost savings, $300 was in psychiatric drugs and $700 was in non-psychiatric drugs. The article mentions that there’s a mind-body connection and so maybe treating depression effectively will make people’s non-psychiatric diseases get better too. This is true, but I think seeing that the effect of a psychiatric intervention is stronger on non-psychiatric than psychiatric conditions should at least raise our suspicion that we’re actually seeing some confounder.

I cannot find anywhere in the study a comparison of how much money each group spent the year before the study started. This is a very strange omission. If these numbers were very different, that would clinch this argument.

Seventh is the Union Health Service study. They genotype people at a health insurance company who have already been taking a psychotropic medication. The genetic test either says that their existing medication is good for them (“green bin”), okay for them (“yellow bin”) or bad for them (“red bin”). Then they compare how the green vs. yellow vs. red patients have been doing over the past year on their medications. They find green and yellow patients mostly doing the same, but red patients doing very badly; for example, green patients have about five sick days from work a year, but red patients have about twenty.

I don’t really see any obvious flaws in this study, but there are only nine red patients, which means their entire results depend on an n = 9 experimental group.

Eighth is a study that just seems to be a simulation of how QALYs might change if you enter some parameters; it doesn’t contain any new empirical data.

Overall these studies show very impressive effects. While it’s possible to nitpick all of them, we have to remind ourselves that we can nitpick anything, even the best of studies, and do we really want to be that much of a jerk when these people have tested their revolutionary new product in five different ways, and every time it’s passed with flying colors aside from a few minor quibbles?

And the answer is: yes, I want to be exactly that much of a jerk. The history of modern medicine is one of pharmaceutical companies having amazing studies supporting their product, and maybe if you squint you can just barely find one or two little flaws but it hardly seems worth worrying about, and then a few years later it comes out that the product had no benefits whatsoever and caused everyone who took it to bleed to death. The reason for all those grains of salt above was to suppress our natural instincts toward mercy and cultivate the proper instincts to use when faced with pharmaceutical company studies, ie Cartesian doubt mixed with smoldering hatred.

VII.

I am totally not above introducing arguments from authority, and I’ve seen two people with much more credibility than myself look into this. The first is Daniel Carlat, Tufts professor and editor of The Carlat Report, a well-respected newsletter/magazine for psychiatrists. He writes a skeptical review of their studies, and finishes:

If we were to hold the GeneSight test to the usual standards we require for making medication decisions, we’d conclude that there’s very little reliable evidence that it works.

The second is John Ioannidis, professor of health research at Stanford and universally recognized expert on clinical evidence. He doesn’t look at GeneSight in particular, but he writes of the whole pharmacogenomic project:

For at least 3 years now, the expectation has been that newer platforms using exome or full-genome sequencing may improve the genome coverage and identify far more variants that regulate phenotypes of interest, including pharmacogenomic ones. Despite an intensive research investment, these promises have not yet materialized as of early 2013. A PubMed search on May 12, 2013, with (pharmacogenomics* OR pharmacogenetc*) AND sequencing yielded an impressive number of 604 items. I scrutinized the 80 most recently indexed ones. The majority were either reviews/commentary articles with highly promising (if not zealot) titles or irrelevant articles. There was not a single paper that had shown robust statistical association between a newly discovered gene and some pharmacogenomics outcome, detected by sequencing. If anything, the few articles with real data, rather than promises, show that the task of detecting and validating statistically rigorous associations for rare variants is likely to be formidable. One comprehensive study sequencing 202 genes encoding drug targets in 14,002 individuals found an abundance of rare variants, with 1 rare variant appearing every 17 bases, and there was also geographic localization and heterogeneity. Although this is an embarrassment of riches, eventually finding which of these thousands of rare variants are most relevant to treatment response and treatment-related harm will be a tough puzzle to solve even with large sample sizes.

Despite these disappointing results, the prospect of applying pharmacogenomics in clinical care has not abided. If anything, it is pursued with continued enthusiasm among believers. But how much of that information is valid and is making any impact? […]

Before investing into expensive clinical trials for testing the new crop of mostly weak pharmacogenomic markers, a more radical decision is whether we should find some means to improve the yield of pharmacogenomics or just call it a day and largely abandon the field. The latter option sounds like a painfully radical solution, but on the other hand, we have already spent many thousands of papers and enormous funding, and the yield is so minimal. The utility yield seems to be even diminishing, if anything, as we develop more sophisticated genetic measurement techniques. Perhaps we should acknowledge that pharmacogenomics was a brilliant idea, we have learned some interesting facts to date, and we also found a handful of potentially useful markers, but industrial-level application of research funds may need to shift elsewhere.

I think the warning from respected authorities like these should add a sixth grain of salt to our rapidly-growing pile and make us feel a little bit better about rejecting the evidence above and deciding to wait.

VIII.

There’s a thing I always used to hate about the skeptic community. Some otherwise-responsible scientist would decide to study homeopathy for some reason, and to everyone’s surprise they would get positive results. And we would be uneasy, and turn to the skeptic community for advice. And they would say “Yeah, but homeopathy is stupid, so forget about this.” And they would be right, but – what’s the point of having evidence if you ignore it when it goes the wrong way? And what’s the point in having experts if all they can do is say “this evidence went the wrong way, so let’s ignore it”? Shouldn’t we demand experts so confident in their understanding that they can explain to us why the new “evidence” is wrong? And as a corollary, shouldn’t we demand experts who – if the world really was topsy-turvy and some crazy alternative medicine scheme did work – would be able to recognize that and tell us when to suspend our usual skepticism?

But at this point I’m starting to feel a deep kinship with skeptic bloggers. Sometimes we can figure out possible cracks in studies, and I think Part VI above did okay with that. But there will be cracks in even the best studies, and there will especially be cracks in studies done by small pharmaceutical companies who don’t have the resources to do a major multicenter trial, and it’s never clear when to use them as an excuse to reject the whole edifice versus when to let them pass as an unavoidable part of life. And because of how tough pharmacogenomics has proven so far, this is a case where I – after reading the warnings from Carlat and Ioannidis and the Italian team and everyone else – tentatively reject the edifice.

I hope later I kick myself over this. This might be the start of a revolutionary exciting new era in psychiatry. But I don’t think I can believe it until independent groups have evaluated the tests, until other independent groups have replicated the work of the first independent groups, until everyone involved has publicly released their data (GeneSight didn’t release any of the raw data for any of these studies!), and until our priors have been raised by equivalent success in other areas of pharmacogenomics.

Until then, I think it is a neat toy. I am glad some people are studying it. But I would not recommend spending your money on it if you don’t have $2000 to burn (though I understand most people find ways to make their insurance or the government pay).

But if you just want to have fun with this, you can get a cheap approximation from 23andMe. Use the procedure outlined here to get your raw data, then look up rs6313 for the HTR2A polymorphism; (G,G) supposedly means more Paxil side effects (and maybe SSRI side effects in general). 23andMe completely dropped the ball on SLC6A4 and I would not recommend trying to look that one up. The cytochromes are much more complicated, but you might be able to piece some of it together from this page’s links links to lists of alleles and related SNPs for each individual enzyme; also Promethease will do some of it for you automatically. Right now I think this process would produce pretty much 100% noise and be completely useless. But I’m not sure it would be more useless than the $2000 test. And if any of this pharmacogenomic stuff turns out to work, I hope some hobbyist automates the 23andMe-checking process and sells it as shareware for $5.

A related question, how do you as a doctor find out information about drugs, treatments, etc? E.g. you want to find out the dosage and effects of a novel drug.

I assume/hope there is a more complicated system than just googling it. But I’ve found it hard to find useful information online.

UpToDate is like an expensive-but-carefully-verified Wikipedia for doctors.

(I’ve been known to google things in the rare cases when I don’t have UpToDate available)

Wikipedia mentions UpToDate is freely accessible in Norway by the website Helsebiblioteket.no (Norwegian Electronic Health Library) by any IP address in the country without any need for login. I tried using norwegian proxies but couldn’t access it.

Does anybody else have any info regarding this?

Also: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4260083/

Just speculating, but I wouldn’t be surprised if they block known proxies.

The problem arose only when I tried to access UpToDate. Other journals made available by the website were accessible via proxy.

Obviously, sales representatives tell you about them. 😀

And fed you lunch, to boot!

“GeneSight lists eight studies on its website are far more likely to find their products effective than studies by anyone else (OR = 4.05). I’m not going to start a whole other section for this, but let’s call it a fifth grain of salt.”

The link in this paragraph is broken, and it seems to have swallowed up some of your text.

Thanks, fixed.

But how many grains of salt would you say ought to constitute a heap?

you can totally have a heap of zero grains…

Yes, I myself favor universalism as the solution to a lot of metaphysical problems. Or, more rarely, nihilism. But this doesn’t look like one of the cases in which to employ nihilism; I prefer everything being a heap, probably as you suggest even including the empty heap (since having a zero case often makes things simpler), to there being no such things as heaps. And certainly any sort of moderate proposal of some things being heaps and some things being non-heaps is just the sort of thing that never ends up working in metaphysics.

Just add a clustered index and don’t worry about it.

This sounds really, really similar to “the passage of time is helpful to at least 66% of patients”.

…because with the genetic test, they don’t have to experience the “terrible side effects”? That sounds like an improvement to me.

The skeptic community is all over this particular question in the particular case of homeopathy. They will tell you at length that this evidence is necessarily wrong because all homeopathic treatments are chemically identical, and in fact are all water.

The other question, “what’s the point of having evidence if we’re just going to reject it if it turns out favorable to homeopathy”, then tells us that there is no point in collecting evidence on homeopathy. There’s no logical issue there. An experiment that wanted to provide meaningful evidence about homeopathy would have to first show e.g. that there was a chemical difference between one homeopathic remedy and a different homeopathic remedy, which would go towards contradicting the point “it’s all just water”.

But homeopathy’s point is that it works on a method other than the chemistry. I know and you know that chemistry matters, but you aren’t addressing their point if you only talk about chemistry.

Anyway, isn’t the easier explanation that it’s just green jelly beans? https://xkcd.com/882/

If you only talk about chemistry, you are addressing their claim that their methods are medicinally beneficial. Why do you need to address some other point of theirs? What point would that be?

Homeopaths claim that there are properties of water aside from chemistry (and temperature, pressure, and other things acknowledged by mainstream science) which are relevant to medicine. If you simply say “But chemistry/temperature/pressure don’t show any difference!” they’ll nod and agree with you.

Sure, the burden of proof is on their side if you want to get picky, but they don’t care.

My untested hypothesis is that some homeopathic remedies contain real drugs put in by the manufacturers so that people get better and keep buying the homeopathic remedy.

Depending somewhat on the drug in question, this would be an outrageously risky maneuver on the part of the manufacturers – as in, like, criminal liability – and would obviate one of the major benefits of being in the homeopathy business: trivial manufacturing costs. I suspect that the pure placebo effect is more than capable of keeping people on the sugar pill train.

(If you were being facetious then disregard this comment!)

Now I wonder if there is any standards body that would complain if the homeopathic method wasn’t made by 20C and instead just pumping out straight sugar pills.

It’s already been proven to happen, at least once. (See http://www.wired.co.uk/article/homeopathy-contains-medicine)

In Europe the term “homeopathic” is regulated, but in America it doesn’t mean anything and the term is slapped onto many substances whose label claims to contain measurable doses. Go into a drug store and look for zinc lozenges. The last time I did, they were all marked “homeopathic,” but I think that the label disagreed. I think that it is common for them to have a specific dose listed. Alternately, they may be labeled with the homeopathic notation “1X” which should mean that they were diluted 10:1, or hardly at all. In Germany that would be illegal, but still the rule is that they must be diluted 4X (aka 4D), 10000:1, which certainly leaves a measurable amount and may well a practical amount, depending on the substance.

This seems like a flawed approach. It’s attempting to rely on reasoning rather than empirical evidence.

This is not scientific

Bob: “XYZ works because of a non-chemical difference.”

Alice: “BAH! There is no chemical difference! Disproven!”

It would be like if the Randi Prize people had simply gone around declaring that since someones claims violated the current best understanding of physics they were going to refuse to test them.

Your position seems closer to classical philosophical Rationality than LW style rationality or empiricism.

If, hypothetically, someone did a well run and supervised double blind trial of a homeopathic remedy and the intervention arm did vastly better than the controls on placebo that would be spectacularly scientifically interesting if it could be replicated. Even more interesting if they followed up by showing that there was indeed no chemical difference between the remedy and the placebo.

But the point is not to collect evidence about homeopathy; it’s to collect evidence about science. We already know homeopathy is false, so if science provides evidence it works, that’s a problem. It means that science probably also provides evidence that other non-working things work, in cases where we don’t already know the answer (so we have to trust science). It suggests that we need a more robust, less optimistic process for proving things work, one weak enough that it can’t “prove” homeopathy works.

The only basis on which we know it is false is that science does not provide evidence that it works.

Because of the FDA loophole you mentioned, there are no real standards for these tests, and people are taking advantage of it. Here is a related story:

https://www.statnews.com/2017/02/28/proove-biosciences-genetic-tests/

A biotech company (Proove) claims it can use genetic tests and questionnaires to determine who will develop opioid dependence and which pain killers a person will respond best to. They get doctors to sign on to do ‘research’ on the effectiveness of the tests. The research measures the doctors impressions of whether the test helps. Some doctors fill out the forms themselves, but Proove will also send out employees to fill out the forms for them to help increase volume. The tests are billed to insurance companies, and the doctors get a payment for each patient the enroll in the ‘study’. Unsurprisingly, the responses show great benefit from the test.

Going back to this post, a detail that stood out to me was using a 15 year old candidate gene study to support the GeneSight test. These studies are now known to have abysmally low replication rates (I think John Ioannidis gives a figure of 98% non-replication in many of the talks he gives, I would have to look up the source). That’s kind of a red flag that they are thinking wishfully.

Finally, this part:

“I talked to the GeneSight rep about this, and he agreed; their internal tests don’t show strong effects for any of the candidate genes alone, because they all interact with each other in complicated ways. It’s only when you look at all of them together, using the proprietary algorithm based off of their proprietary panel, that everything starts to come together.”

Reminded me of this:

https://www.statnews.com/2016/11/29/brca-cancer-myriad-genetic-tests/

Another gene testing company (this time with a product that actually works. The BRCA test for detecting breast cancer risk) says that it has complicated proprietary methods that are better than its competitors, but produces no peer-reviewed or even public data to back up the claim.

Ya, I’m gonna agree with this point. There’s a lot of genes with only 1 or 2 papers about their function and the effects of many variants tend to get overstated.

One of the findings from ExAC was a note of how many subjects appeared to be walking around with 40 to 50 variants which according to the literature are linked to serious issues but appear fine.

recently the BMA published a list of something like 30 variants which they believed were the only ones which had sufficient evidence behind their link to serious health problems to ethically justify notifying patients if they’re found incidentally during other investigations.

Sometimes students will be looking into conditions trying to find associated variants and it’s a bit depressing how flimsy the evidence base is for many links. 10 year old guesses at gene function are pretty normal.

Throw in the “fuck it we can make money this week” attitude of the typical startup founder and you’re not going to get meaningful data or careful analysis. It’s worth taking a lot of things with a pinch of salt until they’ve been well replicated.

I doubt that Myriad’s proprietary algorithms are accurate, but even if they were, they are clinically irrelevant. They are probably like 23andMe’s claim that a particular SNP raised the chance of breast cancer from 10% to 12%, which is irrelevant to any decision (mammograms, prophylactic mastectomy). The only thing that matters are nonsense mutations.

But Myriad does offer something that other companies don’t: it will sequence your BRCA and look for a unique nonsense mutation, while everyone else will just look for the specific nonsense mutations that are common in the Ashkenazi and Dutch. You could get another company to sequence your whole exome for a fraction of the cost, but if you do have a unique mutation, Myriad will then build a boutique test to give to all your relatives. It is still probably cheaper to get all your relatives’ exomes sequenced, but it is close and insurance will cover Myriad. Anyhow, this is a really niche product, for the 1 in 10k people who already know that they have nonsense BRCA in their family but aren’t Ashkenazi or Dutch (or have already passed those tests).

“They are probably like 23andMe’s claim that a particular SNP raised the chance of breast cancer from 10% to 12%”

This is the one case I know of where that really isn’t the situation. Here are the stats from the article:

“if either gene is mutated, a woman’s risk of developing breast and ovarian cancer soars to as high as 85 percent and 40 percent, respectively. That compares with a risk of 12.7 percent and 1.4 percent in the general population.”

These results have held up over time, and women with the bad versions of the genes often opt to have a mastectomy rather than face such a high risk of cancer.

Which is why I mentioned that in my very next sentence. Taken literally, the sentence you quoted is false. It is only nonsense* mutations that have a dramatic effect. Both 23andMe and Myriad claim that other BRCA mutations have effects and they probably do, but they don’t matter. Whereas nonsense mutations are easy to recognize (although not as easy to detect as a SNP) and not a matter of proprietary algorithms.

* Added: I include frameshift mutations as nonsense mutations and not just stop codon mutations. I am not sure if this is standard.

Oh, sorry, I misunderstood your point.

As I understand it, Myriad and its competitors both sequence the same genes and have access to the same genetic information (since Myriad lost its patent protections in the Supreme Court in 2013). The difference is that Myriad currently has a larger database linking sequences to outcomes. Simply knowing whether the mutation is a nonsense mutation is not enough to determine its significance. Myriad claims that its larger database leads to more accurate results, and fewer rare mutations where no call can be made about the clinical significance due to lack of data. That seems to be true only in a very small number of cases.

No, it really is. “Nonsense mutation” is a technical term. (I include frameshift mutations as nonsense mutations. I don’t know if this is standard. In any event, both frameshift and stop-codon mutations have dramatic effects, hardly dependent on the location on the gene. Maybe the importance of a stop mutation depends on the location, but it would be a monotone function of location and pretty easy to figure out.)

I know that nonsense mutation is a technical term, but it’s not enough to determine the clinical significance of a mutation in BRCA1 or BRCA2 gene. There are rare variants where no one knows whether they increase the risk of cancer or not.

1. You sure don’t act like you know it. You also don’t act like to know the difference between a sign error and a magnitude error. (nor your link)

2. Yes, there are lots of rare missense mutations on BRCA. I don’t know the sign of their effect, but I do know the magnitude: clinically irrelevant. Myriad has a database of missense mutations. They probably know more than other people about these mutations. Probably they are 75% correct about guessing the sign of the effect. But even if everything they claim is correct, they only claim that the effects are tiny and worthless, just like the public information about the SNP that 23andMe used. Except that they don’t use the word “worthless” but leave it to genetic counselors to give bad advice.

3. The people who should be using Myriad are the people who already know that they have aggressive breast cancer in their families. Like Angelina Jolie. Except that she got it from her Dutch grandmother, so it’s probably the common variant and she didn’t need sequencing. If in such a person you see a nonsense mutation, you can be pretty sure that it is the problem, even if nonsense mutations are ever safe. Ideally you get a sample from someone who did get aggressive breast cancer, not Angelina Jolie, but her mother, aunt, or grandmother. Then you know that there really is a problem.

It’s not true that you just have to classify a mutation as nonsense or not to determine whether it is of clinical significance. Here is a source:

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2928257/

“Most deleterious mutations introduce premature termination codons through small frameshift deletions or insertions, nonsense or splice junction alterations, or large deletions or duplications. ”

So nonsense mutations are a common cause of major problems, but just because a mutation is not nonsense doesn’t mean it can’t have a major negative effect.

Sorry, I made a big reading error. I read “where” in place of “whether.” So my last couple of comments are non sequiturs.

I claim that only big errors matter. I claim that Myriad’s proprietary algorithm is about small errors. That paper acknowledges that some variants are higher risk than others, but fails to really exhibit belief in it.

I agree that there are more kinds of big errors than nonsense errors. The important claim is that big errors are easy to recognize. In particular, all the errors in that quote are easy to recognize. Maybe skipping an exon is a medium size error that is easy to recognize but hard to evaluate. Maybe that is a place where there is real uncertainty, useful knowledge that could be acquired, and perhaps is held by Myriad.

“Fourth and fifth are two different meta-analyses of the above three studies, which is the highest study-to-meta-analysis ratio I’ve ever seen.”

Probably you mean “[…] lowest study-to-meta-analysis ratio […]”

Highest: Most under the influence of marijuana.

The study I want to see done: Recruit participants in a study. Get informed consent from all of them for reports from their doctors &etc, tell them that some will be in the treatment and some in the control group, and all of the above.

Then tell the control group that the experiment was at capacity. Have the experimental group get the test, but no change in intervention.

If that produces a 20% reduction in symptoms in the experimental group, then I would suggest offering genetic testing as treatment is effective.

I feel like “don’t waste time on things which are obviously bullshit” and “actually decide based on the evidence” are both useful skills, and I’ve yet to work out what to do when they seem to conflict. I have some intuition for which to follow, but not sufficient certainty to say confidently which to follow when.

In practice I find this conflict mostly reduces to the skill of “accurately judge the trustworthiness of interpreters and synthesizers of the evidence.” But I’m mostly a consumer for broad-but-shallow knowledge rather than a specialist in anything. Specialists should probably apply their own judgement much more, in their areas.

The advanced-level skills for knowledge consumers, which I haven’t gotten the hang of yet, are “efficiently seek and find the most trustworthy evidence-interpreters for a given issue”, and “deduce which issues are likely to reward a search for evidence-interpreters, as a function of insight payoff versus search costs.”

I basically still rely on other people to do those things for me, and am therefore grateful every time Scott does (and reports back so thoroughly, besides).

Let’s call a spade a shovel. We all know what that data looks like – because it was omitted. If it had come out the other way, it would be in the article.

Related:

“Let’s call a spade a shovel. We all know what that data looks like – because it was omitted. If it had come out the other way, it would be in the article.”

I don’t like making these kinds of accusations, because every time I write an article and try to include everything I think is important, someone in the comments accuses me of deliberately leaving out the strongest argument for the opposing side for sinister reasons. When they tell it to me, it usually turns out to be something I figured was so far down the list of possibilities that it wasn’t worth anyone’s time to talk about.

I quite agree you shouldn’t make such accusations overtly; they would make you look petty, personalise the subject and detract from your larger point. And how would you prove it? You have a reputation to maintain.

But yahoos like me in the comments section are able to make explicit the not very subtle subtext here. You can write “This is a very strange omission,” and I can say what I really think.

You don’t need that many genes to make it hard to figure out what is going on.

Besides the potential for each gene to have multiple alleles, the effect a particular gene has can depend on what the rest of the genome contains.

For what it’s worth, the current estimate of (protein-coding) genes in the human genome is around 20,000. I propose that as a conservative upper bound on the number of genes which might be involved in any trait.

I think usually people find that genetic effects are basically additive.

You mean the genetic effects people have detected are additive.

When getting the result involves doing an ANOVAR or similar, maybe that’s not surprising. All of the ‘non-additive’ effects effectively become noise in the variation within groups and give you the small detectable effects you observed in the literature in the first place.

Non-additive effects would be something like a mutation in a regulator which leads to reduced expression of the enzyme you care about. There may be dozens of relatively rare but individually important interactions you don’t detect when all you look at is the enzyme sequence.

In case it’s not clear I should point out that the upper bound of 20000 I mention above is tongue in cheek. I think it’s reasonable to say that for most measurable traits there will be a few genes with a relatively large effect, some which monkey around with those and a rapid tailing-off of importance.

“There’s a thing I always used to hate about the skeptic community.”

I’ve been saying for years – if you applied to standards of evidence that many in the skeptic community call for in essentially all situations, you simply couldn’t function as a human being. In many areas we’d be rendered completely inert because there simply doesn’t exist evidence that they consider robust. I mean if we were only allowed to teach using methods that we know from randomized controlled studies to be superior to alternative methods, we’d have almost nothing to do in the classroom because almost nothing survives research of that level of rigor.

It’s come up on the site before — the opinion that made the most sense to me went something like “no, the skeptics aren’t any better than anyone else at knowing what’s true. What they’re good at is exposing fraud that they know exists based on their community values”. (And this is still a valuable thing! There’s so much fraud out there.)

Think of them as an engine for generating disbelief in a fairly well-defined set of core values, not as an engine for generating knowledge that didn’t exist before.

To follow up on what was probably intended as just one random example of many:

I worked briefly on a team doing research synthesis of education RCTs. There are lots of problems, but one of the biggest is the control groups. There’s no standard control curriculum, but the control kids of course have to be getting some kind of education. The typical control is “practice as usual,” which could mean radically different things depending on region, school district, and individual teacher.

Worse, published reports are terrible about describing what the control group actually did. Authors aren’t that interested – they want to focus on describing their own intervention instead of what the control does – and may not even know in much detail. Trying to estimate effects over control, in a way that is comparable across studies, is a huge challenge when the control conditions are both highly variable and poorly described.

Exactly right.

Could it be that their test likes to assign certain exotic medications to the “red bin”. Being exotic, doctors only prescribe them to patients where everything else has failed and who desperately need something.

Interesting thought. Someone (maybe Carlat again) pointed out that there’s a lot of room for mischief if they do just put the same (presumably bad) medications in the red group for everybody. I was thinking this didn’t matter because there aren’t any globally bad antidepressants, but your way would definitely work too.

I feel like if this were the case, we’d just have to look for bimodal distributions when evaluating antidepressant effectiveness.

I think that’s sort of what the growth-model-trajectory paper is doing. I’m not sure why I don’t see people just using the words “bimodal distribution”, though.

At a guess, people don’t want to assert that it’s bimodal specifically?

There’s a similar claim saying that antidepressants only help people with very serious depression, and all the moderate cases only work by spontaneous recovery. If both of those narratives are true, we’d have one “good” bucket, two “not great” buckets, and one “hopeless” bucket.

Presumably there are lots of other similar assertions you could make, like talking about who responds to SSRIs versus NMRIs. So broad-spectrum studies find nothing, clinicians find something, but the number of buckets could be 2 or 32 or even more.

I want to examine the implications of this.

Given that systems can be gamed, we should expect that the above described pharmaceutical companies to attempt to game the system. Given no system, we should expect the above described companies to attempt to manipulate the market. We should also expect them to be successful if there is no coordinated attempt to stop them.

So, what does that mean for any coordinated attempt to stop them? It means that the system is not going to be charitable or even very efficient.

The reason the FDA “sucks” is because the market they are regulating “sucks” more.

I can certainly see the argument for this. Medical markets are famously irrational: people will pay arbitrary amounts of money and can’t assess results well, so spending doesn’t reflect preferences and information is badly asymmetric. Therefore, you need Draconian regulations just to cut down on deadly fraud a little bit.

But I think the counterpoint is that the FDA isn’t oppressively fighting fraud, it’s just sort of blundering around ruining things. Grandfathering drugs doesn’t make sense, setting equivalent evidence standards for redundant symptom managers and novel life-savers doesn’t make sense, barring international reciprocity doesn’t make sense, accepting studies with known flaws and no replications doesn’t make sense, and so on.

So we have a user-hostile, dishonest market which deserves our “smoldering hatred”. And then we have a risk-averse, regulatory-captured regulator adding random chaos and harm that can’t really be justified by the hatred.

It sounds to me like a whole lot more needs to be known about how the drugs behave in people’s bodies. Once that’s established, we’ll know more about which genes (and epigenetic changes, I bet) to look at.

cultivate the proper instincts to use when faced with pharmaceutical company studies, ie Cartesian doubt mixed with smoldering hatred

A dream career for me, matching my strengths and capabilities, if only I were mathematically able! 🙂

More broadly, the genomics approach – where, if any of you remember, Great Things were going to happen as soon as we mapped the human genome (like “personalised medical treatment targeted at an individual based on their genes”) – is partly why I’m sceptical on God-Emperor AI: reality is complicated, there are a lot of things that go with other things to cause third things which down the line cause sixteenth things, and every time we think we’ve cracked it and got one simple model that will let us do all this great stuff with a tweak here and a nudge there – it’s not that easy.

To be fair to the pharamcogenomics crowd, their idea is not crazy and it’s an avenue worth exploring, but I think we’re (for now) only going to get very broad/weak effects where “population with this gene will do slightly better on this drug and population with this gene will do slightly better on that”, which is better than nothing (at least if it saves the “take this drug – it’s not working – keep taking it – still not working – try a higher dose – nope, no good – okay, try this one instead” approach and we go straight to “try that one instead” first).

Just in my short lifetime, we’ve been promised medicine-redefining breakthroughs from stem cells, human genome sequencing, personalized genome sequencing, cloned organs, and nanoparticle drug delivery. CRISPR looks to be next. But the biggest actual breakthroughs have mostly been simple mechanical improvements to known processes (e.g. small-incision, robotic surgeries).

Certainly, I think we should assume that any new development which will be “important and ready soon” is going to hit horrible unforeseen issues, like primate cells proving way harder to clone than other mammal cells.

I’m not sure how you mean the AI connection, though? If you mean “it won’t happen as soon as people think”, I can see the shared pattern where things like the Dartmouth AI conference wrongly assumed that all the hard breakthroughs were done. If you mean the viability or eventual outcome of strong AI, I don’t really follow – unlike medical breakthroughs, we have solid proof that human-intelligence agents can be made (i.e. humans) and are just baffled about how to get there.

I’m not sure how you mean the AI connection, though?

Broadly in the sense that “holy cow, we figured out how to do this thing, huge immense changes to the world as we know it will shortly follow!”

And maybe they do (penicillin*) and maybe they don’t, but certainly not in any of the ways we expect they will. So I remain more convinced that the problem of AI will not be “rogue AI decides to turn all humans into paperclips” but “humans use AI to achieve an aim – generally doing down some opponent, rival or competitor – and unintended consequences follow”.

*e.g. in Samuel Delany’s “Nova” the taboo against sharing food directly from one mouth to another no longer exists, because disease no longer exists, because antibiotics have done away with all sickness. Yeah. Plainly superbugs and antibiotic resistance were not visualised as being a problem in the 60s.

“Suppose (say the pharmacogenomicists) that my individual genetics code for a normal CYP2D6, but a hyperactive CYP2C19 that works ten times faster than usual.”

I believe you meant for a “Not” to be inserted here: “my individual genetics code NOT for a normal CYP2D6, but a hyperactive CYP2C19… “ Unless I’m really mis-reading you.

Very interesting post. The problem of antidepressant pharmacogenomics seems like a sub-problem within the larger issue of the irreproducibility of candidate gene studies. In the 90’s/early 2000’s, there were hundreds of studies looking for associations between specific genes and individual phenotypes. People tested candidate genes that they thought might be associated with a trait (say, hormone receptors and sexuality), and published positive results linking single genes with homosexuality, intelligence, alcoholism, etc. However, about 15 years ago, it became much easier to do genome-wide association studies in 1000’s of people – and approximately none of these candidate genes previously found were reproduced in well-powered genome-wide cohorts. What the genome-wide studies found were dozens of genes each explaining 0.1%-1% of the variance in a trait, as Scott notes above. This led to what’s sometimes called the “dark matter” problem of human genetics – we know that intelligence is ~50% genetic, but using all of the markers we’ve found so far, we can only explain ~5% of the variance in intelligence by looking at a genome. We don’t know where the rest of the genetic variation comes from.

So, not having read the studies linked above but knowing a little about the progress of human genetics research, I agree with Scott’s skepticism that an 8 gene panel could accurately predict a very, very complex phenotype like anti-depressant response.

Promethease is based on SNPedia, and we’re very much in agreement with your analysis. We keep waiting for robust pharmacogenomics findings, and we will be more than happy to include them in SNPedia – and therefore in our $5 Promethease reports – when they come about.

So far, people learn more from their caffeine metabolism and lactose intolerance predictions than from “straight” PGx, although the increased myopathy risk for carriers of certain SLCO1B1 variants when taking statins seems pretty robust too.

And for readers of these comments (and article): feel free to point out your favorite replicated/robust PGx finding, especially if it’s not adequately represented in your view in SNPedia at the moment. We are always adding new information, and there’s bound to be good PGx info sooner or later … no?

Hey, thanks for everything you’re doing, you guys are great.

Do you think we’re at the point where someone can figure out their CP450 alleles from a 23andMe report? I tried to do it myself with Promethease last night and it looked like maybe if I’d spent a really long time learning exactly how to do it I could get a pretty good idea. But I was surprised that it wasn’t automatic on Promethease and I’m wondering whether it’s not possible with any decent level of accuracy.

Also, I see you list a lot of SNPs like “7x higher chance of responding to antidepressants”. I know you guys mostly just quote studies and don’t set yourselves up as gatekeepers, but do you think those kinds of things are plausible, or do you think it’s probably so polygenic that no individual SNP can matter much?

As you realize (and as discussed in SNPedia here), there are over 60 cytochrome genes classified into 14 different subfamilies.

We can figure out some of those 60, and that conclusion usually manifests itself as a ‘genoset‘, since it takes the data from multiple variants to determine one (let alone two) alleles. And an additional problem is that current DNA chip data is unphased, so we can’t know for sure when variants seen in the same gene are actually on the same allele.

As for being gatekeepers, we are a wiki, but we state our guidelines for the types of studies we strive to include in SNPedia in part as follows:

… Our emphasis is on SNPs and mutations that have significant medical or genealogical consequences and are reproducible (for example, the reported consequence has been independently replicated by at least one group besides the first group reporting the finding). These are typically found in meta-analyses, studies of at least 500 patients, replication studies including those looking at other populations, genome-wide significance thresholds of under 5 x 10e-8 for GWAS findings, and/or mutations with historic or proven medical significance. …

We’d love to have a single statistic indicating “primo data that is 99.9% likely to withstand the test of time” but we have to do the best we can with science publications as they exist today.

With respect to the discussion of titration, I think that the best-case scenario for gene testing is on the margin of what you are discussing. If you titrate the doses, you may end up in an equilibrium where the drugs are effective, but there are noticeable side effects so that it’s only just beating a cost-benefits analysis for the patient. It could be that at 40mg Prozac a patient has uncomfortable side effects but the Prozac is working so you stop titrating and stick with that. But maybe if you tried Zoloft you would find that they would have the same effectiveness with zero side effects which would be a huge win. Changing could be too risky with the titrating approach. If gene testing were actually effective then you wouldn’t have to worry about getting stuck in local maxima as much.

Separately, it seems weird that genes would account for such a small percentage of variation in drug metabolism. If not genes, there has to be something. Perhaps the next stage is doing association studies on gut biome variables with drug metabolism. Does anyone have anything to say on the state of that research effort?

I think describing the flaws you found in the LaCrosse study as “cracks” is significantly under-selling them. There were .77/.44=1.75x as many medication-changes in the intervention as in the control group, and an average QIDS-C16 improvement 44.8/26.4=1.7x as large. When I find a flaw that severe, I discount the study’s evidence-strength all the way to zero, and then discount it a little bit more, treating it as evidence against its conclusion.

Yeah, I don’t take that one too seriously. The Union Health one really bothers me, though. Sure, n=9 gives me an excuse to dismiss it, but I really want to know how they did it.

It’s really absurd they didn’t randomize their study. In my single grad course in Causal Inference the entire class revolved around the idea that you really really should always randomize, and if it’s impossible here are the econometric techniques that can hopefully get you closer to randomization.

It’s obviously great you went into it in more detail, but the fact that they didn’t randomize to me is sufficient to dismiss their evidence (conditional on the priors we know that anti-depressant signals are incredibly weak).

Where did you take this class (and in what department, econometrics?) Or did I ask you already?

—

I broadly agree with “always randomize if you can” thought, but you will still have non-compliance and dropout and all sorts of other things that will necessitate causal inference kung fu to deal with.

I took this one (http://www.lse.ac.uk/resources/calendar/courseGuides/MY/2016_MY457.htm).

I’d have loved to take more advanced stuff, but I’m stuck self-teaching, since I’m in the private sector now.

I’m just so surprised they didn’t randomize, and instead took the first 100 in Group A and second 100 in group B. It seems almost an unforgivably naive mistake, but perhaps there was some complicating factor I’m unaware of.

If you are still in London and have money:

http://www.lshtm.ac.uk/study/cpd/causal_inference.html

I thought the same thing, although I don’t know enough about the field to offer any suggestions. I do wonder if that is what the company is already doing. If they have a rich dataset and are using a forest method, I’d be willing to give them a little bit more benefit of the doubt than if they just have a regression with a bunch of interaction terms.

Still, I know nothing about the field.

From the National Education Alliance for Borderline Personality Disorder (NEA-BPD):

*-*-*-*-*-*-*-*-*

Borderline personality disorder (BPD) is a serious mental illness that centers on the inability to manage emotions effectively … Other disorders, such as depression, anxiety disorders, eating disorders, substance abuse and other personality disorders can often exist along with BPD … BPD affects 5.9% of adults (about 14 million Americans) at some time in their life …

Borderline personality disorder often occurs with other illnesses … Most co-morbidities are listed below, followed by the estimated percent of people with BPD who have them:

* Major Depressive Disorder – 60%

* Dysthymia (a chronic type of depression) – 70%

* Substance abuse – 35%

* Eating disorders (such as anorexia, bulimia, binge eating) – 25%

* Bipolar disorder – 15%

* Antisocial Personality Disorder – 25%

* Narcissistic Personality Disorder – 25%

* Self-Injury – 55%-85%

Research has shown that outcomes can be quite good for people with BPD, particularly if they are engaged in treatment … Talk therapy is usually the first choice of treatment (unlike some other illnesses where medication is often first). …

Dialectical behavior therapy (DBT) is the most studied treatment for BPD and the one shown to be most effective. … DBT teaches skills to control intense emotions, reduce self-destructive behavior, manage distress, and improve relationships. It seeks a balance between accepting and changing behaviors …

Medications cannot cure BPD but can help treat other conditions that often accompany BPD such as depression, impulsivity, and anxiety. …. Often patients are treated with several medications, but there is little evidence that this approach is necessary or effective.

*-*-*-*-*-*-*-*-*

The moral of this story seems pretty simple: use antidepressants to remedy synapse disorders; use therapy to remedy connectome disorders; be mindful in clinical practice that confusing these disorders and/or reversing these treatments is harmful.

Here’s a reasonable question: Given the high prevalence of BPD, can any pharmaceutical study be assessed as reliable that does not explicitly control for the (very many) patients for whom BPD is a credible primary diagnosis?

In other words, isn’t everyday life administering a long-term regimen of “dialectical behavior therapy” to pretty much every living person? Despite the (admitted) inconvenience and added complexity, isn’t BPD a clinically crucial variable, that requires careful experimental control, in pretty much any depression-related outcome study?

Most antidepressant studies exclude people with alternate diagnoses, including personality disorders. Although many people with BPD have depression, most people with depression don’t have BPD.

I think the distinction between “synapse disorders” and “connectome disorders” is way too speculative at this point to do anything with. For example, schizophrenia seems like both; schizophrenics have altered brain connectivity, but they also do a lot better on dopamine antagonists.

*-*-*-*-*-*-*-*-*

(from the OP) “Clinicians keep the patients who get good effects [by] switching drugs for the patients who get bad effects until they find something that works.”

(from above) “I think the distinction between “synapse disorders” and “connectome disorders” is way too speculative at this point to do anything with.”

*-*-*-*-*-*-*-*-*

Yet doesn’t the former (common) clinical practice help blind physicians to the everyday reality of the latter distinction?

The portion of patients for whom “drug-switching” fails is substantial (to say the least). It isn’t just physicians who (in the words of the OP) “feel kind of bad about this.”

Eva Candle,

I don’t see how you invoke connectomics as an “everyday reality.” At very best it’s an emerging domain.

Don’t get me wrong, connectomics is very exciting. Speaking personally, there is the potential for great strides within my profession (epilepsy). But vis-a-vis clinical practice, it remains a hypothetical.

Rahien.din, definitely I agree with you that in three great spheres of discourse—scientific, medical, and popular—the distinction between “synapse disorders” and “connectome disorders” is least widely embraced in the medical sphere.