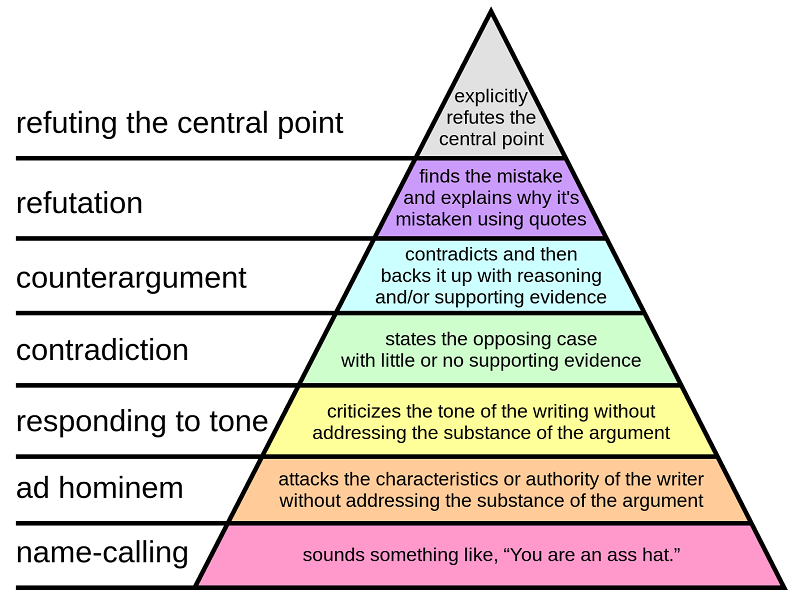

In 2008, Paul Graham wrote How To Disagree Better, ranking arguments on a scale from name-calling to explicitly refuting the other person’s central point.

And that’s why, ever since 2008, Internet arguments have generally been civil and productive.

Graham’s hierarchy is useful for its intended purpose, but it isn’t really a hierarchy of disagreements. It’s a hierarchy of types of response, within a disagreement. Sometimes things are refutations of other people’s points, but the points should never have been made at all, and refuting them doesn’t help. Sometimes it’s unclear how the argument even connects to the sorts of things that in principle could be proven or refuted.

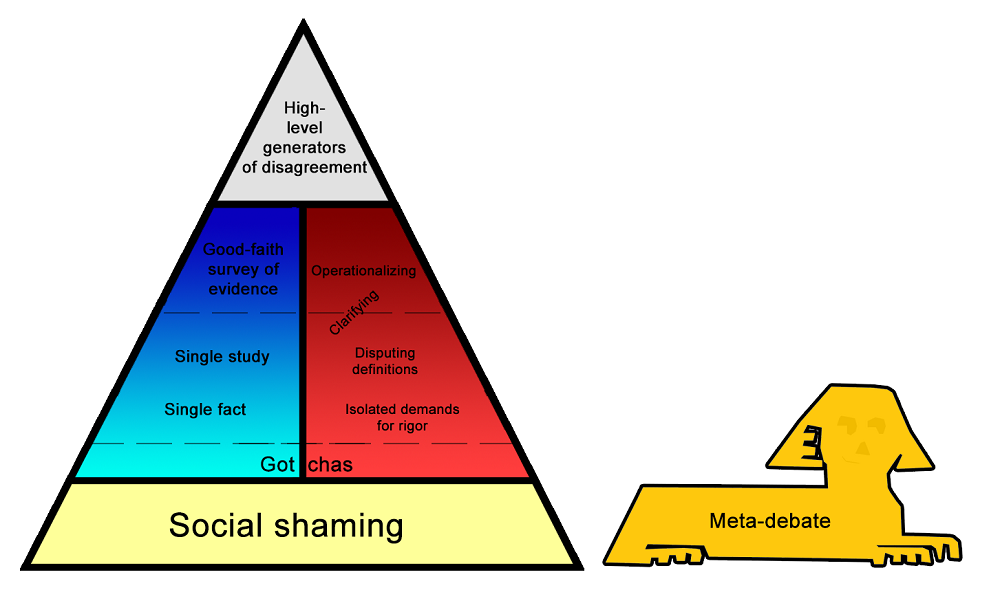

If we were to classify disagreements themselves – talk about what people are doing when they’re even having an argument – I think it would look something like this:

Most people are either meta-debating – debating whether some parties in the debate are violating norms – or they’re just shaming, trying to push one side of the debate outside the bounds of respectability.

If you can get past that level, you end up discussing facts (blue column on the left) and/or philosophizing about how the argument has to fit together before one side is “right” or “wrong” (red column on the right). Either of these can be anywhere from throwing out a one-line claim and adding “Checkmate, atheists” at the end of it, to cooperating with the other person to try to figure out exactly what considerations are relevant and which sources best resolve them.

If you can get past that level, you run into really high-level disagreements about overall moral systems, or which goods are more valuable than others, or what “freedom” means, or stuff like that. These are basically unresolvable with anything less than a lifetime of philosophical work, but they usually allow mutual understanding and respect.

I’m not saying everything fits into this model, or even that most things do. It’s just a way of thinking that I’ve found helpful. More detail on what I mean by each level:

Meta-debate is discussion of the debate itself rather than the ideas being debated. Is one side being hypocritical? Are some of the arguments involved offensive? Is someone being silenced? What biases motivate either side? Is someone ignorant? Is someone a “fanatic”? Are their beliefs a “religion”? Is someone defying a consensus? Who is the underdog? I’ve placed it in a sphinx outside the pyramid to emphasize that it’s not a bad argument for the thing, it’s just an argument about something completely different.

“Gun control proponents are just terrified of guns, and if they had more experience with them their fear would go away.”

“It was wrong for gun control opponents to prevent the CDC from researching gun statistics more thoroughly.”

“Senators who oppose gun control are in the pocket of the NRA.”

“It’s insensitive to start bringing up gun control hours after a mass shooting.”

Sometimes meta-debate can be good, productive, or necessary. For example, I think discussing “the origins of the Trump phenomenon” is interesting and important, and not just an attempt to bulverize the question of whether Trump is a good president or not. And if you want to maintain discussion norms, sometimes you do have to have discussions about who’s violating them. I even think it can sometimes be helpful to argue about which side is the underdog.

But it’s not the debate, and also it’s much more fun than the debate. It’s an inherently social question, the sort of who’s-high-status and who’s-defecting-against-group-norms questions that we like a little too much. If people have to choose between this and some sort of boring scientific question about when fetuses gain brain function, they’ll choose this every time; given the chance, meta-debate will crowd out everything else.

The other reason it’s in the sphinx is because its proper function is to guard the debate. Sure, you could spend your time writing a long essay about why creationists’ objections to radiocarbon dating are wrong. But the meta-debate is what tells you creationists generally aren’t good debate partners and you shouldn’t get involved.

Social shaming also isn’t an argument. It’s a demand for listeners to place someone outside the boundary of people who deserve to be heard; to classify them as so repugnant that arguing with them is only dignifying them. If it works, supporting one side of an argument imposes so much reputational cost that only a few weirdos dare to do it, it sinks outside the Overton Window, and the other side wins by default.

“I can’t believe it’s 2018 and we’re still letting transphobes on this forum.”

“Just another purple-haired SJW snowflake who thinks all disagreement is oppression.”

“Really, do conservatives have any consistent beliefs other than hating black people and wanting the poor to starve?”

“I see we’ve got a Silicon Valley techbro STEMlord autist here.”

Nobody expects this to convince anyone. That’s why I don’t like the term “ad hominem”, which implies that shamers are idiots who are too stupid to realize that calling someone names doesn’t refute their point. That’s not the problem. People who use this strategy know exactly what they’re doing and are often quite successful. The goal is not to convince their opponents, or even to hurt their opponent’s feelings, but to demonstrate social norms to bystanders. If you condescendingly advise people that ad hominem isn’t logically valid, you’re missing the point.

when you do sutuff like… shoot my jaw clean off of my face with a sniper rifle, it mostly reflects poorly on your self

— wint (@dril) September 23, 2016

Sometimes the shaming works on a society-wide level. More often, it’s an attempt to claim a certain space, kind of like the intellectual equivalent of a gang sign. If the Jets can graffiti “FUCK THE SHARKS” on a certain bridge, but the Sharks can’t get away with graffitiing “NO ACTUALLY FUCK THE JETS” on the same bridge, then almost by definition that bridge is in the Jets’ territory. This is part of the process that creates polarization and echo chambers. If you see an attempt at social shaming and feel triggered, that’s the second-best result from the perspective of the person who put it up. The best result is that you never went into that space at all. This isn’t just about keeping conservatives out of socialist spaces. It’s also about defining what kind of socialist the socialist space is for, and what kind of ideas good socialists are or aren’t allowed to hold.

I think easily 90% of online discussion is of this form right now, including some long and carefully-written thinkpieces with lots of citations. The point isn’t that it literally uses the word “fuck”, the point is that the active ingredient isn’t persuasiveness, it’s the ability to make some people feel like they’re suffering social costs for their opinion. Even really good arguments that are persuasive can be used this way if someone links them on Facebook with “This is why I keep saying Democrats are dumb” underneath it.

This is similar to meta-debate, except that meta-debate can sometimes be cooperative and productive – both Trump supporters and Trump opponents could in theory work together trying to figure out the origins of the “Trump phenomenon” – and that shaming is at least sort of an attempt to resolve the argument, in a sense.

Gotchas are short claims that purport to be devastating proof that one side can’t possibly be right.

“If you like big government so much, why don’t you move to Cuba?”

“Isn’t it ironic that most pro-lifers are also against welfare and free health care? Guess they only care about babies until they’re born.”

“When guns are outlawed, only outlaws will have guns.”

These are snappy but almost always stupid. People may not move to Cuba because they don’t want government that big, because governments can be big in many ways some of which are bad, because governments can vary along dimensions other than how big they are, because countries can vary along dimensions other than what their governments are, or just because moving is hard and disruptive.

They may sometimes suggest what might, with a lot more work, be a good point. For example, the last one could be transformed into an argument like “Since it’s possible to get guns illegally with some effort, and criminals need guns to commit their crimes and are comfortable with breaking laws, it might only slightly decrease the number of guns available to criminals. And it might greatly decrease the number of guns available to law-abiding people hoping to defend themselves. So the cost of people not being able to defend themselves might be greater than the benefit of fewer criminals being able to commit crimes.” I don’t think I agree with this argument, and I might challenge assumptions like “criminals aren’t that much less likely to have guns if they’re illegal” or “law-abiding gun owners using guns in self-defense is common and an important factor to include in our calculations”. But this would be a reasonable argument and not just a gotcha. The original is a gotcha exactly because it doesn’t invite this level of analysis or even seem aware that it’s possible. It’s not saying “calculate the value of these parameters, because I think they work out in a way where this is a pretty strong argument against controlling guns”. It’s saying “gotcha!”.

Single facts are when someone presents one fact, which admittedly does support their argument, as if it solves the debate in and of itself. It’s the same sort of situation as one of the better gotchas – it could be changed into a decent argument, with work. But presenting it as if it’s supposed to change someone’s mind in and of itself is naive and sort of an aggressive act.

“The UK has gun control, and the murder rate there is only a quarter of ours.”

“The USSR was communist and it was terrible.”

“Donald Trump is known to have cheated his employees and subcontractors.”

“Hillary Clinton handled her emails in a scandalously incompetent manner and tried to cover it up.”

These are all potentially good points, with at least two caveats. First, correlation isn’t causation – the UK’s low murder rates might not be caused by their gun control, and maybe not all communist countries inevitably end up like the USSR. Second, even things with some bad features can be overall net good. Trump could be a dishonest businessman, but still have other good qualities. Hillary Clinton may be crap at email security, but skilled at other things. Even if these facts are true and causal, they only prove that a plan has at least one bad quality. At best they would be followed up by an argument for why this is really important.

I think the move from shaming to good argument is kind of a continuum. This level is around the middle. At some point, saying “I can’t believe you would support someone who could do that with her emails!” is just trying to bait Hillary supporters. And any Hillary supporter who thinks it’s really important to argue specifics of why the emails aren’t that bad, instead of focusing on the bigger picture, is taking the bait, or getting stuck in this mindset where they feel threatened if they admit there’s anything bad about Hillary, or just feeling too defensive.

Single studies are better than scattered facts since they at least prove some competent person looked into the issue formally.

“This paper from Gary Kleck shows that more guns actually cause less crime.”

“These people looked at the evidence and proved that support for Trump is motivated by authoritarianism.”

“I think you’ll find economists have already investigated this and that the minimum wage doesn’t cost jobs.”

“There are actually studies proving that money doesn’t influence politics.”

We’ve already discussed this here before. Scientific studies are much less reliable guides to truth than most people think. On any controversial issue, there are usually many peer-reviewed studies supporting each side. Sometimes these studies are just wrong. Other times they investigate a much weaker subproblem but get billed as solving the larger problem.

There are dozens of studies proving the minimum wage does destroy jobs, and dozens of studies proving it doesn’t. Probably it depends a lot on the particular job, the size of the minimum wage, how the economy is doing otherwise, etc, etc, etc. Gary Kleck does have a lot of studies showing that more guns decrease crime, but a lot of other criminologists disagree with him. Both sides will have plausible-sounding reasons for why the other’s studies have been conclusively debunked on account of all sorts of bias and confounders, but you will actually have to look through those reasons and see if they’re right.

Usually the scientific consensus on subjects like these will be as good as you can get, but don’t trust that you know the scientific consensus unless you have read actual well-conducted surveys of scientists in the field. Your echo chamber telling you “the scientific consensus agrees with us” is definitely not sufficient.

A good-faith survey of evidence is what you get when you take all of the above into account, stop trying to devastate the other person with a mountain of facts that can’t possibly be wrong, and start looking at the studies and arguments on both sides and figuring out what kind of complex picture they paint.

“Of the meta-analyses on the minimum wage, three seem to suggest it doesn’t cost jobs, and two seem to suggest it does. Looking at the potential confounders in each, I trust the ones saying it doesn’t cost jobs more.”

“The latest surveys say more than 97% of climate scientists think the earth is warming, so even though I’ve looked at your arguments for why it might not be, I think we have to go with the consensus on this one.”

“The justice system seems racially biased at the sentencing stage, but not at the arrest or verdict stages.”

“It looks like this level of gun control would cause 500 fewer murders a year, but also prevent 50 law-abiding gun owners from defending themselves. Overall I think that would be worth it.”

Isolated demands for rigor are attempts to demand that an opposing argument be held to such strict invented-on-the-spot standards that nothing (including common-sense statements everyone agrees with) could possibly clear the bar.

“You can’t be an atheist if you can’t prove God doesn’t exist.”

“Since you benefit from capitalism and all the wealth it’s made available to you, it’s hypocritical for you to oppose it.”

“Capital punishment is just state-sanctioned murder.”

“When people still criticize Trump even though the economy is doing so well, it proves they never cared about prosperity and are just blindly loyal to their party.”

The first is wrong because you can disbelieve in Bigfoot without being able to prove Bigfoot doesn’t exist – “you can never doubt something unless you can prove it doesn’t exist” is a fake rule we never apply to anything else. The second is wrong because you can be against racism even if you are a white person who presumably benefits from it; “you can never oppose something that benefits you” is a fake rule we never apply to anything else. The third is wrong because eg prison is just state-sanctioned kidnapping; “it is exactly as wrong for the state to do something as for a random criminal to do it” is a fake rule we never apply to anything else. The fourth is wrong because Republicans have also been against leaders who presided over good economies and presumably thought this was a reasonable thing to do; “it’s impossible to honestly oppose someone even when there’s a good economy” is a fake rule we never apply to anything else.

Sometimes these can be more complicated and ambiguous. One could argue that “Banning abortion is unconscionable because it denies someone the right to do what they want with their own body” is an isolated demand for rigor, given that we ban people from selling their organs, accepting unlicensed medical treatments, using illegal drugs, engaging in prostitution, accepting euthanasia, and countless other things that involve telling them what to do with their bodies – “everyone has a right to do what they want with their own bodies” is a fake rule we never apply to anything else. Other people might want to search for ways that the abortion case is different, or explore what we mean by “right to their own body” more deeply. Proposed without this deeper analysis, I don’t think the claim would rise much above this level.

I don’t think these are necessarily badly-intentioned. We don’t have a good explicit understanding of what high-level principles we use, and tend to make them up on the spot to fit object-level cases. But here they act to derail the argument into a stupid debate over whether it’s okay to even discuss the issue without having 100% perfect impossible rigor. The solution is exactly the sort of “proving too much” arguments in the last paragraph. Then you can agree to use normal standards of rigor for the argument and move on to your real disagreements.

These are related to fully general counterarguments like “sorry, you can’t solve every problem with X”, though usually these are more meta-debate than debate.

Sometimes isolated demands for rigor can be rescued by making them much more complicated; for example, I can see somebody explaining why kidnapping becomes acceptable when the state does it but murder doesn’t – but you’ve got to actually make the argument, and don’t be surprised if other people don’t find it convincing. Other times these work not as rules but as heuristics – for example “let people do what they want with their body in the absence of very compelling arguments otherwise” – and if those heuristics survive someone else challenging whether banning unlicensed medical treatment is really that much more compelling than banning abortion, they usually end up as high-level generators of disagreement (see below).

Disputing definitions is when an argument hinges on the meaning of words, or whether something counts as a member of a category or not.

“Transgender is a mental illness.”

“The Soviet Union wasn’t really communist.”

“Wanting English as the official language is racist.”

“Abortion is murder.”

“Nobody in the US is really poor, by global standards.”

It might be important on a social basis what we call these things; for example, the social perception of transgender might shift based on whether it was commonly thought of as a mental illness or not. But if a specific argument between two people starts hinging on one of these questions, chances are something has gone wrong; neither factual nor moral questions should depend on a dispute over the way we use words. This Guide To Words is a long and comprehensive resource about these situations and how to get past them into whatever the real disagreement is.

Clarifying is when people try to figure out exactly what their opponent’s position is.

“So communists think there shouldn’t be private ownership of factories, but there might still be private ownership of things like houses and furniture?”

“Are you opposed to laws saying that convicted felons can’t get guns? What about laws saying that there has to be a waiting period?”

“Do you think there can ever be such a thing as a just war?”

This can sometimes be hostile and counterproductive. I’ve seen too many arguments degenerate into some form of “So you’re saying that rape is good and we should have more of it, are you?” No. Nobody is ever saying that. If someone thinks the other side is saying that, they’ve stopped doing honest clarification and gotten more into the performative shaming side.

But there are a lot of misunderstandings about people’s positions. Some of this is because the space of things people can believe is very wide and it’s hard to understand exactly what someone is saying. More of it is because partisan echo chambers can deliberately spread misrepresentations or cliched versions of an opponent’s arguments in order to make them look stupid, and it takes some time to realize that real opponents don’t always match the stereotype. And sometimes it’s because people don’t always have their positions down in detail themselves (eg communists’ uncertainty about what exactly a communist state would look like). At its best, clarification can help the other person notice holes in their own opinions and reveal leaps in logic that might legitimately deserve to be questioned.

Operationalizing is where both parties understand they’re in a cooperative effort to fix exactly what they’re arguing about, where the goalposts are, and what all of their terms mean.

“When I say the Soviet Union was communist, I mean that the state controlled basically all of the economy. Do you agree that’s what we’re debating here?”

“I mean that a gun buyback program similar to the one in Australia would probably lead to less gun crime in the United States and hundreds of lives saved per year.”

“If the US were to raise the national minimum wage to $15, the average poor person would be better off.”

“I’m not interested in debating whether the IPCC estimates of global warming might be too high, I’m interested in whether the real estimate is still bad enough that millions of people could die.”

An argument is operationalized when every part of it has either been reduced to a factual question with a real answer (even if we don’t know what it is), or when it’s obvious exactly what kind of non-factual disagreement is going on (for example, a difference in moral systems, or a difference in intuitions about what’s important).

The Center for Applied Rationality promotes double-cruxing, a specific technique that helps people operationalize arguments. A double-crux is a single subquestion where both sides admit that if they were wrong about the subquestion, they would change their mind. For example, if Alice (gun control opponent) would support gun control if she knew it lowered crime, and Bob (gun control supporter) would oppose gun control if he knew it would make crime worse – then the only thing they have to talk about is crime. They can ignore whether guns are important for resisting tyranny. They can ignore the role of mass shootings. They can ignore whether the NRA spokesman made an offensive comment one time. They just have to focus on crime – and that’s the sort of thing which at least in principle is tractable to studies and statistics and scientific consensus.

Not every argument will have double-cruxes. Alice might still oppose gun control if it only lowered crime a little, but also vastly increased the risk of the government becoming authoritarian. A lot of things – like a decision to vote for Hillary instead of Trump – might be based on a hundred little considerations rather than a single debatable point.

But at the very least, you might be able to find a bunch of more limited cruxes. For example, a Trump supporter might admit he would probably vote Hillary if he learned that Trump was more likely to start a war than Hillary was. This isn’t quite as likely to end the whole disagreement in a fell swoop – but it still gives a more fruitful avenue for debate than the usual fact-scattering.
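For the programmers in the audience, the double-crux idea can be sketched as a set intersection. This is only a toy illustration, not CFAR's actual procedure: the `Debater` class, the `cruxes` field, and the example subquestions are all hypothetical names I made up, and real cruxes are rarely this clean.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Debater:
    """A participant, plus the subquestions that would change their mind (hypothetical model)."""
    name: str
    cruxes: FrozenSet[str]


def double_cruxes(a: Debater, b: Debater) -> FrozenSet[str]:
    """Subquestions where BOTH sides admit being wrong would flip their position."""
    return a.cruxes & b.cruxes


def limited_cruxes(a: Debater, b: Debater) -> FrozenSet[str]:
    """Subquestions that would move only one side: still useful, but less decisive."""
    return a.cruxes ^ b.cruxes


# Illustrative inputs loosely based on the Alice and Bob example above.
alice = Debater("Alice", frozenset({"effect on crime", "risk of authoritarian government"}))
bob = Debater("Bob", frozenset({"effect on crime"}))

print(sorted(double_cruxes(alice, bob)))
print(sorted(limited_cruxes(alice, bob)))
```

The intersection is what the two of them should argue about first; the leftovers are the more limited cruxes that only move one side.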

High-level generators of disagreement are what remains when everyone understands exactly what’s being argued, and agrees on what all the evidence says, but still has vague and hard-to-define reasons for disagreeing anyway. In retrospect, these are probably why the disagreement arose in the first place, with a lot of the more specific points being downstream of them and kind of made-up justifications. These are almost impossible to resolve even in principle.

“I feel like a populace that owns guns is free and has some level of control over its own destiny, but that if they take away our guns we’re pretty much just subjects and have to hope the government treats us well.”

“Yes, there are some arguments for why this war might be just, and how it might liberate people who are suffering terribly. But I feel like we always hear this kind of thing and it never pans out. And every time we declare war, that reinforces a culture where things can be solved by force. I think we need to take an unconditional stance against aggressive war, always and forever.”

“Even though I can’t tell you how this regulation would go wrong, in past experience a lot of well-intentioned regulations have ended up backfiring horribly. I just think we should have a bias against solving all problems by regulating them.”

“Capital punishment might decrease crime, but I draw the line at intentionally killing people. I don’t want to live in a society that does that, no matter what its reasons.”

Some of these involve what social signal an action might send; for example, even a just war might have the subtle effect of legitimizing war in people’s minds. Others involve cases where we expect our information to be biased or our analysis to be inaccurate; for example, if past regulations that seemed good have gone wrong, we might expect the next one to go wrong even if we can’t think of arguments against it. Others involve differences in very vague and long-term predictions, like whether it’s reasonable to worry about the government descending into tyranny or anarchy. Others involve fundamentally different moral systems, like if it’s okay to kill someone for a greater good. And the most frustrating involve chaotic and uncomputable situations that have to be solved by metis or phronesis or similar-sounding Greek words, where different people’s Greek words give them different opinions.

You can always try debating these points further. But these sorts of high-level generators are usually formed from hundreds of different cases and can’t easily be simplified or disproven. Maybe the best you can do is share the situations that led to you having the generators you do. Sometimes good art can help.

The high-level generators of disagreement can sound a lot like really bad and stupid arguments from previous levels. “We just have fundamentally different values” can sound a lot like “You’re just an evil person”. “I’ve got a heuristic here based on a lot of other cases I’ve seen” can sound a lot like “I prefer anecdotal evidence to facts”. And “I don’t think we can trust explicit reasoning in an area as fraught as this” can sound a lot like “I hate logic and am going to do whatever my biases say”. If there’s a difference, I think it comes from having gone through all the previous steps – having confirmed that the other person knows as much as you do, and that you might be intellectual equals who are both equally concerned about doing the moral thing – and realizing that both of you alike are controlled by high-level generators. High-level generators aren’t biases in the sense of mistakes. They’re the strategies everyone uses to guide themselves in uncertain situations.

This doesn’t mean everyone is equally right and okay. You’ve reached this level when you agree that the situation is complicated enough that a reasonable person with reasonable high-level generators could disagree with you. If 100% of the evidence supports your side, and there’s no reasonable way that any set of sane heuristics or caveats could make someone disagree, then (unless you’re missing something) your opponent might just be an idiot.

Some thoughts on the overall arrangement:

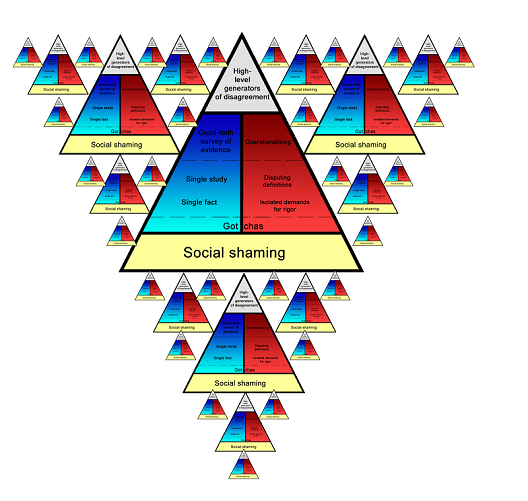

1. If anybody in an argument is operating on a low level, the entire argument is now on that low level. First, because people will feel compelled to refute the low-level point before continuing. Second, because we’re only human, and if someone tries to shame/gotcha you, the natural response is to try to shame/gotcha them back.

2. The blue column on the left is factual disagreements; the red column on the right is philosophical disagreements. The highest level you’ll be able to get to is the lowest of where you are on the two columns.

3. Higher levels require more vulnerability. If you admit that the data are mixed but seem to slightly favor your side, and your opponent says that every good study ever has always favored his side plus also you are a racist communist – well, you kind of walked into that one. In particular, exploring high-level generators of disagreement requires a lot of trust, since someone who is at all hostile can easily frame this as “See! He admits that he’s biased and just going off his intuitions!”

4. If you hold the conversation in private, you’re almost guaranteed to avoid everything below the lower dotted line. Everything below that is a show put on for spectators.

5. If you’re intelligent, decent, and philosophically sophisticated, you can avoid everything below the higher dotted line. Everything below that is either a show or some form of mistake; everything above it is impossible to avoid no matter how great you are.

6. The shorter and more public the medium, the more pressure there is to stick to the lower levels. Twitter is great for shaming, but it’s almost impossible to have a good-faith survey of evidence there, or use it to operationalize a tricky definitional question.

7. Sometimes the high-level generators of disagreement are other, even more complicated questions. For example, a lot of people’s views come from their religion. Now you’ve got a whole different debate.

8. And a lot of the facts you have to agree on in a survey of the evidence are also complicated. I once saw a communism vs. capitalism argument degenerate into a discussion of whether government works better than private industry, then whether NASA was better than SpaceX, then whether some particular NASA rocket engine design was better than a corresponding SpaceX design. I never did learn if they figured out whose rocket engine was better, or whether that helped them solve the communism vs. capitalism question. But it seems pretty clear that the degeneration into subquestions and discovery of superquestions can go on forever. This is the stage a lot of discussions get bogged down in, and one reason why pruning techniques like double-cruxes are so important.

9. Try to classify arguments you see in the wild on this system, and you find that some fit and others don’t. But the main thing you find is how few real arguments there are. This is something I tried to hammer in during the last election, when people were complaining “Well, we tried to debate Trump supporters, they didn’t change their mind, guess reason and democracy don’t work”. Arguments above the first dotted line are rare; arguments above the second basically nonexistent in public unless you look really hard.

But what’s the point? If you’re just going to end up at the high-level generators of disagreement, why do all the work?

First, because if you do it right you’ll end up respecting the other person. Going through all the motions might not produce agreement, but it should produce the feeling that the other person came to their belief honestly, isn’t just stupid and evil, and can be reasoned with on other subjects. The natural tendency is to assume that people on the other side just don’t know (or deliberately avoid knowing) the facts, or are using weird perverse rules of reasoning to ensure they get the conclusions they want. Go through the whole process, and you will find some ignorance, and you will find some bias, but they’ll probably be on both sides, and the exact way they work might surprise you.

Second, because – and this is total conjecture – this deals a tiny bit of damage to the high-level generators of disagreement. I think of these as Bayesian priors; you’ve looked at a hundred cases, all of them have been X, so when you see something that looks like not-X, you can assume you’re wrong – see the example above where the libertarian admits there is no clear argument against this particular regulation, but is wary enough of regulations to suspect there’s something they’re missing. But in this kind of math, the prior shifts the perception of the evidence, but the evidence also shifts the perception of the prior.

Imagine that, throughout your life, you’ve learned that UFO stories are fakes and hoaxes. Some friend of yours sees a UFO, and you assume (based on your priors) that it’s probably fake. They try to convince you. They show you the spot in their backyard where it landed and singed the grass. They show you the mysterious metal object they took as a souvenir. It seems plausible, but you still have too much of a prior on UFOs being fake, and so you assume they made it up.

Now imagine another friend has the same experience, and also shows you good evidence. And you hear about someone the next town over who says the same thing. After ten or twenty of these, maybe you start wondering if there’s something to all of these UFO stories. Your overall skepticism of UFOs has made you dismiss each particular story, but each story has also dealt a little damage to your overall skepticism.

I think the high-level generators might work the same way. The libertarian says “Everything I’ve learned thus far makes me think government regulations fail.” You demonstrate what looks like a successful government regulation. The libertarian doubts, but also becomes slightly more receptive to the possibility of those regulations occasionally being useful. Do this a hundred times, and they might be more willing to accept regulations in general.
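To make the UFO arithmetic concrete, here is a minimal sketch in Python. Every number in it is an assumption chosen for illustration, not something from the argument above: a starting belief of 0.1% that UFO stories are real, a likelihood ratio of 1.5 per story (each story is only 1.5 times as likely if UFOs are real as if they are hoaxes), and stories treated as independent of one another. The helper name `update` is also made up.

```python
def update(belief, likelihood_ratio):
    """One Bayesian update in odds form: posterior odds = prior odds * likelihood ratio."""
    prior_odds = belief / (1.0 - belief)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)


# Assumed numbers, chosen only for illustration.
PRIOR = 0.001            # initial belief that UFO stories are real
LIKELIHOOD_RATIO = 1.5   # each story is weak evidence on its own
N_STORIES = 20

# history[n] is the belief after hearing n stories.
history = [PRIOR]
for _ in range(N_STORIES):
    history.append(update(history[-1], LIKELIHOOD_RATIO))

for n in (0, 1, 5, 10, 20):
    print(f"after {n:2d} stories: belief = {history[n]:.4f}")
```

Under these assumed numbers, one story barely moves the belief, which is why dismissing it feels reasonable, but twenty of them push it past even odds. If the stories are not really independent (say, everyone watched the same TV special), the effective likelihood ratio per story would be much closer to 1 and the drift much slower.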

As the old saying goes, “First they ignore you, then they laugh at you, then they fight you, then they fight you half-heartedly, then they’re neutral, then they grudgingly say you might have a point even though you’re annoying, then they say on balance you’re mostly right although you ignore some of the most important facets of the issue, then you win.”

I notice SSC commenter John Nerst is talking about a science of disagreement and has set up a subreddit for discussing it. I only learned about it after mostly finishing this post, so I haven’t looked into it as much as I should, but it might make good followup reading.

Tangential, but I think Graham’s original hierarchy needs some revision. I’ll copypaste from where I wrote about this earlier:

I would like to suggest two revisions to the disagreement hierarchy.

Revision 1: Add level DH4.5: Nonconstructive refutation.

The archetypical example of refuting an argument is finding a hole in it — “Your inference of P is unjustified given only what you’ve established so far.” (Or, better yet, “Your inference of P is unjustified given only what you’ve established so far; indeed, here is an example where what you’ve established so far holds, but P does not.”) But it’s possible to show an argument wrong without actually finding a hole in it. The classic example is showing that an argument proves too much. If an argument proves too much, you can conclude that it’s wrong — but you still don’t necessarily know exactly why it’s wrong. It’s still a form of refutation and should be above counterargument, but it’s not as good as a constructive refutation.

Revision 2: Replace DH6, “Refuting the central point”, with “Refutation and counterargument”.

“Refuting the central point” doesn’t really strike me as qualitatively different from “refutation”. Honestly to my mind, if you’re refuting some peripheral thing, that hardly even counts. When I argue I like to spot the other person lots of points because I want to get to the central disagreement as quickly as possible; arguing over peripheral stuff is mostly a waste of time. Of course, sometimes peripheral stuff becomes central later, but you can always un-spot a point.

Anyway, point is, what is qualitatively different is refuting and counterarguing. If you only refute but you don’t counterargue, all you’ve established is that the other person’s argument is wrong — not that your own position is right! Refutation does not automatically include counterargument, and I think this is worth singling out a separate higher level.

Let’s clarify something: outside, in the big room with the blue ceiling called meatspace, pretty much all high school lunch room debates are about social shaming and there is not much else. If you demand rigor, you are a dork and you lost. In fact, most people participating in them are probably not even aware of the higher levels.

When the Internet began, it was a highly intellectual space. Think UseNet, mostly professors. Of course the standards were high.

On the other hand, Twitter is now not much different from the high school lunchroom.

Blogs are in between. Or Reddit.

You should be aware where you are. Never demand rigor on Twitter. *rolls eyes* is a good argument on Twitter. It is literally the same as rolling eyes in the high school lunchroom.

Still remember the person whose idea of contributing to an online discussion was “You’re RUDE” — for asking someone to back up what she said.

I propose this pyramid (or a revised version thereof) be posted at the start of all SSC comment threads.

I wonder if there might be use in a study of how disagreements, responses, and rhetorical techniques (like Schopenhauer’s guide) intersect. And how to bump a discussion that’s falling down the pyramids back up using rhetorical techniques.

Unfortunately, it’s not as easy to “grok” as the one by Graham. Needs reading the whole article.

Offtopic, do you know of anything similar to Schopenhauer’s guide?

What if people could denote a background shade color for their posts, with one color for meta-arguments, and another color for the actual argument? Unless you are near the top of a chain you usually shouldn’t change color from the comment above you.

I feel it would probably fail because these things always fail. Maybe this comment should be colored with “High-level generators of disagreement.”

This comment would be colored with high-level generators of disagreement, I think.

I don’t think forcing this scheme onto every slatestarcodex comment is a good idea. It would make the comments less welcoming to newcomers. Plus, not every comment needs to be a structured debate. Perhaps this would be something people decide to do when debating. I think one difficulty is accurately assessing the color in question. Everyone wants to think they are getting at the root of the issue, but often, we’re not.

Let me go on record as saying that this is perhaps the worst idea in the universe, not because the pyramid is incorrect, but because it will create tons and tons of meta-debate.

You know how sometimes, people will look at an argument, accuse it of one “fallacy”, and then leave it at that? “Correlation does not imply causation, your argument is invalid!” This is a terrible form of argument, closer to meta-debate than true debate. (Debate would go something more like “correlation can suggest causation, but there are lots of confounders here, and I’m wondering if you can back up what seems like an important assertion of causation more fully, or else make your argument without leaning on it.”)

Now imagine everyone having an easy reference for accusing one another of meta-debating, or using single facts or single studies, or not rising above the level of “disputing definitions”. And then imagine them disagreeing about whether that’s really what’s going on, and arguing about that instead of the actual point. I don’t want to see an SSC where that’s normalized.

If people were perfect debaters, then they would be able to use the pyramid well, and it wouldn’t create more meta-debate; but then again, if they were perfect debaters, they wouldn’t have to use the pyramid at all. We’re not perfect, which is why we fuck up in the first place; and even admitting that the pyramid is correct, I think it might haunt the comment section of this blog for years to come by creating lots and lots of meta-debate about who is arguing at what level of the pyramid.

To make it the worst idea in the universe, you’d need to reframe the pyramid as a Bingo card.

All right, fine, you win XD

I feel that since people meta debate about what level of the pyramid they are on already, it might speed those sub debates up a bit.

Of course by the time you accept this diagram is valid you are likely at least a third of the way up it.

I’m pretty sure most SSC debates need the sphinx more than the pyramid.

If there’s one thing I learnt in my previous job, it’s that when I disagree with the opinion of an intelligent co-worker, it’s worth asking for clarification. Very often the disagreement is simply because of communication failure, that is, I misunderstood what the co-worker was saying, and after clarifying the details, it turns out we’re actually of mostly the same opinion, but phrasing it differently.

I’ve been amazed at how much a few reflective listening skills can improve a conversation. Just to stop long enough to say “Here’s what I think you’re saying, is that right?” before diving in with one’s opinion goes a really long way in a potential disagreement.

It struck me that what Scott described as “Clarifying” seems to be the de facto bar between low and high quality discourse, but I’ve never seen it explicitly described as such before now, or called out as a requirement for participation. For example, many fora have adopted some variation of the Principle of Charity as a local guideline (e.g. Hacker News: “Please respond to the strongest plausible interpretation of what someone says, not a weaker one that’s easier to criticize”) but I’ve never seen one that goes on to say, “…and also paraphrase their point, and ask them if that is indeed what they are arguing.”

It seems like a super-weapon, good for combatting both intentional mischaracterization (straw-men, by far the most common example of unproductive argumentation I see online) and honest misunderstandings. In particular, I’m wondering about the Vinay Gupta thread – some of the ingredients that led to that thread going off the rails seem like they could’ve been cleared up with good rhetorical hygiene. I wonder if a strong norm around explicit Clarifying, say by prefacing all combative comments with “It sounds like you’re saying X, is that right? If so, you’re wrong because….”, would’ve led to a better outcome?

I like it in principle, but it’s easy to do badly. If you do it badly, it comes across as “It sounds like you want to kick babies, is that right?”, and that can make the argument go downhill even faster than not trying.

One of the things that brought Jordan Peterson to the limelight was a talk show host attempting to use this exact method on him, repeatedly.

“So what you’re saying is that women should just accept being paid less?”

(Apologies for interjecting with culture-war but the interview is almost a perfect example) – link here https://www.youtube.com/watch?v=aMcjxSThD54

I think this is a very important point.

It’s easier for me to say “look at these statistics proving more libertarian countries have higher life expectancy” than to say “since childhood, I’ve always felt a strong revulsion and defiance toward what I perceive as arbitrary, unjustified authority and/or social bullying. As an adult, much of what I see states doing and much of my own interactions with the state pattern-match to these qualities of human behavior I’ve always hated. Over the years, I’ve found countless examples of what seem like empirical data and real-life experience backing up my intuitions (of course, I was looking for them), so even if you can show me a different study proving big government works better in this case you’re not likely to shake my underlying conviction that big government is generally just bad.”

Yet I think one is also more likely to arrive at some form of mutual understanding when willing to frankly discuss reasons for disagreement of the latter sort, than when staying at the level of “your study says this, but my study says that,” or certainly at the level of social shaming.

But the reason this takes more vulnerability is that if I am willing to admit my reasons for believing x aren’t fully rational and the interlocutor isn’t, I’ve just put myself in a weak position, rhetorically. Hence people would prefer to use facts to imply that they’re being fully rational while their opponents are guided by gut reactions and hunches, and/or remain at the bottom and/or Sphinx levels, which basically allow you to argue from the gut but without exposing yourself the way the higher level does.

+1

I think you’re right, but I also think Scott meant “vulnerability to refutation”, not “emotional vulnerability”.

The higher levels of argumentation require using more precise definitions and counting the evidence that supports the points, instead of just asserting them vaguely. That’s bound to make your argument more vulnerable to attacks, as your opponent can now focus on the specific points you brought instead of guessing what your position entails.

The lower-level assertion “the elites hate the people” is less vulnerable to criticism/refutation than the higher-level “the elites, defined as [owners of production means / people making more than X per year / people with de facto political privileges] hate the poor, and these four papers plus my life story are the reason why I believe this”.

I am pretty sure that the vulnerability Scott is talking about was not the chance of refutation; it is social vulnerability, the chance that a bad actor could use your willingness to entertain thinking at that level as proof that you are secretly endorsing socially unacceptable views (see Peterson, Jordan). When discussing things at a higher level, the chance that you will inadvertently produce a negative sound bite is much, much higher. This is one of the reasons recent politicians (with one notable exception) seem to sound so affected: they are trying hard never to utter any phrase which can be used out of context.

I think so too, that this is about social/emotional vulnerability, not logical vulnerability (though there’s likely entanglement between these).

It would be easy for someone to say back to onyomi’s first long paragraph, “So the basis of your libertarian position is not evidence of what works in the world but because you were bullied as a child?”

On the other hand, when I read onyomi’s paragraph, it immediately makes me more interested in talking with them about their libertarian views, which is not a feeling I usually have about libertarianism in general. The reason is that I also have a button about unjust, arbitrary authority because of being mistreated by my father. For whatever reason, those same feelings did not lead me to libertarianism, but I can see they definitely influenced my political views. And, though I’ll have to think about this more, they may even contribute to views I hold that are more libertarian than other views I hold.

Onyomi disclosing this (and I’m taking it as hypothetical in this context) makes me identify with them in a way I wouldn’t have otherwise and makes me think about what brought me to my own views in a different way. Maybe other people have a different response and this self-disclosure would undercut that person’s credibility so much that no further discussion is considered worthwhile. I’m much more likely to trust someone’s reasoning if they seem aware of their potential biases.

Maybe discussants need to agree at the outset whether consideration of how personal experience shapes our view on this topic is something we want to include in this conversation or not. And then the agreement is that both parties need to offer it up so the vulnerability is shared. (side note: I suspect finding structured ways for interlocutors to increase vulnerability gradually and symmetrically could be helpful to good conversations about difficult topics — maybe diplomats know something about this)

And then the agreement is something like “We’re going to include discussion of the non-scientific factors that may inform our positions and trust each other not to weaponize that information or to reduce all of our views to being the product of those factors.”

My assumption is that significant personal experiences almost always underlie our most deeply held beliefs about the world. At the same time, I’d consider it unfair to treat this personal experience angle as if it obliterates everything else the person has to say. Disclosing it though completely changes for me what feels possible in the conversation.

This sounds a bit like dating.

Well, for what it’s worth – much like Scott predicted, after hearing you say that I respect your honesty and understand where you’re coming from even though I disagree entirely. 🙂

I will try to match it and admit that in my case, I felt from childhood that the authorities (parents, teachers) were fundamentally on my side, had expectations of me that were made clear and were possible for me to fulfil, and had a plan that, while sometimes flawed, at least made sense, while the rebels (schoolyard bullies and troublemakers) were constantly hostile to me for arbitrary reasons that they seemed to make up as they went along. As an adult, I find that those two groups map, respectively, to the government (which makes its rules plain and puts them in writing, and which has in its admittedly ham-fisted way done much to help me get by in the world) and to employers and (for lack of a better term) “authorities on what is socially acceptable” (both of whom keep saying one thing while they mean another, demand the impossible from me, and are hostile to me for not measuring up to some standard that is always left infuriatingly vague).

So… yeah, I make no claim to have come by my opinion from rational analysis of statistics.

The reactions to this comment describing non-rational reasons for having views different from my own are both encouraging and discouraging:

Encouraging in that commenters here seem willing to engage in this way, and that they share my intuition that discussion on this level seems more likely to generate empathy or intuitive understanding of ideological opponents’ views, if not necessarily agreement.

Discouraging in that it confirms my sense that many, if not most, disagreements about tribal/political issues are very hard, if not impossible, to resolve at the level of purely rational debate.

This takes me back to a thread not too long ago where I suggested evo-psych reasons for the, to me, inexplicable continued popularity of socialism. This was met with at least some accusations of Bulverism, not to mention descent into debate about object-level merits of capitalism and socialism, which was very much not what I was interested in.

So I am wondering if a different approach to Bulverism is not in order, with the caveat that it can definitely be misused: “responsible Bulverism”? “Mutually Assured Bulverism” (this is starting to sound like a sex act…)?

That is, if we stipulated that Baeraad, Cuke, and I were a three-member subcommittee, lived on an island, or were otherwise somehow in a position where we had to reach a consensus on some kind of policy rather than just going our own ways (and assuming we are only making policy based on what we actually think best, as opposed to factors like pleasing various constituencies), I predict that willingness to talk about this kind of thing (along with facts, studies, etc.) would produce better results.

Put another way, feelings, intuitions, and predispositions are facts about the world. A rational analysis cannot fail to take them into account, and rationality, in fact, might not function without them: apparently people with damage to emotional processing centers (Phineas Gage) don’t become super-rational Vulcans, they just become people who make very bad life choices, because they don’t know where to focus their attention, how to evaluate risk, etc.

I’m not saying go full “facts-don’t-matter” Scott Adams, but I do think he’s right that psychological factors like the way an issue is framed are probably way more important than almost anyone realizes. My current proposed solution is increased bilateral willingness to discuss facts about one’s own introspection, in addition to facts about the world.

I really like this sentence of yours:

“Put another way, feelings, intuitions, and predispositions are facts about the world. A rational analysis cannot fail to take them into account, and rationality, in fact, might not function without them…”

How might our conversations look different if we took seriously the idea that our predispositions behave like facts for each of us?

I often think of this in terms of a person’s default mode. The set of beliefs and assumptions they carry about themselves and others and the world. This default mode is going to entirely run a person in the background if they aren’t aware of how it works. Awareness of it at finer and finer grained levels is going to open up the possibility of not letting it run automatically. The less the default mode gets to run automatically, without question, the more flexibility that enables over time to make new choices.

And this one:

“My current proposed solution is increased bilateral willingness to discuss facts about one’s own introspection.” I second that.

Another potential source of good news to my mind is that for many of us our feelings, intuitions, and predispositions don’t all run in the same direction, so that all kinds of internal contradictions are possible.

I am oddly very eager to please authority and very eager to resist it, depending on the circumstances. After more than five decades, I haven’t quite figured out the rules for what factors send me one way or the other. I am truly ambi-valent towards authority. Perhaps most of us are, given the unevenness of parenting and schooling. I also don’t think my political/moral/philosophical views can be reduced to these facts of my psychology.

I would venture to guess though that most people’s political views are shaped in some important ways by early experiences with authorities and caregivers and how trustworthy or not we found them to be.

Some of it I have to believe is hard-wired too though. And that means our genetics can run totally counter to our early experiences in ways that produce yet more interesting combinations.

Thinking a bit more about practical application of what I just wrote above, if what I wanted to hear in the old thread was socialists and people with any warm-fuzzy feelings about socialism and socialism-adjacent ideas explaining to me why they feel those, to me, inexplicable positive inclinations, maybe a better strategy would have been to say something like:

“Here’s why, in addition to philosophical arguments, statistics, and studies, I think there is a deep part of me predisposed to go ‘boo socialism’ regardless of the particular case I’m considering; can anyone whose internal applause lights tend to go on with regard to socialism explain/describe to me those sentiments?”

This way it comes off less as a unilateral trap (asking the opponent to give you free ammunition, in effect) and more an invitation for understanding. Instead of “My position is backed up by all the facts; therefore I suspect people who disagree with me are being irrational; please tell me your irrational reasons for disagreeing with me,” rather “given the facts I know and personal experiences/predispositions, I feel this way about x and can’t understand how anyone could feel that way; can anyone who feels that way about x describe that experience and, if possible, its origins?”

Uh so apparently I wrote a response to your hypothetical post in the “old thread”, without realizing this is a new thread. Well, here y’go.

I grew up with pretty damn left-wing parents, and some fairly strong left-wingers of various persuasions in my extended family. I would describe myself as a democratic socialist, I suppose. Probably the closest thing to a come to Jesus moment for me was reading Orwell. Apologies for self-promotion, but I think it’s relevant? Anyways, I wrote about it here. It really was a profound case of emotional resonance for me. There’s obviously a lot more to my beliefs but it’s maybe an interesting starting point.

I also like Orwell. But Orwell’s position, at least in his later writing, seems to be that it will be very difficult to make the sort of democratic socialism he wants work, but that all other alternatives are worse. I’m thinking in part of his joint review of The Road to Serfdom and a book by Zilliacus.

Western Europe and the U.S. didn’t go socialist after WWII and they didn’t end up with the sort of nightmare scenario Orwell seems to have expected—basically monopoly plus a return to Great Depression conditions. Does that mean that his argument for socialism as the least bad alternative fails?

I’d argue along the lines of what I think Scott was trying to get at with the Fabian Society post: that many aspects of what was then radical socialism have now become central components of our society. Others haven’t. Similarly for capitalism.

So Western Europe didn’t “go socialist”, but it went more socialist. Orwell’s extreme assumptions certainly may not have been warranted, but it’s easy to be an extremist when you’ve spent twenty years watching the rise of fascism.

“given the facts I know and personal experiences/predispositions, I feel this way about x and can’t understand how anyone could feel that way; can anyone who feels that way about x describe that experience and, if possible, its origins?”

Just wanted to pull that question out because it seems like a really useful way to initiate a conversation about difficult issues. I plan on using it somewhere later, and I promise to give credit, onyomi.

Agreed about Phineas Gage and the lesson to draw. Relatedly, I think that metis and phronesis and those other Greek words have a much larger role to play than what Scott seems to hope for. Which isn’t to say that logic-chopping shouldn’t be tried for all it’s worth: low hanging fruit shouldn’t be left to rot.

If it’s any consolation, I’ve had the opposite experience of you as well – I’ve always gotten along really well with authority figures – but I share many of your political views, being a fellow anarcho-capitalist.

@Baeraad

More or less the same here, although I mainly trusted my peers less than the authorities and didn’t necessarily trust the latter that much on an absolute, rather than relative level.

In my case I think I trusted my parents, had reservations about both my peers and my teachers. I don’t remember seeing the latter groups as either “fundamentally on my side” or “constantly hostile to me.”

This only really works if your opponent is truly Bayesian. For most people, this would only strengthen their beliefs as per The Cowpox of Doubt / attitude inoculation.

I don’t think that’s fair. Non-Bayesians do sometimes change their mind. It’s not usually a conscious process, and probably it’s less likely than a rationalist doing so, but it still happens. The Cowpox of Doubt is about only ever experiencing bad counter-arguments, whereas if you can get to the top of the pyramid you’re giving good arguments against a point. No one argument in this vein is going to carry the day (unless you have an opponent who is both unusually scrupulous and who has never heard that argument before), but large numbers of them will often tell in time.

I agree.

The Bayesian effect on people’s priors that Scott discusses in this article isn’t caused by your debating partner being a Bayesian philosopher. It’s caused by your debating partner having a Bayesian brain. Brains do in fact run on a semi-Bayesian system, or they wouldn’t work at all.

The system is generally very bad at solving any specific, abstract example of a Bayesian problem, much as most people can’t solve equations of motion when you set the things out in front of them on pencil and paper. But it still routinely performs Bayesian-like analysis of problems, just as people routinely catch a ball even though they can’t solve its equations of motion consciously.

Our entire sensory system revolves around prior expectations and our brain’s ability to match stimuli to the most likely pattern available in our consciousness given its list of priors.

Our ability to navigate the physical and social world depends on us having a mix of ultra-high-confidence priors (“if I press the gas pedal, the car accelerates”), high-confidence priors (“the other drivers are mostly trying to avoid collisions”), and low-confidence priors undergoing experimentation (“this is the fastest route to work, but if I get caught in a traffic jam eight times in a row then I’m probably wrong about this and should find a new route”). If we never updated our priors we’d never *learn.*

And in that spirit, if you provide enough experiences and reasons to change a prior, most people will change that prior in the direction of what you provide. The precise value of ‘enough’ may be frustratingly high, or movement in that direction may be slower than you like, but it’s not a null effect.

+1

I’m reasonably sure I’m more likely to change my priors if I get reasons to do so from independent sources, or at least apparently independent sources.

No one of the sources has total responsibility or gets total credit for changing my mind.

Perhaps we’re all Bayesians in some sense? At least those willing to rise above the dotted lines in Scott’s triangle.

In my experience, over 90% of references to Bayes in actual practical discussion like this are utterly superfluous. In nearly all such cases one could simply refer, e.g., to considering new evidence and adjusting one’s beliefs according to its strength. I think it’s quite rare that jargony references to updating priors and the like add anything meaningful.

Agreed, actually.

I think the non-superfluous references to Bayesianism are along the lines of Simon_Jester’s use of it to refer to just the way our brains work. I use the jargon because it’s the default in my field, but I’m not sure that it’s terribly useful in practical discussions most of the time, as you say. So perhaps I should change my *ahem* priors about what language is appropriate.

Not only that, but there doesn’t exist a way in the literature to incorporate Bayesianism into a huge number of functions we expect from dialectical reasoning.

There is no way to use Bayesianism (or more generally Aumann-style dialectic) to do theory-building like constructing a model for problems. Likewise, it’s useless for mathematical deduction. How do you use Bayesian updating to improve your confidence in a mathematical statement?

And of course, there is no way to apply Bayesianism to ethical statements i.e. “we should debate in a generous way as toward convergence which should be closer to the truth”.

I feel like 90% of this stuff was figured out almost a hundred years ago through the work and failures of the positivists, especially Wittgenstein.

You can’t apply the probability-crunching mathematical version of Bayes’ theorem to these cases.

You can apply the broader concept of “I have opinions, and I believe they are probably-correct but am not literally 100% certain they are correct.” Because anything you are literally 100% certain of, you’d be incapable of changing your mind on.

So when studying a mathematical proof, you start out with a probability of [negligible] that the conclusion is true. Then you skim the proof and you update it to [pretty high number], assuming it was in a credible source. Then you pore over the line-by-line details and update it to [much higher number], by which point you’re probably willing to use the result yourself without undue fear of wasting time. Then you really pore over it, to the point where you’ve internalized it, and you update your probability that it’s true to [1 – negligible].

You’re still not in a binary belief/disbelief state, because (again) if you were, it’d be literally impossible for you to entertain the notion of a disproof of your new theorem.

A morality argument is an even better example, the trick being that you can’t just model the probability you assign to a prior as “this is the likelihood that this theory of ethics is the One True Way.” The value you assign there influences not just your abstract opinions, but also things like how willing you are to actually live by your theory, and how strongly you expect other people to follow it. A moral law that you are 99.999999% certain applies is a law you will be willing to inconvenience yourself to follow (insofar as you ever are) and a law you will be put out at others for ignoring.

A true Bayesian is 100 percent certain not to hold beliefs with 0 or 100 percent certainty.

The broader concept of “I have opinions but they’re not 100% or 0%”, and are therefore always assailable by evidence, has a lot of problems. And I would argue that it’s not even really in the spirit of Bayesianism.

For mathematical cases, a Bayesian would have to reason by induction. For instance, it has been conjectured that the number of twin primes is infinite. But how certain should we be of this fact? Any schema for updating our belief in a Bayesian way will arrive at one of two conclusions: either our certainty is just our prior (disregard all evidence) or it’s exponentially close to 1 (virtually any credence to the evidence unless we apply some strange rule).
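One way to read the dichotomy above is as a claim about naive induction: if every newly observed twin prime pair counts as an independent piece of evidence with the same likelihood ratio, the posterior either never moves or runs off toward 1. The sketch below assumes exactly that simplification; the function name, the prior of 0.5, and the ratios are hypothetical, and a more careful schema might not behave this way.

```python
def posterior_after(n_observations: int, prior: float, likelihood_ratio: float) -> float:
    """Posterior after n independent observations that share one likelihood ratio."""
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * likelihood_ratio ** n_observations
    return posterior_odds / (1.0 + posterior_odds)


prior = 0.5  # assumed starting credence in the conjecture

# Case 1: each new pair is treated as no evidence at all (ratio of 1).
ignores_evidence = posterior_after(10_000, prior, 1.0)

# Case 2: each new pair is treated as very weak evidence (ratio of 1.01).
weak_after_100 = posterior_after(100, prior, 1.01)
weak_after_10_000 = posterior_after(10_000, prior, 1.01)

print(ignores_evidence, weak_after_100, weak_after_10_000)
```

With a ratio of exactly 1 the credence stays at the prior; with any fixed ratio above 1, however small, the odds grow geometrically in the number of observed pairs.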

Neither of these conclusions seems congenial to how mathematics is done. Moreover, the whole Bayesian approach doesn’t fit with the search for better schemas or worldviews.

Worldviews are completely irreducible to sets of affairs or probability weights thereof; they tell us how to weigh evidence in the first place.

Isn’t the point of Bayesian reasoning that you are practicing it whether you think you are or not? I thought it was supposed to be inescapable, like evolution.

Thanks for the shoutout 🙂

I thought of this post as an excellent example of erisology while reading it and was pleasantly surprised to find out that you knew about the concept when I got to the end. I’ve carefully avoided calling it the science of disagreement though, so far favouring the study of disagreement, because much of it (the stuff I do anyway) doesn’t meet a scientific standard. Also it might fit in better with the humanities than the sciences (although this sort of goes against the whole idea of transcending intellectual gulfs) because the natural way to do it is most likely philosophy-like analysis and history-like textual interpretation. The description on my blog is more of a wishlist – but yeah, some actual science would be cool.

Dividing the pyramid into an empirical and a philosophical half is a great idea. And even more important is how the lower levels are actually downstream from the highest – it’s almost as if the upper grey triangle is actually “behind” the rest of the pyramid, propping it up, but you can only see it by climbing all the way up to the top level and getting an unobscured view.

It seems that for disagreements, Plato’s Cave might be literally true.

Your analysis of the Harris/Klein disagreement was epic. I’d like to think there could be a way to get them both to read it and respond to the critique! I too thought, after listening to their podcast, that their inability to communicate effectively, and more critically the specific ways they were failing at productive conversation, were both illuminating and despair-inducing. If the likes of them can’t figure it out, who could? But I think you’re touching on some extremely important phenomena. Moreover, if Harris and Klein both absorbed some of your analytical framework on this issue, I believe they could actually come to an amicable resolution if they had a do-over, without either of them needing to change their core commitments.

I’d also add one further category at the tip of the pyramid – differing axioms. The classic example is “An embryo is a human blessed by God with a soul at the instant of conception” vs “An embryo is just a few cells with potential – if it can’t subjectively experience the world, it isn’t a moral agent and deserves no consideration in its own right”.

Arguments over axioms are supremely unproductive, because a true case of differing axioms is unresolvable even in principle, but they’re unproductive in a similar way to high-level generators (where both sides can understand and respect the other in principle), not in the same way as gotchas and social markers.

I feel like Scott sort of covered that under “high-level generators of disagreement”.

In Scott’s hierarchy it’s clearly in that category, but deep-seated heuristics that generate broader political arguments are very different in practice from true axioms, because the heuristics can change due to evidence. I think it’d be useful to distinguish between them.

To bring in a meta-argument here, is there really a separate category of axioms separate from heuristics, or are they simply heuristics that are more firmly held and less easily challenged? Axioms can change (take the idea of a change in religious beliefs) and arguments against axioms are not futile as a result, even if they are unlikely to bring about a change in belief, so whilst a good-faith argument requires people to acknowledge their priors I am not sure that there is a real distinction between axioms and heuristics here. It looks like a single sliding scale of priors to me, all of which are best acknowledged without attempting to buttress particular ones by making them axiomatic; indeed the introduction of axiom as a category is probably non-conducive to open and honest argument as it allows a participant to actively discourage discussion of particular beliefs by labelling them as axiomatic, rather than simply expressing the fact they hold this particular prior for this particular reason.

Note also the simpler point that regardless of how firmly held each party’s opposing priors might be this does not make seeking out high-level generators of disagreement (I might want a snappier title to keep using that…) pointless. I have a very strong prior that can be caricatured as “socialism is never the right option” but still enjoy discussing politics with people with a belief that socialism is the answer, because I learn how they see the world working and where my prior does and does not speak to theirs. That our respective strongly-held priors are unchanged does not make the attempt pointless or unproductive.

It was also in “operationalization”, though somewhat hidden.

“An argument is operationalized when every part of it has either been reduced to a factual question […], or when it’s obvious exactly what kind of non-factual disagreement is going on (for example, a difference in moral systems, or a difference in intuitions about what’s important).”

This is also a huge generator of dishonest debating tactics: if you know you can’t convince most people of your terminal values, you may try to convince them that your preferred policies follow from their terminal values, whether that’s true or not.

People do this in a subconscious way, rather than a cynical, calculating way, e.g. you don’t spend too much time evaluating a counterargument if it’s based on other people’s values, and clearly doesn’t change your support of the policy based on your values.

That isn’t necessarily dishonest, though. There are plenty of cases where the same policy can arise from lots of different terminal values; indeed that’s the essence of a political coalition (policy X benefits both A and B, so A and B work together to achieve it – defining “benefit” here in a nonselfish way, ie “achieves goals compatible with their terminal values”). This can also happen when one person’s terminal value is another person’s intermediate value (ie I believe X as an axiom; you believe X as a result of believing Y as an axiom and believing that X results in Y).

The essence of politics is putting together a majority coalition for a policy, and since there are very few terminal values that are shared by a majority (other than the near-universal ones), it’s usually necessary to combine people who hold a number of different terminal values.

I’ll go even further than po8crg and claim that, far from being dishonest, the ability to target your arguments at the terminal values of other people is necessary for persuasive discourse.

People don’t change their terminal values willy-nilly. You’d be crazy to expect that every person you talk to can be talked into embracing your values. Any worthwhile debate is necessarily going to involve people with non-identical (but possibly overlapping) sets of terminal values. So how do you justify your arguments?

You’re never going to convince somebody by appealing to values they don’t care about. You have to meet people where they are. If I support a policy for several reasons, and I know that you will only care about a subset of those reasons, it’s crazy to say that focusing on that subset is dishonest. I’m just saving us all time and frustration. Similarly, although you should be clear when you are doing it, there’s nothing inherently dishonest about presenting an argument that you don’t personally find compelling.

It might be that your proposed policy does not, in fact, follow from their terminal values. But that would make you wrong, not dishonest.

People don’t change their terminal values, period. That’s what “terminal value” means: the values that every other value is rooted in, the ones that don’t depend on your model of the environment. If you can persuade someone to change their values, those values weren’t terminal.

(I am not persuaded that people even have terminal values in a meaningful sense, but that’s another conversation.)

‘Necessary for persuasion’ and ‘dishonest’ aren’t at all mutually exclusive, though. If I’m a salesman, and I want you to buy some product, it is necessary for me to convince you that the product is good/will be useful to you/will elevate your status/etc. Of course the actual reason I want you to buy the product is so I will earn a commission, but I have little hope of convincing you to share my terminal value of, “maximizing how much money crh has,” so I’d stick to the other arguments. Depending on who you are and what the product is, these arguments might not be dishonest. But they often will be, and I do think a disconnect between “why I believe X” and “why I’m saying you should believe X” in general promotes dishonesty. (Which is why salesmen are stereotypically dishonest.)

It’s not necessarily dishonest to argue from other people’s values. It is dishonest only when you claim and argue that a policy follows from their values even though you know it doesn’t, or you have no idea if it actually does.

I’m not trying to moralize, but to explain why people often seem to refuse to accept rational arguments, ignore your arguments, or not prefer the style of debate where evidence is thoroughly reviewed. This is often attributed to their irrationality (or to your arguments not being actually good). But it may also be that if the debate is focused on whether a policy follows from your values, they may not have an interest in uncovering the truth if they have different values.

@Nornagest: Terminal values can definitely change, just not from a perfectly logical, factual debate or thought, and typically gradually. (A typical question which involves terminal values for many people, the way I understand the term, is how you weigh the importance of freedom and (economic) equality against each other. I’ve definitely changed on that.) A common way terminal values change is that you find a contradiction between your (or, if you’re persuading someone, their) values, which often makes one shift one or both of those values.

Terminal values — or facets of a single terminal value, depending on how you look at it — can have different magnitudes and can come into conflict, and what happens then is that the stronger one wins, but that doesn’t make the one that’s weaker in this context change, it just means that it isn’t taking priority right now. Take Alice as a (very simplified) example. Alice has a strong preference for owning puppies, and a weak one for eating meat. Those are her terminal values; everything else in her life is instrumental to her puppy-owning and meat-eating. She receives a puppy. Does she eat the puppy? Obviously no; but that doesn’t mean she won’t be happy to eat a steak if someone gives her one.

Terminal value has a very specific meaning. A value is not terminal just because you feel it very strongly. It isn’t even necessarily terminal if you can’t think of anything more fundamental. It’s only terminal if it’s inexpressible in terms of anything else. That is a very strong criterion, and among other things it means that being talked out of your terminal values is definitionally impossible.

Note however that an ethical system’s terminal values are not necessarily those of the person implementing that system. People implement ethics only imperfectly, and we can definitely be talked out of one ethical framework and into another; that just means that the framework’s values were not a perfect match with the person’s. Human terminal values, if they exist, are probably some kind of messy neurochemical function; anyone that’s ever told you “my terminal values are such-and-such” is almost certainly wrong.

@Nornagest:

You’re being unhelpfully pedantic.

In the context of this conversation, “terminal value” was being used as shorthand to refer to Scott’s “non-factual disagreements (a difference in moral systems, or a difference in intuitions about what’s important)”. I don’t see any sign that anybody else was confused by that.

You’re trying to enforce a specific interpretation of the phrase “terminal value”, while at the same time arguing that your interpretation isn’t useful. It doesn’t help that your preferred definition is actually not very widely used. The SEP doesn’t have an entry; Wikipedia just redirects to “instrumental and intrinsic value”. There’s a LessWrong wiki page, but I don’t think we’re all bound to use words a particular way just because Eliezer Yudkowsky wrote an article once.

But it’s often really valuable to recognize when you’re arguing against a definition or premise vs a factual claim.

I think the «value of searching the high-level generator of disagreement» part could benefit from an explicit mention of «splash damage».