[Epistemic status: Very speculative, especially Parts 3 and 4. Like many good things, this post is based on a conversation with Paul Christiano; most of the good ideas are his, any errors are mine.]

I.

In the 1950s, an Austrian scientist discovered a series of equations that he claimed could model history. They matched past data with startling accuracy. But when extended into the future, they predicted the world would end on November 13, 2026.

This sounds like the plot of a sci-fi book. But it’s also the story of Heinz von Foerster, a mid-century physicist, cybernetician, cognitive scientist, and philosopher.

His problems started when he became interested in human population dynamics.

(the rest of this section is loosely adapted from his Science paper “Doomsday: Friday, 13 November, A.D. 2026”)

Assume a perfect paradisiacal Garden of Eden with infinite resources. Start with two people – Adam and Eve – and assume the population doubles every generation. In the second generation there are 4 people; in the third, 8. This is that old riddle about the grains of rice on the chessboard again. By the 64th generation (ie after about 1500 years) there will be 18,446,744,073,709,551,616 people – ie about a billion times the number of people who have ever lived in all the eons of human history. So one of our assumptions must be wrong. Probably it’s the one about the perfect paradise with unlimited resources.
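The arithmetic is easy to check. Here is a minimal Python sketch, assuming generation 1 is Adam and Eve (2 people). The 23.5 years per generation is an assumption made here only because it reproduces the "about 1500 years" figure; it is not from von Foerster's paper.

```python
# Population doubles every generation, starting from 2 people in generation 1.
def eden_population(generation: int) -> int:
    """Population in the given generation (generation 1 = Adam and Eve)."""
    return 2 ** generation

YEARS_PER_GENERATION = 23.5  # illustrative assumption, not from the source

print(eden_population(2))                # 4
print(eden_population(3))                # 8
print(eden_population(64))               # 18446744073709551616
print(round(64 * YEARS_PER_GENERATION))  # 1504
```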

Okay, new plan. Assume a world with a limited food supply / limited carrying capacity. If you want, imagine it as an island where everyone eats coconuts. But there are only enough coconuts to support 100 people. If the population reproduces beyond 100 people, some of them will starve, until they’re back at 100 people. In the second generation, there are 100 people. In the third generation, still 100 people. And so on to infinity. Here the population never grows at all. But that doesn’t match real life either.

But von Foerster knew that technological advance can change the carrying capacity of an area of land. If our hypothetical islanders discover new coconut-tree-farming techniques, they may be able to get twice as much food, increasing the maximum population to 200. If they learn to fish, they might open up entirely new realms of food production, increasing population into the thousands.

So the rate of population growth is neither the double-per-generation of a perfect paradise, nor the zero-per-generation of a stagnant island. Rather, it depends on the rate of economic and technological growth. In particular, in a closed system that is already at its carrying capacity and with zero marginal return to extra labor, population growth equals productivity growth.

What causes productivity growth? Technological advance. What causes technological advance? Lots of things, but von Foerster’s model reduced it to one: people. Each person has a certain percent chance of coming up with a new discovery that improves the economy, so productivity growth will be a function of population.

So in the model, the first generation will come up with some small number of technological advances. This allows them to spawn a slightly bigger second generation. This new slightly larger population will generate slightly more technological advances. So each generation, the population will grow at a slightly faster rate than the generation before.

This matches reality. The world population barely increased at all in the millennium from 2000 BC to 1000 BC. But it doubled in the fifty years from 1910 to 1960. In fact, using his model, von Foerster was able to come up with an equation that predicted the population near-perfectly from the Stone Age until his own day.

But his equations corresponded to something called hyperbolic growth. In hyperbolic growth, a feedback cycle – in this case population causes technology causes more population causes more technology – leads to growth increasing rapidly and finally shooting to infinity. Imagine a simplified version of von Foerster’s system where the world starts with 100 million people in 1 AD and a doubling time of 1000 years, and the doubling time decreases by half after each doubling. It might predict something like this:

1 AD: 100 million people

1000 AD: 200 million people

1500 AD: 400 million people

1750 AD: 800 million people

1875 AD: 1600 million people

…and so on. This system reaches infinite population in finite time (ie before the year 2000). The real model that von Foerster got after analyzing real population growth was pretty similar to this, except that it reached infinite population in 2026, give or take a few years (his pinpointing of Friday November 13 was mostly a joke; the equations were not really that precise).
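The toy system above can be reproduced in a few lines. This is a sketch of the simplified example only, not of von Foerster's fitted equation. The function name and parameters are made up for illustration, and 1 AD is treated as year 0 so the numbers come out round, as in the table.

```python
def halving_doubling_schedule(start_year=0.0, start_pop_millions=100,
                              first_doubling_years=1000.0, steps=8):
    """Return (year, population in millions) pairs where each doubling
    takes half as long as the one before it."""
    rows = []
    year, pop, doubling = start_year, start_pop_millions, first_doubling_years
    for _ in range(steps):
        rows.append((year, pop))
        year += doubling   # wait one doubling time...
        pop *= 2           # ...population doubles...
        doubling /= 2      # ...and the next doubling is twice as fast
    return rows

rows = halving_doubling_schedule()
for year, pop in rows:
    print(f"{year:7.1f} AD: {pop} million people")

# The doubling times form a geometric series 1000 + 500 + 250 + ... = 2000,
# so the dates pile up just below the year 2000 and never pass it.
singularity_year = 0.0 + 2 * 1000.0
```

However many steps you ask for, every date stays below `singularity_year`, while the population keeps doubling without limit. That is what "infinite population in finite time" means here.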

What went wrong? Two things.

First, as von Foerster knew (again, it was kind of a joke) the technological advance model isn’t literally true. His hyperbolic model just operates as an upper bound on the Garden of Eden scenario. Even in the Garden of Eden, population can’t do more than double every generation.

Second, contra all previous history, people in the 1900s started to have fewer kids than their resources could support (the demographic transition). Couples started considering the cost of college, and the difficulty of maternity leave, and all that, and decided that maybe they should stop at 2.5 kids (or just get a puppy instead).

Von Foerster published his paper in 1960, which ironically was the last year that his equations held true. Starting in 1961, population left its hyperbolic growth path. It is now expected to stabilize by the end of the 21st century.

II.

But nobody really expected the population to reach infinity. Armed with this story, let’s look at something more interesting.

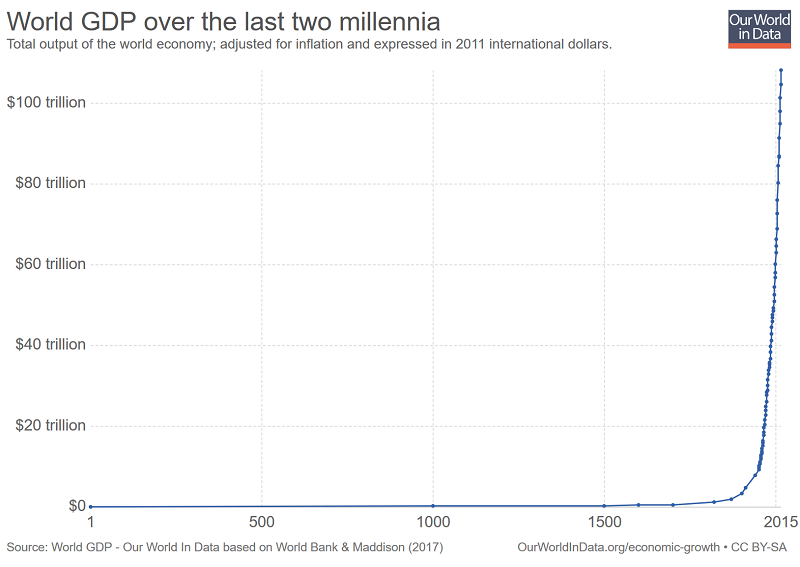

This (source) might be the most depressing graph ever:

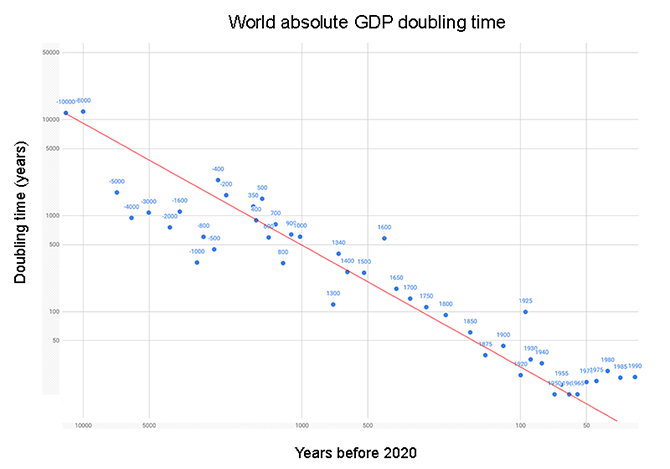

The horizontal axis is years before 2020, a random year chosen so that we can put this in log scale without negative values screwing everything up. This is an arbitrary choice, but you can also graph it with log GDP as the horizontal axis and find a similar pattern.

The vertical axis is the amount of time it took the world economy to double from that year, according to this paper. So for example, if at some point the economy doubled every twenty years, the dot for that point is at twenty. The doubling time decreases throughout most of the period being examined, indicating hyperbolic growth.

Hyperbolic growth, as mentioned before, shoots to infinity at some specific point. On this graph, that point is represented by the doubling time reaching zero. Once the economy doubles every zero years, you might as well call it infinite.
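A little algebra shows why a hyperbola appears as a line heading for zero doubling time. As a sketch, assume the simplest hyperbolic form, with $Y$ the size of the economy, $t_c$ the date of the singularity, and $C$ a constant. These symbols are introduced here for illustration and are not taken from the paper.

$$
Y(t) = \frac{C}{t_c - t}, \qquad \frac{dY}{dt} = \frac{C}{(t_c - t)^2} = \frac{Y^2}{C}.
$$

The doubling time $d$ at time $t$ solves $Y(t+d) = 2\,Y(t)$:

$$
\frac{C}{t_c - t - d} = \frac{2C}{t_c - t}
\;\Longrightarrow\;
d = \frac{t_c - t}{2},
\qquad
\log d = \log(t_c - t) - \log 2 .
$$

Under this assumption the doubling time is always half the time remaining. Plotted against years before $t_c$ with both axes logarithmic, it is a straight line, and it reaches zero exactly at $t_c$. The toy example in Part I behaves the same way: in 1000 AD, 1000 years before its blow-up, the doubling time was 500 years.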

For all of human history, economic progress formed a near-perfect straight line pointed at the early 21st century. Its destination varied by a century or two now and then, but never more than that. If an ancient Egyptian economist had modern techniques and methodologies, he could have made a graph like this and predicted it would reach infinity around the early 21st century. If a Roman had done the same thing, using the economic data available in his own time, he would have predicted the early 21st century too. A medieval Burgundian? Early 21st century. A Victorian Englishman? Early 21st century. A Stalinist Russian? Early 21st century. The trend was really resilient.

In 2005, inventor Ray Kurzweil published The Singularity Is Near, claiming there would be a technological singularity in the early 21st century. He didn’t refer to this graph specifically, but he highlighted this same trend of everything getting faster, including rates of change. Kurzweil took the infinity at the end of this graph very seriously; he thought that some event would happen that really would catapult the economy to infinity. Why not? Every data point from the Stone Age to the Atomic Age agreed on this.

This graph shows the Singularity getting cancelled.

Around 1960, doubling times stopped decreasing. The economy kept growing. But now it grows at a flat rate. It shows no signs of reaching infinity; not soon, not ever. Just constant, boring 2% GDP growth for the rest of time.

Why?

Here von Foerster has a ready answer prepared for us: population!

Economic growth is a function of population and productivity. And productivity depends on technological advancement and technological advancement depends on population, so it all bottoms out in population in the end. And population looked like it was going to grow hyperbolically until 1960, after which it stopped. That’s why hyperbolic economic growth, ie progress towards an economic singularity, stopped then too.

In fact…

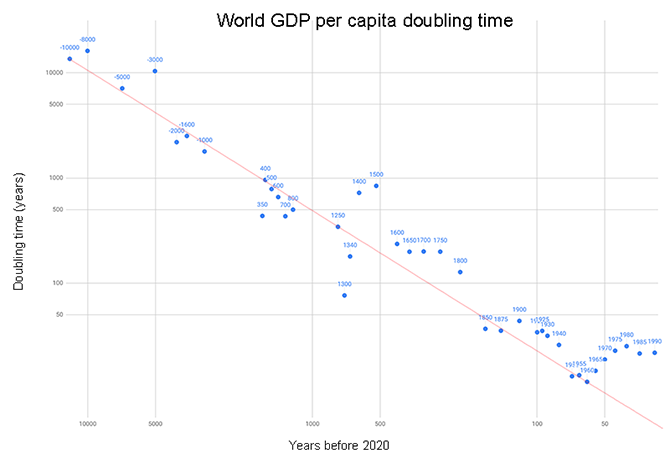

This is a really sketchy graph of per capita income doubling times. It’s sketchy because until 1650, per capita income wasn’t really increasing at all. It was following a one-step-forward one-step-back pattern. But if you take out all the steps back and just watch how quickly it took the steps forward, you get something like this.

Even though per capita income tries to abstract out population, it displays the same pattern. Until 1960, we were on track for a singularity where everyone earned infinite money. After 1960, the graph “bounces back” and growth rates stabilize or even decrease.

Again, von Foerster can explain this to us. Per capita income grows when technology grows, and technology grows when the population grows. The signal from the end of hyperbolic population growth shows up here too.

To make this really work, we probably have to zoom in a little bit and look at concrete reality. Most technological advances come from a few advanced countries whose population stabilized a little earlier than the world population. Of the constant population, an increasing fraction are becoming researchers each year (on the other hand, the low-hanging fruit gets picked off and technological advance becomes harder with time). All of these factors mean we shouldn’t expect productivity growth/GWP per capita growth/technological growth to exactly track population growth. But on the sort of orders-of-magnitude scale you can see on logarithmic graphs like the ones above, it should be pretty close.

So it looks like past predictions of a techno-economic singularity for the early 21st century were based on extrapolations of a hyperbolic trend in technology/economy that depended on a hyperbolic trend in population. Since the population singularity didn’t pan out, we shouldn’t expect the techno-economic singularity to pan out either. In fact, since population in advanced countries is starting to “stagnate” relative to earlier eras, we should expect a relative techno-economic stagnation too.

…maybe. Before coming back to this, let’s explore some of the other implications of these models.

III.

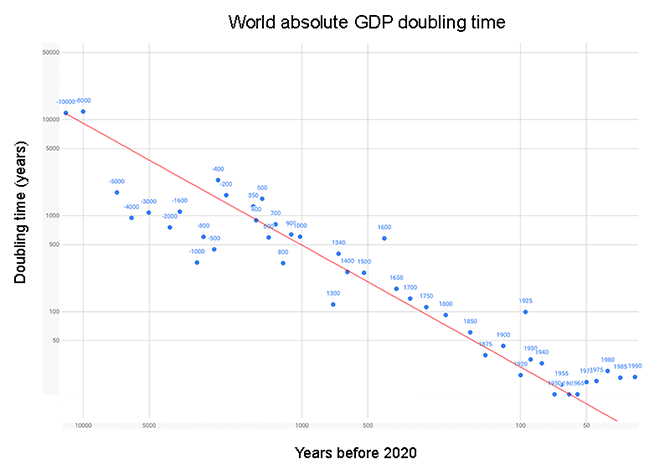

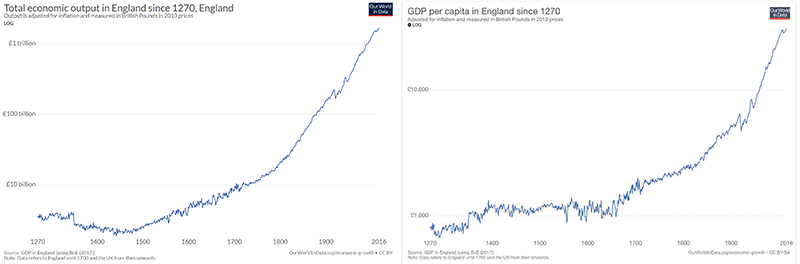

The first graph is the same one you saw in the last section, of absolute GWP doubling times. The second graph is the same, but limited to Britain.

Where’s the Industrial Revolution?

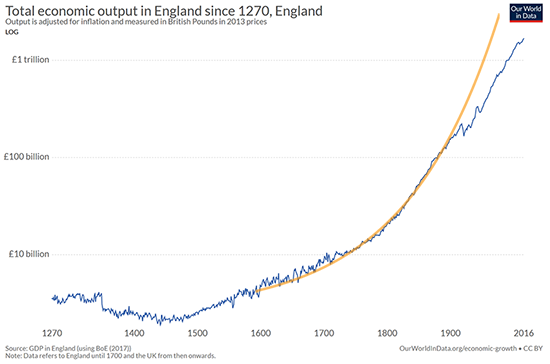

It doesn’t show up at all. This may be a surprise if you’re used to the standard narrative where the Industrial Revolution was the most important event in economic history. Graphs like this make the case that the Industrial Revolution was an explosive shift to a totally new growth regime:

It sure looks like the Industrial Revolution was a big deal. But Paul Christiano argues your eyes may be deceiving you. That graph is a hyperbola, ie corresponds to a single simple equation. There is no break in the pattern at any point. If you transformed it to a log doubling time graph, you’d just get the graph above that looks like a straight line until 1960.

On this view, the Industrial Revolution didn’t change historical GDP trends. It just shifted the world from a Malthusian regime where economic growth increased the population to a modern regime where economic growth increased per capita income.

For the entire history of the world until 1000, GDP per capita was the same for everyone everywhere during all historical eras. An Israelite shepherd would have had about as much stuff as a Roman farmer or a medieval serf.

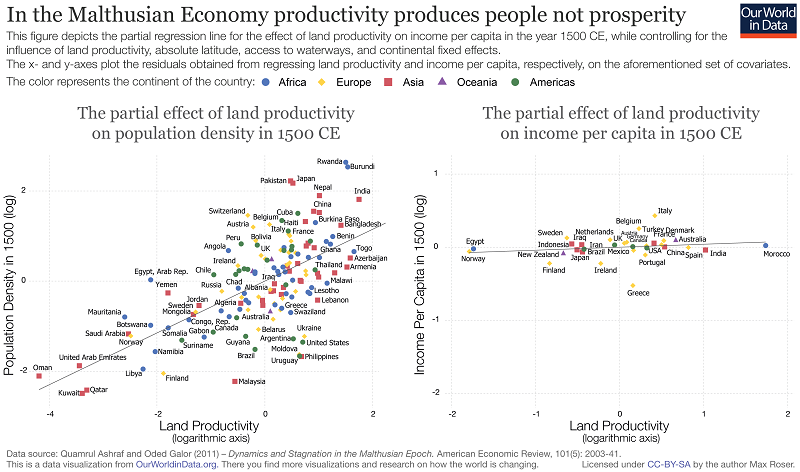

This was the Malthusian trap, where “productivity produces people, not prosperity”. People reproduce to fill the resources available to them. Everyone always lives at subsistence level. If productivity increases, people reproduce, and now you have more people living at subsistence level. OurWorldInData has an awesome graph of this:

As of 1500, places with higher productivity (usually richer farmland, but better technology and social organization also help) had higher population density. But GDP per capita was about the same everywhere.

There were always occasional windfalls from exciting discoveries or economic reforms. For a century or two, GDP per capita would rise. But population would always catch up again, and everyone would end up back at subsistence.

Some people argue Europe broke out of the Malthusian trap around 1300. This is not quite right. 1300s Europe achieved above-subsistence GDP, but only because the Black Plague killed so many people that the survivors got a windfall by taking their land.

Malthus predicts that this should only last a little while, until the European population bounces back to pre-Plague levels. This prediction was exactly right for Southern Europe. Northern Europe didn’t bounce back. Why not?

Unclear, but one answer is: fewer people, more plagues.

Broadberry 2015 mentions that Northern European culture promoted later marriage and fewer children:

The North Sea Area had an advantage in this area because of its approach to marriage. Hajnal (1965) argued that northwest Europe had a different demographic regime from the rest of the world, characterised by later marriage and hence limited fertility. Although he originally called this the European Marriage Pattern, later work established that it applied only to the northwest of the continent. This can be linked to the availability of labour market opportunities for females, who could engage in market activity before marriage, thus increasing the age of first marriage for females and reducing the number of children conceived (de Moor and van Zanden, 2010). Later marriage and fewer children are associated with more investment in human capital, since the women employed in productive work can accumulate skills, and parents can afford to invest more in each of the smaller number of children because of the “quantity-quality” trade-off (Voigtländer and Voth, 2010).

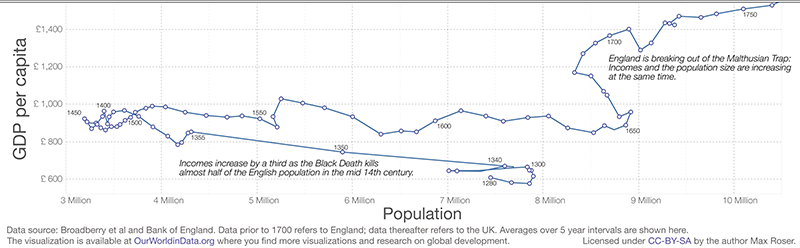

This low birth rate was happening at the same time plagues were raising the death rate. Here’s another amazing graph from OurWorldInData:

British population maxes out around 1300 (?), declines substantially during the Black Plague of 1348-49, but then keeps declining. The List Of English Plagues says another plague hit in 1361, then another in 1369, then another in 1375, and so on. Some historians call the whole period from 1348 to 1666 “the Plague Years”.

It looks like through the 1350 – 1450 period, population keeps declining, and per capita income keeps going up, as Malthusian theory would predict.

Between 1450 and 1550, population starts to recover, and per capita incomes start going down, again as Malthus would predict. Then around 1560, there’s a jump in incomes; according to the List Of Plagues, 1563 was “probably the worst of the great metropolitan epidemics, and then extended as a major national outbreak”. After 1563, population increases again and per capita incomes decline again, all the way until 1650. Population does not increase in Britain at all between 1660 and 1700. Why? The List declares 1665 to be “The Great Plague”, the largest in England since 1348.

So from 1348 to 1650, Northern European per capita incomes diverged from the rest of the world’s. But they didn’t “break out of the Malthusian trap” in a strict sense of being able to direct production toward prosperity rather than population growth. They just had so many plagues that they couldn’t grow the population anyway.

But in 1650, England did start breaking out of the Malthusian trap; population and per capita incomes grow together. Why?

Paul theorizes that technological advance finally started moving faster than maximal population growth.

Remember, in the von Foerster model, the growth rate increases with time, all the way until it reaches infinity in 2026. The closer you are to 2026, the faster your economy will grow. But population can only grow at a limited rate. In the absolute limit, women can only have one child per nine months. In reality, infant mortality, infertility, and conscious decision to delay childbearing mean the natural limits are much lower than that. So there’s a theoretical limit on how quickly the population can increase even with maximal resources. If the economy is growing faster than that, Malthus can’t catch up.

Why would this happen in England and Holland in 1650?

Lots of people have historical explanations for this. Northern European population growth was so low that people were forced to invent labor-saving machinery; eventually this reached a critical mass, we got the Industrial Revolution, and economic growth skyrocketed. Or: the discovery of America led to a source of new riches and a convenient sink for excess population. Or: something something Protestant work ethic printing press capitalism. These are all plausible. But how do they sync with the claim that absolute GDP never left its expected trajectory?

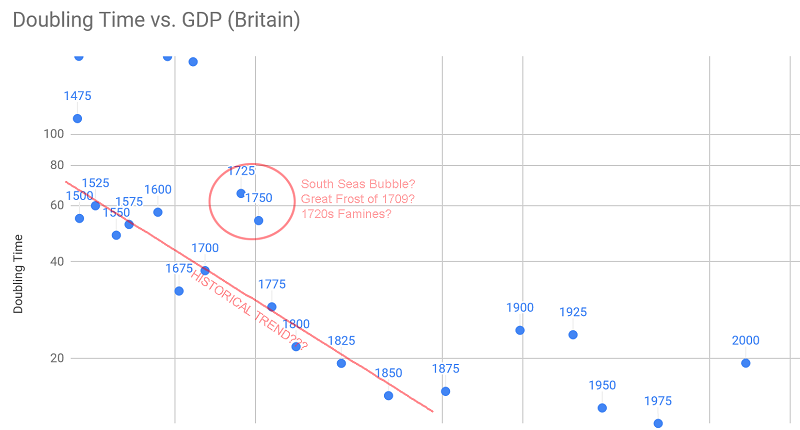

I find the idea that the Industrial Revolution wasn’t a deviation from trend fascinating and provocative. But it depends on eyeballing a lot of graphs that have had a lot of weird transformations done to them, plus writing off a lot of outliers. Here’s another way of presenting Britain’s GDP and GDP per capita data:

Here it’s a lot less obvious that the Industrial Revolution represented a deviation from trend for GDP per capita but not for GDP.

These British graphs show less of a singularity signature than the worldwide graphs do, probably because we’re looking at them on a shorter timeline, and because the Plague Years screwed everything up. If we insisted on fitting them to a hyperbola, it would look like this:

Like the rest of the world, Britain was only on a hyperbolic growth trajectory when economic growth was translating into population growth. That wasn’t true before about 1650, because of the plague. And it wasn’t true after about 1850, because of the Demographic Transition. We see a sort of fit to a hyperbola between those points, and then the trend just sort of wanders off.

It seems possible that the Industrial Revolution was not a time of abnormally fast technological advance or economic growth. Rather, it was a time when economic growth outpaced population growth, causing a shift from a Malthusian regime where productivity growth always increased population at subsistence level, to a modern regime where productivity growth increases GDP per capita. The world remained on the same hyperbolic growth trajectory throughout, until the trajectory petered out around 1900 in Britain and around 1960 in the world as a whole.

IV.

So just how cancelled is the singularity?

To review: population growth increases technological growth, which feeds back into the population growth rate in a cycle that reaches infinity in finite time.

But since population can’t grow infinitely fast, this pattern breaks off after a while.

The Industrial Revolution tried hard to compensate for the “missing” population; it invented machines. Using machines, an individual could do an increasing amount of work. We can imagine making eg tractors as an attempt to increase the effective population faster than the human uterus can manage. It partly worked.

But the industrial growth mode had one major disadvantage over the Malthusian mode: tractors can’t invent things. The population wasn’t just there to grow the population, it was there to increase the rate of technological advance and thus population growth. When we shifted (in part) from making people to making tractors, that process broke down, and growth (in people and tractors) became sub-hyperbolic.

If the population stays the same (and by “the same”, I just mean “not growing hyperbolically”) we should expect the growth rate to stay the same too, instead of increasing the way it did for thousands of years of increasing population, modulo other concerns.

In other words, the singularity got cancelled because we no longer have a surefire way to convert money into researchers. The old way was more money = more food = more population = more researchers. The new way is just more money = send more people to college, and screw all that.

But AI potentially offers a way to convert money into researchers. Money = build more AIs = more research.

If this were true, then once AI comes around – even if it isn’t much smarter than humans – then as long as the computational power you can invest into researching a given field increases with the amount of money you have, hyperbolic growth is back on. Faster growth rates means more money means more AIs researching new technology means even faster growth rates, and so on to infinity.

Presumably you would eventually hit some other bottleneck, but things could get very strange before that happens.
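To make the difference between the two regimes concrete, here is a toy simulation. It is only a sketch under loud assumptions: output Y grows at a rate proportional to the number of researchers R, so dY/dt = rate × R × Y, and the 2% rate, the step size, and the function name are all made up for illustration. With R fixed (the post-1960 world) doubling times stay constant; with R bought in proportion to output (money = build more AIs = more research) each doubling takes about half as long as the last.

```python
def doubling_times(researchers_scale_with_output, y0=1.0, rate=0.02,
                   dt=0.001, max_years=200.0, max_doublings=6):
    """Euler-integrate dY/dt = rate * R * Y and return the successive
    doubling times of Y, in years.

    R is fixed at 1 when researchers_scale_with_output is False, and
    R = Y / y0 (researchers bought in proportion to output) when True.
    All parameter values are illustrative assumptions.
    """
    y, t = y0, 0.0
    next_target, last_t = 2 * y0, 0.0
    times = []
    while t < max_years and len(times) < max_doublings:
        r = y / y0 if researchers_scale_with_output else 1.0
        y += rate * r * y * dt
        t += dt
        if y >= next_target:
            times.append(t - last_t)
            last_t = t
            next_target *= 2
    return times

fixed = doubling_times(False)    # constant researcher pool
scaling = doubling_times(True)   # researchers scale with output

print("fixed researchers:  ", [round(x, 1) for x in fixed])
print("scaling researchers:", [round(x, 1) for x in scaling])
```

With these made-up numbers, the fixed case doubles roughly every 35 years indefinitely, while the scaling case starts at roughly 25 years per doubling and halves each time, heading for a blow-up near year 50. The model has no bottlenecks in it, so it says nothing about where the second curve would actually bend.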

I predict the Singularity has not been cancelled. By the year 2100, the per-capita gross world product will exceed $10,000,000 (in year 2000 dollars).

Robert Lucas Jr. is wrong…per capita GWP growth will accelerate

You should be aware that England and Holland were dominant European Naval powers around that time.

See here: https://en.wikipedia.org/wiki/Dutch_West_India_Company

And here: https://en.wikipedia.org/wiki/East_India_Company

And don’t be misled by “company” — the East India Company was basically the first “joint stock corporation” that there was. Most of English GDP was touched by The Company, because:

The period you’re describing was the rise of (Colonial) Mercantilism. I.e. global trade networks were now unleashed thanks to technological advances. “GDP per Capita” in England, per your example, is leveraging the physical labor of an entire continent (India) who do not show up in that population graph.

Prior to Mercantilism, a “conquered” people would be subsumed within the “Empire” and become taxed subjects, with “Roman Empire GDP per Capita” adjusted to include the entire empire.

But in these graphs you can see the GDP of England going up without reflecting the full population who “labored” to produce that GDP.

Couple that with the population-depleting-plagues you mentioned and boom: Trap escaped by virtue (or lack thereof) of non-citizen labor.

“Who is generating this Wealth — and how — and are they able to capture any of the surpluses they generate?” is always a good question to ponder.

(Sorry for long comment, this is a pet topic of mine and I like to write about it a lot)

Even with a constant population, you can sustain exponential growth of researchers within a given field for quite some time.

Presumably AI and ML in particular will enjoy exponential gains in research talent for decades to come.

I disagree that hyperbolic growth has to be canceled, though I do see bottlenecks I’m not sure how to overcome.

My essential argument is that industrial revolution didn’t change the trend because it’s an essential part of the trend.

But I do see bottlenecks today and in many places don’t know exactly how to categorize them. E.g. bullshit jobs point towards an overachieving reproduction to the point where today’s economy can _support_ all the people around, but doesn’t have the capacity to apply their skills in any meaningful way.

Is this because there truly are no capacities left? Did we just max out the economy’s resource consumption to the rate of resource production? If so, wouldn’t we be better off w/o spending money on bullshit jobs at all? Is this just the only socially acceptable way of implementing welfare programs? Are bullshit jobs just an answer to low-IQ-jobs dying out?

Simply because I like the maxing-out-resources view, I think we actually maxed out on energy-production. We need better ways to produce more, cheaper energy. It fits nicely with my other view that there is no way around nuclear energy and that bad PR for nuclear energy is a major culprit why we don’t have cheap, “sustainable” fission reactors yet. At the same time, there is some hope for the next big leap coming from Princeton, claiming to develop low-mass, high power fusion reactors. (They caught my eye because their reactor is just so incredibly simple, yet controlling a highly complex system.)

Another bottleneck would be dry land surface on earth. I don’t see a lot of effort put into urbanization of sea surface and the Elon is a bit too far off to count. Vertical farming might be a big win, but right now it seems we might just increase efficiency with better controlled environments and GMOs?

But it might all just go to shit anyway due to climate change. We do live in interesting times.

If China and India continue developing economically and become able to generate researchers out of each of their billion person populations, then that could create a massive research boom. Is part of what we’re looking at and living in today just the waiting period before those two booms explode?

For the parts of history where technological growth is negligible—thus social change can run its course—some details are of crucial importance, and they lead to very different societies. So far, I believe I have understood the economics of three boundary conditions:

1) Disease-limited, non-Malthusian. In the “Cursed Paradise”, there is—relatively speaking—an abundance of land, thus enough food can be produced for the whole population with comparatively little labor. The main consequence is that single mothers can raise their children, paternal investment is not particularly useful, pair bonds are weak. Men spend their effort on competing with other men for status and to impress the women in “sports”, art, rhetoric, and raid the neighboring tribes. (Cad-fast.) Evolutionarily, beside disease resistance, this condition rewards r-strategists—unlike the food-limited societies selecting for K-strategists—, possibly leading to pygmies, if the pressure remains for hundreds of thousands of years.

2) Malthusian, labor-limited. Given the food production technology, the climate and the plants/animals used, marginal labor has positive returns all the way up to and even past the limit of how much labor any person can put forth. Enormous paternal effort is necessary all the way until children reach adulthood, but the marriage market is relatively flat and balanced. The (parts of) societies that work like this are quite drab, both because nobody has spare effort, and because nobody has much reason for zero-sum competitions. In practice, some nobility/empire diverts some effort into flashier things via taxation. (Dad-coy, domestic.)

3) Malthusian, land-limited. (Other forms of Magic Box of Food can work out similarly.) Given the food production tech, etc., there is zero yield to marginal labor beyond some point that is significantly less than the humanly possible maximum. (“You can’t herd livestock harder.”) Reproductive opportunity is limited by land ownership. This leads to a skew marriage market, where brides and their families compete for the best grooms. (Why doesn’t polygyny vent the pressure? Because only a vanishingly small proportion of men have so much land=food that they could feed more children than one wife can bear, but a second wife is an extra mouth to feed.) This competition ranges from paying dowries, through oppressing women to signal chastity (basically locking them indoors, and some escort guarding them anytime they venture onto the streets), to abominations such as (trigger warning) FGM (female genital mutilation) and honor killings, to keep the family honor a.k.a. the credibility of the promise that the other daughters of the family are chaste. (/trigger warning) On the upside, surplus labor is available, and there are dowries to pay, so there is a lot of art and other flashy showing-off. (Dad-coy, public.)

Von Förster’s analysis is apparently robust to the fact that he assumed the 3. condition, while as far as I can tell, Europe and temperate East Asia (the typical subjects of this kind of analysis) were in the bulk of the population (peasantry) much closer to the 2. condition for most of their historical existence, with a 3.-type nobility (Chinese foot wrapping, anyone?). In equilibrium, this mistake makes no difference (the labor supply being exhausted looks just like labor having zero marginal returns), but after plagues, the surplus of land frees up less labor in the 3. condition than in the 2. condition.

_______________________________________________________________

If this comment is already so long, I might as well add some wild speculation on the demographic transition. Assuming that its primary cause is the high cost of additional children to working-out-of-home women—and seeing how we already use the public education system during the day—we could just complete the pattern, make them boarding schools and add pre-pre-pre-K until the entry age is 0. Or to be facetious about it, “Orphanages for everyone!”

Orphanages for everyone!

One of the features of many proposed communist utopias. Up to, past, and including The Dispossessed.

There are multiple ways to arrive at this idea. Communist utopians were mostly blank slatists, and thought this would equalize everyone. If someone believes nurture has limited effect, they aren’t very concerned about the perhaps worse shared-environmental effects ruining the people. (On the other hand, the things that we know to matter, notably disease and nutrient deficiency, are problems bureaucracy is extremely good at solving.) High Modernism contains two related approaches: one, “efficiencies of scale and specialization in everything”, figure out how to make childcare come in larger and more similar batches for a longer time; two, “move functions from the household to dedicated institutions”. Natalism and working mothers (-to-be) ask for ways to lower the additional cost of children, which is why we already have some parts of the system.

I think that if the end date for the time axis is increased, say to 2050, the recent data points won’t appear to have deviated from trend so much. The suggestion that the data show the singularity being cancelled may be somewhat sensitive to this choice.

“But the industrial growth mode had one major disadvantage over the Malthusian mode: tractors can’t invent things.”

The core of the article. What if they could?

Good article.

Warning – Point probably made by others before me, but hammering out preliminary thoughts in the 10 free minutes I have today

Let me grant the whole post for the sake of argument. It isn’t really that depressing. Okay, doubling time has remained static. But that is still exponential growth. A while back I idly ran numbers on current growth trends, and if things keep going just as they are, around about the year 2100, Botswana is basically Wakanda.

Even in my own field of science – molecular biology – I am staggered. Exponential increase in our abilities is the norm; half the tools now available for genetic engineering today would have been pure scifi when I was an undergrad (which isn’t that long ago….)

I think that what is sometimes underestimated here is the Giant Head Of Steam progress has going behind it. Pre-existing, proven tech can be exponential in its effects when it is just spread around or mixed up a bit.

Take your point about AI. Before we even get to general AI, we can do some pretty amazing things with AI as it stands – all that’s really required is getting the robotics people to talk with the biology people more than they do (which, again, is happening). Similarly, when someone invents a cool widget in the US, it can now be disseminated throughout the rest of the world, which has an exponential effect all on its own – in some cases leading to weird things, such as the women of Kibera needing remedial classes in keyboard and mouse use, since they’re so used to smartphones and touchscreens…

I’m religiously committed to intervening on points like this, so:

“…once AI comes around – even if it isn’t much smarter than humans…”

AI is already here, and it’s already much smarter than humans (in some ways, and lacks some other human capacities).

If someone wants to learn more about the economic theory side of this phenomenon (and is mathematically prepared), I’d strongly recommend reading Acemoglu’s Introduction to Modern Economic Growth. Honestly this article makes great empirical evidence for the theories given for balanced growth paths, labor shocks and endogenous growth in the book.

Isn’t that last part basically Robin Hanson’s “Age of Em” argument? “More ems = more growth/etc”, except you can substitute AI to some degree.

I’m skeptical of this, because birth rates have fallen world-wide (including in places that have not gone through heavy industrialization). I think it has a lot more to do with reducing early childhood mortality and increased access to reliable contraception – it’s not a coincidence that the countries with the highest fertility rates are the poorest, most conflict-prone parts of the planet, and even their rates are lower than some of the historical rates (Colonial America’s fertility rate was higher than the highest fertility rate country today).

One thing that could screw things up is climate change. If we have to spend an increasing amount of our GDP fixing climate disasters that could soak up a lot of the advantages from AI.

There’s one big mathematical problem with the graphs: “Years before 2020” is doing most of the work toward the right side. Everything looks disappointing if you’re expecting the asymptote to be closer than it is. If you redraw those graphs with “Years before 2050”, the trend looks like it is still plausibly going, just with an outlier post-WW2 blip of superfast growth (similar to the outlier of 1300) that wore off.

(Not saying that this settles matters! Just that the graphs are less damning than they first appear.)
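
To make the sensitivity concrete, here is a minimal sketch. It assumes an idealized hyperbola x(t) = C / (T - t), for which the doubling time at year t is exactly (T - t) / 2. The years 2020 and 2050 are just the two reference dates discussed here, and nothing below uses the post's actual data.

```python
def doubling_time(year, singularity_year):
    """Doubling time of x(t) = C / (singularity_year - t).

    Solving x(t') = 2 * x(t) gives t' - t = (singularity_year - t) / 2,
    i.e. half the time remaining until the asymptote.
    """
    return (singularity_year - year) / 2.0

TRUE_T = 2050     # assumption: where the asymptote "really" sits
PLOTTED_T = 2020  # reference year used on the graph's x-axis

for year in (1000, 1800, 1960, 2000, 2010):
    actual = doubling_time(year, TRUE_T)
    expected = doubling_time(year, PLOTTED_T)
    # The ratio shows how far "behind trend" the point looks on the 2020 axis.
    print(year, actual, expected, round(actual / expected, 2))
```

Under these assumptions the early points barely move, while the most recent ones look several times slower than the "years before 2020" trend line predicts, even though nothing about the underlying hyperbola has changed.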

See the link to the log GDP graph.

I’ve written about this before, but it bears repeating:

Finite systems produce S-curves that resemble exponential growth… until they don’t. Don’t be fooled into fitting an exponential growth curve onto a finite system. You can only get infinite growth in infinite systems.
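
A small sketch of that claim, using a logistic curve as one common example of an S-curve. The starting value, growth rate, and cap are arbitrary illustrative choices, not estimates of anything in the post.

```python
import math

def exponential(t, x0=1.0, r=0.05):
    """Unbounded exponential growth at rate r."""
    return x0 * math.exp(r * t)

def logistic(t, x0=1.0, r=0.05, cap=1000.0):
    """Logistic growth with the same start and nearly the same early rate,
    but limited by a finite cap."""
    return cap / (1.0 + (cap / x0 - 1.0) * math.exp(-r * t))

for t in (0, 20, 60, 150, 300):
    print(t, round(exponential(t), 2), round(logistic(t), 2))
```

Early on the two columns are almost indistinguishable; later the logistic curve flattens out just under the cap while the exponential keeps going.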

If population was going to drive growth, shouldn’t the trend in Africa be reversed? Their population has basically exploded because they got technology that spilled over from other parts of the world, and their population explosion hasn’t resulted in a tech explosion.

They’ve benefited from technology, but it hasn’t pulled them to the technological frontier where most new development occurs.

Under this model why would that matter?

The singularity always has been a somewhat far-fetched idea. In the end, we will end up limited by the same factor that has limited the growth and development of every organism in history: energy availability.

Anybody who is claiming that AI will become infinitely capable, is claiming that its energy efficiency will also become infinite (energy is, after all, finite). I see very little reason to believe that this is possible, and even less reason to believe that it is likely.

Trivial objection: the AI could just capture energy that 2019!humanity isn’t using yet. There’s a bunch of calculations on stuff like crosswind kites (big drop in the cost of wind power, can be deployed in deep water), dynamic tidal power, building solar+HVDC into the Sahara, vortex engines… The theme is that on paper they work out, but “in theory, there is no difference between theory and practice” wariness and general Moloch problems make for glacially slow progress. An agenty AI could cut through most of these difficulties.

And, of course, it could always go out and start building a Dyson swarm.

Population Growth and Technological Change: One Million B.C. to 1990 by Kremer is extremely relevant.

Abstract:

Its explanation of the end of the growth trend is:

I understand using GDP as a crude proxy for comparing nations, but I’m skeptical about using it to compare fundamentally different modes of living and time periods.

If I invent a magical car that uses no gasoline and runs forever without getting old, and costs nothing to make, it should reduce the GDP because there are fewer economic transactions and less money changing hands, but obviously the car is a net good (I guess except for the people making the cars, but that’s more of a distribution question).

It seems like the real problem is that social scientists like to take a simple linear correlation: that today’s more developed countries have higher GDPs than agrarian societies and pretend that it corresponds to any kind of general underlying truth.

Another problem is that non-market production (especially home production) typically doesn’t get counted in GDP. For example, if you hire a cleaning service to scrub your bathtub, that counts in GDP, but if you do it yourself or make your kid do it, it doesn’t count. That’s mostly okay for modern developed countries, where market production is central enough to our concerns and overall prosperity that ignoring non-market production is mostly harmless.

In a subsistence agriculture society, on the other hand, to ignore home production is to ignore most of the goods and services produced by members of that society. A 21st century developed economy is still enormously richer than a subsistence agriculture economy, but the margin is somewhat smaller if you look at total production instead of just comparing market production.

One concern about your argument: global population has continued growing since 1960, and critically the share of the world population participating in scientific research has sky-rocketed. If what matters to hyperbolic growth is growing the population of available scientists, then why hasn’t accepting all the smart people from India and China into western graduate schools done the trick?

What you really want is a way of converting more money into more *brainpower*. And to keep doing that we don’t need AI; a method to increase human intelligence will suffice.

This is also safer from a value-preservation perspective — humans aren’t exactly friendly (or safe), but we at least have human values.

Of course, you also haven’t established that the singularity is a good thing. Perhaps we could stand to grow in wisdom and virtue before launching ourselves to the stars.

Wouldn’t the Malthusian Trap itself discourage technological advance? There’s not much time to innovate or research when you’re living at the subsistence level.

Also, China has been the most populous region/country/(culture?) of the world for at least the last two millennia, yet very few of the major advancements that have increased agricultural and industrial output of the last 500 years originated there. How does that fit in with the (admittedly crude) “more people means more technological advance” thesis?

China seems to have been technologically ahead for most of history, until the Great Divergence; the Great Divergence is admittedly really mysterious and often phrased as “given that China should have been ahead, why was Europe?” I discuss some reasons this might have happened in Part 3 of this post; some popular explanations are unique European customs of marrying later (therefore having fewer but better-educated kids) and Europe’s more limited population encouraging them to invent labor-saving machinery.

I know that it’s not in fashion to have explanations this detailed, but I think that you can also blame some things that don’t rise to the level of economic universalities.

Like, hand-writing and learning to read Chinese ideograms is not actually massively more difficult than hand-writing and learning to read alphabetic languages. But movable-type printing is much less useful if you have to have (and keep organized) several thousand character dies.

It may be that rice is simply less amenable to labor-saving agricultural inventions than wheat is. Etc.

Ian Morris says China was just slightly behind Europe until the fall of Rome, and then stayed ahead during the dark ages, in energy capture (aka food production). After that, Europe became way more effective in applying fuel as an input to food production and jumped back ahead.

bah, my edit was eaten. Anyway, Morris says that Europe caught up around the XVIII century, probably by the combination of colonization and the Renaissance. If there’s a great divergence, it seems to be that the Chinese emperors stopped exploring, while Europe sent Marco Polo there. Insert here the honorable and traditional blaming of Confucianism. Morris himself blames geography, saying that China has more to gain by exploring the Indian Ocean, while Europe has to develop serious navigation chops to go anywhere, but I feel that this is a little ad hoc/non-falsifiable, as you could explore America by navigating near the coast from China to the north east.

Hold a moment. You say the doubling times in the first graph are taken from J Bradford DeLong’s paper ‘Estimates of World GDP, One Million B.C. – Present’. That paper doesn’t include doubling times, but does (as the name implies) include estimates of world real GDP in 1990 international dollars. Looking at the source data I see that you’ve used the second (ex-Nordhaus) set of estimates from pages 7-8 of the paper (except for some reason 1.431111111 has been entered for 10,000 BC rather than 1.38). It’s unclear why you’ve rejected DeLong’s preferred data set, but that’s an aside.

DeLong does not have any GDP data before 1820. What he has done is noted a relationship between population growth and GDP per capita in the period from the early nineteenth century to WW2. He has data for world population going back to a million BC and he extrapolates the observed relationship back (despite it failing after WW2), i.e. his GDP estimates before 1820 have been directly calculated from the global population. He even comments that he is “enough of a Malthusian” to do this. This incidentally is a different approach from that taken in the paper from which he takes his 1820-present GDP figures.

DeLong then makes a correction for the availability of new goods in later periods, but the graph has been drawn based on the “ex-Nordhaus” figures, i.e. without this correction, so that doesn’t matter.

It seems to me that this makes your conclusion circular: the data for global GDP have been calculated on the (explicitly Malthusian) assumption of a linear relationship between population growth and GDP per capita, and you’ve then used this to show that economic growth depends on population growth.

Well, that at the very least explains the paragraph saying that the Romans could have extrapolated to the early XXI century, tho dunno the rest. I think Ian Morris had better data for prehistory-early history, but I also remember it being mixed into his own development index, and dunno if that is translatable or if it suffered interpolations like here.

Nice catch. I was wondering how on earth they had come up with data for world GDP from 10000 BC, when even just measuring it for 3rd world countries today is a challenge, and arguably not a good method of measuring their standard of living.

I think you’ve pretty much explained how this works: loosely construct “GDP” based on population, plot that proxy version of population against actual population, then put them both on log axes and with a huge tolerance for error. Hey look, a straight line! It breaks down in the modern era because the numbers become small enough that you can actually notice the details, and because we have actual data instead of vague proxies.

I agree the DeLong data alone should not be used to prove Malthus; by my understanding, Malthus is already proved by many other things (and I included some evidence in here).

I agree that this alone cannot prove that economic growth is caused by population growth. The argument I meant to make here was that economic growth looks hyperbolic until 1960, and that given models that we might accept on armchair theorizing grounds, this would make sense since population works the same way.

It seems to me that you can’t say economic growth looks hyperbolic for the period 10,000 BC to 1820: all you can say is that population growth looks hyperbolic (as you’ve already observed in section 1) and if economic growth tracked population growth during pre-industrial times, then economic growth was also hyperbolic. In particular, it seems doubtful to extrapolate a trend backwards from 1820 and then note the absence of a discontinuity at the industrial revolution.

I think you’re right: none of the GDP data used prior to 1820 is directly estimated; instead it’s derived from a model of a population-to-GDP causal link that’s estimated from the 1820-1945 data (he does not exactly say when he stops after WW2, so I can’t tell).

So the fact that the pre-1820 GDP matches the trendline for 1820-1960 is not surprising at all: it would, by construction, regardless of (GDP) reality. All we’re seeing is consistent population growth.
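
A toy illustration of why the match is guaranteed by construction. The rule gdp_per_capita = a * population**b below is a hypothetical stand-in, not DeLong’s actual procedure, and the population figures are made up. The point is only that any GDP series computed from population alone will line up perfectly against population on log axes.

```python
import math

def modelled_gdp(population, a=1.0, b=0.5):
    # Hypothetical rule: GDP per capita is a * population**b, so total GDP
    # is population * a * population**b. Not DeLong's actual formula.
    return population * a * population ** b

def log_log_slopes(xs, ys):
    # Slope between each consecutive pair of points on log-log axes.
    pts = list(zip(xs, ys))
    return [
        (math.log(y2) - math.log(y1)) / (math.log(x2) - math.log(x1))
        for (x1, y1), (x2, y2) in zip(pts, pts[1:])
    ]

populations = [5, 50, 170, 300, 500, 900]  # made-up values, in millions
gdps = [modelled_gdp(p) for p in populations]

# Every slope equals 1 + b (up to floating-point rounding), whatever the
# populations were: the straight line carries no independent information.
print(log_log_slopes(populations, gdps))
```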

I reran the numbers on my end using annualized rate rather than doubling-time (which uses more of the data), and while the overall trend is visible, and post 1960 (the last 6 data points) does diverge, there are also centuries-long periods of zero-to-negative GDP growth in the dataset.

Assuming the GDP estimates are accurate, you could also say the “singularity was cancelled” in 200 AD, 1250 AD, and 1340 AD. The trend resumed later. It’s just that we’re looking at the current time period under a microscope.
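
For anyone wanting to repeat the rerun, a minimal sketch of the conversion between the two measures. The function names are my own, and no figures from the dataset are reproduced here. It also shows why annualized rates can use more of the data: a period of flat or shrinking GDP has a perfectly good growth rate but no finite doubling time.

```python
import math

def annualized_rate(value_start, value_end, years):
    """Constant yearly growth rate that takes value_start to value_end."""
    return (value_end / value_start) ** (1.0 / years) - 1.0

def doubling_time(rate):
    """Years needed to double at a constant yearly rate.

    Flat or negative growth never doubles, so return infinity.
    """
    if rate <= 0:
        return math.inf
    return math.log(2) / math.log(1.0 + rate)

rate = annualized_rate(1.0, 2.0, 35)        # a doubling over 35 years
print(rate, doubling_time(rate))            # round-trips to 35 years
print(doubling_time(annualized_rate(1.0, 0.9, 100)))  # shrinking: inf
```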

Looking at the last graph (total economic output in England since 1270) in isolation, I’d say that the Singularity was cancelled some time in the early 20th century, and World War I is the first suspect to leap to mind.

I can see three problems with this model, that cast doubt on its conclusions and may explain the post-1960 trend towards “stagnation”.

The first is that most technological advances are collaborative rather than individual efforts, and the scale of the necessary (or at least observed) collaboration increases as we move up the technology axis. And while 1960 may not mark the death of the “lone genius inventor” model, it may be about the time a majority of inventiveness shifted from lone geniuses and small groups to Big Science.

Second, for a gross economic effect of the sort being measured on these graphs, it is not sufficient for the technological advance to occur, it is necessary that it be broadly adopted across the global economy. The more people there are, the harder it is to convince all of them that the new way is the best way and the easier it is for an advance to wind up stuck in a local economic niche.

And finally, the model assumes that inventors are trying to invent things that promote material wealth and GDP growth, rather than trying to find new ways of ensuring positional or status growth. Not only is that not the case, but as an error it probably isn’t constant in time. Pre-1960, a large fraction of the human race suffered from absolute material deprivation, which highly motivates one to seek material improvements in life. Also, people who literally owe you their lives can probably be persuaded to devote some fraction of those lives to increasing your status. Post-1960, at least in the industrialized world where technological advances occur, most everyone is adequately fed, clothed, and sheltered, has access to health care that will almost certainly get them to threescore and ten with no major crises, has global reach w/re information and even personal travel, and is past the point of diminishing returns on the dollars-to-hedons curve. But status competition is still highly motivating.

And status competition impedes the spread of technological advance, because adopting the New and Better Way diminishes the status of the people at the apex of The Way Things Are Done Now. It probably also impedes research collaboration, to a small extent from its corrosive effect within teams but even more so by ensuring that the teams which are formed and funded are the ones with high-status rather than high-capability inventive leaders.

Nor does positing AI fix this, unless you imagine that AI scientists can write better grant proposals for (presumably still human) funding committees than can human scientists, that AI inventors are better at convincing humans to adopt their ideas than are other humans, and that AI will never ever be used for such base purposes as increasing the status of its human masters (or of the AIs themselves).

If the ceiling of physics is lower than we think, then maybe not so strange. I have a hunch the AI “Singularity” will be largely characterized by doing most already defined things much much faster, more so than discovering entirely new realms of existence.

We seem to be at a point in history where we’ve observed everything, we know what it all does, and we only lack the knowledge of how. Even things like dark energy and dark matter are well defined mysteries that cannot be captured in a bottle and technologically applied. It’s our models that contain holes. What remains hidden from our eyes is defined by the scope of what it hides within.

The spirituality of the Kurzweilian Singularity with its “waking the Universe” I imagine looking less like star beings communing with the fundamental soul of existence and more like robot space imperialism.

and

These two statements are in disagreement. If technological advance is mostly driven by population, as von Foerster’s model assumes, then why do we actually observe that most technological advance comes from developed countries far from the Malthusian trap? Why do 1 billion first-worlders produce more innovations than 6.5 billion second- and third-worlders? Why did plague-ridden 17th century Britain produce more innovations than India?

Good point. I think the idea is that the initial decline in population growth and lack of workers prompted a paradigm shift involving a turn to mechanisation and an increase in GDP growth per capita. But then you are totally right – no explanation is given (as far as I can see) for why this increase in GDP growth per capita prompted continued technological development and economic growth of the countries that are now the first world.

The missing link is I think what others have suggested above – that it is capital investment in innovation and technology that matters, and has far more of an acute effect than total population. Then you can argue that the initial growth and capital gains from the turn to mechanisation, as well as the increase in GDP per capita, enabled sustained investment in ‘researchers’ which drove continued development. You can also bring in colonialism (which is otherwise left out of the thesis) and show that the huge wealth transfers from Asia and America to Europe played a key role in pushing development.

While this is interesting and fun, I wouldn’t take it too seriously, because a lot of this relies on mixing good data with really bad data. Take your years-to-double-GDP graphs: the estimates from 10,000 years ago are not good data, and if those first two data points are off then everything looks different. Say instead of being around 10,000 years to double you are at 6,000 years; then the first dozen data points have a line of an entirely different slope than the whole line, and it starts to look like a paradigm shift (not that the other 10 data points should be taken as great data) at 2,000 years ago. This interpretation would then lead you to conclude that the birth of Christ was a major shift in the world economy or something something this point in the Roman Empire etc. You always want to be skeptical when the worst data is exerting a large influence on your interpretations.

Also extrapolating backward gives you odd results. 100 years ago the doubling time was every 30 years, 1,000 years ago it was every 500 years, 10,000 years ago it was every 10,000 years. That would imply that 100,000 years ago you are looking at a doubling time of something like 200,000 years (right? I’m not great at this type of mental extrapolation). Modern humans are ~200,000 years old so we are still a few hundred thousand years away from the first doubling actually occurring, and our proto-human ancestors are 10 million years away from doubling.
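
The mental extrapolation can be checked with a short sketch. It assumes the three rough figures quoted above lie on a power law (a straight line on log-log axes) and fits that line by ordinary least squares. The fitting choice is my assumption, not something taken from the post.

```python
import math

# (years ago, doubling time in years): the three rough figures quoted above
points = [(100, 30), (1_000, 500), (10_000, 10_000)]

def fit_power_law(pts):
    """Least-squares line through (log10 years_ago, log10 doubling_time)."""
    xs = [math.log10(a) for a, _ in pts]
    ys = [math.log10(d) for _, d in pts]
    n = len(pts)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum(
        (x - mx) ** 2 for x in xs
    )
    return slope, my - slope * mx

def extrapolate(years_ago, slope, intercept):
    """Doubling time implied by the fitted line at the given distance back."""
    return 10 ** (intercept + slope * math.log10(years_ago))

slope, intercept = fit_power_law(points)
print(slope, extrapolate(100_000, slope, intercept))
```

With these three points the fitted line lands somewhat under 200,000 years at 100,000 years ago, so the rough guess above is in the right ballpark.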

It does remind me a little bit of the old “Hockeystick graph” from global warming that used old tree ring data, then switched to actual data (whereas tree rings don’t show nearly the same spike).

Slightly a side-point on the industrial revolution: in Britain, it was caused largely by having too many people. The process was:

1. Landowners start to view their land as an investment by either a) kicking all the peasants out and replacing them with sheep or b) kicking most of the peasants out and using “I could knock this out in my garage”-level improved tools (e.g. seed drills).

2. All the displaced people end up in cities, where enterprising city-dwellers build massive workshops and employ them all doing handicrafts. This is the key point; the first British factories weren’t mechanised.

3. Once you’ve got 200 people all sitting in one place doing the same thing, people start coming up with simple machines to make things go quicker that wouldn’t make sense in a 5-person workshop. That then creates a market for more complicated machines, once “industrial engineer” becomes an actual job.

4. Everyone else in the world sees what’s happening and copies the British, essentially starting the process at stage 3 but scrabbling around for people to work the factories: America used immigrants, Russia had big agrarian reforms etc.

My recollection is that this was taught as the elementary school version of history in British schools, but it seems that everyone else learns it as “some people made some machines, then found some workers” because that’s how it happened everywhere else.

The main point of this is that Britain would be a really poor place to look for an exponential economic boom from industrialisation, as it developed gradually out of handicrafts just after another GDP boom caused by the shift from post-feudalism to proto-agribusiness.

This is almost all backwards. You don’t suddenly start kicking people off land and grazing sheep; the sheep grazing happened as rural areas started emptying out, with people moving to urban areas to work in new or expanding industries. Then people eventually started getting kicked off land for lots of reasons (like maintaining infrastructure being relatively more expensive now, so you switch to low-infrastructure production). I think I have posted some of the commonly accepted numbers for these population areas in open threads before, but the gist is that over long periods of time (hundreds of years) the population growth was basically zero (with lots of variance). New net out-migration of even 1% would make a lot of these places ghost towns, and the out-migration of the early IR was probably a lot higher than that.

The agricultural revolution happened before the industrial revolution, increasing productivity of farmers and thereby making many farmhands redundant. They left for the cities, worked the mines, etc.

Population of England and Wales almost doubled from 1700-1800, and population of London rose by a similar amount. If memory serves it’s not until the IR that urban population growth significantly outstrips total population growth.

Lots of the early industrial revolution happened in ‘overgrown villages’ and not in established cities. Mostly because guilds in cities had the power to restrict trade to their liking.

Eg Sheffield was a long established centre of metalworking. But the industrial revolution happened in Birmingham. Similar around Manchester and textiles.

But a lot of that was IR, not AR driven. The population was still 65% rural in 1800 and that was down to the low 20s by 1900. The population of GB roughly tripled in this period from 10+ to 30+ million, so roughly 6.5 million people were living in rural areas in 1800 and roughly 6.5 million were still living there in 1900. If you find numbers from the 1700s (harder to find) you don’t see this pattern: you see an increase in population and a shift toward urban relative to rural, but you also see a total increase in rural population; it’s just growing at a slower rate than the urban. This makes the argument that the AR was displacing workers difficult.
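
The arithmetic behind the two 6.5 million figures, as a quick sketch. It uses the round totals of 10 and 30 million quoted above, and reads “low 20s” as 22%, which is my assumption.

```python
def rural_population(total_millions, rural_share):
    """Rural headcount in millions, given total population and rural share."""
    return total_millions * rural_share

rural_1800 = rural_population(10, 0.65)  # 65% rural in 1800
rural_1900 = rural_population(30, 0.22)  # "low 20s" taken as 22%: an assumption

# Urban headcount is whatever is left over.
urban_1800 = 10 - rural_1800
urban_1900 = 30 - rural_1900

print(rural_1800, rural_1900)  # both come out near 6.5 million
print(urban_1800, urban_1900)  # nearly all of the growth shows up here
```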

The AR was also not based on labor saving devices, it wasn’t ‘one of you stay and drive the tractor, 9 of you are fired’, it was more an increase in productivity per acre which occurred because of things that probably increased the demand for labor, not decreased.

Huh? Then why were there people protesting about the enclosure movement kicking them off the land they’d previously been able to use, not to mention being evicted in favour of sheep?

Some people were, but it’s not a representative sample and more of a small scale after effect of everything else. Scotland is the marginal land of Britain and the AR drew disproportionately more workers to higher productivity rural areas followed by the IR to higher productivity urban and urbanizing areas, this weakened these areas (economically/politically/militarily) and then came the booting of people for sheep. One of the reasons that sheep took over is that they require very little labor, but lots of land to produce. The falling effective population in a lot of these areas was a main, if not the main, cause of the explosion in the sheep population, not the other way around.

I’m not sure that’s right. The Highland clearances (definitely marginal UK-wide, but the main sheep thing), were in the second half of the 18th Century (the “Year of the Sheep” was 1792), immediately before the IR. In England, two of the agricultural revolution’s big eighteenth century inventions were the horse-drawn hoe and seed drill – both of these are clearly labour-savers (the other two, a plough that needed fewer horses and crop rotation, I would guess are labour-neutral – I can see the argument going the other way for crop rotation if you weren’t leaving land fallow).

London doubling in size is a big clue that something’s going on in the countryside – prior to modern sanitation, cities were population sinks.

Wait, a little less than a year ago Scott reviewed “Capital in the 21st Century” by Piketty, who argued that the economy always grows at 1% to 1.5% a year no matter what since the Industrial Revolution. According to this post, not only was Piketty wrong, he was super-ultra wrong: the economy grows way faster than that, and even after the 1960 slowdown it is still growing at 2%.

Is Piketty using different metrics than von Foerster? Are their theories just disagreeing on a fundamental level? Am I so bad at statistics that I can’t see some sort of obvious way to reconcile their theories?

Are you sure you read that right? My impression was that Piketty’s argument was that the rate of return will eventually surpass economic growth in the long run, not that economic growth rate always has a certain value. Either way, the economy certainly doesn’t constantly grow at 1%. In recent years, economists fret about our economic growth not reaching 3% or higher.

Quoting from Scott’s review of Piketty:

So I’m also totally confused about this discrepancy. Is Piketty’s data (he was measuring from Industrial Revolution on) just better than the really long-scale data presented here?

Piketty’s data is per capita adjusted IIRC, which will obviously make it much lower (and basically zero for much of the timescale discussed here).

Oops – should have seen that. Yes that’s totally correct, it’s GDP per capita growing at a steady rate post Industrial Revolution, which makes perfect sense. Thanks.
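
For reference, the reconciliation in numbers. Total GDP is GDP per capita times population, so the growth factors multiply. The 1.5% per capita figure is the upper end of the range quoted above; the 1% population growth rate is purely an illustrative assumption.

```python
def total_growth(per_capita_growth, population_growth):
    """Total GDP growth rate implied by per capita and population growth.

    GDP = (GDP per capita) * population, so the yearly growth factors
    multiply rather than the rates simply adding.
    """
    return (1 + per_capita_growth) * (1 + population_growth) - 1

# 1.5% per capita growth with an assumed 1% population growth
print(total_growth(0.015, 0.01))  # roughly 2.5% total growth
```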

How well can we distinguish hyperbolic from exponential growth in historical data? I understand that historical estimates for population size and GDP are quite sketchy (and GDP might not even be a good measure of economy size for pre-industrial societies where there is little trade compared to self-production), so can we really tell the two trends apart?

This doesn’t strike me as particularly ironical, rather it looks like a textbook example of interpolation vs. extrapolation failure, which suggests that von Foerster’s model was likely “overfitted” to the data available up to that point but it didn’t capture the underlying dynamics of the phenomenon.

Doubtful. If many different curves fit the same points within the error bars, then I don’t think we can really talk of a resilient trend.

I’m not an expert at historical productivity and resources but I am a scientist, and I’m suspicious of these plots that fit so far back in time.

It seems to me that as you go back further in time, the data should get more noisy, since average past prediction error should be a monotonically increasing function of time. But this implies that getting an accurate fit between the present and the past should be nearly impossible, even if the underlying distribution is identical (eg, there really is just one hyperbolic curve that defines everything) without having problems of reverse causality.

In other words, if you plot the data and naively fit it to a curve, then that curve will be much more influenced by the more recent data, which is dense and precise, and only weakly influenced by the data deep in the past, which is sparse and noisy; but this is reverse causality. The present data should be a function of past data, not the reverse.

If you just extrapolate from past data, then it should be so noisy that it would be very unlikely to get the right fit for the modern data, even if they are from the same underlying distribution, simply due to noise. You’re effectively trying to fit a distribution from its tail, which is very hard to do.

Is there something I’m missing here?

The piece I’m missing in all this is the opacity (to me) of how GDP growth curves depend on decisions about the value of new technologies. Not even the wealthiest monarch could buy a refrigerator, or a phone, or a car in 1750. Those things didn’t exist. Now you can buy a large home refrigerator for some reasonable fraction of median monthly household income. I haven’t seen the principled argument for how you can turn “no refrigerators, at all” to “cheap, ubiquitous refrigerators” into a straight line, or a hyperbolic line, or any kind of line. And it seems that the choices that need to be made in evaluating the GDP-associated-value of thousands of novel products that have arisen over the last 1000 years are a fraught space of opportunities to fudge details to get exactly the curve shape that you want. Or, more specifically, to create straight continuous lines when maybe there should be a series of massive jump discontinuities coinciding with significant inventions.

I understand that economists need some way of estimating the value of money going back in time. It just raises red flags when you start running thought experiments. Take two time travelers, one starting in 1750 and one starting today. They swap places, the one from the future bringing $1000 into the past, the one from the past bringing $1000 into the future. (“Don’t you mean $1000 “real”, 2019 dollars?” Isn’t that the very question?) The standard calculations for GDP would ask how many bushels of cotton (or whatever) you could buy with that money. They wouldn’t ask how many smartphones you can buy in 1750, because you would get a divide-by-zero error when you compared (smartphones per $1000 in 2019 / smartphones per $1000 in 1750). Divide-by-zero errors seem like a serious problem in general.
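
A sketch of where the divide-by-zero shows up. The function name and every quantity below are hypothetical, chosen only to illustrate the thought experiment; this is not how any statistical agency actually builds a price index.

```python
def purchasing_power_ratio(units_per_1000_now, units_per_1000_then):
    """Ratio of how much of a good $1000 buys now versus then.

    Returns None when the good could not be bought at all in the earlier
    period, since the ratio is then undefined (division by zero).
    """
    if units_per_1000_then == 0:
        return None
    return units_per_1000_now / units_per_1000_then

# Hypothetical quantities, for illustration only: (now, then)
basket = {
    "cotton (bushels)": (400, 200),
    "smartphones": (2, 0),
}

for good, (now, then) in basket.items():
    print(good, purchasing_power_ratio(now, then))
```

Any index built from such ratios has to either leave the undefined goods out or substitute some stand-in for them, and that choice is exactly where the judgment calls come in.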

refrigerators didn’t exist in 1750… but icehouses did.

refrigerators with [insert addon feature here] didn’t exist before the first fridge with that feature.. but the price/value isn’t utterly disconnected from the price of every other fridge that existed before that.

You can sort of go the other way: what’s the cash value of lost works of art if your time traveler brings lost paintings into the future?

There was no price for them the day before the time traveler arrived … but we can still get a decent figure from cases where lost works of art have resurfaced by more conventional means, like turning up in someone’s shed.

I have a strong intuition that a refrigerator is not just a better icebox. For one thing, an icebox cannot ever freeze meat. Are all food-preserving technologies the same thing? Is a refrigerator just a very high-tech form of salt?

Anyway, it’s easy to find something that’s truly discontinuous, that truly has no antecedent. A clock is not just a better sundial. A car is not just a better horse. Antibiotics are not just better leeches. There are notes of similarity but these similarities are more misleading than they are helpful.

If I try to steelman the position that I am criticizing, it would be something like this: You can’t say that the worldwide GDP per capita in 2019, in real dollars, is 50 times more than the worldwide GDP per capita in 1 A.D., in real dollars. It obviously makes no sense. Too many things are different and incommensurable. But you can say that worldwide GDP per capita in 2019 is 1.021 times higher than it was in 2018. And in 2018 it was 1.023 times higher than it was in 2017. And you can step backward like this steadily, and when you encounter things that look like discontinuities, like the sudden arrival of affordable refrigerators, you can do your level best to account for their impact on overall quality of life.
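The stepping-backward procedure amounts to multiplying year-over-year factors together. A minimal sketch, using only the two factors quoted above (the function name is made up for illustration):

```python
from math import prod


def chained_ratio(yearly_factors):
    """Multiply year-over-year growth factors into one cumulative ratio."""
    return prod(yearly_factors)


# The two factors quoted in the comment: 2018 -> 2019 and 2017 -> 2018.
factors = [1.021, 1.023]
print(round(chained_ratio(factors), 6))  # 1.044483
```

Each link only compares adjacent years, which is the steelman's point. Any error in handling a single discontinuity is multiplied into every longer comparison that crosses it.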

This is about where my steelman derails itself, though. Imagine that tomorrow somebody found in a cave somewhere trillions of magic lamps, each lamp containing a perfectly obedient and benevolent genie. These lamps would obviously be very cheap. But you would have to agree that “nominal GDP” would almost immediately cease to mean anything at all. You can think of a smartphone or a refrigerator as a kind of smaller, more restricted genie. It introduces a discontinuity which you are only pretending to “smooth out” because the market imposes a price on the new technology.

Why not?

I bite that bullet. Indoor plumbing is just a better chamber pot and servant to empty it out. An iPod is just a cheaper band of musicians that you pay to follow you around full-time and play music. Texting is just a better messenger pigeon.

The alternative is to give up on comparing the present to the past, which is absurd.

I would model refrigeration in terms of falling costs of food.

In terms of measuring ‘cost’, I would model it in terms of the number of hours a median laborer has to work to acquire a particular item of food, before or after taxes depending on your point of view.

Refrigeration and improved farming are both going to make the # of hours lower.

For things like transportation you can make similar calculations in terms of hours of labor needed to pay for a given length of transportation.
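As a rough sketch of the hours-of-labor measure, assuming we have a price and a median hourly wage for each period. All numbers below are invented for illustration and are not historical data.

```python
def hours_of_labor(price, hourly_wage):
    """Hours a laborer must work to afford one unit at the given wage."""
    if hourly_wage <= 0:
        raise ValueError("hourly_wage must be positive")
    return price / hourly_wage


# Hypothetical prices and wages, for illustration only.
before = hours_of_labor(price=2.0, hourly_wage=0.5)   # 4.0 hours
after = hours_of_labor(price=3.0, hourly_wage=15.0)   # 0.2 hours

print(before, after)
print("fold reduction in labor cost:", before / after)
```

The nominal price goes up in this example while the labor cost goes down, which is why the measure sidesteps the question of what a dollar was worth in each period.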

The challenging part comes from comparing luxuries. Automobile companies make new cars that are roughly the same in terms of purchasing power but have more features. However, absence of these adjustments will almost always result in an understatement of growth, so we still have some lower bound to work with.

I find this argument compelling. As a thought experiment, try to imagine some good, preferably a household object, that would be worth $100k, and could be widely available, say to 50% of the US. The quick answer is a general purpose robot, but that avoids the question by answering “general” or all things.

I would suggest that in 1980, a modern phone, as seen on Star Trek, would have seemed like something worth $100k, but of course, phones never cost that much. Mass production has changed the go-to-market strategy of firms. In the past, objects were first made bespoke for the rich, and thus started very expensive, so economists could see the value created (as they only understand prices). Since the 1960s, objects begin as mass-produced items, and target a mass market, so are priced accordingly. Cell phones were never expensive, despite having more computer power than a Cray 2. Self-driving cars will never be expensive. There will not be a time when the rich can buy one for over $1m.

I think this change in marketing strategy has broken how economists value objects. Why isn’t a flat screen TV worth $100k? An old movie theater in your house used to cost that. Why isn’t access to all movies online worth millions? Buying a film library would have cost that in 1990. Why isn’t a modern antibiotic worth $100k? If there were few people who could use it, that is how much it would sell for, as that is how much life-saving drugs that treat few people cost.

It is hard to understand how much change there has been since the 60s, and how much of this change is in items that are made generally available to everyone, as opposed to things that can only be had by the rich. Even when items are sold to the rich at inflated prices, they are essentially identical to the cheap items the poor purchase. My fridge cost $15k, and honestly, is indistinguishable from the one I bought in Costco for $300 twenty years ago in function.

tl;dr: Progress has continued, it is just not valued as it was, as the benefits start more broadly. We are still on track for a singularity in 2020.

Not sure about the singularity. But eg Wikipedia and Google search and email (and especially Gmail) are services that we mostly consume for free, but would have been extremely expensive to replicate only a few decades ago.

This is related to economists discussing inflation as well.

And another reason to prefer nominal GDP targeting for monetary policy instead of inflation targeting.

Cell phones have absolutely been expensive. The first cellphones, in the mid-Eighties, ran upwards of $3000 (in Eighties money!), and those were evolutions of car phones (available since the Forties) which started out at truly ridiculous prices. They still cost around a thousand dollars by the late Nineties; prices wouldn’t come down to the few hundred we’re familiar with until the early- to mid-2000s, and the type of phone you’d get for that money then would be basically free now.

Some current iPhones are $1000 so, comparably, early cell phones were not expensive. The cost of the service, being able to contact people wherever you are, almost instantly, would have been huge 200 years earlier, and would have dominated warfare, if one side had the technology and the other did not.

Cell phones never cost as much as they could have cost had their marketeers tried to maximize the price, as opposed to maximizing total revenue.

Pointing to a current-gen iPhone and saying that it’s as expensive as a 1999 Nokia is kind of like pointing to a high-end workstation or gaming rig and saying it costs as much as a first-gen Apple Macintosh. It’s technically true but it glosses over huge differences in capability and niche; the closest equivalent of that Nokia runs about twenty bucks now, and even that will come loaded with a browser and email capability and a bunch of other crap that would have blown my mind in 1999 (not that I owned a cellphone then).

Saying that cellphones were never toys for the wealthy is simply not true. It is true that there were never hand-built bespoke cellphones, but that’s mostly true because the hardware was too physically large to be handheld when cell technology was in its bespoke phase (so it ended up in cars instead).

The name for the concept you guys are talking about is a current-weighted price index, or Paasche price index, as opposed to the GDP numbers you normally see, which are adjusted with a base-weighted, or Laspeyres, inflation index. PPI and CPI are both base-weighted.

A Paasche index takes into account, for example, how do you compare free videos being watched on YouTube to VHS rentals in the past, or compare the cost of sending a letter in postage to the cost of a fax transmission to the cost of an email today.
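For readers who have not met the two indices, here is a minimal sketch of the textbook formulas. The two-good basket and every number in it are hypothetical, chosen only so the indices visibly disagree.

```python
def laspeyres(p0, p1, q0):
    """Base-weighted index: base-period basket priced at new vs. old prices."""
    return sum(p1[g] * q0[g] for g in q0) / sum(p0[g] * q0[g] for g in q0)


def paasche(p0, p1, q1):
    """Current-weighted index: current basket priced at new vs. old prices."""
    return sum(p1[g] * q1[g] for g in q1) / sum(p0[g] * q1[g] for g in q1)


# Hypothetical prices (p) and quantities (q) in a base period 0 and a current period 1.
p0 = {"bread": 1.0, "video": 4.0}
p1 = {"bread": 2.0, "video": 1.0}
q0 = {"bread": 10, "video": 2}
q1 = {"bread": 8, "video": 20}

print(round(laspeyres(p0, p1, q0), 3))  # 1.222
print(round(paasche(p0, p1, q1), 3))    # 0.409
```

With the old basket, prices appear to have risen about 22%. With the new basket, which is heavy on the good that got cheap, they appear to have fallen by more than half. Note that both formulas as written still need a price for every good in both periods.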

Go one level of abstraction up. You aren’t buying a refrigerator, you’re buying fresh food. Or if it’s not enough, you buy “level of comfort”, which is comparable. Not item-by-item, I’m guessing a Roman patrician would have less ice cream in the summer but more… whatever he had. It’s a fair guess he still came ahead (like others said, he probably had an icebox). So you can find a level where it’s comparable to somebody that can afford a fridge.

In the end you’ll probably have to give up comparing fridges with iceboxes and will have to compare costs of lifestyles – fridge plus vacuum plus microwave equals small merchant with two slaves, for a total cost of $20000 per year or 5000 denarii per year.

I’m old enough to have beaten carpets as a child. Vacuum cleaners are far superior. Getting rid of a wood fire is a huge step forward.

I see the idea of comparing lifestyles, but this is very hard to do, as almost no-one remembers quite what it was like to live in the conditions that were common from 1500BC to 1600AD. During that time, there was essentially no change. The houses I saw in Akrotiri are just as nice (possibly nicer if murals are counted) as the houses from Northern Europe in 1600AD, one of which I grew up in.

That comparison difficulty is true, but you can approximate some of it by looking at how people live today in some very poor locations. Dirt floors, walking long distances to fetch potable water, etc…

I would advise caution when using any data pulled from OurWorldInData, they are a great example of the Garbage In, Garbage Out problem. They combine:

1) compiling data without regard for source quality

2) lacking the motivation or culture to do any sanity check of the results

3) ignoring people that point out mistakes

For a glaring example, see this page War and Peace, especially figure 1. The Taiping Rebellion circle is quite small, smaller than the Holocaust’s. The best estimates of the casualties are 20 to 30 million (see WP), but the source they use reports 2 million, all military, and no civilian casualties.

And to stay in China: where are the other revolts? the death toll of the Ming’s downfall?

To be fair, my impression is that the error bar for Taiping Rebellion casualty estimates is enormous. That said, 2 million does sound pretty damn questionable.

It’s been happening for a long time.

Anyhow, if there is no singularity of some sort this century, it would seem that dysgenic decline will see a period of technological stagnation lasting centuries. Fertility rates will increase, and Malthusian conditions will be reimposed, as the population drifts up to the carrying capacity of the industrial economy. Then technological progress will set off again.

PS. These sorts of models have come a long way since then (e.g. see cliodynamics). Intro/book review here.

While we do need a way to turn money into research that is better than colleges, I do not believe it has to be AI. My suspicion is that our research institutions simply don’t scale as well as many other institutions we have. So they’re increasingly less adequate to the amounts of effort required to reach fruit that are less low-hanging.

We’re good at building institutions that nobody really fully understands. They work fine as long as each part of the system has somebody who understands it well enough. Maybe successful research depends on having a relatively large scope of understanding of the field? And our larger research institutions lack that because, in attempting to emulate the architecture of other large institutions, they have to make their members either specialists or administrators?

Companies are pretty good at innovating. Not only in the cutting-edge research sense, but in the sense of finding new things people want. To give a really simple example: Uber’s competition to established, heavily regulated taxis wasn’t any triumph of science, but it did increase productivity.

Given the replication crisis in a lot of softer fields of science, I am hopeful that machine learning will give them a big boost in the near future.

Eg Facebook is collecting a lot of data and finding out things that would be in the softer fields of science if they were to be published.

I am hopeful for machine learning not so much because of any specific result, but because its practitioners are obsessed with avoiding overfitting; and are committed to techniques like hold-out sets. That’s the very opposite of p-hacking in science.

Part of the reason, I suspect, is that ML is often used to make money. So there’s an immediate feedback loop that makes people care about their results being real; instead of just whether they can publish.
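A toy illustration of why hold-out sets guard against the p-hacking failure mode, using only the standard library. Everything here is made up for the sketch: the "hypotheses" are pure coin flips, and the helper names are not from any ML library.

```python
import random


def split_holdout(rows, holdout_fraction=0.25, seed=0):
    """Shuffle a copy of rows and split it into (train, holdout)."""
    rng = random.Random(seed)
    shuffled = list(rows)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * (1 - holdout_fraction))
    return shuffled[:cut], shuffled[cut:]


def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


rng = random.Random(1)
n_rows, n_candidates = 80, 200
labels = [rng.randint(0, 1) for _ in range(n_rows)]
# Each candidate "hypothesis" is pure noise, unrelated to the labels.
candidates = [[rng.randint(0, 1) for _ in range(n_rows)]
              for _ in range(n_candidates)]

train_idx, holdout_idx = split_holdout(range(n_rows))


def score(candidate, idx):
    """Accuracy of one candidate restricted to the given row indices."""
    return accuracy([candidate[i] for i in idx], [labels[i] for i in idx])


# Keep whichever noise hypothesis looks best on the training rows.
best = max(candidates, key=lambda c: score(c, train_idx))
print("train accuracy of the selected hypothesis:", score(best, train_idx))
print("hold-out accuracy of the same hypothesis:", score(best, holdout_idx))
```

Picking the best of 200 noise hypotheses will usually produce a training score that looks well above chance, while the hold-out score should hover near 50%, since nothing real was found. Publishing only the first number is roughly what p-hacking amounts to.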

One of the GPT-2 demonstrations that had me very interested was the auto-summary and being able to answer questions about a text.

If the quality is good have you any idea how valuable it would be to be able to point something like that at a few thousand research papers and ask a set of simple questions about their text?

Researchers can’t keep track of everything published in the field… but auto-curation (for example, to spot common bad practices), filtering, and then being able to ask direct questions about a corpus would save more researcher time than you could imagine.

The counter-argument is that major scientific breakthroughs come as a result of pure-research, which isn’t something companies tend to do very often. Product development takes existing technologies and improves them.

The classic example is the internet and computing, which began as something fairly expensive and impractical that only a technologically minded military would have the resources and willingness to invest in, but once these things were developed and became economical, the private economy took over in innovation.

I bring this up not necessarily because I agree w/ it 100%