I.

Allan Crossman calls parapsychology the control group for science.

That is, in, let’s say, a drug-testing experiment, you give some people the drug and they recover. That doesn’t tell you much until you give some other people a placebo drug you know doesn’t work – but which they themselves believe in – and see how many of them recover. That number tells you how many people will recover whether the drug works or not. Unless people on your real drug do significantly better than people on the placebo drug, you haven’t found anything.

On the meta-level, you’re studying some phenomenon and you get some positive findings. That doesn’t tell you much until you take some other researchers who are studying a phenomenon you know doesn’t exist – but which they themselves believe in – and see how many of them get positive findings. That number tells you how many studies will discover positive results whether the phenomenon is real or not. Unless studies of the real phenomenon do significantly better than studies of the placebo phenomenon, you haven’t found anything.
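The comparison in the last two paragraphs comes down to testing whether two rates differ. Here is a minimal sketch of a two-proportion z-test (normal approximation). The counts are made up for illustration: 75 of 100 recover on the drug and 60 of 100 on the placebo. At the meta-level, the same function would compare the share of positive studies in a real field against the share in a "placebo" field.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p) for H0: both groups share one success rate."""
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    # Under H0 both groups are estimated with a single pooled rate.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical counts: drug group first, placebo group second.
z, p = two_proportion_z_test(75, 100, 60, 100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these numbers the drug clears p < 0.05, but only because the placebo rate was measured too. Without it, "75% recovered" says nothing.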

Trying to set up placebo science would be a logistical nightmare. You’d have to find a phenomenon that definitely doesn’t exist, somehow convince a whole community of scientists across the world that it does, and fund them to study it for a couple of decades without them figuring it out.

Luckily we have a natural experiment in the form of parapsychology – the study of psychic phenomena – which most reasonable people believe don’t exist, but which a community of practicing scientists believes in and publishes papers on all the time.

The results are pretty dismal. Parapsychologists are able to produce experimental evidence for psychic phenomena about as easily as normal scientists are able to produce such evidence for normal, non-psychic phenomena. This suggests the existence of a very large “placebo effect” in science – i.e., with enough energy focused on a subject, you can always produce “experimental evidence” for it that meets the usual scientific standards. As Eliezer Yudkowsky puts it:

Parapsychologists are constantly protesting that they are playing by all the standard scientific rules, and yet their results are being ignored – that they are unfairly being held to higher standards than everyone else. I’m willing to believe that. It just means that the standard statistical methods of science are so weak and flawed as to permit a field of study to sustain itself in the complete absence of any subject matter.

These sorts of thoughts have become more common lately in different fields. Psychologists admit to a crisis of replication as some of their most interesting findings turn out to be spurious. And in medicine, John Ioannidis and others have been criticizing the research for a decade now and telling everyone they need to up their standards.

“Up your standards” has been a complicated demand that cashes out in a lot of technical ways. But there is broad agreement among the most intelligent voices I read (1, 2, 3, 4, 5) about a couple of promising directions we could go:

1. Demand very large sample size.

2. Demand replication, preferably exact replication, most preferably multiple exact replications.

3. Trust systematic reviews and meta-analyses rather than individual studies. Meta-analyses must prove homogeneity of the studies they analyze.

4. Use Bayesian rather than frequentist analysis, or even combine both techniques.

5. Stricter p-value criteria. It is far too easy to massage p-values to get less than 0.05. Also, make meta-analyses look for “p-hacking” by examining the distribution of p-values in the included studies.

6. Require pre-registration of trials.

7. Address publication bias by searching for unpublished trials, displaying funnel plots, and using statistics like “fail-safe N” to investigate the possibility of suppressed research.

8. Do heterogeneity analyses or at least observe and account for differences in the studies you analyze.

9. Demand randomized controlled trials. None of this “correlated even after we adjust for confounders” BS.

10. Stricter effect size criteria. It’s easy to get small effect sizes in anything.

If we follow these ten commandments, then we avoid the problems that allowed parapsychology, and probably a whole host of other problems we don’t know about, to sneak past the scientific gatekeepers.

Well, what now, motherfuckers?

II.

Bem, Tressoldi, Rabeyron, and Duggan (2014), full text freely available for download, is parapsychology’s way of saying “thanks but no thanks” to the idea of a more rigorous scientific paradigm making them quietly wither away.

You might remember Bem as the prestigious establishment psychologist who decided to try his hand at parapsychology and to his and everyone else’s surprise got positive results. Everyone had a lot of criticisms, some of which were very very good, and the study failed replication several times. Case closed, right?

Earlier this month Bem came back with a meta-analysis of ninety replications from tens of thousands of participants in thirty-three laboratories in fourteen countries confirming his original finding, p < 1.2 * 10^-10, Bayes factor 7.4 * 10^9, funnel plot beautifully symmetrical, p-hacking curve nice and right-skewed, Orwin fail-safe n of 559, et cetera, et cetera, et cetera.
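Orwin’s fail-safe n asks how many unpublished studies, averaging some assumed effect (usually zero), would have to be sitting in file drawers before they dragged the mean effect down to a "trivial" cutoff. Below is a minimal sketch of the classic unweighted formula, N = k(mean − criterion) / (criterion − missing). The inputs are hypothetical. Bem’s figure of 559 depends on a cutoff and mean that this post does not give, so the sketch does not try to reproduce it.

```python
def orwin_fail_safe_n(k: int, mean_effect: float, criterion: float,
                      missing_effect: float = 0.0) -> float:
    """Number of unretrieved studies averaging `missing_effect` needed to pull
    the unweighted mean effect of `k` retrieved studies down to `criterion`."""
    if not missing_effect < criterion < mean_effect:
        raise ValueError("need missing_effect < criterion < mean_effect")
    # Solve (k * mean + N * missing) / (k + N) = criterion for N.
    return k * (mean_effect - criterion) / (criterion - missing_effect)

# Hypothetical inputs (not Bem's): 90 studies, mean d = 0.09, "trivial" d = 0.01.
print(round(orwin_fail_safe_n(90, 0.09, 0.01)))
```

The statistic only counts missing studies. It says nothing about whether the studies that were found were sound.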

By my count, Bem follows all of the commandments except [6] and [10]. He apologizes for not using pre-registration, but says it’s okay because the studies were exact replications of a previous study, which makes it impossible for an unsavory researcher to change the parameters halfway through and so does pretty much the same job. And he apologizes for the small effect size but points out that some effect sizes are legitimately very small, that this one is no smaller than a lot of other commonly accepted results, and that a low enough p-value ought to make up for a low effect size.

This is far better than the average meta-analysis. Bem has always been pretty careful and this is no exception. Yet its conclusion is that psychic powers exist.

So – once again – what now, motherfuckers?

III.

In retrospect, that list of ways to fix science above was a little optimistic.

The first eight items (large sample sizes, replications, low p-values, Bayesian statistics, meta-analysis, pre-registration, publication bias, heterogeneity) all try to solve the same problem: accidentally mistaking noise in the data for a signal.

We’ve placed so much emphasis on not mistaking noise for signal that when someone like Bem hands us a beautiful, perfectly clear signal on a silver platter, it briefly stuns us. “Wow, of the three hundred different terrible ways to mistake noise for signal, Bem has proven beyond a shadow of a doubt he hasn’t done any of them.” And we get so stunned we’re likely to forget that this is only part of the battle.

Bem definitely picked up a signal. The only question is whether it’s a signal of psi, or a signal of poor experimental technique.

None of these commandments even touch poor experimental technique – or confounding, or whatever you want to call it. If an experiment is confounded, if it produces a strong signal even when its experimental hypothesis is false, then using a larger sample size will just make that signal even stronger.

Replicating it will just reproduce the confounded results again.

Low p-values will be easy to get if you perform the confounded experiment on a large enough scale.

Meta-analyses of confounded studies will obey the immortal law of “garbage in, garbage out”.

Pre-registration only assures that your study will not get any worse than it was the first time you thought of it, which may be very bad indeed.

Searching for publication bias only means you will get all of the confounded studies, instead of just some of them.

Heterogeneity just tells you whether all of the studies were confounded about the same amount.
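The first few of these points (a bigger sample only sharpens a confounded signal, and low p-values come easily at scale) take only a few lines to check. Here is a minimal sketch. It assumes a hypothetical confound that shifts one group by 0.1 standard deviations while the true effect is zero. It also assumes, for simplicity, that the observed difference lands exactly on that bias. The function name is illustrative.

```python
from math import erfc, sqrt

def p_value_at_bias(bias_d: float, n_per_group: int) -> float:
    """Two-sided p-value of a two-sample z-test (unit variance, equal groups)
    when the observed mean difference equals a systematic bias of bias_d SDs."""
    z = bias_d / sqrt(2 / n_per_group)  # SE of a difference in means is sqrt(2/n)
    return erfc(z / sqrt(2))            # equals 2 * (1 - Phi(z)) for z >= 0, without underflow

# Hypothetical confound: 0.1 SD of bias, no real effect at all.
for n in (50, 500, 5_000, 50_000):
    print(f"n = {n:>6} per group: p = {p_value_at_bias(0.1, n):.1e}")
```

Nothing in this calculation can tell a real 0.1 SD effect from a 0.1 SD confound. That is the whole problem: more data just makes the confound look more certain.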

Bayesian statistics, alone among these first eight, ought to be able to help with this problem. After all, a good Bayesian should be able to say “Well, I got some impressive results, but my prior for psi is very low, so this raises my belief in psi slightly, but raises my belief that the experiments were confounded a lot.”
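The "good Bayesian" reasoning above can be sketched with three competing hypotheses instead of two. All priors and likelihoods below are hypothetical and chosen only to show the shape of the update. A strongly positive meta-analysis is assumed to be equally likely whether psi is real or the studies share a systematic flaw. It is assumed to be a billion times less likely if psi is absent and the studies are clean.

```python
def posterior(priors: dict[str, float], likelihoods: dict[str, float]) -> dict[str, float]:
    """Bayes' rule over a finite set of mutually exclusive hypotheses."""
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    total = sum(unnormalized.values())
    return {h: w / total for h, w in unnormalized.items()}

# Hypothetical numbers, for illustration only.
priors = {
    "psi": 1e-20,                     # psi is real
    "confounded": 0.01,               # no psi, but the studies share a systematic flaw
    "clean_null": 1 - 0.01 - 1e-20,   # no psi, and the studies are sound
}
# Probability of seeing a meta-analysis this positive under each hypothesis.
likelihoods = {"psi": 0.5, "confounded": 0.5, "clean_null": 0.5e-9}

post = posterior(priors, likelihoods)
for h in priors:
    print(f"{h:>10}: prior {priors[h]:.1e} -> posterior {post[h]:.1e}")
```

With these inputs, psi rises from 10^-20 to about 10^-18, while "confounded" goes from 1% to nearly 100%. The 10^9 Bayes factor against the clean null is absorbed almost entirely by the confound hypothesis.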

Unfortunately, good Bayesians are hard to come by, and the researchers here seem to be making some serious mistakes. Here’s Bem:

An opportunity to calculate an approximate answer to this question emerges from a Bayesian critique of Bem’s (2011) experiments by Wagenmakers, Wetzels, Borsboom, & van der Maas (2011). Although Wagenmakers et al. did not explicitly claim psi to be impossible, they came very close by setting their prior odds at 10^20 against the psi hypothesis. The Bayes Factor for our full database is approximately 10^9 in favor of the psi hypothesis (Table 1), which implies that our meta-analysis should lower their posterior odds against the psi hypothesis to 10^11.

Let me shame both participants in this debate.

Bem, you are abusing Bayes factor. If Wagenmakers uses your 10^9 Bayes factor to adjust from his prior of 10^-20 to 10^-11, then what happens the next time you come up with another database of studies supporting your hypothesis? We all know you will, because you’ve amply proven these results weren’t due to chance, so whatever factor produced these results – whether real psi or poor experimental technique – will no doubt keep producing them for the next hundred replication attempts. When those come in, does Wagenmakers have to adjust his probability from 10^-11 to 10^-2? When you get another hundred studies, does he have to go from 10^-2 to 10^7? If so, then by conservation of expected evidence he should just update to 10^7 right now – or really to infinity, since you can keep coming up with more studies till the cows come home. But in fact he shouldn’t do that, because at some point his thought process becomes “Okay, I already know that studies of this quality can consistently produce positive findings, so either psi is real or studies of this quality aren’t good enough to disprove it”. This point should probably happen well before he increases his probability by a factor of 10^9. See Confidence Levels Inside And Outside An Argument for this argument made in greater detail.
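The naive update and the saturating one described above can be compared directly over several batches of equally strong studies. Here is a sketch with hypothetical priors: psi at 10^-20, "confounded" at 1%, and the clean null taking the remainder. It assumes a Bayes factor of 10^9 per batch against the clean null. The naive version tracks log10 odds on psi. The mixture version tracks the probability of psi.

```python
def naive_log10_odds(prior_log10: float, log10_bf: float, batches: int) -> list[float]:
    """Multiply the odds on psi by the same Bayes factor once per batch (log10 scale)."""
    return [prior_log10 + log10_bf * b for b in range(batches + 1)]

def mixture_psi_probability(batches: int, p_psi: float = 1e-20, p_conf: float = 0.01,
                            bf_vs_clean: float = 1e9) -> list[float]:
    """P(psi) after each batch when a 'confounded' hypothesis predicts positive
    batches exactly as well as psi does; only the clean null is penalized."""
    p_clean = 1.0 - p_psi - p_conf
    out = []
    for b in range(batches + 1):
        clean_weight = p_clean * bf_vs_clean ** (-b)  # clean null loses a factor of 1e9 per batch
        out.append(p_psi / (p_psi + p_conf + clean_weight))
    return out

print(naive_log10_odds(-20, 9, 3))  # [-20, -11, -2, 7]
print([f"{p:.1e}" for p in mixture_psi_probability(3)])
```

After the third batch, the naive line ends at odds of 10^7 to 1 in favor of psi. The mixture flattens out near p_psi / (p_psi + p_conf), about 10^-18, however many batches arrive. Once confounding explains the positive batches as well as psi does, further batches no longer discriminate between them.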

Wagenmakers, you are overconfident. Suppose God came down from Heaven and said in a booming voice “EVERY SINGLE STUDY IN THIS META-ANALYSIS WAS CONDUCTED PERFECTLY WITHOUT FLAWS OR BIAS, AS WAS THE META-ANALYSIS ITSELF.” You would see a p-value of less than 1.2 * 10^-10 and think “I bet that was just coincidence”? And then they could do another study of the same size, also God-certified, returning exactly the same results, and you would say “I bet that was just coincidence too”? YOU ARE NOT THAT CERTAIN OF ANYTHING. Seriously, read the @#!$ing Sequences.

Bayesian statistics, at least the way they are done here, aren’t going to be of much use to anybody.

That leaves randomized controlled trials and effect sizes.

Randomized controlled trials are great. They eliminate most possible confounders in one fell swoop, and are excellent at keeping experimenters honest. Unfortunately, most of the studies in the Bem meta-analysis were already randomized controlled trials.

High effect sizes are really the only thing the Bem study lacks. And it is very hard to have experimental technique so bad that it consistently produces a result with a high effect size.

But as Bem points out, demanding high effect size limits our ability to detect real but low-effect phenomena. Just to give an example, many physics experiments – like the ones that detected the Higgs boson or neutrinos – rely on detecting extremely small perturbations in the natural order, over millions of different trials. Less esoterically, Bem mentions the example of aspirin decreasing heart attack risk, which it definitely does and which is very important, but which has an effect size lower than that of his psi results. If humans have some kind of very weak psionic faculty that under regular conditions operates poorly and inconsistently, but does indeed exist, then excluding it by definition from the realm of things science can discover would be a bad idea.
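Bem’s point about small effects can be made concrete with a standard power calculation. Here is a minimal sketch of the normal-approximation formula for the per-group sample size a two-sided, two-sample test needs to detect a standardized effect d. It assumes the conventional α = 0.05 and 80% power. A t-based calculation gives slightly larger numbers.

```python
from math import ceil
from statistics import NormalDist

def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-group sample size for a two-sided, two-sample test
    to detect standardized effect size d (normal approximation)."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = std_normal.inv_cdf(power)          # about 0.84 for 80% power
    return ceil(2 * ((z_alpha + z_power) / d) ** 2)

for d in (0.8, 0.5, 0.2, 0.1):
    print(f"d = {d}: about {n_per_group(d)} per group")
```

A medium effect needs a few dozen subjects per group. A small one needs well over a thousand. A rule that only accepts large effects would therefore rule out, by construction, the kind of weak phenomenon the paragraph above describes.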

All of these techniques are about reducing the chance of confusing noise for signal. But when we think of them as the be-all and end-all of scientific legitimacy, we end up in awkward situations where they make us super-confident in a study’s accuracy simply because its flaw was one they weren’t geared up to detect. A lot of the time, the problem is something more than just noise.

IV.

Wiseman & Schlitz’s Experimenter Effects And The Remote Detection Of Staring is my favorite parapsychology paper ever and sends me into fits of nervous laughter every time I read it.

The backstory: there is a classic parapsychological experiment where a subject is placed in a room alone, hooked up to a video link. At random times, an experimenter stares at them menacingly through the video link. The hypothesis is that this causes their galvanic skin response (a physiological measure of subconscious anxiety) to increase, even though there is no non-psychic way the subject could know whether the experimenter was staring or not.

Schlitz is a psi believer whose staring experiments had consistently supported the presence of a psychic phenomenon. Wiseman, in accordance with nominative determinism, is a psi skeptic whose staring experiments keep showing nothing and disproving psi. Since they were apparently the only two people in all of parapsychology with a smidgen of curiosity or rationalist virtue, they decided to team up and figure out why they kept getting such different results.

The idea was to plan an experiment together, with both of them agreeing on every single tiny detail. They would then go to a laboratory and set it up, again both keeping close eyes on one another. Finally, they would conduct the experiment in a series of different batches. Half the batches (randomly assigned) would be conducted by Dr. Schlitz, the other half by Dr. Wiseman. Because the two authors had very carefully standardized the setting, apparatus and procedure beforehand, “conducted by” pretty much just meant greeting the participants, giving the experimental instructions, and doing the staring.

The results? Schlitz’s trials found strong evidence of psychic powers, Wiseman’s trials found no evidence whatsoever.

Take a second to reflect on how this makes no sense. Two experimenters in the same laboratory, using the same apparatus, having no contact with the subjects except to introduce themselves and flip a few switches – and whether one or the other was there that day completely altered the result. For a good time, watch the gymnastics they have to do in the paper to make this sound sufficiently sensical to even get published. This is the only journal article I’ve ever read where, in the part of the Discussion section where you’re supposed to propose possible reasons for your findings, both authors suggest maybe their co-author hacked into the computer and altered the results.

While it’s nice to see people exploring Bem’s findings further, this is the experiment people should be replicating ninety times. I expect something would turn up.

As it is, Kennedy and Taddonio list ten similar studies with similar results. One cannot help wondering about publication bias (if the skeptic and the believer got similar results, who cares?). But the phenomenon is sufficiently well known in parapsychology that it has led to its own host of theories about how skeptics emit negative auras, or how the enthusiasm of a proponent is a necessary kindling for psychic powers.

Other fields don’t have this excuse. In psychotherapy, for example, practically the only consistent finding is that whatever kind of psychotherapy the person running the study likes is most effective. Thirty different meta-analyses on the subject have confirmed this with strong effect size (d = 0.54) and good significance (p = .001).

Then there’s Munder (2013), which is a meta-meta-analysis on whether meta-analyses of confounding by researcher allegiance effect were themselves meta-confounded by meta-researcher allegiance effect. He found that indeed, meta-researchers who believed in researcher allegiance effect were more likely to turn up positive results in their studies of researcher allegiance effect (p < .002).

It gets worse. There’s a famous story about an experiment where a scientist told teachers that his advanced psychometric methods had predicted a couple of kids in their class were about to become geniuses (the students were actually chosen at random). He followed the students for the year and found that their intelligence actually increased. This was supposed to be a Cautionary Tale About How Teachers’ Preconceptions Can Affect Children.

Less famous is that the same guy did the same thing with rats. He sent one laboratory a box of rats saying they were specially bred to be ultra-intelligent, and another lab a box of (identical) rats saying they were specially bred to be slow and dumb. Then he had them do standard rat learning tasks, and sure enough the first lab found very impressive results, the second lab very disappointing ones.

This scientist – let’s give his name, Robert Rosenthal – then investigated three hundred forty-five different studies for evidence of the same phenomenon. He found effect sizes of anywhere from 0.15 to 1.7, depending on the type of experiment involved. Note that this could also be phrased as “between twice as strong and twenty times as strong as Bem’s psi effect”. Mysteriously, animal learning experiments displayed the highest effect size, supporting the folk belief that animals are hypersensitive to subtle emotional cues.

Okay, fine. Subtle emotional cues. That’s way more scientific than saying “negative auras”. But the question remains – what went wrong for Schlitz and Wiseman? Even if Schlitz had done everything short of saying “The hypothesis of this experiment is for your skin response to increase when you are being stared at, please increase your skin response at that time,” and subjects had tried to comply, the whole point was that they didn’t know when they were being stared at, because to find that out you’d have to be psychic. And how are these rats figuring out what the experimenters’ subtle emotional cues mean anyway? I can’t figure out people’s subtle emotional cues half the time!

I know that standard practice here is to tell the story of Clever Hans and then say That Is Why We Do Double-Blind Studies. But first of all, I’m pretty sure no one does double-blind studies with rats. Second of all, I think most social psych studies aren’t double blind – I just checked the first one I thought of, Aronson and Steele on stereotype threat, and it certainly wasn’t. Third of all, this effect seems to be just as common in cases where it’s hard to imagine how the researchers’ subtle emotional cues could make a difference. Like Schlitz and Wiseman. Or like the psychotherapy experiments, where most of the subjects were doing therapy with individual psychologists and never even saw whatever prestigious professor was running the study behind the scenes.

I think it’s a combination of subconscious emotional cues, subconscious statistical trickery, perfectly conscious fraud which for all we know happens much more often than detected, and things we haven’t discovered yet which are at least as weird as subconscious emotional cues. But rather than speculate, I prefer to take it as a brute fact. Studies are going to be confounded by the allegiance of the researcher. When researchers who don’t believe something discover it, that’s when it’s worth looking into.

V.

So what exactly happened to Bem?

Although Bem looked hard to find unpublished material, I don’t know if he succeeded. Unpublished material, in this context, has to mean “material published enough for Bem to find it”, which in this case was mostly things presented at conferences. What about results so boring that they were never even mentioned?

And I predict people who believe in parapsychology are more likely to conduct parapsychology experiments than skeptics. Suppose this is true. And further suppose that for some reason, experimenter effect is real and powerful. That means most of the experiments conducted will support Bem’s result. But this is still a weird form of “publication bias” insofar as it ignores the contrary results of hypothetical experiments that were never conducted.
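A toy simulation shows how this kind of "publication bias by who shows up" plays out. Everything here is a hypothetical assumption: the true effect is zero, 80% of the 90 studies are run by believers, and believers’ studies carry an experimenter effect of d = 0.1. Each study’s observed effect size is drawn from a normal distribution with the rough sampling spread of d for 100 subjects per group.

```python
import random
from statistics import fmean

def simulate_literature(n_studies: int = 90, share_believers: float = 0.8,
                        allegiance_d: float = 0.1, n_per_group: int = 100,
                        seed: int = 0) -> tuple[float, int]:
    """Observed effect sizes when psi is absent (true d = 0) but studies run by
    believers carry a hypothetical experimenter-effect bias of allegiance_d.
    Returns (pooled unweighted mean d, number of studies run by believers)."""
    rng = random.Random(seed)
    sd = (2 / n_per_group) ** 0.5  # rough sampling SD of d for two equal groups
    effects, believer_count = [], 0
    for _ in range(n_studies):
        believer = rng.random() < share_believers
        believer_count += believer
        effects.append(rng.gauss(allegiance_d if believer else 0.0, sd))
    return fmean(effects), believer_count

pooled, believers = simulate_literature()
print(f"{believers} of 90 studies run by believers; pooled d = {pooled:.3f}")
```

Every study in this toy literature is honestly reported and none is hidden in a file drawer. The pooled effect is still positive, because the skeptics’ null results mostly belong to experiments nobody ran.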

And worst of all, maybe Bem really did do an excellent job of finding every little two-bit experiment that no journal would take. How much can we trust these non-peer-reviewed procedures?

I looked through his list of ninety studies for all the ones that were both exact replications and had been peer-reviewed (with one caveat to be mentioned later). I found only seven:

Batthyany, Kranz, and Erber: 0.268

Ritchie 1: 0.015

Ritchie 2: -0.219

Ritchie 3: -0.040

Subbotsky 1: 0.279

Subbotsky 2: 0.292

Subbotsky 3: -0.399

Three find large positive effects, two find approximately zero effects, and two find large negative effects. Without doing any calculatin’, this seems pretty darned close to chance to me.
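For anyone who does want to do the calculatin’, here is a minimal sketch using only the seven effect sizes listed above. The mean is unweighted because the sample sizes are not given here. The one-sided sign test treats each study as a fair coin flip under the null.

```python
from math import comb
from statistics import fmean

# Effect sizes of the seven peer-reviewed exact replications listed above.
replications = {
    "Batthyany, Kranz, and Erber": 0.268,
    "Ritchie 1": 0.015,
    "Ritchie 2": -0.219,
    "Ritchie 3": -0.040,
    "Subbotsky 1": 0.279,
    "Subbotsky 2": 0.292,
    "Subbotsky 3": -0.399,
}
effects = list(replications.values())
mean_effect = fmean(effects)             # unweighted mean effect size
positives = sum(d > 0 for d in effects)  # count of studies with d > 0
n = len(effects)
# Probability of at least this many positive results if each were a coin flip.
p_sign = sum(comb(n, i) for i in range(positives, n + 1)) / 2 ** n
print(f"mean d = {mean_effect:.3f}, {positives}/{n} positive, sign-test p = {p_sign:.2f}")
```

The unweighted mean comes out around 0.03, and four positive results out of seven is something a fair coin does half the time.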

Okay, back to that caveat about replications. One of Bem’s strongest points was how many of the studies included were exact replications of his work. This is important because if you do your own novel experiment, it leaves a lot of wiggle room to keep changing the parameters and statistics a bunch of times until you get the effect you want. This is why lots of people want experiments to be preregistered with specific commitments about what you’re going to test and how you’re going to do it. These experiments weren’t preregistered, but conforming to a previously done experiment is a pretty good alternative.

Except that I think the criteria for “replication” here were exceptionally loose. For example, Savva et al. was listed as an “exact replication” of Bem, but it was performed in 2004 – seven years before Bem’s original study took place. I know Bem believes in precognition, but that’s going too far. As far as I can tell “exact replication” here means “kinda similar psionic-y thing”. Also, Bem classily lists his own experiments as exact replications of themselves, which gives a big boost to the “exact replications return the same results as Bem’s original studies” line. I would want to see much stricter criteria for replication before I relax the “preregister your trials” requirement.

(Richard Wiseman – the same guy who provided the negative aura for the Wiseman and Schlitz experiment – has started a pre-register site for Bem replications. He says he has received five of them. This is very promising. There is also a separate pre-register for parapsychology trials in general. I am both extremely pleased at this victory for good science, and ashamed that my own field is apparently behind parapsychology in the “scientific rigor” department.)

That is my best guess at what happened here – a bunch of poor-quality, peer-unreviewed studies that weren’t as exact replications as we would like to believe, all subject to mysterious experimenter effects.

This is not a criticism of Bem or a criticism of parapsychology. It’s something that is inherent to the practice of meta-analysis, and even more, inherent to the practice of science. Other than a few very exceptional large medical trials, there is not a study in the world that would survive the level of criticism I am throwing at Bem right now.

I think Bem is wrong. The level of criticism it would take to prove a wrong study wrong is higher than what almost any existing study can withstand. That is not encouraging for existing studies.

VI.

The motto of the Royal Society – Hooke, Boyle, Newton, some of the people who arguably invented modern science – was nullius in verba, “take no one’s word”.

This was a proper battle cry for seventeenth century scientists. Think about the (admittedly kind of mythologized) history of Science. The scholastics saying that matter was this, or that, and justifying themselves by long treatises about how based on A, B, C, the word of the Bible, Aristotle, self-evident first principles, and the Great Chain of Being all clearly proved their point. Then other scholastics would write different long treatises on how D, E, and F, Plato, St. Augustine, and the proper ordering of angels all indicated that clearly matter was something different. Both groups were pretty sure that the other had made a subtle error of reasoning somewhere, and both groups were perfectly happy to spend centuries debating exactly which one of them it was.

And then Galileo said “Wait a second, instead of debating exactly how objects fall, let’s just drop objects off of something really tall and see what happens”, and after that, Science.

Yes, it’s kind of mythologized. But like all myths, it contains a core of truth. People are terrible. If you let people debate things, they will do it forever, come up with horrible ideas, get them entrenched, play politics with them, and finally reach the point where they’re coming up with theories why people who disagree with them are probably secretly in the pay of the Devil.

Imagine having to conduct the global warming debate, except that you couldn’t appeal to scientific consensus and statistics because scientific consensus and statistics hadn’t been invented yet. In a world without science, everything would be like that.

Heck, just look at philosophy.

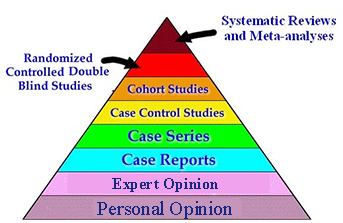

This is the principle behind the Pyramid of Scientific Evidence. The lowest level is your personal opinions, no matter how ironclad you think the logic behind them is. Just above that is expert opinion, because no matter how expert someone is they’re still only human. Above that is anecdotal evidence and case studies, because even though you’re finally getting out of people’s heads, it’s still possible for the content of people’s heads to influence which cases they pay attention to. At each level, we distill away more and more of the human element, until presumably at the top the dross of humanity has been purged away entirely and we end up with pure unadulterated reality.

The Pyramid of Scientific Evidence

And for a while this went well. People would drop things off towers, or see how quickly gases expanded, or observe chimpanzees, or whatever.

Then things started getting more complicated. People started investigating more subtle effects, or effects that shifted with the observer. The scientific community became bigger, not everyone knew everyone anymore, and you needed more journals to find out what other people had done. Statistics became more complicated, allowing the study of noisier data but also bringing more peril. And a lot of science done by smart and honest people ended up being wrong, and we needed to figure out exactly which science that was.

And the result is a lot of essays like this one, where people who think they’re smart take one side of a scientific “controversy” and say which studies you should believe. And then other people take the other side and tell you why you should believe different studies than the first person thought you should believe. And there is much argument and many insults and citing of authorities and interminable debate for, if not centuries, at least a pretty long time.

The highest level of the Pyramid of Scientific Evidence is meta-analysis. But a lot of meta-analyses are crap. This meta-analysis got p < 1.2 * 10^-10 for a conclusion I'm pretty sure is false, and it isn’t even one of the crap ones. Crap meta-analyses look more like this, or even worse.

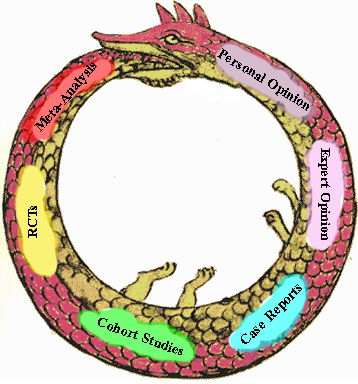

How do I know it’s crap? Well, I use my personal judgment. How do I know my personal judgment is right? Well, a smart well-credentialed person like James Coyne agrees with me. How do I know James Coyne is smart? I can think of lots of cases where he’s been right before. How do I know those count? Well, John Ioannidis has published a lot of studies analyzing the problems with science, and confirmed that cases like the ones Coyne talks about are pretty common. Why can I believe Ioannidis’s studies? Well, there have been good meta-analyses of them. But how do I know if those meta-analyses are crap or not? Well…

The Ouroboros of Scientific Evidence

Science! YOU WERE THE CHOSEN ONE! It was said that you would destroy reliance on biased experts, not join them! Bring balance to epistemology, not leave it in darkness!

I LOVED YOU!!!!

Edit: Conspiracy theory by Andrew Gelman

“…both authors suggest maybe their co-author hacked into the computer and altered the results.”

Actually, it was more collegial than that. Together, they suggest that one of them may have hacked the results.

Check out Rupert Sheldrake re much of this

Wow, what an amazing post. I love your blog, it’s awesome. I just had my heart broken a little though. This is why I’m a physicist. Still hard as hell and not as clear as many people think, but easier than fields involving biological organisms to get some level of confidence in your results. As long as you actually care about reality. Some physicists are just mathematicians, ie string theorists.

Did you ever read the golem? We read it in a philo of science course i took in the education department. (http://www.amazon.com/The-Golem-Should-Science-Classics/dp/1107604656). It focusses a bit too much on the uncertainty side, but, i think too many people have gone from faith in an invisible sky god to faith in “Science”. I have a post on my blog with an essay i wrote for fun in grad school comparing incentives in science vs incentives in free market capitalism. I always found faith in the invisible hand of free market capitalism to cure all human ills to be a bit too much like faith in the invisible sky god, and faith in “science” is right up there. I have faith that, in the long run, science will probably get closer to reflecting how the universe actually works, but not in any particular current paradigm. Skepticism is a virtue in science. Except in climate change, which i consider more like a religion, which i also have post on.

It makes perfect sense. Schlitz has psychic powers. Wiseman doesn’t. They need to redo the experiment, keeping Schlitz as the starer in both groups.

Love your post, and v pleased that you approve of our KPU trial registry 🙂

Just FYI, the other parapsych pre-registry that you refer to (the one Richard Wiseman and I set up for Bem replications) dates back to November 2010 and is no longer active.

“That doesn’t tell you much until you take some other researchers who are studying a phenomenon you know doesn’t exist – but which they themselves believe in – and see how many of them get positive findings.”

This statement made my jaw drop. The illogic is stunning. You have made an assumption, assumed this assumption to be true, and are then deriding the people who are researching the question with an open mind.

I’m pretty sure this doesn’t need to be explained to you, but you don’t, in fact, know that these phenomena don’t exist until you have studied them scientifically. No ifs, ands or buts. Possible bias by researchers has to be taken into account when considering their results, and when they themselves formulate their experiments – in any scientific research.

However, it is ridiculous to assert that because you personally don’t believe in something, anyone trying to determine whether there is a way to scientifically measure and validate alleged phenomena is automatically wrong.

This is a very worrying thought pattern I see from ‘skeptics’ all the time. It is not skepticism at all, it is a kneejerk response to things that threaten their personal world view and belief system. By this definition 99% of the people on the planet are ‘skeptics’, and the only genuine skeptics are those prepared to challenge their own world views.

I’m concerned that someone could actually take that statement seriously, so allow me to assert that to actually prove that a group of researchers’ science is wrong, you have to go into their methodology and conclusions and find errors. To take issue with their conclusions and then assume that, because you don’t like them, their methodology must be flawed is the least scientific approach imaginable.

Sheesh.

Very nice! A couple of other observations. My father was a process development engineer at Dow Chemical. His design work in this area was to take a research result and test its commercial viability in a sizable plant process, a kind of enhanced replication. Researchers thought this was grunt work (the persistent attitude toward replication), and that all that was required was to make what they did in the laboratory bigger. But when you increase the size of a process by 5-7 orders of magnitude, a great many things become different. The lesson is that it isn’t always clear which changes in experimental conditions are important to the outcome, and the opinion of the researcher is a poor guide.

The second observation is from an article I read from the late ’70s or ’80s. It was a test of the hypothesis that niacin in large doses reduced the symptoms of schizophrenia. The design was double blind, and the people who evaluated the improvement didn’t know who was receiving the niacin. However, the waiting room for the people being evaluated would hold 4-5 people at a time. Naturally they talked, and because of the niacin flush, they quickly figured out who was on placebo. It was, incidentally, easy to figure out what the drug was, too. The conclusion was that one-third of the people on placebo broke the blind and went out and bought niacin. No one told the researchers, because there were incentives not to reveal that the blind had been broken. Experimental conditions include everything, not just what the researcher thinks is critical to successful publication.

Also, there is some evidence that placebos work even when people know they are placebos.

A well-known example of this phenomenon is the development of the Haber-Bosch nitrogen fixation process. Haber established the concept on a tabletop and Bosch overcame obstacles to do it large scale. They rightly share the credit, but it’s easy to slip focus back to the “original genius” perspective. Fascinating stories.

The first thing that came to my mind when I read about the weird controversy over Wiseman & Schlitz’s results is that one scientist has psi powers and the other does not, while the subjects are all, on average, equally susceptible. Or it really is a kind of placebo that works telepathically. Like, when the one scientist stares at the subject while thinking “you can feel that I’m staring at you now!”, this is subconsciously sensed. But if the other looks at the screen, he acts more like an observer than an influencer. Still, observing the experiment from the outside by scientific means, one would not see the difference in the “input” but only in the output. However, if so, then a live brain scan of the experimenters would surely be interesting to look at. And again, another dataset to analyse more or less objectively…

Hi Scott,

Regarding your biological concerns (from someone who is highly concerned with biology…):

First, you say “exotic physics.” We, naturally, have to be careful when using the word “exotic” in this context. For example, the “physics” that almost everybody would point out as being “exotic”, namely Quantum Mechanics, is actually far more ubiquitous even than the Almighty Omnipresent Holy Lord Himself! (if He exists).

Then you add that “the amount of clearly visible wiring and genes and brain areas”… …“is obvious to *everyone*”… …“from anatomists (…) to molecular biologists (…) to JUST ANYBODY WHO LOOKS AT (…) eyes.” (emphasis added). And as a consequence, you expect similar *obvious* correlates. I think we have to remember that even almighty stuff isn’t always (surprisingly enough) obviously “perceptible,” especially when some sort of canceling out is at play. For example, we all know that the electromagnetic force is pretty much almighty (far mightier than gravity; and gravity is not exactly a lightweight… pun intended). Yet, were it not for lightning bolts, even fairly advanced human societies might have passed it by completely, without the slightest perception of it. See, we have *obvious* physical apparatus for dealing with air (lungs; inhaling; exhaling; etc.), with water or somewhat solid matter (mouth, teeth, stomach, etc.), with visible light (eyes), with sound (ears), etc. But unlike electric fish, we do not have *obvious* biological machinery to deal with “lightning stuff.” Yet not only is “electricity” itself immensely present in our biological machinery (i.e. even we humans take huge advantage of it), electromagnetism actually knits reality tight; without it, matter would wander astray (even atoms would fall apart). What recent biology seems to be telling us about biological uses of quantum phenomena is that we don’t have any *obvious* correlate to them in terms of biological machinery. Yet the correlates that we do have are not only ubiquitous but essential for life. Enzymes work by taking advantage of quantum tunneling. Photosynthesis, if memory serves, takes advantage of quantum entanglement. And when I say “take advantage”, what I mean is: cannot do without! So, yes, it is speculative and even unlikely, but… it might just be that the correlates are there, and we simply cannot see them yet.

And next you add: “There’s also the question of how we could spend so much evolutionary effort exploiting weird physics to evolve a faculty that doesn’t even really work.” You remind us that psi won’t give us a head start greater than five percent over chance (as it seems; Ganzfeld), and then you mention its (apparent) absence in our ordinary lives, and the possible paradoxes of precognition (short and/or long term). Well, what might be happening (if psi exists…) is that we are not really looking at *how* psi works and at *what for* psi works. For example, electromagnetism does not exist for making lightning… Lightning bolts are just minor manifestations of the greater phenomenon of electromagnetism. They are almost “spin-offs.” The basic and “true” function of electromagnetism in Nature is to knit reality together (especially protons and electrons “directly”, as happens in atoms. And, oddly enough, as a consequence of this knitting, electromagnetism becomes pretty much… hidden!). So maybe the function of psi (i.e. its *main* function in Nature) is not to get humans synched in telepathy or forewarned in precognition or “physically” unencumbered in telekinesis. These might actually be lesser deities, when instead we should rather look for the Big Guy. 🙂

Finally you say something like: “Weird Physics + Invisible Organ-less Non-adaptive-fitness-providing Mechanisms x Precognition x Telepathy x Telekinesis.” Parapsychology does have problems… Admittedly, even according to psi researchers who believe in psi, two chief problems are 1) possible theories for the paranormal and 2) the role of the paranormal in Nature. We have been very clumsy in tackling these issues, IMHO. (Note that I am not a psi researcher. I just consider these issues a concern for all of us, no matter where we stand.) So we are pretty much in the dark, trying to make sense of something that almost everybody agrees… may not even exist! But since we (i.e. some of us) *are* trying to make sense of it, one possibility (aside from the alternatives offered by Scott: poor studies, biased researchers, weird combinations of the two, etc.) that comes to mind when I look at the apparent anomaly in the Ganzfeld database (or at Bem’s results now) is that this anomaly is a very “intentional” phenomenon. What I mean is this: even if you can control electronic devices with the electricity from your neurons (something that we can now routinely accomplish), it takes a lot of practice and “informative feedback” to learn how to master it. Yet, when it comes to shifting the odds in the desired direction in Ganzfeld sessions, we seem to be naturals at that… I think the only biological conclusion that can be drawn from it is that, if psi exists, we all use it routinely. But… maybe we do not use it for the things most people (including almost all parapsychology researchers) believe it is used for.

Anyway, just thoughts…

Best Wishes,

Julio Siqueira

So far as the Invisible Organ is concerned, if we don’t know how psi works, how likely would we be to recognize an organ for it?

Hi Nancy,

That is really an impediment. And just to give an example that makes things far, far worse: we knew pretty well how enzymes work. We knew immensely well (some might say: astronomically well) how quantum mechanics works. Yet no one, for decades, was able to unveil the fact that enzymes make use of quantum mechanics. Now imagine this scenario under the conditions you reminded us of (our not knowing how psi works). The expected result might well be decades or even centuries of searching in the dark.

Some random comments:

Nitpick: I think ‘fail-safe N’ should be avoided whenever possible. It assumes that publication bias does not exist, and so simply doesn’t do what one wants it to do. (See http://arxiv.org/abs/1010.2326 “A brief history of the Fail Safe Number in Applied Research”.)

I agree the Rosenthal results are interesting, but I think the Pygmalion effect is more likely to be an example of violating the commandments & statistical malpractice (Rosenthal also gave us the ‘fail-safe N’…) than of subtle experimenter effects influencing the actual results; see Jussim & Harber 2005, “Teacher Expectations and Self-Fulfilling Prophecies: Knowns and Unknowns, Resolved and Unresolved Controversies” http://www.rci.rutgers.edu/~jussim/Teacher%20Expectations%20PSPR%202005.pdf

Not really. They barely do randomized studies. That’s part of why animal studies suck so hard; see the list of studies in http://www.gwern.net/DNB%20FAQ#fn97

Rosenthal’s fail-safe N should never be used, not because it assumes that publication bias does not exist, but because it rests on the unrealistic assumption that the mean effect size in the unpublished studies is 0. On the contrary, if the true effect size is 0, then the mean effect size in the unpublished studies would be expected to be negative.
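A minimal Python sketch of that objection. Rosenthal’s fail-safe N asks how many unpublished studies averaging Z = 0 would drag Stouffer’s combined Z down to the one-tailed .05 threshold of 1.645. The helper `studies_needed` (a hypothetical name, not from any library) generalises this to an assumed mean Z for the missing studies, so the zero-mean assumption can be compared with a negative one. The ten published studies with Z = 2.0 each are invented for illustration.

```python
import math


def rosenthal_fail_safe_n(z_scores, z_alpha=1.645):
    """Closed-form Rosenthal fail-safe N (missing studies assumed to average Z = 0)."""
    k = len(z_scores)
    # Stouffer's combined Z with N extra zero-Z studies is sum(Z) / sqrt(k + N);
    # setting that equal to z_alpha and solving for N gives this expression.
    return (sum(z_scores) / z_alpha) ** 2 - k


def combined_z(z_scores, n_missing, missing_mean_z):
    """Stouffer's Z after adding n_missing studies whose Z-scores average missing_mean_z."""
    total = sum(z_scores) + n_missing * missing_mean_z
    return total / math.sqrt(len(z_scores) + n_missing)


def studies_needed(z_scores, missing_mean_z, z_alpha=1.645, max_n=1_000_000):
    """Smallest number of missing studies that pushes the combined Z below z_alpha.

    Returns None if max_n studies are not enough (e.g. when missing_mean_z > 0).
    """
    for n in range(max_n + 1):
        if combined_z(z_scores, n, missing_mean_z) < z_alpha:
            return n
    return None


published = [2.0] * 10  # hypothetical: ten published studies, each with Z = 2.0
print(round(rosenthal_fail_safe_n(published), 1))  # closed form, about 137.8
print(studies_needed(published, 0.0))   # 138 zero-mean studies
print(studies_needed(published, -0.5))  # 22 studies averaging Z = -0.5
```

With a zero mean the search agrees with the closed form (rounded up); if the missing studies average even a modestly negative Z, as the comment argues they should under a null effect, only a small fraction as many are needed.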

In the Bem et al. meta-analysis, the authors calculated, in addition to Rosenthal’s fail-safe N, Orwin’s fail-safe N, which in principle can provide a more realistic estimate of the number of unpublished studies because it allows the investigator to set the assumed mean unpublished effect size to a more realistic, negative value. But, bizarrely, Bem et al. set the value to .001, actually assuming that the unpublished studies support the psi hypothesis!
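For comparison, a sketch of Orwin’s fail-safe N in its unweighted, generalised form, N = k(d̄ − d_c)/(d_c − d_m), where k is the number of studies, d̄ their mean effect size, d_c the criterion below which an effect counts as trivial, and d_m the assumed mean effect size of the missing studies. The numbers are invented for illustration and are not Bem et al.’s; the point is only how much N depends on d_m.

```python
def orwin_fail_safe_n(k, mean_effect, criterion, missing_mean=0.0):
    """Orwin's fail-safe N (unweighted form).

    Number of missing studies with mean effect `missing_mean` needed to pull
    the unweighted mean effect of all k + N studies down to `criterion`:
    (k * mean_effect + N * missing_mean) / (k + N) = criterion, solved for N.
    """
    if criterion <= missing_mean:
        raise ValueError("criterion must exceed the assumed missing-study mean")
    return k * (mean_effect - criterion) / (criterion - missing_mean)


# Hypothetical meta-analysis: 10 studies, mean d = 0.20, "trivial" cut-off d = 0.05.
for assumed in (0.001, 0.0, -0.05, -0.10):
    print(assumed, round(orwin_fail_safe_n(10, 0.20, 0.05, assumed), 1))
```

A slightly positive assumed mean inflates N a little relative to zero, while a realistic negative mean cuts it to half or a third in this made-up example.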

Yes, that’s what I mean: publication bias is a concern because it’s a bias (studies which are published are systematically different from the ones which are not), and the fail-safe N ignores this and instead treats the missing studies as if they differed only by something like sampling error.

>Imagine the global warming debate, but you couldn’t appeal to scientific consensus or statistics because you didn’t really understand the science or the statistics, and you just had to take some people who claimed to know what was going on at their verba.

You say this immediately after spending 3 sections proving that even in our world, statistics and consensus don’t actually work, but then don’t mention it in this context even to lampshade it.

There is no way this is accidental, because I know you read Jim’s blog, and his influence on this post is quite apparent, and he makes that argument all the time.

I’ve noticed this habit you have before where you bust out some extremely interesting argument and then fail to even lampshade the obvious implication. It’s not plausible that it’s an accident, but it’s also too weird for it to be deliberate. I’m confused.

I very much doubt Scott reads Jim’s blog, outside of Jim’s responses to Scott.

What influence do you see of Jim on this post?

The analogy of the meta experiment to a control using a placebo is slightly wrong. In giving a subject the placebo, you are causing him to believe it might work. He did not enter the experiment believing it would work. Whereas, the parapsychologists all enter the experiment believing parapsychology is real.

Parapsychologists are not distinguished by the property of believing their hypothesis is correct.

It’s a premise of this essay. “…the study of psychic phenomena – which most reasonable people don’t believe exists but which a community of practicing scientists does and publishes papers on all the time…. I predict people who believe in parapsychology are more likely to conduct parapsychology experiments than skeptics”

Hi Hughw,

I think you are oversimplifying the issue. And, as to (one of) the “premises” of this essay, bear in mind that Scott said *most* reasonable people do not believe in psi. He did not say that *all* reasonable people do not believe in psi. Further, it is said above (in your quote) that the *community* believes in psi. But it is not said that *all* parapsychologists believe in psi.

Even though we all do not believe in God, Angels, and Demons, the Devil is still in the details…

Best,

Julio Siqueira

http://www.criticandokardec.com.br/criticizingskepticism.htm

Psychologists believe in their hypothesis, just like parapsychologists believe in theirs.

For the Wiseman & Schlitz staring study (or “the stink-eye ‘effect’” as I like to call it), although I haven’t looked closely, I think I might have an explanation for how different results could be obtained with “identical” methods. It wasn’t a double-blind study. Although the person receiving the stink-eye was unaware of when they were being stared at, the person generating the stink was able to monitor the micro-expressions of the participants, and so be influenced in when they ‘chose’ to commence with stink-generation. Under this account, there is a causal relationship, but it goes in the reverse direction, and isn’t mediated by anything spooky, except for the spookiness of the exquisite pattern-detecting abilities of brains.

At least in the 1997 paper that I looked at, the experimenters used randomly generated sequences of stare and non-stare periods – i.e. the decision to stare or not was truly random and not at-will.

Hi Scott,

I must say that I am sort of a “believer in psi.” Also, I have read quite a lot about it over the last ten years, and I have been following to a certain extent the psi-believers vs psi-skeptics debate (on the web, in papers, in books like “Psi Wars: Getting to Grips with the Paranormal,” etc.). Further, I have, on some occasions, taken sides rather fiercely on this issue. Yet I must acknowledge that many critiques of psi work are of high value. And I did find your evaluation (article) above very interesting and worthy of respect. I would like to comment on a few points:

“None of these five techniques even touch poor experimental technique – or confounding, or whatever you want to call it. If an experiment is confounded, if it produces a strong signal even when its experimental hypothesis is true, then using a larger sample size will just make that signal even stronger.” … … “Replicating it will just reproduce the confounded results again.”
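The quoted point about confounding can be illustrated with a small Python sketch. It assumes, purely for illustration, a Ganzfeld-style design with a 25% chance hit rate and a hypothetical confound that lifts the observed hit rate to 27% in every run, with no real effect at all. Because the bias is the same in every run, the expected Z-score grows with the square root of the sample size, and independent replications mostly land on the same side of chance. Whether real confounds behave like such a fixed bias is exactly what the reply that follows disputes.

```python
import math
import random

CHANCE = 0.25        # hit rate expected by chance (four-choice design)
BIASED_RATE = 0.27   # hypothetical hit rate produced by a fixed confound


def expected_z(n_trials, true_rate=BIASED_RATE, chance=CHANCE):
    """Expected Z-score of a binomial test when the observed rate is inflated by a fixed bias."""
    return (true_rate - chance) * math.sqrt(n_trials) / math.sqrt(chance * (1 - chance))


def simulate_replication(n_trials, rng, true_rate=BIASED_RATE, chance=CHANCE):
    """Run one confounded 'replication' and return its Z-score against chance."""
    hits = sum(rng.random() < true_rate for _ in range(n_trials))
    return (hits - n_trials * chance) / math.sqrt(n_trials * chance * (1 - chance))


# Larger samples make the spurious signal stronger, not weaker.
for n in (100, 1_000, 10_000):
    print(n, round(expected_z(n), 2))

# Replications with the same confound keep pointing the same way.
rng = random.Random(0)
zs = [simulate_replication(2_000, rng) for _ in range(20)]
print(sum(z > 0 for z in zs), "of 20 replications point the same way")
```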

I believe that, if only confounding were involved, the results would actually drift, and we would not see confirmation after confirmation. It would take more than poor standards to push the results consistently in one direction: it would take some sort of fraud, whether conscious or unconscious.

And the results of the Wiseman and Schlitz work were interesting. It is curious that the work was not heavily replicated (it might be interesting to find out whether that was mostly because of the believers or because of the skeptics…).

I just would like to add, as gentlemanly as possible (and thus, honour the high level of the debate on this page), that I do not share your view regarding Wiseman. But, that is not the issue, anyway.

I also think that Johann made very good contributions to the debate on this page. It is nice that he provided a fair amount of information about quantum-mechanics-based biological phenomena, an area of knowledge that has been growing considerably (and robustly) over the last ten years.

Julio Siqueira

http://www.criticandokardec.com.br/criticizingskepticism.htm

@johann:

Last time I checked, multiplying a positive number by a large constant resulted in a larger number. You need a review of Bayes factors, multiplication, or both.

I don’t think you understand me clearly: the main utility of Bayesian statistics is that we can update a prior to a posterior, by multiplication with a Bayes factor. On a calculational level, this is all that happens, and indeed Scott’s approach doesn’t break from this. However, when it comes to the actual inference, what should happen is more than this; ideally, we should allow our beliefs to be guided by what those numbers actually represent. Because Scott uses two priors, however, the relative odds of his two competing hypotheses (i.e. there is a true effect and there is not) remains the same before and after any particular statistical test of the evidence. Something about this is just not right, IMO.

In practice, I know that it is nonsense to believe what the numbers literally say, without skepticism. People should only take statistics at face-value, for areas that really intrigue them, after having satisfied themselves thoroughly that the experiments under analysis are not explainable on the basis of flaws. But after this has occurred, those numbers have real meaning!

@Johann:

Actually your new post confirms that I did understand you, and that you don’t understand how Bayesian updating works.

In fact the approach Scott proposed for updating his probabilities was dead wrong, because he made the contribution from the new evidence depend on the prior, which violates one tenet of Bayesian inference; and using his method of updating, his posterior probability for psi can never exceed 1/2, which violates another tenet of Bayesian inference.

Bayesian inference always considers (at least) two hypotheses. Often, the second hypothesis is the complement of the first, but this need not be the case. It is perfectly fine to consider the prior odds of an observed effect being due to psi (H1) vs being due to experimental bias (H2). The prior odds is a ratio of two probabilities, P(H1)/P(H2), and is hence a single non-negative number. This number is multiplied by the Bayes factor, which is the ratio of the probability of the data under the psi hypothesis to the probability of the data under the bias hypothesis, and is hence also a non-negative number. Unless this number is 1, or the prior odds 0 or infinity, then multiplying the prior odds by the Bayes factor will result in posterior odds that are different than the prior odds. Clearly, the odds will not remain the same, as you claim.

It may well be that I am technically mistaken in my analysis—I have not deeply studied Bayesian hypothesis testing—but my impression is still that we’re not actually disagreeing on much, although now I have a concern or two about your approach as well. I would be glad to be corrected on any mistake, BTW.

Firstly, I will be as clear as possible about what I mean. I see Scott’s approach as one that conducts two separate hypothesis tests, both correctly performed. My contention, though, is that it is fundamentally wrong to do *both*. It is clear to me that Scott is juggling four subtle hypotheses, when really he’s only interested in two, to start with: psi exists vs. it does not, and invalidating flaws exist vs. they do not. He sets his prior for flaws at 1/10 (implying a prior of 9/10 for no invalidating flaws) and his prior for psi at 1/1000 (implying a prior of 999/1000 for no psi), multiplies both of them by the Bayes factor of a study, let’s say 300, and obtains two sets of posterior odds, let’s say 30 to 1 for flaws vs. no flaws and 3 to 10 for psi vs. no psi.

Now, since the existence of invalidating flaws effectively begets the same conclusion as the non-existence of psi, we can make the following comparison: At the start of the test, the ratio of Scott’s two priors was (1/10)/(1/1000) = 100/1, implying that he favored the flaws hypothesis a hundred times more than the psi hypothesis. Now, the ratio for his posteriors is (30/1)/(3/10) = 100/1, so it is clear that nothing has changed and the very performance of the test was meaningless. If you believe there are likely to be flaws in a study, why update the numbers?
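The arithmetic of this comparison can be checked with a short Python sketch. It converts the two priors (1/10 for flaws, 1/1000 for psi) into odds, multiplies each by the same illustrative Bayes factor of 300, and compares the flaws-to-psi ratio before and after. Using exact odds instead of the rounded figures gives about 111 rather than 100 on both sides; either way the ratio is unchanged, simply because both odds are scaled by the same factor. This checks only the arithmetic, not whether two separate tests are the right framing.

```python
def to_odds(p):
    """Convert a probability to odds in favour."""
    return p / (1 - p)


BAYES_FACTOR = 300  # the illustrative Bayes factor used in the comment

flaw_prior = to_odds(1 / 10)    # 1 : 9
psi_prior = to_odds(1 / 1000)   # 1 : 999

flaw_post = flaw_prior * BAYES_FACTOR  # about 33 : 1
psi_post = psi_prior * BAYES_FACTOR    # about 3 : 10

ratio_before = flaw_prior / psi_prior
ratio_after = flaw_post / psi_post
print(round(ratio_before, 3), round(ratio_after, 3))  # both equal 999/9 = 111
```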

If you saw something different in Scott’s methodology, feel free to explain.

@Johann:

No. There are only two hypotheses under consideration: H1: Results of experiments purporting to show psi are actually due to psi; H2: Such results are due to bias in the experiments.

The two hypotheses quoted above that are complementary to H1 and H2 (ie, the material you have parenthesized) do not enter into the analysis.

No. It works like this: We start with the prior odds of H1 vs H2:

Prior odds = P(H1)/P(H2) = .001/.1 = .01.

We multiply the prior odds by the Bayes factor for H1 vs H2. If D stands for our observed data (in this case, the results of the experiments in the meta-analysis), then

Bayes factor = P(D|H1)/P(D|H2) = 300.

And, by the odds form of Bayes’ theorem, we multiply the prior odds by the Bayes factor to obtain the posterior odds of H1 vs H2:

Posterior odds = P(H1|D)/P(H2|D) = 300 × .01 = 3.

So, prior to observing the data, we believed that results purporting to show psi were 100 times as likely to be due to bias as to psi. After observing the data, we believe that results purporting to show psi are 3 times as likely to be due to psi as to bias. Our new observations have increased our belief that the results are due to psi, relative to our belief that they are due to bias, by a factor of 300.

Hopefully that makes sense to you.
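A minimal Python sketch of the odds-form update, using the probabilities stated in the reply, P(H1) = .001 (psi) and P(H2) = .1 (bias), and the illustrative Bayes factor of 300. The function name is ours, not from any library. Dividing the two priors gives prior odds of .01, and the posterior odds are 300 times that.

```python
def odds_form_update(p_h1, p_h2, bayes_factor):
    """Odds form of Bayes' theorem for two hypotheses H1 and H2.

    prior odds     = P(H1) / P(H2)
    Bayes factor   = P(D|H1) / P(D|H2)
    posterior odds = prior odds * Bayes factor
    """
    prior_odds = p_h1 / p_h2
    return prior_odds, prior_odds * bayes_factor


prior, posterior = odds_form_update(p_h1=0.001, p_h2=0.1, bayes_factor=300)
print(f"prior odds H1:H2     = {prior:.4f}")              # 0.0100
print(f"posterior odds H1:H2 = {posterior:.4f}")          # 3.0000
print(f"shift factor         = {posterior / prior:.0f}")  # 300
```

Because the Bayes factor here compares the data under psi directly with the data under bias, the odds being updated are P(H1)/P(H2), not each hypothesis against its own complement.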

For the author, Mr. Scott Alexander,

Placing meta-analyses at the pinnacle of your Pyramid of Scientific Evidence is incorrect. As a practicing frequentist statistician, I am certain. Also, this is one of the few times that I actually agree with Eliezer Yudkowsky! He commented on your post. Substitute “frequentist” for Bayes, and vice-versa, in his comment. The conclusion is the same, in my informed opinion: meta-analyses are less, rather than more, ah, robust, compared to some of the other pyramid levels.

I mention this with good intent (it isn’t like a tiny missing word). You said,

No, noooo! Number 3 is the notorious science fraud Jonah Lehrer. Lehrer acknowledged that he fabricated or plagiarized everything. He even gave a lecture about it at a prominent journalism school, maybe Columbia or Knight or NYU, last year, after being found out. You should probably rethink whether you want to cite him as one of the most intelligent voices you read.

Unlike most critiques of statistical analysis, yours does contain a core of truth!

I enjoyed that, very much.

I think you’re misunderstanding. I am posting the standard, internationally accepted “pyramid of scientific evidence”, and then criticizing it. I didn’t invent that pyramid and I don’t endorse it.

Jonah Lehrer is indeed a plagiarist. He’s also smart and right about a lot of things. Or maybe the people whom he plagiarizes are smart and right about a lot of things. I don’t know. In either case, the source doesn’t spoil the insight, nor does that article say much different from any of the others.

I regret being unclear. I meant that I agreed with this, and only this, in EYudkowsky’s comment earlier:

Me too!

I don’t know what this is about,

“You can’t multiply a bunch of likelihood functions and get what a real Bayesian would consider zero everywhere, and from this extract a verdict by the dark magic of frequentist statistics.”

When I make my magical midnight invocations to the dark deities of frequentist statistics, open my heart and mind to the spirits of Neyman, Pearson and Fisher, I work with maximum likelihood estimates (MLE’s), not “likelihood functions”. There are naive Bayes models and MLE for expectation maximization algos [PDF!], but I don’t know if EY had that in mind.

You’ll lose credibility if you continue to claim that Jonah Lehrer is among the most intelligent voices you read. That is, of course, entirely your prerogative. I only wanted to be friendly and helpful.

He acknowledged plagiarizing some things (mostly things I’d regard as fairly trivial and common journalistic sins of simplifying & overstating), but if he plagiarized ‘everything’ I will be extremely impressed. I don’t recall anyone raising doubts about his ‘Decline’ article; involved people commented favorably on the factual aspects of it when it came out, the NYer still has it up, and my own reading on the topics has not led me to the conclusion that Lehrer packed his decline article with lies, to say the least. If you want to criticize use of that article, you’ll need to do better.

Another online acquaintance: Gwern of Disqus comments, who has found (sometimes-amusing) fault with my comments on inane The Atlantic posts.

So. You like writing about Haskell, the Volokh Conspiracy, bitcoin and the effectiveness of terrorism. Goldman Sachs has not been extant for 300 years. I was saddened by your blithe dismissal of Cantor-Fitzgerald, post-9/11. I worked, briefly, for Yamaichi Securities, on floor 98 of Tower 2, but several years after the 1993 WTC explosion.

Please consider dropping by for a visit on any of my Wikipedia talk pages. You have 7 years’ seniority to me there. David Gerard is a decent person. He wrote your theme song, the mp3, so you must have some redemptive character traits :o) I am FeralOink, a commoner.

Just for the record, I didn’t invent the term “control group for science”, I think that was probably Michael Vassar.

Allan Crossman,

True or not, the term “control group for science” is attributed to you, near and far, all over the internet. The origin seems consistent with your (commendably modest, honest) denial, per Douglas Knight’s comment on She Blinded Me With Science, 4 Aug 2009:

“I think I’ve heard the line about parapsychology as a joke in a number of places, but I heard it seriously from Vassar.”

EY replies, and the thread ends with yet others, e.g. “Parapsychology: The control group for science. Excellent quote. May I steal it?” and “It’s too good to ask permission for. I’ll wait to get forgiveness ;).”

On 5 December 2009, you wrote, “Parapsychology the control group for science.” I could find no other, better sources online attributing it to you or Vassar. Actually, none attributing it directly to him, only to you.

Eek! I need to put my time to better use. This is embarrassing!

So what would be your recommendation for the endless list of people from LW, from EY on down, who say things about Bayesian vs. frequentist statistics that are (a) wrong, or (b) not even wrong? Could they do with reading a textbook?

If I had to choose between the LW cohort and the stats (or even data analyst) cohort as to who had generally better calibrated beliefs about stats issues, I know who I would go with.

Yes, Ilya Shpitser! I am a mere statistician and data analyst, doubter of Jonah Lehrer’s veracity, ignorantly idolatrous in my continued use of Neyman, Pearson and Fisher. I love validation.

I recognize your name. You had a lively, cordial conversation with jsteinhardt on LW, following his Fervent Defense of Frequentist Statistics. I smiled with delight as I read of your commitment there.

Are there any surveys of what percentage of professional psychologists (or other relevant scientists) believe in psi (or think the evidence for it is strong enough to take it seriously)? Presumably said survey would have to be anonymous to get reliable results, since believers might be embarrassed to say so publicly.

That’s an excellent question, actually, and the answer is yes.

Wagner & Monnet (1979) and Evans (1973) both privately polled populations of scientists, technologists, and academics, and found that between 60 and 70% of them agreed with the statement that psi is either a “proven fact or a likely possibility” (response bias and other confounding variables exist, though). Consistently lower results have been found for belief among psychologists; in Wagner & Monnet (1979), psychologists who thought psi was either “an established fact or a likely possibility” made up just 36% of the total, compared to 55% of natural scientists and 65% of social scientists.

When it comes to the scientific elite, however, it is another matter. Here, evidence from McClenon (1982) seems to point to unambiguous skeptical dominance, with less than 30% of AAAS leaders holding to the likelihood of psi. Still, 30% is a lot of scientists—especially given these are the board members of the AAAS, the largest scientific academy in the world. Add to that the fact that the Parapsychological Association is an affiliate member of the AAAS—despite a vigorous campaign to remove them in 1979—and you have an interesting situation.

We must keep in mind that while both of these pieces of information are interesting, they shouldn’t do much to sway our judgement. In both surveys, it is difficult to gauge to what extent the opinions of those polled were formed in response to the empirical evidence.

You may find the Wagner & Monnet (1979) results here: http://www.tricksterbook.com/truzzi/…ScholarNo5.pdf

A larger body of results is reviewed here: http://en.wikademia.org/Surveys_of_academic_opinion_regarding_parapsychology

This is why scientists should be humble and embrace constructivism and second-order cybernetics when they write papers.

The following popular article in Nature mentions a few examples:

http://www.nature.com/news/2011/110615/pdf/474272a.pdf

There is a decent talk on the subject by physicist Jim Al-Khalili, at the Royal Institution, unconnected with parapsychology (he’s got a great bow-tie, though):

https://www.youtube.com/watch?v=wwgQVZju1ZM

^ If the above link doesn’t show, type “Jim Al-Khalili – Quantum Life: How Physics Can Revolutionise Biology” into Youtube instead.

There are also a number of references:

Sarovar M, Ishizaki A, Fleming GR, Whaley KB (2010). “Quantum entanglement in photosynthetic light-harvesting complexes.” Nature Physics.

Engel GS, Calhoun TR, Read EL, Ahn TK, Mancal T, Cheng YC, et al. (2007). “Evidence for wavelike energy transfer through quantum coherence in photosynthetic systems.” Nature 446 (7137): 782–6.

“Discovery of quantum vibrations in ‘microtubules’ inside brain neurons supports controversial theory of consciousness.” ScienceDaily. Retrieved 2014-02-22.

Gauger EM, Rieper E, Morton JJL, Benjamin SC, Vedral V (2011). “Sustained quantum coherence and entanglement in the avian compass.” Physical Review Letters 106 (4): 040503.

Kominis I (2009). “Quantum Zeno effect explains magnetic-sensitive radical-ion-pair reactions.” Physical Review E 80: 056115.

You can check Wikipedia, if you like, as well; it has those references and a little bit of information.

Let’s see if we can agree on one thing here, Alexander. I think you’ve written a very intellectually engaging piece, with a great deal of thought behind it (certainly one of the more interesting I have read), but I still have some basic concerns I would like to flesh out. I’ll start with the caveat that I’m favorably disposed towards psi and parapsychology, and fairly well invested in researching the field, but I hope you’ll agree that we can have a productive exchange despite this most unsupportable conviction :-). If I am correct, all participants, myself included, will leave with an enhanced understanding of, and perhaps respect for, the positions of both sides of this debate.

I’ll start with my most significant objection to your piece. If all these experiments, as you graciously concede, are conducted to a standard of science that is generally considered rigorous, are we not justified in concluding at least this: “The possibility that psi phenomena exist must now be seriously considered”? If not, what can we say in defense of scientific practice? If we conduct numerous high-quality experiments, designed by definition to eliminate (or at least strongly mitigate) alternatives to the explanation we have decided to test, and then, on finding a positive result, do not even grant our conclusions the concession that the original explanation is a viable one, how do we justify our initial impetus to investigate that explanation scientifically?

To illustrate how my position differs from yours, consider the following quote from your essay:

“After all, a good Bayesian should be able to say ‘Well, I got some impressive results, but my prior for psi is very low, so this raises my belief in psi slightly, but raises my belief that the experiments were confounded a lot.’”

I wonder whether you meant to say something slightly different. After all, if we take these words at face value, could we not (satirically) call them a decent formula for confirmation bias? A prior belief is examined with a strenuous test; that test lends evidence against the belief; we therefore conclude the test is more likely to have been flawed (i.e. evidence against our position causes us to reaffirm our belief). How do you counter this? IMO, statistical inference, whether Bayesian or frequentist, only allows us to rule out the hypothesis of chance; it says nothing about the methodology behind an experiment. Thus, people only ever take the p-value or Bayes factor of a study at face value if they already believe the experiment was well done.
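
The arithmetic behind the quoted Bayesian statement can be made explicit. The sketch below assumes three mutually exclusive explanations for a significant result (psi is real and the experiment sound; no psi but the experiment is confounded; no psi, sound experiment, and a fluke). The hypothesis names, priors, and likelihoods are all hypothetical numbers chosen for illustration, not estimates drawn from any study.

```python
def bayes_update(priors, likelihoods):
    """Return P(H | significant result) for each hypothesis H."""
    # Unnormalized posterior: P(H) * P(significant result | H)
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    evidence = sum(unnormalized.values())  # total P(significant result)
    return {h: p / evidence for h, p in unnormalized.items()}


# Hypothetical priors over three mutually exclusive explanations
priors = {
    "psi": 0.001,       # psi is real and the experiment is sound
    "confound": 0.100,  # no psi; the experiment is flawed in some way
    "chance": 0.899,    # no psi; sound experiment; the result is a fluke
}

# Hypothetical P(significant result | H)
likelihoods = {"psi": 0.8, "confound": 0.8, "chance": 0.05}

posteriors = bayes_update(priors, likelihoods)
for h in priors:
    print(f"{h:>8}: prior {priors[h]:.3f} -> posterior {posteriors[h]:.3f}")
```

With these made-up numbers, a significant result raises the probability of psi from 0.001 to roughly 0.006, while the probability of a confound rises from 0.10 to roughly 0.64. Whether that counts as sound updating or as confirmation bias turns on whether the low prior and the confound likelihood are themselves justified, which is exactly what is in dispute.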

Now let me address some of your specific points, to see whether I can make the psi hypothesis a slightly more plausible one to you:

You mention Wiseman & Schlitz (1997), an oft-cited study in parapsychology circles, as strong evidence that the experimenter effect is operating here. I certainly agree. By the end of their collaboration, both had conducted four separate experiments; three of Schlitz’s were positive and significant, and none of Wiseman’s were. Their results can be explained in only two ways: either (1) psi does not exist, and the positive results are due to experimenter bias, or (2) psi does exist, and the split between positive and negative results is still related to experimenter effects. Let’s ignore issues of power, fraud, and data selectivity for now (if you find them convincing, we can discuss them in another post).
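
Why chance is left off that list can be checked with a little arithmetic, taking the counts above at face value (three significant results out of four experiments). The sketch below is hypothetical in its assumptions: it treats the studies as independent and gives each a 5% false-positive rate (neither figure comes from Wiseman & Schlitz), and asks how often at least three of four studies of a nonexistent effect would come out significant by luck alone.

```python
from math import comb


def prob_at_least(k, n, alpha):
    """P(at least k of n independent null studies reach significance),
    assuming each has the same false-positive rate alpha (binomial tail)."""
    return sum(
        comb(n, i) * alpha**i * (1 - alpha) ** (n - i)
        for i in range(k, n + 1)
    )


# Hypothetical: 4 independent studies, each tested at alpha = 0.05
print(f"{prob_at_least(3, 4, 0.05):.6f}")
```

Under those assumptions the answer is about 0.0005 (4 × 0.05³ × 0.95 + 0.05⁴ ≈ 0.00048). This only rules out chance; consistent with the point above about statistical inference, it cannot by itself distinguish hypothesis (1) from hypothesis (2).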

The rub, for me, is that this paper was designed to offer evidence against hypothesis (1), and Wiseman certainly wasn’t happy with the result. The reason is that both experimenters ran protocols that were identical except for their prior level of interaction with subjects (and their role as starers), ostensibly eliminating methodology as a confounding factor. Smell or other sensory cues, for example (as mentioned in the comments above), could not have been the issue: staring periods were conducted over closed-circuit television, the stare/no-stare periods were randomized by a pseudo-random algorithm, and no feedback was given during the session that would have allowed subjects to detect, consciously or subconsciously, any of the impossibly subtle micro-regularities that might have occurred in the protocol.

Now, you (understandably, given your position) criticize hypothesis (2), but consider the following remarks from Schlitz and Wiseman after their experiment had been completed:

“In the October 2002 issue of The Paranormal Review, Caroline Watt asked each of them [Wiseman and Schlitz] what kind of preparations they make before starting an experiment. Their answers were: Schlitz: […] ‘I tell people that there is background research that’s been done already that suggests this works […] I give them a very positive expectation of outcome.’ Wiseman: ‘In terms of preparing myself for the session, absolutely nothing’”

The social affect of the two experimenters seems to have been qualitatively different; we can say this with near certainty (and it’s not unexpected). If we acknowledge, then, such confounding factors as “Pygmalion effects” (Rosenthal, 1969), it would be only rational to conclude that, should psi exist, attempts to exhibit it would be influenced by them. Even more tellingly, IMO (and this is why parapsychologists tend to see this experiment as consistent with their ideas), it was Wiseman who did the staring in the null experiments, and Schlitz who did the staring in the positive ones. Would it not make sense that a believer in psi would be more “psychic” than a skeptic, if psi exists? (Or, by analogy, that a person confident in their abilities would give a better theatrical performance, or be more likely to solve a complex mathematical problem, than one who is insecure about their skill level?)

Parapsychologists are only following the data, to the best of their ability. You’ll find that under the psi hypothesis the discrepancy in success is relatively simple to explain, whereas under the skeptical explanation we must conclude that the most minuscule variation in experimental conditions (so minuscule that it must be postulated apart from the description of the protocol, and will likely never be directly identified) can make a study either significant or a failure. We must, in other words, conclude that our science is still utterly incapable of dealing with simple experimenter bias: not just at the level of producing spurious conclusions more often than not (as Ioannidis et al. show), but to the degree of failing to reliably assess literally any moderately small effect. This is itself a powerful claim.

But I will return to the nature of the psi hypothesis later. For now, I will cover parapsychological experimenter effects more broadly. Consider the following: as we probably both agree, Robert Rosenthal is one of those scientists who has done a great deal of work to bring expectancy influences to our attention; his landmark book “Experimenter Effects in Behavioral Research” (1966; enlarged edition 1976), for example, has not inconsiderably advanced our understanding of self-fulfilling prophecies in science. Would it then surprise you to learn that Rosenthal has spoken favorably of the resistance of one category of psi studies (ganzfeld experiments) to just the sort of explanation put forward by hypothesis (1)? See the following quote from Carter (2010):

“Ganzfeld research would do very well in head-to-head comparisons with mainstream research. The experimenter-derived artifacts described in my 1966 (enlarged edition 1976) book Experimenter Effects in Behavioral Research were better dealt with by ganzfeld researchers than by many researchers in more traditional domains.”

What if I told you that Rosenthal & Harris (1988) co-wrote a paper evaluating psi, in response to a government request, with conclusions broadly favorable to its existence? Would you be inclined to read a little more of the parapsychology literature? (The reference here is “Enhancing Human Performance: Background Papers, Issues of Theory and Methodology”.)

Whatever you believe about psi, I agree with you that examining parapsychological results can do much to improve our understanding of the pitfalls of experimentation. However, I also believe that thinking about and examining our many attempts to grapple with potentially psychic effects (and there are quite literally thousands of experiments, in dozens of categories, each with its own literature) has the merit of helping to engender a truly reflective spirit of inquiry, because these attempts represent precisely the ideal of science we dream of meeting: using data and argument to resolve deeply controversial, and potentially game-changing, issues.

On a superficial level, we already have evidence that parapsychology employs much more rigorous safeguards against experimenter effects than almost any other scientific discipline. Watt & Nagtegaal (2004), for example, conducted a survey and found that 79.1% of parapsychology research used a double-blind methodology, compared to 0.5% in the physical sciences, 2.4% in the biological sciences, 36.8% in the medical sciences, and 14.5% in the psychological sciences. These findings are consistent with those of an earlier survey of experimenter effects by Sheldrake (1998), which found an even greater disparity in favor of parapsychology.

Given that parapsychology grew out of vigorous debates between proponents and skeptics, however, I find it intuitively plausible that this should be the case (the same vehement scrutiny used to contest telepathy is not applied to studies of the effect of alcohol on memory, for example), so these findings, while a bit surprising to me, don’t seem very much out of place on reflection.

I think, however (and you will probably agree with me), that I could ramble on about safeguards and variables all day without any effect on your opinion if I do not discuss the most crucial, foundational issues pertaining to psi. It would be like trying to convince you that studies of astrology have rigorously eliminated alternative explanations; after all, if the hypothesis we would have to entertain is that the stars themselves, trillions of miles away, determine our likelihood of getting laid on any given day, it doesn’t matter how strong the data is: we will always suspect a flaw.

I therefore suggest we take a wide-angle view of the psi question for a moment. We cannot hope to be properly disposed towards its investigation if we do not. It would certainly be unacceptable to simply absorb the popular bias against it without critical thought, since that’s exactly the religious mindset we eschew; neither would it be acceptable to embrace its possibility because we want it to be true, or because it’s widespread in the media.

Let me first address the physical argument. I’m well-versed in the literature of physics and psi myself, but my friend, Max, is a theoretical physics graduate studying condensed matter physics, with a long-standing interest in parapsychology. He and I both agree that you are overestimating the degree to which psi and physics clash. Before I state why, consider that our opinion is not so unusual, for those who have thought about the question at length; Henry Margenau, David Bohm, Brian Josephson, Evan Harris Walker, Henry Stapp, Olivier Costa de Beauregard, York Dobyns, and others are examples of physicists who either believe that psi is already compatible with modern physics, or else think (more plausibly, IMO) that the current physical framework is suggestive of psi. De Beauregard actually thinks psi is demanded by modern physics, and has written so.

In light of these positions, you will see that our perspective is not an unreasonable one to maintain. Basically, we agree that if we take physical theory in its most conventional form (hoping thereby to reflect the “current consensus”), psi and physics are just barely incompatible. I say “just barely” because we have such suggestive phenomena as the EPR correlations analyzed by Bell, which Einstein himself derided as telepathy (but which have now been incontrovertibly confirmed by experiment), showing that two particles which have once interacted can remain instantaneously connected at any distance from each other. It is true that this phenomenon of entanglement is exceptionally fragile; however, experimental evidence in physics and biophysics journals these days purports to show its presence in everything from the photosynthesis of algae to the magnetic compass of migrating birds, and more such claims accrue all the time. Entanglement is entering warm biological systems.

The incompatibility arises if we conceive of psi as an information signal, that is, if we think something is “transmitted”, because the no-signaling theorem in quantum mechanics says EPR phenomena are just spooky correlations, not classical communication. In physics parlance, you cannot use an entangled particle, as Alice, to get a message to Bob. However, some parapsychologists and physicists don’t think of psi as a transfer, and lend it the same spooky status as EPR: unexplained non-local influence. If this is correct, and you are willing to accept that non-local principles can scale up to large biological organisms (as the trend of the evidence is indicating), albeit to a larger degree than has ever been experimentally verified (outside parapsychology, of course), then certain forms of psi (e.g. telepathy) are already compatible with physics.
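
The no-signaling point can be made concrete with the textbook statistics for an idealized spin-singlet pair: if Alice measures along angle a and Bob along angle b, each joint outcome (x, y), with x, y = ±1, has probability (1 - xy·cos(a - b))/4. The sketch below assumes that idealized two-particle case only (the function names are hypothetical, and it models nothing about psi or biological systems); it computes the probabilities exactly rather than simulating any physical mechanism.

```python
import math


def singlet_joint(a, b):
    """Joint outcome probabilities P(A=x, B=y) for spin measurements on a
    singlet pair, Alice along angle a and Bob along angle b (radians)."""
    c = math.cos(a - b)
    return {(x, y): (1 - x * y * c) / 4 for x in (+1, -1) for y in (+1, -1)}


def bob_marginal(a, b):
    """P(Bob sees +1), summing over Alice's outcome, which Bob cannot see."""
    joint = singlet_joint(a, b)
    return joint[(+1, +1)] + joint[(-1, +1)]


def correlation(a, b):
    """E[A*B]; only observable once Alice and Bob compare their records."""
    return sum(x * y * p for (x, y), p in singlet_joint(a, b).items())


bob_angle = 0.0
for alice_angle in (0.0, math.pi / 4, math.pi / 2):
    print(f"Alice at {alice_angle:.2f} rad: "
          f"P(Bob=+1) = {bob_marginal(alice_angle, bob_angle):.3f}, "
          f"E[AB] = {correlation(alice_angle, bob_angle):+.3f}")
```

In this sketch Bob’s probability of seeing +1 stays at 0.5 whatever angle Alice chooses, so nothing Alice does is visible in Bob’s local statistics; the dependence on both angles appears only in E[AB] = -cos(a - b), which requires comparing records over an ordinary channel. This is the sense in which EPR correlations cannot carry a message.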