I’m late to posting this, but it’s important enough to be worth sharing anyway: Sandberg, Drexler, and Ord on Dissolving the Fermi Paradox.

The Fermi Paradox asks: given the immense number of stars in our galaxy, for even a very tiny chance of aliens per star there should be thousands of nearby alien civilizations. But any alien civilization that arose millions of years ago would have had ample time to colonize the galaxy or do something equally dramatic that would leave no doubt as to its existence. So where are they?

This is sometimes formalized as the Drake Equation: think up all the parameters you would need for an alien civilization to contact us, multiply our best estimates for all of them together, and see how many alien civilizations we predict. So for example if we think there’s a 10% chance of each star having planets, a 10% chance of each planet being habitable to life, and a 10% chance of a life-habitable planet spawning an alien civilization by now, one in a thousand stars should have civilization. The actual Drake Equation is much more complicated, but most people agree that our best-guess values for most parameters suggest a vanishingly small chance of the empty galaxy we observe.

SDO’s contribution is to point out this is the wrong way to think about it. Sniffnoy’s comment on the subreddit helped me understand exactly what was going on, which I think is something like this:

Imagine we knew God flipped a coin. If it came up heads, He made 10 billion alien civilizations. If it came up tails, He made none besides Earth. Using our one parameter Drake Equation, we determine that on average there should be 5 billion alien civilizations. Since we see zero, that’s quite the paradox, isn’t it?

No. In this case the mean is meaningless. It’s not at all surprising that we see zero alien civilizations, it just means the coin must have landed tails.
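The coin-flip thought experiment is easy to simulate. The sketch below is only an illustration of the point above, not anything from the paper. It draws the coin many times and compares the average number of civilizations with the chance of seeing none.

```python
import random

def sample_civilizations(rng):
    """One draw from the coin-flip thought experiment: heads gives 10 billion, tails gives zero."""
    return 10_000_000_000 if rng.random() < 0.5 else 0

rng = random.Random(0)  # fixed seed so the run is repeatable
draws = [sample_civilizations(rng) for _ in range(100_000)]

# The mean lands near 5 billion even though no single draw ever looks like that.
mean_civs = sum(draws) / len(draws)
# The chance of an empty sky is about one half, which is what actually matters.
prob_empty = sum(1 for d in draws if d == 0) / len(draws)

print(f"mean number of civilizations: {mean_civs:.3g}")
print(f"probability of seeing none:   {prob_empty:.3f}")
```

The mean describes neither of the two worlds that can actually occur, while the probability of zero is large and unsurprising.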

SDO say that relying on the Drake Equation is the same kind of error. We’re not interested in the average number of alien civilizations, we’re interested in the distribution of probability over number of alien civilizations. In particular, what is the probability of few-to-none?

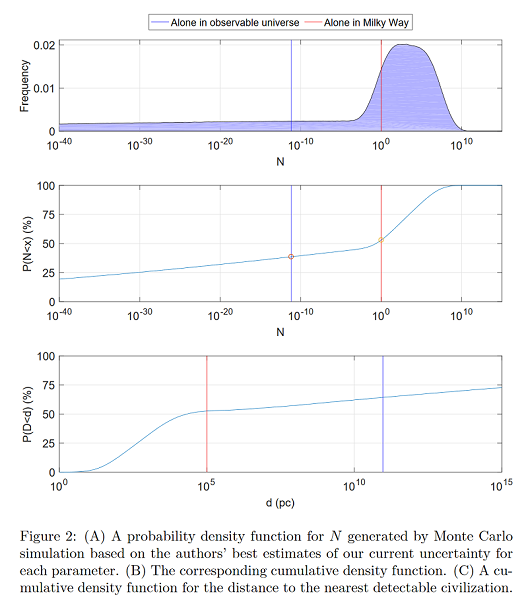

SDO solve this with a “synthetic point estimate” model, where they choose random points from the distribution of possible estimates suggested by the research community, run the simulation a bunch of times, and see how often it returns different values.

According to their calculations, a standard Drake Equation multiplying our best estimates for every parameter together yields a probability of less than one in a million billion billion billion that we’re alone in our galaxy – making such an observation pretty paradoxical. SDO’s own method, taking parameter uncertainty into account, yields a probability of one in three.
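To see how a point estimate and a distribution can disagree this badly, here is a minimal Monte Carlo sketch. Everything in it is an assumption made for illustration: the three factors, their log-uniform ranges, and the round figure for the number of stars are invented and are not SDO’s parameters or results. The "point estimate" here multiplies the mean of each factor, which is one simple way to get a single best-guess number.

```python
import math
import random

N_STARS = 1e11  # hypothetical round figure for stars in the galaxy

# Hypothetical log10 ranges for three made-up Drake-style factors.
# The last one spans 30 orders of magnitude to stand in for deep uncertainty.
LOG10_RANGES = [(-1.0, 0.0), (-3.0, 0.0), (-30.0, 0.0)]

def mean_of_log_uniform(lo, hi):
    """Mean of a variable whose log10 is uniform on [lo, hi]."""
    return (10**hi - 10**lo) / ((hi - lo) * math.log(10))

# Point estimate: multiply the mean value of every factor together.
point_estimate = N_STARS * math.prod(
    mean_of_log_uniform(lo, hi) for lo, hi in LOG10_RANGES
)

# Distribution: sample every factor, multiply, and repeat many times.
rng = random.Random(0)  # fixed seed so the run is repeatable
n_samples = 100_000
log10_n_samples = [
    math.log10(N_STARS) + sum(rng.uniform(lo, hi) for lo, hi in LOG10_RANGES)
    for _ in range(n_samples)
]

# Fraction of sampled worlds where the expected number of civilizations is below one.
frac_below_one = sum(1 for x in log10_n_samples if x < 0) / n_samples

print(f"point estimate of N:       {point_estimate:.3g}")
print(f"fraction of draws with N<1: {frac_below_one:.3f}")
```

With these invented ranges the point estimate is in the tens of millions of civilizations, yet most sampled worlds have an expected count below one. The specific numbers mean nothing; the gap between the two summaries is the point.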

They try their hand at doing a Drake calculation of their own, using their preferred values, and find:

N is the average number of civilizations per galaxy

If this is right – and we can debate exact parameter values forever, but it’s hard to argue with their point-estimate-vs-distribution-logic – then there’s no Fermi Paradox. It’s done, solved, kaput. Their title, “Dissolving The Fermi Paradox”, is a strong claim, but as far as I can tell they totally deserve it.

“Why didn’t anyone think of this before?” is the question I am only slightly embarrassed to ask given that I didn’t think of it before. I don’t know. Maybe people thought of it before, but didn’t publish it, or published it somewhere I don’t know about? Maybe people intuitively figured out what was up (one of the parameters of the Drake Equation must be much lower than our estimate) but stopped there and didn’t bother explaining the formal probability argument. Maybe nobody took the Drake Equation seriously anyway, and it’s just used as a starting point to discuss the probability of life forming?

But any explanation of the “oh, everyone knew this in some sense already” sort has to deal with the fact that a lot of very smart and well-credentialled experts treated the Fermi Paradox very seriously and came up with all sorts of weird explanations. There’s no need for sci-fi theories any more (though you should still read the Dark Forest trilogy). It’s just that there aren’t very many aliens. I think my past speculations on this, though very incomplete and much inferior to the recent paper, come out pretty well here.

(some more discussion here on Less Wrong)

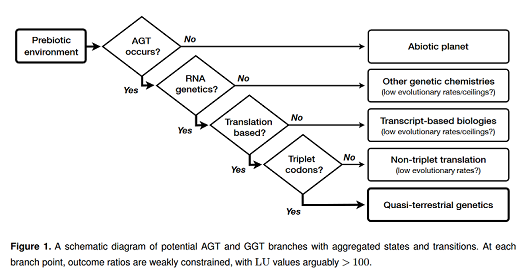

One other highlight hidden in the supplement: in the midst of a long discussion on the various ways intelligent life can fail to form, starting on page 6 the authors speculate on “alternative genetic systems”. If a planet gets life with a slightly different way of encoding genes than our own, it might be too unstable to allow complex life, or too stable to allow a reasonable rate of mutation by natural selection. It may be that abiogenesis can only create very weak genetic codes, and life needs to go through several “genetic-genetic transitions” before it can reach anything capable of complex evolution. If this is path-dependent – ie there are branches that are local improvements but close off access to other better genetic systems – this could permanently arrest the development of life, or freeze it at an evolutionary rate so low that the history of the universe so far is too short a time to see complex organisms.

I don’t claim to understand all of this, but the parts I do understand are fascinating and could easily be their own paper.

I fully agree with using probability distributions rather than point estimates, but I wonder if there are anthropic effects. Say among the “multiverse branches” with any life, 50% have exactly one technological civilization, 40% have ten, 9% have a thousand, and 1% have a million. It seems like there would still be far more observers that are among lots of other civilizations than those that are alone.
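The observer-weighting in that example can be worked out directly. This sketch uses the percentages from the comment and adds one assumption of its own: every civilization counts as a single, equally weighted observer.

```python
# (probability of branch, civilizations in that branch), taken from the comment above
branches = [(0.50, 1), (0.40, 10), (0.09, 1_000), (0.01, 1_000_000)]

# Weight each branch by how many civilizations (observers) it contains.
total_weight = sum(p * n for p, n in branches)
observer_share = {n: p * n / total_weight for p, n in branches}

for n, share in observer_share.items():
    print(f"{n:>9,} civs per branch: {share:.6f} of all observers")
```

Under that assumption, the lone-civilization branches hold half of the probability but only a tiny sliver of the observers, and almost all observers sit in the million-civilization branches.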

It may be that there is some irreducible complexity of a minimal viable entity that can undergo evolution. In that case abiogenesis would be an extremely unlikely event in any strand of multiverse.

Yup. If universes vary in # of civs, then observers will mostly find themselves in universes with a large # of civs.

This is also a strong argument for a creator (god/simulator/whatever); even if they are rare, they will disproportionately create universes with lots of civs, and therefore most observers will be in created universes.

Of course, we also have to condition on not seeing anybody else.

I like the idea that you’re either in a universe where you’re first, or you’re in a universe where you’re so far behind that you’re kept isolated from the higher sapiences as a kind of zoo/sapiopromorphic exhibition. The same way sophisticated human civilizations endeavour to keep isolated tribes isolated.

Interestingly, if you think it’s more likely that sapience-dense universes are driven by dark forest logic, and whoever is first wipes out all budding competing intelligences, this massively increases the probability we’re alone. Otherwise we probably wouldn’t have made it this far.

I saw a talk a while ago on youtube (wish I could remember where) arguing that such behavior would be grossly immoral, precisely because it gives us such an incorrect picture of reality. The ideal of letting us develop along our natural path is a sham because of all the false conclusions we would draw about the nature of the universe and our place in it.

I think SDO’s approach gets closer to the truth than the Drake Equation, but it needs a hair of improvement. Specifically, what is the probability that more than one alien civ exists and that they have a chance of detecting each other prior to one or the other’s extinction? Let’s go with orangecat’s suggestion of 90% that it’s less than 10…

We may be relying too much on the idea that conventional radio communication would be the primary means of interstellar communication and is the best “smoking-gun” type of evidence of an advanced alien civilization, when EM-based communication may just be a short technological bridge. Assuming that most civilizations use conventional radio communication for 1000 years of their history, that’s a brief blip compared to the history of the universe. It would require the listening civilization to develop its own radio communication within a specific 1000-year blip, and also to be in close proximity (maybe 1000 light years) so that the two civilizations could still be at concurrent stages of development.

There’s at least some evidence to suggest conventional radio is a small blip in the technological development of a civilization (much like the idea Freeman Dyson suggested about fossil fuels being the initial *spark* before moving towards nuclear/renewables). Advancements in OAM multiplexing would mean that signals at certain frequencies could potentially look no different than solar flares (a sudden build-up in energy at certain spectra) but in fact contain multiple streams of data at different orbital frequencies and be indecipherable with conventional antennae. We’ve also begun development on using neutrino beams as a means of communication. I wouldn’t be surprised if advanced civilizations switch to neutrino transmitters to avoid EM interference for interstellar probes that get sent beyond a planet’s heliosphere, and reserve low-power EM for wireless communication on a home planet, which would be too faint compared to background noise for something like SETI to pick up.

Honestly the lack of radio transmissions from aliens seems like a red herring with regard to the Fermi Paradox, because the real issue is not seeing massive areas of space where all the stars have been disassembled or enveloped with Dyson swarms.

At an advanced level of tech you really only need, in theory, a single person who wants to send out von Neumann probes for the civilization to become extremely obvious pretty quickly (on cosmic timescales).

Similarly, there are also pretty good reasons you’d want to extinguish/surround stars, since otherwise all that energy is mostly just getting permanently lost as extremely dispersed unusable light in interstellar space and speeding up the heat death of the universe.

True, but can we even quantify how much time it takes for a civilization to reach that level of technology (Dyson sphere or massive von Neumann probes)?

I’m skeptical of any type of full scale Dyson sphere eclipsing the full flux from a star, since stars need to be sufficiently large to be self sustaining, (for example in our case, our sun has 12,000 times the surface area of Earth) and so even boot-strapping something like a Dyson swarm that could absorb 1% of our Sun’s energy as an array of 1m thick panels containing absorber, converter, and storage would end up requiring 5.1 * 10^14 cubic meters of *stuff* or strip mining our entire planet (Like, every damn mountain!) along with purposing an enormous amount of asteroids, never mind all the energy needed to assemble and transport those materials.
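For a sense of scale, here is a rough sketch of the geometry under one simple reading of the comment above: panels 1 m thick covering some fraction of a sphere the size of the Sun’s visible surface. The radii are standard approximate values, and the placement is an assumption. A real swarm would orbit much farther out and would need more area to intercept the same share of the flux, so this is closer to a lower bound.

```python
import math

R_SUN_M = 6.957e8          # approximate solar radius in meters
R_EARTH_M = 6.371e6        # approximate mean Earth radius in meters
PANEL_THICKNESS_M = 1.0    # thickness assumed in the comment above

def sphere_area(radius_m):
    """Surface area of a sphere in square meters."""
    return 4 * math.pi * radius_m**2

sun_area = sphere_area(R_SUN_M)
earth_area = sphere_area(R_EARTH_M)
area_ratio = sun_area / earth_area

def swarm_volume(coverage):
    """Volume of 1 m thick panels covering the given fraction of a Sun-sized sphere."""
    return coverage * sun_area * PANEL_THICKNESS_M

print(f"Sun/Earth surface area ratio: {area_ratio:,.0f}")
print(f"Earth surface area:           {earth_area:.2e} m^2")
for coverage in (0.01, 0.001, 0.0001):
    print(f"coverage {coverage:.2%}: {swarm_volume(coverage):.2e} m^3 of panels")
```

Under these assumptions, a one-meter layer over the whole Earth’s surface is about 5.1 × 10^14 cubic meters, and covering 1% of a Sun-sized sphere takes on the order of a hundred times that.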

The question basically is: how much surface area could we expect a star to be covered based on the age/tech level of an alien civ? Given those numbers, how easy can it be to detect a swarm if 1%, 0.1%, 0.01%, 0.001% of a star’s flux is less than what is visible in all spectra other than infrared?

As for von Neumann probes, it’s possible we’ve already been visited by them, and/or they have limitations to their replication protocol. Dyson’s proposed size for his “astro-chicken” was 1kg, but how much matter would it take to make a self-replicating astro-chicken-factory? At this moment, we could say that human civilization *itself* is a von Neumann probe factory bootstrapping itself 😛

Our calculation in “Eternity in six hours” showed that self-replicating factories could build a Dyson sphere out of Mercury in 40 years. Building Dyson spheres in a linear fashion takes forever, but self-replication makes it very fast on astronomical timescales.

Enjoyed it. Stumbled across three typos:

just before 5.1: “comically insignificant” (unless that’s a joke)

section 7: “civlizations”

last paragraph: “likeliehood”

A very important result; thank you for your writeup!

HTML typo: “…explaining the formal probability argument. Maybe the Dark Forest trilogy.”

Also, would you mind labeling the graphs better? I’m guessing the N is something like “number of civilizations / number of stars,” which means that the area to the right of the red line represents occurrences of “More than one civilization in the Milky Way in our simulation,” but I’m not sure there.

Yep. Neat!

Also, on the typos topic, the word “in” after the word “hidden” before “the supplement.”

As far as I can tell, the x-axis of the second graph should be labelled “x” rather than “N”. (Though I’m sure this is copied from the paper, and not Scott’s work.)

Yeah some more detailed explanation of the graphs would have helped me a lot.

I’ve always privately resolved the Fermi paradox in my head by figuring that if a species becomes intelligent enough to send radio signals, it’ll probably quickly either destroy itself or take over the universe (the “all-or-nothing” assumption), making it so that there’ll only ever be one intelligent species around at a time. So maybe it’s not surprising that we’ve found ourselves to be alone.

But I’ve never bothered to think carefully about it, and it’s possible that that reasoning is confused. For example: suppose you only think the all-or-nothing assumption is true with some probability. Then, if you observe that there are no aliens who’ve sent you radio signals, should you decide that it’s therefore more likely we’ll one day either destroy ourselves or take over the universe? That seems pretty counterintuitive to me…

I think the standard retort to that is that the universe seems quite old right now, such that if it were easy for planets to generate life we should be surprised that we were the first.

Well, unless it’s way easier to destroy yourself than to take over the universe, and we’re not the first. That would suggest that we should be pessimistic about our own prospects.

This whole line of reasoning feels so weird to me though—using apparently-irrelevant information about space to try to predict our own future—that I don’t seriously subscribe to it. Or maybe it’s just my bias towards optimism. 🙂

Ah, I clicked the link in the post to your previous post about the Great Filter. Yeah, it seems like this recent paper is providing some evidence against the Great Filter by arguing for a valid alternative explanation to why we’re seeing no aliens.

Well, no, not quite — It’s still clear that there are plenty of filters, some greater than others. But it raises my belief that the biggest filters are in our past — those are the ones for which they reported the largest uncertainty.

It’s not obvious to me that this does anything to the paradox at all.

Seems like the most coherent way to think about it is that whatever filters are out there are basically the aforementioned ‘God’s coinflips’.

If you don’t make it past the coinflip, your distribution is 0.

A drunk physicist told me that old planets have the following advantages:

1) The ratio of U235 to U238 gets lower, making nuclear weapons easy to manufacture on young planets.

2) The ratio of heavy water to normal water gets lower, making water unable to sustain a runaway fusion reaction. (Humans tested underwater nuclear weapons before we realized this)

Does anyone know if this is reasonable?

The first definitely is, the second less so but still technically accurate. It’s a question of averages, though, so while yes, there’s less nuclear material on an older planet, it’s unlikely you’ll get to a point where there’s none before its parent star leaves the main sequence. And you don’t need a huge volume to make enough weapons to wipe out a civilisation.

Even on a planet around a red dwarf star where it could have a stable biosphere after billions of years, warlike inhabitants could synthesize their radiologicals in particle accelerators. It would be more expensive, but cost is no real barrier when it comes to weapons.

The asteroid that wiped out the dinosaurs was equivalent to around 100 million megatons, the entire global nuclear arsenal is 10,000 megatons.

Some humans would survive the dinosaur killer.

It’s not that easy to permanently wipe out a technological civilization with nukes.

I think the second one doesn’t work, because the estimate I’ve seen is that you would need a 20 million megaton nuke (hundreds of thousands of times more powerful than the roughly 50-megaton Tsar Bomba) and a deuterium concentration 20 times higher for the oceans to sustain a runaway fusion reaction:

http://blog.nuclearsecrecy.com/2018/06/29/cleansing-thermonuclear-fire/

Not sure how high the deuterium concentrations get on newer planets but unless they are many orders of magnitude higher again, then the aliens are going to have to be really reckless to build an ocean igniting bomb before they understand the danger.

For number 1, it seems to check out physically, but I don’t think it really matters with respect to how easy nukes actually are to make. On Earth, we were already using reactor-bred fuel at about the same time we were using natural fuel (uranium vs. plutonium bombs), and there’s at least one path that lets you breed weapons-grade fuel from thorium instead.

Number 2, I don’t think that’s an issue? Even if there were an exothermic fusion reaction from plain water to some other atomic mix (there probably is even with plain light water), I think the temperature required to sustain would be higher than is compatible with a self-sustaining reaction on a planet. It’s not like combustion, where it’s entirely possible for matter at that temperature to just hang around waiting to burn for a while; nuclear-reaction temperatures are pretty much incompatible with matter remaining in the same place unless you’ve got enough to gravitationally confine it (which would need greater than a planet’s mass). The expected result from any fusion of (light or heavy) water, from whatever trigger, is that the fuel would all explode out away from the reaction site and quickly lose heat until it was below the necessary temperature.

If you go back far enough, you don’t even need to enrich uranium to get a chain reaction.

There are uranium deposits in Africa that were once a natural nuclear reactor.

https://en.wikipedia.org/wiki/Natural_nuclear_fission_reactor
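The changing isotope ratio follows from the two half-lives. The sketch below winds the clock back using approximate half-lives and today’s approximate U235 share of natural uranium. It ignores U234 and any geological sorting, so treat the output as a rough guide only.

```python
HALF_LIFE_U235_GYR = 0.704    # approximate half-life, billions of years
HALF_LIFE_U238_GYR = 4.468    # approximate half-life, billions of years
U235_FRACTION_TODAY = 0.0072  # approximate share of U235 in natural uranium now

def u235_fraction(gyr_ago):
    """Estimated U235 share of natural uranium the given number of Gyr in the past."""
    # Undo the decay: each isotope was more abundant by 2^(time / half-life).
    u235 = U235_FRACTION_TODAY * 2 ** (gyr_ago / HALF_LIFE_U235_GYR)
    u238 = (1 - U235_FRACTION_TODAY) * 2 ** (gyr_ago / HALF_LIFE_U238_GYR)
    return u235 / (u235 + u238)

for gyr_ago in (0, 1, 2, 3, 4):
    print(f"{gyr_ago} Gyr ago: {u235_fraction(gyr_ago):.2%} U235")
```

Because U235 decays much faster than U238, its share rises quickly as you go back in time. A couple of billion years ago it was already a few percent, which is in the range used for reactor fuel today.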

Is the universe that old though? It’s only about 14 billion years old and can potentially support life for probably another trillion years. I’d say it’s in its infancy. It also seems like we’re in the first generation of stars that would be able to create life.

The traditional formulation of the paradox is that, while the galaxy is arguably young, it’s plenty old for a spacefaring civilization to have spread over it if there was one.

On Earth, there’s no obvious reason why intelligence couldn’t have arisen, for instance, in the age of the dinosaurs (say a hundred million years ago), and guesstimates for how long it would take such an intelligence to settle the entire galaxy (e.g. in this paper) range from one to ten million years.

It is interesting, though, that the Drake Equation doesn’t have an explicit term for the age of the universe. The paper observes that the last term L (length of time over which such civilizations release detectable signals) is currently bounded by the age of the universe.

I saw a paper a few years back that I cannot currently find that suggested that while yes, the galaxy is “old enough,” the younger stars have only just died off. These are the ones that are large, burn hot and fast and die in a supernova that releases enough high energy radiation to sterilize volumes of space hundreds (or even thousands) of light-years in diameter. When figuring out how much time a galaxy has had to evolve complex life, this should be taken into account.

Also, our solar system has the benefit of residing in the far less dense spiral arms of the galaxy.

This sounds reasonable to me. Maybe there is some sort of catastrophe (could be man-made, could be a nearby supernova, could be some terrible solar storm), which happens no more than once every few centuries and only affects technologically advanced civilizations, while leaving more primitive ones relatively unscathed.

Earth people have only been technologically advanced for around 200 years, it’s hard to tell what will happen next. Maybe we’ll soon find out what the catch is.

It’s not straightforward, because all elements heavier than helium are formed in stars, so first generation stars didn’t have planets. The Sun has an expected lifetime of 10 billion years and the age of the universe is 14 billion years. Life on Earth evolved about 3.7 bya, so naively that may have been reasonably early.

That said, hotter stars have shorter lifetimes, and they are particularly good sources of heavy elements, so my best guess would be that there have been planets for nearly 10 billion years.

Also, it doesn’t feel like the path from abiogenesis to humans was optimal. We definitely know that life supporting planets have existed for 3.7 billion years, which seems like it should have been plenty of time for an advanced civilisation to evolve.

“so first generation stars didn’t have planets. ”

is it impossible to have a gas giant that’s all hydrogen and helium?

I don’t think that is known for certain, but last time I checked the consensus was that for anything less than a star you probably need an initial rocky core to begin pulling in the hydrogen and helium.

It’s been a long time since I studied this, but I think brown dwarf stars can form from hydrogen and helium, i.e. the star forms as usual but the mass is insufficient to ignite. A brown dwarf in a binary system is effectively a gas giant.

Be that as it may, a planet composed solely of hydrogen and helium could not support life.

No. You need to be able to cool efficiently. Gravitational instabilities can create objects of ~7 Jupiter masses with solar metallicity. However, this only applies to the very first generation of massive stars, which have exceedingly short lives (<1 Myr). The main sequence lifetime of intermediate-mass B stars is less than a Gyr. We have had sufficiently steady star formation in our galaxy that there has been continuous formation of planetary systems around solar-like stars for the past ~12 Gyr.

Is there any reason to assume humanity is not the first species in the universe to meet all the conditions required for a civilisation detectable in space? Both you and Robert Jones significantly use “seems” to justify a position that the universe is quite old and should therefore have produced intelligent life before, which is an indication of a totally impressionistic viewpoint (I’m not claiming this is irrational, just, as far as I can see, an unsubstantiated reaction to a big number).

Empirically we have a limited data set for how long the universe takes to produce life capable of sending signals beyond their own planet, and that figure is 14 billion years or so. Whatever it seems like, we know this figure applies to us, and therefore is a realistic estimate for a minimum period of time required for such a lifeform to appear.

This obviously assumes Earth has been an ideal environment for producing life capable of sending signals beyond their own planet. But unless someone can show this is not the case, this seems like a reasonable assumption to make in light of our very limited information, and pretty much a certainty for the small area of local space where we have been able to scan for radio waves.

There seems to be a reluctance in human intellectual endeavours to consider ourselves uniquely special, which might relate to the detaching of science from religion (which does normally consider humans to be uniquely great and wonderful). But an assumption of human uniqueness is a position that actually fits the evidence we have best at the moment. We might not be unique or the first, it being a big universe, but the best hypothesis has to be we are in the first generation of species detectable from other planets.

Watchman, there’s no reason to assume that humanity is not the first, in the sense that there’s no paradox. It’s completely consistent with what we know for civilisations at least as advanced as ours to be super-rare, so the expected number per galaxy (or universe) is 1 (or less).

What would be hard to credit would be that the Drake equation yields an answer much larger than 1, but that of millions of civilisations in our galaxy, ours happens to be the first to have become capable of sending signals beyond its own planet. What prevented dinosaurs from developing a civilisation as advanced as ours? It looks like it’s just chance, so if there were millions of planets on the same general trajectory as ours, some of them would have had intelligent dinosaurs (so to speak) and would have been sending radio signals millions of years ago.

This whole area is really impressionistic. If you want to be rigorous, you just have to throw your hands up and say we have no idea.

I suspect that you may be right that the real “paradox” stems from Copernican modesty, because it requires us to accept that Earth is an exceptional planet in one way or another (either because it is unusual in possessing the pre-requisites for intelligent life or because an extremely unlikely event happened here).

I like the idea of Copernican modesty. Wonder why I’ve never encountered it before.

I’m not so sure that it’s just luck dinosaurs didn’t create an advanced civilisation though. It might be a practical constraint such as the fact grass (with its key role in producing surplus food and energy) had not evolved, to pick out one single speculative factor (and one could, if so inclined, then presuppose that dinosaurs were necessary for the evolution of grass for some reason…). Luck would only apply if the conditions for that luck to produce an advanced civilisation were in place, and (since there was a lot of time for dinosaurs to get lucky in) it seems better to suggest that dinosaurs suffered from some constraint(s) that stopped any potential lucky break towards advanced civilisation happening.

To defend my hypothesis here more directly: there is a technical possibility that an earlier dominant species might have achieved an advanced civilisation, but as there is no evidence for this it is better to suggest advanced civilisation only became possible at the point it did. This requires the belief that civilisation will develop as soon as conditions are right, but as one condition for civilisation (as far as we know) is to have a species as adaptable and opportunistic as humanity, that seems a reasonable assumption.

I’m not going to suggest this is definitely right, but the assumption that advanced civilisation is not possible before a certain number of conditions have been met, and that humanity is at the end of a process that has met those conditions as quickly as possible, seems reasonable. And it allows us the wonderful answer to the where’s all the aliens question of ‘just where we are, wondering where all the aliens might be’.

It is worth noting that we apparently don’t have great evidence that the dinosaurs didn’t create a sophisticated technological civilization.

https://www.theatlantic.com/science/archive/2018/04/are-we-earths-only-civilization/557180/

This is an article by one of the two authors of a paper looking at whether we’d be able to identify evidence of advanced technological civilizations in our own past; the other author is the head of Goddard Institute for Space Studies, so it’s not like this is cranktown. Although they do refer to it as the Silurian Hypothesis, because why wouldn’t you?

Actual article here: https://arxiv.org/abs/1804.03748

The general answer is that it would be very tough to identify the existence of even a global industrial civilization that lasted for a hundred thousand years, let alone the brief couple of centuries like ours.

The specific answer is that you might be able to work it out by finding the geological evidence left behind by releasing a bunch of fossil carbon into the atmosphere and causing drastic climate change; however, they also point out that if a species were capable of surviving as an industrial society for more than a few hundred years, they probably found a way to do it without wiping themselves out with climate change, which would therefore reduce our ability to detect them.

A couple thoughts on “Prior Earth Civs”:

1.) I’m kind of undecided if it’s possible to detect such a past civ or not. But I’d say if it got to 1960s level we should be able to observe whatever it left behind on the moon and further we should be able to detect radioactive isotopes and the like.

2.) There’s possibly another filter that goes like this:

“Humans needed fossil fuels to bring about the industrial revolution. If there is a first civ that uses all the fossil fuels (and possibly metals like iron) then no other civs can arise until those fossil fuels regenerate. Meaning that if there is a nuclear or other apocalypse, planets can only support an Industrial Civilization once every ~500 million years (or however long it takes the oil to come back)”

I think this isn’t entirely true. I figure, you know, 18th century England/France was a pretty scientifically advanced place. They’d probably eventually work out the math behind solar or nuclear power. Though it could delay them by a thousand years or so. Still a thousand years delay is a much smaller delay than 500 million years.

I think that you could identify any civilization on a similar scale to ours through graveyards. If you have billions of individuals being ritualistically buried across the globe over thousands of years then they ought to be over represented in the fossil record, you would at least expect to find skeletons near impressions of their technology.

Fossils are mentioned in the paper. The issue is that fossilization is really, really ludicrously rare, and only occurs in the exact right circumstances. Almost nothing actually gets fossilized.

I recall reading something (which source I of course can’t now recall – maybe ‘A Short History of Nearly Everything’ but maybe not) that mentioned how we have a really skewed idea of Earth’s past because of the fossil record. The great majority of organisms that ever lived left no fossils, and so we have this incredibly tiny sample from the ultra-rare instances where it did occur, and that lets us imagine we know what was going on in the past.

The example they gave would be if a civilization of intelligent beings living on Earth a few hundred million years from now thought they had a good grip on what life was like in our time, because they had discovered fossils of a housecat, a mastodon, and a triceratops.

Fossils basically give us an idea of which animal fell into exactly the right patch of mud every few million years, and that’s about all. In the hypothetical example of a species trying to deduce our existence in a hundred million years, the odds they would find any fossil remnant of our civilization are very small. (In fact, the authors there argue that even if our industrial civilization exists for another hundred thousand years, we will probably be hard to find from the fossil record – let alone our mere few-centuries blip.)

I do like the authors there somewhat cheekily pointing out that there is at least one period in Earth’s history where it actually does appear that all the fossil fuels got somehow removed from the ground, burned, and caused a period of massive global warming. They are very careful not to actually claim that this is because of dinosaurs driving trucks, but, you know, their whole paper is basically arguing that we don’t have any particular reason to think it wasn’t, either.

Wouldn’t “truck fossils” or unusually shaped iron be pretty easy to spot too? Again, especially on the Moon.

Only if they got to a 1950s level. Anything before a Sumerian level would still be pretty tough to spot.

I mean like animal fossils are easy to miss because they’re not that big. But Naval Cruisers are sort of gigantic.

Or they can build a civilization using biofuels or wind/hydro power or whatnot, which is almost certainly possible for humans and even more certainly possible for some non-trivial subset of aliens living on the set of all possible life-bearing worlds. Worst case, industrialization is an evolutionary change over a millennium or so rather than a revolutionary one over a century, which is hardly a disadvantage in this case and may be advantageous in terms of favoring social stability and long-term planning.

I don’t think “everybody screws up the first time and is then condemned to the preindustrial dark age for ever and ever” is a terribly good candidate for the Filter. But, with this mathematical formalism, we should throw it in the mix as another possibility.

For humans you are still talking about (estimates) of about 5 million people alive prior to agriculture to 7 billion alive today. Civilization can handle 1,000 times as many individuals as proto civilization for humans, so if it lasts even for 1/10th of the time any fossil you found would be 90% (assuming a lot to get that number about distribution, I know) from the civilized era. That also doesn’t note that many earlier fossils will be found with tools and other signs of proto-civilization.

Sure, most species don’t leave fossils but most species don’t span the globe like humans, while practicing ritualistic burial (which I view as highly likely to be a component of any civilization for various reasons) and also making untold numbers of durable artifacts.

There is also a large distinction between an inaccurate view of the past, and a lack of any knowledge. If some future species only finds remains of cell phones they might have no idea what our civilization looked like or what they are for, but they would still be strong evidence that our civilization existed.
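As a rough check on the population argument above, here is a minimal sketch. It assumes fossils are drawn in proportion to population multiplied by duration, which is exactly the distributional assumption flagged as shaky above; the function name is made up for illustration.

```python
def civilized_share(pop_ratio, duration_ratio):
    """Fraction of all individual-lifetimes that fall in the civilized era.

    pop_ratio: civilized population / pre-civilized population
    duration_ratio: civilized duration / pre-civilized duration
    Assumes (hypothetically) that fossils sample lifetimes uniformly.
    """
    civilized = pop_ratio * duration_ratio  # relative weight of the civilized era
    return civilized / (civilized + 1.0)    # pre-civilized era has weight 1


# Population figures quoted in the comment: ~5 million vs ~7 billion.
observed_ratio = 7e9 / 5e6

# The comment's round numbers: 1,000x the population for 1/10th of the time.
share = civilized_share(1000, 0.1)
print(f"population ratio: {observed_ratio:.0f}x, civilized share: {share:.1%}")
```

Under these simplified assumptions the share comes out nearer 99% than 90%, so the 90% figure looks conservative rather than optimistic.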

The claim being made is that those durable goods aren’t durable in the timescales we’re talking about. Even skyscrapers, aircraft carriers, and other large structures will be completely eroded and leave no sign other than faint chemical residue in a geological stratum in a matter of a few hundred thousand years – let alone cell phones and graveyards. Over a timescale of millions of years, everything is reduced to molecular-scale dust, except for the vanishingly small percentage of objects which just happen to wind up in precisely the right environment to preserve them, or to leave the right imprint in a patch of mud and get filled in with a sedimentary rock, which someone is then lucky enough to find.

Basically, there’s a really good chance that in any given million year period of history, there might be only a handful of fossils made, if any; you can fit all of human civilization from the first flint-knapped tool in Africa to the moon landing into the gaps in the fossil record and still have room left over for the next few hundred thousand potential years of civilization.

The point about relics on the moon is a good one – stuff up there would last a lot longer. On the other hand, it would get buried over a long enough period of time. A dinosaur lunar module buried under five hundred million years of moon dust might still have evaded notice.

Which percentage isn’t actually vanishingly small, because check out all the museums full of impressively un-vanished dinosaur fossils (and their basements full of even more numerous but less impressive fossils).

If a tree can be fossilized, so can a wooden ship. And the lifespan of a wedding ring dropped in the mud might plausibly be measured in eons. Has anyone actually done the math on the probability of a civilization’s worth of artifacts entirely vanishing, or is it all just “steel rusts, mumble mumble Ozymandias” handwaving? If there’s math, a pointer would be genuinely appreciated.

Did some Googling and found this – http://www.bbc.com/future/story/20180215-how-does-fossilisation-happen

(It references Bryson so I think it must indeed be A Short History of Nearly Everything I am fuzzily remembering.)

Every fossil is a small miracle. As author Bill Bryson notes in his book A Short History of Nearly Everything, only an estimated one bone in a billion gets fossilised. By that calculation the entire fossil legacy of the 320-odd million people alive in the US today will equate to approximately 60 bones – or a little over a quarter of a human skeleton.

But that’s just the chance of getting fossilised in the first place. Assuming this handful of bones could be buried anywhere in the US’s 9.8 million sq km (3.8 million square miles), then the chances of anyone finding these bones in the future are almost non-existent.

Put another way – we have a few thousand dinosaur fossils – and dinosaurs were a globally extant form of life for more than a thousand times longer than our species has existed (let alone our cell phones).

Just for completeness’ sake, here is the bit I was misremembering:

IT ISN’T EASY to become a fossil. The fate of nearly all living organisms-over 99.9 percent of them-is to compost down to nothingness. When your spark is gone, every molecule you own will be nibbled off you or sluiced away to be put to use in some other system. That’s just the way it is. Even if you make it into the small pool of organisms, the less than 0.1 percent, that don’t get devoured, the chances of being fossilized are very small.

In order to become a fossil, several things must happen. First, you must die in the right place. Only about 15 percent of rocks can preserve fossils, so it’s no good keeling over on a future site of granite. In practical terms the deceased must become buried in sediment, where it can leave an impression, like a leaf in wet mud, or decompose without exposure to oxygen, permitting the molecules in its bones and hard parts (and very occasionally softer parts) to be replaced by dissolved minerals, creating a petrified copy of the original. Then as the sediments in which the fossil lies are carelessly pressed and folded and pushed about by Earth’s processes, the fossil must somehow maintain an identifiable shape. Finally, but above all, after tens of millions or perhaps hundreds of millions of years hidden away, it must be found and recognized as something worth keeping.

Only about one bone in a billion, it is thought, ever becomes fossilized. If that is so, it means that the complete fossil legacy of all the Americans alive today-that’s 270 million people with 206 bones each-will only be about fifty bones, one quarter of a complete skeleton. That’s not to say of course that any of these bones will actually be found. Bearing in mind that they can be buried anywhere within an area of slightly over 3.6 million square miles, little of which will ever be turned over, much less examined, it would be something of a miracle if they were. Fossils are in every sense vanishingly rare. Most of what has lived on Earth has left behind no record at all. It has been estimated that less than one species in ten thousand has made it into the fossil record. That in itself is a stunningly infinitesimal proportion. However, if you accept the common estimate that the Earth has produced 30 billion species of creature in its time and Richard Leakey and Roger Lewin’s statement (in The Sixth Extinction) that there are 250,000 species of creature in the fossil record, that reduces the proportion to just one in 120,000. Either way, what we possess is the merest sampling of all the life that Earth has spawned.

The whole chapter on fossils is worth a read. He mentions that Trilobites were both the most evolutionarily successful animal in Earth’s history, surviving about 300 million years all over the planet, and that they lived in almost the optimum environment to actually be fossilized – and even the number of Trilobite fossils discovered is still measured in the tens of thousands. A whole specimen is still quite rare.

EDIT – One last note, for another way to think of it – based on those numbers, we should expect that about 73 of the current 8.7 million species on Earth will appear in the fossil record in the future.
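For anyone who wants to redo the arithmetic in the quoted passages, here is a minimal sketch. The inputs are the figures quoted above (one bone in a billion, 206 bones per skeleton, 30 billion species ever, 250,000 species in the fossil record, 8.7 million species today); the variable and function names are just illustrative.

```python
BONES_PER_SKELETON = 206
FOSSIL_RATE_PER_BONE = 1e-9  # "one bone in a billion", as quoted from Bryson


def expected_fossil_bones(population):
    """Expected number of fossilised bones from a population of humans."""
    return population * BONES_PER_SKELETON * FOSSIL_RATE_PER_BONE


# Bryson's figure (270 million Americans) and the BBC's update (320 million).
bones_bryson = expected_fossil_bones(270e6)
bones_bbc = expected_fossil_bones(320e6)

# Species-level odds: 250,000 species in the record out of 30 billion ever.
one_in = 30e9 / 250_000

# Applying the same rate to the 8.7 million species alive today.
expected_species = 8.7e6 / one_in

print(f"Bryson: {bones_bryson:.1f} bones, BBC: {bones_bbc:.1f} bones")
print(f"one species in {one_in:,.0f}; about {expected_species:.1f} of today's species")
```

The results land close to the rounded numbers in the quotes: roughly fifty to sixty-odd bones, one species in 120,000, and a little over seventy present-day species.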

Bones also aren’t durable on that time scale, and yet we find evidence of bone, even if we don’t find the bone itself.

I would guess that ritualistic burial increases those odds a ton, humans currently preserve their dead intentionally, increasing the time that they could become fossils by at least an order of magnitude, and perhaps 3 or 4 orders. Just that observation alone makes it unlikely that we are going to be represented in the fossil record at an average rate.

The other issue is that humans have way more than just their own bones to get preserved. Most animals have their own bones, footprints and maybe a burrow; I have a house, car, some portion of all the roads, trash, pollution and all kinds of other crap to leave behind. It is still an enormous long shot that I will become part of the long term record somehow, but if we went by weight of all the things that could be preserved of mine the odds of something being found vs that of a similarly sized animal has to be a 1,000 times in my favor.

Sure, that all sounds reasonable. But if we say modern humans and our artifacts are 1,000 times more likely to be found than other species, that still means the odds are 1 in 120 that we get discovered.

These are multiple extremely rough, sketchy probability values being weighed against each other, so take the actual numbers with a grain of salt, but the point is that the odds are so heavily weighted in the other direction that saying something makes it more likely isn’t necessarily the thing that makes a difference.

Note, the point is also not, “There is no chance artifacts from a global technological civilization could ever be discovered.” Just that failing to find them isn’t proof of anything. It isn’t weird that we wouldn’t find them – all else being equal, you should expect not to find evidence of any particular thing that happened in the deep past.

Whilst absence of evidence is not evidence of absence, I’m pretty sure there’s a positive case against a dinosaur (or preceding era) advanced civilisation which doesn’t require us to try and use the very incomplete fossil record. It goes something like this:

1. There is no direct evidence of an earlier advanced civilisation on this planet.

2. Evidence such as the apparent disappearance of fossil fuels is not, without evidence of consumption, evidence of earlier civilisation. It is evidence that something happened but that’s not exactly definitive about what this was.

3. Our knowledge of what is required to make a civilisation is perhaps biased by our own species’ experience, but certain prerequisites seem to be required (examples follow):

a. An agricultural surplus to allow the critical mass of civilised beings to shift to non-agricultural work. This is based on grass and cereals in our own case, and I’m not sure earlier plants had the same productive capabilities, although my paleobotany is not that strong.

b. A source of power to allow the development of metallurgy to the point that widespread fossil fuel extraction was viable (better tools, and pumps are kind of essential for this). For us this was charcoal, which requires trees or palms, neither of which I believe were widespread in the period of the dinosaurs. Tree ferns being frond based seem less likely to provide the required carbon as fronds don’t carbonise as well.

c. A market or extractive economy spanning much of the globe to generate enough demand to fuel manufacturing and to supply resources when the major inputs are still space-extensive raw materials. The implication of this is that we can’t argue for an isolated civilisation as civilisation seems to require a large amount of the planet to be involved.

4. It seems unlikely that a civilisation could develop without the conditions in 3. above. However different models have been proposed and it might be worth addressing a couple raised here:

a. Water and wind power. Note that (with charcoal) this was sufficient to get to about the eighteenth century, but it’s hard to see how the subsequent key advances that make these viable power sources for an advanced civilisation, primarily batteries, power transmission and improved materials, would come about without use of fossil fuels. These are key ingredients for the plastics and to a point modern ceramics involved for a start. And metallurgy to produce even nineteenth-century electrical systems needed reliable supplies of good fuel.

b. Nuclear and solar. It’s tempting to suggest that a civilisation that developed nuclear engineering without fossil-fuel backed technologies in material science would definitely go extinct pretty quickly… I can’t see how either technology, relying as they do on the conversion or containment of energy using materials developed with or from fossil fuels would be viable without fossil fuels.

5. Whilst 3-4 are not conclusive (and subject to correction by someone with better knowledge or who has read more relevant Wikipedia pages) it seems that if we are looking for civilisation we are looking for something that has utilised available resources in a similar way to our own. This means that the traces of civilisation would be recognisable to us, in particular metallurgy and perhaps the traces of radiation. Both should leave anomalous geological features that are not presently identified.

6. So any putative early civilisation has to have the prerequisites for civilisation in some form, and would likely have to draw on fossil fuels to develop from that point (apparently without leaving geological indicators that they had done so). Neither situation seems very likely. Any proponent of an earlier advanced civilisation needs to at least hypothesise where it developed the prerequisite resources or how the civilisation might differ. This isn’t meant to be a barrier to arguing the case, but a suggestion that there is a case that needs to be made beyond saying we can’t disprove the idea.

I am going to zoom out to the broad points, which are as follows.

1. You can’t compare humans to the average likelihood of being preserved because we are way off the scale in terms of both potential artifacts and our behaviors which make them more likely to be preserved. It is plausible that we are talking well more than 1,000 times more likely, heck I wouldn’t even say plausible. I would say that humans are way over 1,000 times more likely to be preserved than any individual species of hummingbird, shrew, or insect.

2. There are going to be a great number of signs pointing at our existence in the geologic record basically all going “something weird happened here”. You have a mass extinction event in terms of the number of species, but (perhaps) without a decline in total fossils left. After humans are gone there is likely to be a massive explosion in new species; depending on how we go you could well see the world overrun by the descendants of dogs, pigs, chickens, wheat, corn and apples or you could see all large land animals disappear and then have a new bunch pop up suddenly. There is going to be a weird sedimentary layer where it will look to future geologists as if someone dug up and concentrated all kinds of elements. The amount of gold in that layer will probably be curious in itself, let alone the iron, copper, lead, aluminum etc.

The combination of these two strongly imply that humans will leave something behind that will point to our existence and that there will also be broader signs encouraging the interested to look where and when they need to so that they can find it.

I should note at the start that I do not actually believe in the existence of a civilization of advanced primordial serpent men, as awesome as that would be. I mostly just find the thought exercise interesting. That said…

Couldn’t you argue that the Paleocene-Eocene Thermal Maximum is at least circumstantial evidence for just that? The fossil fuels didn’t just disappear – something caused them to both be removed from the ground and burned, their carbon released into the atmosphere, causing a massive global warming effect.

In the original paper I linked to that started this, the primary thing they point to suggesting that this was some undetermined natural process and not intelligent action is that it happened over a period of a few hundred thousand years, and not a few centuries. This would certainly represent a very different/slower model of civilization than ours, but it does seem like it could plausibly represent consumption of fossil fuels. (Even though it probably wasn’t.) And of course this was 55 million years ago, so it would have been a hypothetical post-dinosaur civilization, but still.

I also don’t know enough botany to have the foggiest idea if it would have been possible to get agriculture going with pre-grass plants. But from above, it does seem like you could get pretty decent carbon-bearing fossil fuels, because something burned a crapload of them and caused a global warming mass extinction 55 million years ago.

Again, this doesn’t mean those fossil fuels were consumed, but it means they probably could have been.

(Also, I just looked it up, and trees originated 300 million years ago or so, and by the end of the dinosaur era they were pretty prevalent.)

This is the real sticking point, I think (and what inspired that paper that started this whole tangent.) The question of whether there would be any traces left in the geological record that we would recognize is apparently a pretty debatable one.

They make the point that most radioactive isotopes, for instance, don’t actually have a half life long enough to be detectable in the timeframes under discussion here. They do make the note that a full-scale global nuclear war would leave significant amounts of Plutonium-244 and Curium-247 that would last long enough and be spread out enough to potentially detect – but less global nuclear activity might be tough to spot.

Metallurgy seems even more problematic on the scale of millions of years. It’s not that there’s nothing that could be detectable – to quote, “anthropogenic fluxes of lead, chromium, antimony, rhenium, platinum group metals, rare earths and gold, are now much larger than their natural sources […], implying that there will be a spike in fluxes in these metals in river outflow and hence higher concentrations in coastal sediments.” The tricky part is that there tend to be plausible natural explanations for a lot of these types of events too. Proving it was caused by a civilization is the hard part.

To flip back to the Paleocene-Eocene Thermal Maximum again – imagine that hypothetical future civilization trying to deduce our own existence from geological records, without artifacts. They’ll see the results of the fossil fuels being burned, and they might find the metal residue we left in sedimentary layers. The question then becomes, though, how they rule out natural, non-intelligent explanations for us in the same way we default to the same for similar events in our own past?

The really fun part of that paper is that they go through and identify the likely geological footprints we would leave if you knew what to look for – and then look at several events we know about in the geological record that seem to fit some of those criteria. They don’t come right out and say, “Dude, dinosaurs with rocket packs!” but that’s clearly the wink they’re having at the audience.

But that’s really the problem they’re trying to solve – how do you tell the difference between the evidence we leave behind and, say, the Jurassic Ocean Anoxic Event? Or alternately, do we call that event potential evidence of an ancient civilization too?

(They do suggest that it seems possible that synthetic plastics might last long enough to be detectable and identifiable as synthetic, although we don’t have a great way to predict the decay process for plastics on a geological scale, so that one’s kind of an X-factor.)

@MrApophenia

I’d say the big importance of this debate is if we find a lot of old planets teeming with animal life. If we find such a planet one of the first things we should do (besides testing the animal’s intelligence) is search for artifacts. So these thought exercises are likely to be very important and valuable.

In such a scenario the easiest place to look would probably be objects like the moon where things would remain intact for millions of years at the least. Then digging up/using radar/advanced fossil hunting.

Thanks, that’s helpful. So, maybe 15,000 bones for civilized humanity since the early Bronze Age, and then we have to guesstimate the ratio of technological artifacts to bones, normalized by effective fossilizability.

We’re still going to need the math on this part, though. Tricky.

This is contradicted by the earlier stuff, fossils are not found randomly, they are found in specific types of rock, or get concentrated by rivers running through certain types of rock. Civilization tends to concentrate potential fossils even further. Every time someone finds half of a mandible that could have been an early human ancestor a dozen graduate students will spend a summer searching the surrounding areas for more remains. To discover evidence of dinosaur civilization you don’t need to randomly find a piece of it, you just have to find an interesting specimen of any kind near where evidence of civilization is and it is reasonably likely to be discovered.

I think this is wrong, we have a few thousand good skeletons, each with dozens to hundreds of bones, and tens of thousands of individual specimens. The total for all dinosaur fossils is probably in the hundreds of thousands at least.

Speaking of gold, are there any processes that would deform all of it completely over the next billion years?

Investment bars and wedding rings seem like a fairly unequivocal sign that we were here, even if only a few are ever found.

I did more reading, and you are right about the volume of individual fossil specimens. There is actually a database of every dinosaur fossil recovery site and fossil ever written up in a published paper (to as high a completeness as the project has been able to catalog, of course), called the Paleobiology Database – as of the most recent article I found it comes out to about 8,000 fossil sites, 25,000+ organisms, and lots and lots of individual bones.

However, even all that reading kind of reinforced the broader point too. Here is an interesting article on statisticians who tried to use the variance in frequency of various dinosaur fossils discovered to estimate the total number of dinosaurs, including undiscovered ones.

The results came back with a couple thousand total species of dinosaurs – which is almost certainly wrong, and the explanation was that the fossil record just doesn’t provide enough information to draw conclusions like that.

http://blogs.plos.org/paleocomm/2016/03/30/how-many-dinosaurs-were-there/

Steve Brusatte of the University of Edinburgh is sceptical though: “I would take these numbers with an ocean full of salt”, he said. “There are over 10,000 species of birds – living dinosaurs – around today. So saying there were only a few thousand dinosaur species that lived during 150+ million years of the Mesozoic doesn’t pass the sniff test. That’s not the fault of the authors. They’ve employed advanced statistical methods that take the data as far as it can go. The problem is the data. The fossil record is horrifically biased. Only a tiny fraction of all living things will ever be preserved as fossils. So what we find is a very biased sample of all dinosaurs that ever lived, and no amount of statistical finagling can get around that simple unfortunate truth.”

Is this commonly experienced?

I’ve found that it seems more common for religions to consider humans less powerful, less durable, less morally consistent etc. than the sapient beings they traditionally believe to originate beyond Earth, even if they do consider humans uniquely great in the terrestrial order.

Which religions believe sapient beings come from beyond earth? Scientology perhaps. Everything else is in origin heliocentric and so doesn’t have the correct mental concepts. Angels are not of this sphere, but are part of the world not aliens.

@Watchman:

They don’t need to be, for the point that religions don’t necessarily “consider humans uniquely great”; I was trying to taboo ‘celestial’ in my comment, not ‘alien’. Believing someone is not from Earth doesn’t mean one has to believe they come from space or other planets; one might conjecture them to be omnipresent or otherwise nonlocal, or maybe that they come from outside the simulation.

In light of that,

Belief that a sapient being created the Earth or the universe at large occurs in many variants of Abrahamic belief systems; this belief, requiring a being to exist either before the Earth or outside of our timeline altogether, implies that that being is not itself from Earth.

Not if the first gains an effectively insurmountable advantage, as seems likely (singularity). Then we only need to explain why our local effectively omnipotent agent chooses not to reveal itself to us; but that’s just N=1, so any number of explanations will do.

What do you mean by “probably”?

Eyeballing Sandberg et al’s graphed Monte Carlo estimate, with p>0.9 the number of technological civilizations in the universe is either exactly one or greater than one hundred billion. So you’re going to need something like a 99.999999999% probability of self-destruction prior to universal conquest, to make this work by any other means than “there can have been only one”.

Even assuming civilizations are absolutely limited to conquering no more than a single galaxy, and that their galactic conquests will not be remotely visible, you still need 99.9% probability of self-annihilation, and that’s three unreasonable assumptions right there.
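A minimal sketch of where the eleven-nines figure comes from, assuming each of N civilizations independently avoids self-destruction with the same small probability (a simplification, not something the paper claims). N is set to the hundred-billion threshold mentioned above; the function names are hypothetical.

```python
import math

N_CIVILIZATIONS = 1e11  # "greater than one hundred billion", taken at the threshold


def expected_survivors(n, p_survive):
    """Expected number of civilizations that reach universal conquest."""
    return n * p_survive


def prob_no_survivor(n, p_survive):
    """Probability that every one of n independent civilizations self-destructs."""
    # log1p keeps precision when p_survive is tiny.
    return math.exp(n * math.log1p(-p_survive))


# 99.999999999% self-destruction is a survival chance of 1e-11 per civilization.
p_survive = 1e-11
mean_survivors = expected_survivors(N_CIVILIZATIONS, p_survive)
p_empty = prob_no_survivor(N_CIVILIZATIONS, p_survive)
print(f"expected conquerors: {mean_survivors:.2f}, chance of none: {p_empty:.2f}")
```

At that self-destruction rate the expected number of conquerors is about one, and the chance of seeing none at all is only around 37%, so the rate would need to be higher still to make an empty sky the likely outcome.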

@Baby Beluga

Yup, that’s my understanding too. We very likely live under the sway of some effectively omnipotent entity, either one that took over its lightcone (which we lie within), or took over the whole universe (if lightspeed is not a fundamental limit), or many universes, or that is simulating us or otherwise created our universe.

Anthropic considerations make this even more likely.

Overall, growing up as a natural civ and finding oneself on the verge of singularity in an old universe is *extremely unlikely*. Only a tiny fraction of civs satisfy these conditions. (Admittedly I haven’t crunched the numbers, but this is my intuition).

At this point I am reminded of the Wearing the Cape series. At one point, a character (from the future) explains one theory of why there is no other detectable life in the universe (and they can detect much better than we can): the Hyperion Hypothesis that the odds of life arising in this universe are in fact actually zero. Life on Earth came from another dimension.

why counterintuitive? seems logical to me

it’s a very very outside view evidence, so it doesn’t really combine with most of your other sources of evidence abt the hypothesis [we’ll one day either destroy ourselves or take over the universe]

Do the authors offer an analogy to something else that we know to be true?

I know you’ve heard this before, but abiogenesis occurred really quickly. It took vastly longer for multicellular life to occur than single-celled life; if anything, I’d guess that’s where a lot of the filter (such as there is one) is.

I’ve only skimmed the paper and the abiogenesis supplement, but it looks like they’re modelling an extremely wide range of abiogenesis likelihoods on the grounds that we don’t have empirical data to go on and there’s a ton of theoretical reasons for a low likelihood to be plausible.

Empirically, we’ve examined one Earthlike planet in detail, and we’re pretty sure abiogenesis occurred exactly once there: zero is unlikely since there’s clearly life now (but panspermia hasn’t been conclusively ruled out), and more than one would predict multiple families of RNA transcription mechanisms, which we fail to observe despite diligent effort. And that one is the minimum we’d expect to observe evidence of, since we need either abiogenesis or panspermia in order for us to be around at all to observe it. Abiogenesis occurring in the first few tens of millions of years after the oceans formed does suggest a higher likelihood, but the lack of multiple independent abiogeneses on Earth suggests a lower likelihood, especially if we consider the possibility alluded to in the paper that the conditions for abiogenesis might only exist for a relatively short window (in which case early abiogenesis wouldn’t mean anything because the choices are “early” or “nothing”).

It depends on how long it would take the results of an abiogenesis event to spread across the oceans and fill any potential niches for other abiogenesis-created primordial life. We’d still say life has a very high chance of arising even if things line up so it only happens every 50,000 years or so, if it only takes 25,000 years for the first one to spread across the world.

I read somewhere that before life (and the consequent coal and carbonate rock deposits) the density of carbon in the oceans was roughly the same as chicken soup. One mechanism for the first successful life to suppress further abiogenesis is just consuming all the free fixed carbon.

I’ve read that the reason we know abiogenesis, at least to the point of the selfish gene, took less than a million years is that the entire ocean goes through the circulation through hot crustal rocks in that time, so nothing that is non-self-reproducing can accumulate for longer than that.

One can extend this to guessing that early life was a sequence of innovations, each one allowing the contemporary life-system to get access to greater stores of energy and/or carbon in the ocean, and thus adaptively radiating a new lifestyle and snuffing out the evolutionary advantage of duplicating that feat again.

There is one instance of this that we have some evidence for: water-based photosynthesis. The first organism that assembled the machinery to do that (and it is clearly a derivative of simpler photosynthetic systems) could obtain electrons from water rather than being limited to using low-energy carbon species. In that case, the new substrate wasn’t exhausted, but the new life form became the dominant auto/chemotroph (the base of the food chain) by sheer numbers.

Intriguingly, there is an alternative photosynthetic system: some bacteria use rhodopsin, I think, to extract energy from light, though they don’t use it to drive the water-to-fixed-carbon reactions.

Interestingly, the quality of life that is needed to successfully fill the ocean is terrible. Assuming that the ocean is sterilized by the crustal water circulation in 100,000 years, the first reproducer has to fill the ocean in maybe 25,000 years. But it takes only 120 doublings to turn a single bacterium into the mass of the entire ocean, so a primal life-form with a net doubling time of 100 years would survive.
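The doubling count can be sanity-checked with a short sketch. The two masses are my own order-of-magnitude assumptions (about 1.4e21 kg for the ocean and about a picogram for a bacterium), not figures from the comment; the 100-year doubling time and 25,000-year deadline are the comment's.

```python
import math

OCEAN_MASS_KG = 1.4e21      # assumption: rough mass of Earth's oceans
BACTERIUM_MASS_KG = 1e-15   # assumption: roughly one picogram per cell


def doublings_needed(start_mass, target_mass):
    """Number of mass doublings to grow from start_mass to target_mass."""
    return math.log2(target_mass / start_mass)


doublings = doublings_needed(BACTERIUM_MASS_KG, OCEAN_MASS_KG)

DOUBLING_TIME_YEARS = 100   # the slow "primal life-form" from the comment
DEADLINE_YEARS = 25_000     # time allowed to fill the ocean, per the comment

years_to_fill = doublings * DOUBLING_TIME_YEARS
print(f"about {doublings:.0f} doublings, about {years_to_fill:,.0f} years")
print("fits within the deadline:", years_to_fill < DEADLINE_YEARS)
```

With these inputs the count lands near 120 doublings and about 12,000 years, comfortably inside the 25,000-year window, and it barely moves if the assumed masses are off by a factor of ten, since each factor of ten is only about 3.3 doublings.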

Can someone explain the ‘crustal circulation’ thing? What is that? I’ve googled it and not found a definition.

I don’t see how that works; what is it that is supposed to “accumulate” in a non-living ocean?

If, say, p(abiogenesis) is 1E-9 per ocean-year, then Earth gets an ocean, and in the first million years, life does not exist. Anything that has accumulated in that ocean is by definition random sludge that isn’t life, isn’t selected for being life-friendly, and isn’t what we want, but that doesn’t matter because it doesn’t last and we start over in the second million years with a “new” ocean.

And life doesn’t evolve in that ocean, or the one after it, etc, but in year five hundred million A.O. and after five hundred sets of baby-free bathwater being thrown out and replaced, Gaia rolls a natural 18 or whatever and we get life. Which, being lively, fills all the niches and doesn’t go away. This is consistent with observation.

Oceans being cycled every million years can only affect the probability of abiogenesis to the extent that abiogenesis depends on many millions of years of accumulation of stuff that isn’t life, isn’t selected for life-friendliness, but is nonetheless necessary for life, and I don’t think that’s the case. It doesn’t take millions of years to salt an ocean with simple organic compounds, and anything more complex that hasn’t been evolutionarily selected for is almost certainly the wrong stuff that will have to be torn back down to simple organic compounds to be useful for life.

But, if it turns out that there is some long-term accumulation that is necessary for abiogenesis, the fact that it doesn’t happen (at least on Earthlike planets) is a case for abiogenesis being vanishingly unlikely, because now it can only happen if there is some freakish circumstance that causes an eon’s worth of accumulation to happen all at once. Which, again, is consistent with observation.
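Here is a minimal sketch of that argument, assuming abiogenesis is a constant per-year chance (the 1E-9 figure above) and that the ocean is wiped clean at a fixed interval. The `accumulation_years` parameter is a hypothetical knob for "how long sludge must build up after a reset before life can start"; none of the function names come from the thread.

```python
import math


def p_life_constant_hazard(p_per_year, productive_years):
    """P(at least one abiogenesis event) = 1 - (1 - p)^years, computed stably."""
    return -math.expm1(productive_years * math.log1p(-p_per_year))


def p_life_with_resets(p_per_year, total_years, reset_period,
                       accumulation_years=0):
    """Same chance, but the ocean resets every reset_period years.

    Abiogenesis is only possible once accumulation_years have passed
    since the last reset (0 means no accumulation is needed).
    """
    productive_per_cycle = max(0, reset_period - accumulation_years)
    full_cycles, remainder = divmod(total_years, reset_period)
    productive = (full_cycles * productive_per_cycle
                  + max(0, remainder - accumulation_years))
    return p_life_constant_hazard(p_per_year, productive)


if __name__ == "__main__":
    P = 1e-9                 # per ocean-year, from the comment
    T = 500_000_000          # five hundred million years
    RESET = 1_000_000        # ocean recycled every million years

    print(p_life_constant_hazard(P, T))          # no resets at all
    print(p_life_with_resets(P, T, RESET))       # resets, nothing to accumulate
    print(p_life_with_resets(P, T, RESET, accumulation_years=2_000_000))
```

With no accumulation requirement the resets change nothing, since every year is an independent roll. Once the required accumulation exceeds the reset period, the chance in this toy model drops to zero, which is the "vanishingly unlikely" branch of the argument.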

It seems plausible to me that there were multiple abiogenesis events, but only one has left surviving descendants.

If there are multiple possible mechanisms but ours provides a strong advantage, it’s entirely possible that abiogenesis occurred multiple times, but our protocellular ancestors crowded out all the other ones.

Multicellular life has evolved a bunch of separate times though. The Wikipedia article counts 46 independent times in eukaryotes https://en.wikipedia.org/wiki/Multicellular_organism#Occurrence and a bunch more in prokaryotes (although they do not achieve the same complexity). At the very least that suggests that the filter is one of the adaptations of eukaryotes rather than multicellularity itself, given that multicellularity seems to develop frequently in eukaryotes. My guess would be mitochondria, but there’s also an argument that perhaps prokaryotes could develop complex multicellularity but are outcompeted by the existing eukaryotes in those niches. Overall though it’s not really that long after the oxygen crisis that we get eukaryotes and then multicellular life. That suggests that the lack of multicellular life was not because it’s hard for it to develop but because it requires an oxygen-rich environment, which took a long time to arrive after the first life. This had to take a long time because the oxygen released by photosynthesis was at first chemically captured by dissolved iron, and it was not until all of this iron was saturated that oxygen could accumulate in the atmosphere.

Looking at the history of life as a whole, it’s amazing to me how fast we went from the Cambrian explosion to today. Multicellular life before the Cambrian explosion lasted longer than all the time since. On a geological timescale, we hit intelligent life really fast after getting molluscs. That suggests to me that the changes associated with the Cambrian explosion may be one of the harder hurdles to clear in the Fermi paradox equation.

According to Wikipedia, the earliest known evidence of life is 3.77 to 4.28 billion years old, or 120 to 630 million years after ocean formation. In the chart in your link the “Life” arrow should probably point to 4280m, and that’s the earlier end of the estimate’s range.

In the book Life Ascending many elements are covered. My take-away from the book is that the hard part of abiogenesis (the part which can’t be done with bucket chemistry) is the formation of the cell membrane. Just about all of the other elements such as RNA replication, etc., can be done at large scale with buckets full of stuff in a lab.

Thus it’s possible that the gating issue is the right situation in which what we think of as a cell membrane can develop such that cellular life can develop and expand. At that point, natural selection can take over.

I always got the impression the initial cell membrane was just some sort of bubble, which can come about from waves splashing on the shore, tidal vents, etc.

So the hard part is you need to get the right stuff trapped in the bubbles.

+1

What? Did they get self-replicating RNA molecules to arise from basic building blocks, without any material from living organisms to start it? I’d be extremely surprised at that. (And that’s the part I’d expect to be the hardest.)

I’ve never understood the issue to begin with, because it depends on the assumption that big, easily observed accomplishments are possible. Our current understanding of physics seems to be that they’re extremely difficult to the point that it’s likely nobody would bother. You can’t move or send messages faster than light, period, and that’s been our understanding for about a century now, right? Yes, you can have cryogenics or generation ships, etc., but the people you’re sending are, from Earth’s perspective, effectively ceasing to exist. You can send a message and get a response every eight years at the soonest. There’s no hope of practical trade and you’d go bankrupt trying to use it as a valve for surplus population.

The only reason you would have for doing it would be a commitment to preventing human extinction in the distant future, or insurance against asteroid strikes. But we can’t even be bothered to get off our asses about climate change, and we’re pretty sure that will have major repercussions in the lifetimes of today’s children. People just aren’t motivated to expend giant heaps of resources on fighting extremely distant or hard-to-conceptualize threats. Sure, we talk about it because we like the drama of it, but who honestly wants to leave everything they’ve known behind forever to live under extremely harsh and confined conditions with no hope of help or contact with anyone but a relatively small group of other similarly beleaguered people? Probably not many people. It’d be a life sentence in a (very unsafe) sciencey jail. Is it that improbable that intelligent life elsewhere would have similar priorities?

(I’m also not sure why it would be easier to colonize a planet in another star system–which is not likely to be particularly friendly to human life–than to create habitats in orbit around Sol, or greenhouses on Mars or whatever)

From the POV of the subset of Earth that is incapable of thinking in terms of centuries, yes. From the POV of, e.g., the Catholic or Mormon church, no. From the POV of a starship’s own passengers, no. From the point of view of transhumans or aliens with millennial lifespans, no.

The assumption that all decisions regarding interstellar travel or communications, for all species ever, will be made by the short-sighted majority of contemporary humanity or its exact equivalent in every alien species, is not one you can make with the confidence that your argument requires.

Have you read The Sparrow by Mary Doria Russell? Relevant to your comment, it’s about a Jesuit first-contact mission to a new planet and its aftermath, with lots of meditation on theodicy. Thoroughly enjoyable to the irreligious, as well (though I suspect growing up Episcopal at least gave me some theological background).

If you were a digitally uploaded transhuman with an effectively infinite lifespan, would you hang around for a very long time in some no-name system far from home? Or would you transmit yourself back once you’ve got your fill of exploration?

Why not both?

Well, with the long-lifespan aliens, you’re arguing that evolution will produce a species that calculates risk-reward/cost-benefit very, very differently from our own, regardless of how long it lives. What kind of environment would produce a creature that reasons in such a fashion, ignoring practical questions of expense and probable outcome and throwing itself on a grenade for the sake of an extremely large and abstracted group’s theoretical benefit in a distant future? Even assuming that it is feasible for a species to live that long (and not have horrible overpopulation problems). Not even getting into the transhumanism business, I’ll just say I have feasibility doubts there as well.

EDIT: This is to say, we don’t “think in terms of centuries” because that kind of thinking will get you killed on the savanna, or any other kind of evolutionarily competitive environment.

You don’t need to posit long-lifespan aliens at all. Entirely human institutions have demonstrably undertaken centuries-long projects with no immediate survival benefit; the Church’s cathedrals are the immediate example that comes to mind.

The idea that all of humanity will ignore the opportunity due to short time horizons is not credible. 95% of it, sure. But the remaining 5% will be the ones who actually do spread out and colonize the universe.

You can see the cathedral being built. It’s right there in the middle of the city, being raised up stone by stone. Just seeing it get built is a testament to the bishop’s power and prestige. People in another star system are invisible. Any conversation you attempt with them will be like trying to stream video transcribed from a dialup modem into morse code, sent by smoke signal, then reconverted into video by Navajo windtalkers. Those people, that ship, are gone. Even assuming they can recreate more than the palest miserable shadow of civilization on a hostile planet on their own.

I could see an interstellar voyage being launched by fanatical separatists, I guess. But anybody who wants to escape the rest of the human race that badly will not have good odds of holding an expedition together in tight quarters under stressful conditions.

I think you underestimate fanatical religious separatists. For one example springing readily to mind, consider the early years of Mormon Utah.

Utah is not one thousandth as remote or hostile as an alien world would be. Plenty of irreligious or modestly-religious people settled desolate parts of the American West.

I think you’re typical-minding here. You’re assuming the bishop ordered construction of the cathedral to project his power and prestige, rather than his stated (and probably actual) reason: to glorify God.

There are a small number of people who would commit to freezing themselves for an interstellar journey to explore and found a civilization, knowing no one on earth would ever know what they did. Some would even do it for the purpose of glorifying God.

While I obviously can’t go back and interview Medieval bishops, I’ve seen a lot of modern shiny-church-building drives. It is totally a prestige thing. Not to the extent of complete hypocrisy–part of it is that a big church might draw in the unbelievers–but there’s a reason Puritans et al reacted against church ornaments. It’s very, very easy for the cathedral to turn into the bishop’s giant stone (ahem) erection. Why should it surprise anyone if powerful men with miters have some of the same motives for building enormous edifices as men with crowns?

I’m not saying no one made cathedrals to show off, I’m saying some certainly did to glorify God. I had this daydream myself, as a Catholic and an electrical engineering student working in a robotics lab. I thought how neat it would be if eventually my robots could lead to the whole “post-scarcity society” thing where you could just build anything. I would design a beautiful cathedral my robots would build and call it “The Cathedral of the Miracle of the Multiplication of Loaves and Fishes” and feel deeply satisfied attending Mass in it. That wouldn’t have anything to do with showing my “power and prestige” because in the post scarcity society everyone else would have the same robots, too, so it wouldn’t really “cost” anything. It would just be for God.

It seems like people are raising the same general objection to your argument in different ways, and it is one of the frequent objections to most proposed candidates for The Great Filter: it requires universality but is almost certainly not universal. Your argument needs it to be true that no subset of any civilization would bother with the large expenditures of space colonization given the downsides. Space exploration is not a Moloch problem like climate change. It doesn’t require everyone to coordinate. Lots of nations have launched space exploration missions with small fractions of their budgets. Elon Musk is trying to do it all by himself. If technology eventually improves such that interstellar travel is possible, albeit expensive, all it takes is one Future Musk here or one Alien Musk somewhere in the galaxy to get it going.

For another objection, if you insist that the only reason anyone does anything is peen waving, then okay, it’s not inconceivable that a future space race is “whoever can launch a credible colony ship first wins.” That’s the Science Victory condition in the Civilization games.

Yes. I’ve played Civ. But it’s a video game. And once you’ve landed a single ship, the accomplishment is done, which is sort of the point; it’s a thing you do just to show you can. The same way we landed on a desolate rock in the sky. Then did it some more. Then everybody got bored and we stopped doing it, and now we lack the capacity for doing it any longer, and it’s nobody’s priority except for places like China who haven’t done it yet. There’s talk about private spaceflight, and that’s exciting because that’s never been done.

If you want to tell me that we’ll launch a mission for the same reason, say we do. What then? There’ll be initial excitement, then decades of waiting, during which time a lot of other things will happen and civilization will advance to the point of being unrecognizable to the people who left it. By and by they’ll land on some wretched rock and set about establishing a base, sending back lots of updates about equipment maintenance and radiation concerns. Assuming they manage to set up a base that doesn’t founder, they will be, basically, an unedited livestream of The Martian that goes on indefinitely, doesn’t get scripted to be exciting, and features old technology and values decades out of sync with what prevails back home. If it doesn’t work we’ll get scraps of information about whatever catastrophe killed them, possibly not even enough to tell what happened. How motivated are we going to be to start other missions?