[Content warning: religious people might feel kind of like this objectifies them and treats them as weird phenomena to be explained away.]

A major theme of this blog is: why do people disagree so intractably? And what can we do about it? Yes, genetics is a big part of the answer, but how does that play out in real life? How do those genes exert their effects? Does it involve human-comprehensible ideas? And how do society’s beliefs shift over time?

Gervais and Norenzayan (from here on “G&N”) write about how Analytic Thinking Promotes Religious Disbelief. They make some people take the Cognitive Reflection Test (CRT), a set of questions designed so that intuition gives the wrong answer and careful thought gives the right one. Then they ask those people a couple of questions about their religious beliefs (most simply, “do you believe in God?”). They find that people who do better on the CRT (ie people more prone to logical rather than intuitive thinking styles) are slightly less likely to be religious. In other words, religion is associated with intuitive thinking styles, atheism with logical thinking styles. I assume Richard Dawkins has tweeted triumphantly about this at some point.

Then they go on to do a couple of interventions which they think promote logical thinking styles. After each intervention, they find that people are more likely to downplay their religious beliefs. In other words, priming logical thought moves people away from religion.

If this seems fishy to you, it seemed fishy to the Reproducibility Project too. They ordered a big replication experiment to see if they could confirm G&N’s study. The relevant paper was just published on PLoS yesterday, and we can all predict what happened next. Let’s all join together in the Failed Replication Song:

Actually, it’s a little more interesting than this. Let’s look at it in more depth.

G&N bundled five different experiments into their original paper. Study 1 was the one I described above; make people take the CRT, elicit their religious beliefs, and see if there’s a correlation.

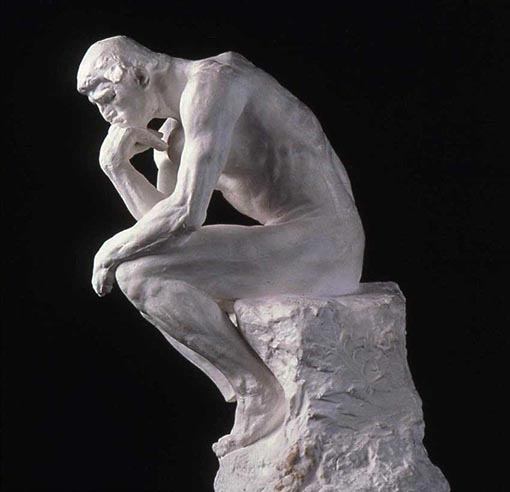

Study 2 “primed” people (n = 57) by making them look at one of two sculptures; either Rodin’s The Thinker, or a classical Greek sculpture of an athlete. Their theory was that looking at The Thinker would prime analytic thought, and they “proved” it in a pilot study where it improved performance on a syllogistic reasoning test. Then they deployed it on the question at hand; people who saw a picture of The Thinker were less likely to admit belief in God than people who saw the athlete sculpture, p = 0.03, with a respectable effect size of d = 0.6. Therefore, priming analytical thought decreases belief in God. There is no YouTube video that can express my opinion of this study, and I am not even going to try to find one.

Study 3 tried something similar. They made people (n = 93) unscramble words (the example they give is turning “high the flies plane” into “the plane flies high”). Some of these words were things about logical thinking, like “analyze” and “ponder”. Again, this was supposed to prime logical thought. Again, they did a pilot study to confirm that it worked (or maybe just because all those sentences about planes flying high had primed their brain to think about pilots). Again, when they made subjects do it and then assess their religious beliefs, they admitted slightly less belief in God, p = 0.04, d = 0.44.

Study 4 was a replication of Study 3 with a larger and more diverse sample (n = 148) and broadly similar results.

Study 5 was another variation on the same theme. Previous studies had shown that hard-to-read fonts prime analytical thinking, probably because they require lots of cognitive effort and time, so you’re already activating effortful parts of your brain instead of just making a snap judgment. So they asked people to rate their beliefs in God using two questionnaires; one in an easy-to-read font and one in a hard-to-read font. The people who got the hard-to-read questionnaire reported less religious faith (p = 0.04, d = 0.3).

Medieval Bibles looking like this probably caused the Enlightenment

The Reproducibility Project effort completed replications of Study 1 (the direct CRT/religion correlation), and Study 2 (the sculpture prime).

Their replication of Study 1 used 383 people (they were aiming for 2.5x the size of the original study for statistical reasons I don’t entirely understand, but fell very slightly short). It was essentially negative; on two out of their three measures of religion, there was no significant rationality/atheism correlation, and on the third it was much smaller than the original study, so small it might as well not exist. They nevertheless declined to publish these results for two reasons. First, because they were a “conceptual” rather than “direct” replication; they switched from the CRT to a slightly different test of reasoning ability because everyone on Mechanical Turk already knew the CRT (!). Second, because “subsequent direct replications of this correlation have pretty conclusively shown that a weak negative correlation does exist between these two constructs”.

I am really confused by this second point. Everyone else has found that there’s a relationship between rationality and atheism, your replication attempt finds that there isn’t, so you decide not to publish the replication attempt? Some might call this the whole point of doing replication attempts. I know that the Reproducibility Project are good people, so I am just going to assume I am hopelessly confused about something.

Then they move on to their replication of Study 2, the one that they did publish. This is the one with Rodin’s The Thinker. Once again, they used a sample 2.5x the size of the original, in this case 411 people. This time it was a “direct replication” with everything done exactly the same way as G&N (they used college students to solve the MTurk saturation problem). They found no effect of sculpture-viewing on religion, p = 0.38, η² = 0.001. Of note, and really cool, they confirmed the quality of their study by simultaneously testing the same sample for an effect they knew existed, and finding it at the level it was known to exist. They did a bunch of subgroup analyses and adjustments for confounders, and none of them did anything to recover the effect found in the original study.

The Reproducibility Project doesn’t get around to replicating studies 3, 4, or 5. But Studies 3 and 4 have been investigated by a different group in a slightly different context (CRT on liberal/conservative) and they find that the prime doesn’t even work; people who do the rational word scramble task don’t do better on the CRT. And the effect used in study 5 has been spectacularly falsified by sixteen different replication attempts – that is, hard-to-read fonts don’t even make you more rational at all, let alone make you less religious because of that increased rationality. Maybe it’s time for another Traditional Social Psychology Song:

So, why is this interesting? Seven bajillion vaguely similar priming-related studies have failed replication before. Now it’s seven bajillion and one. Can’t we just sing the relevant snarky songs and move on?

Probably. But my problem is that I keep trying to maintain these lines between studies where I know where they went wrong, and studies that seem like the sort of thing that shouldn’t go wrong. And when I see studies that I think shouldn’t go wrong, go wrong, I like to take a moment to be suitably worried.

My usual understanding of why these sorts of studies go wrong is a combination of overly complicated statistical analysis with too many degrees of freedom, unblinded experimenters subtly influencing people, and publication bias.

These studies don’t have overly complicated statistical analysis. They’re really simple. Do a randomized experiment, check your one variable of interest, do a t-test, done.

And these studies don’t have a lot of opportunity for unblinded experimenters to subtly influence people. The whole thing was done online. That seriously dampens the opportunity for weird Clever Hans-style emotional cues to leak through.

That leaves publication bias. I said the original paper contained five different studies, but for our purposes that isn’t true. If you count the pilot studies, it actually included seven. A brief description of the two pilots, summarized from the supplement:

Pilot 1: 40 people see either The Thinker or the athlete sculpture, then are asked to solve a series of syllogisms where the intuitively correct answer is wrong. The people who saw The Thinker got more answers right, p < 0.01, d = 0.9.

Pilot 2: 79 people unscramble either words relating to reasoning, or words not relating to reasoning. They are then asked the trick question “According to the Bible, how many of each kind of animal did Moses take on the Ark?” (I didn’t know this was an Official Scientific Trick Question when I wrote about Erica using it in Chapter 5 of Unsong). The people who unscramble rationality-related words are more likely to get the correct answer, p = 0.01.

So how do you get publication bias on seven different but related experiments performed in the same lab?

That is, if there’s a 5% chance of each experiment coming out positive by coincidence, then the chance of all of them coming out positive by coincidence at the same time is 0.05^7 = about one in a billion.

Yet the alternative – that these people performed a hundred-forty different experiments and reported the seven that worked – isn’t very plausible either. In particular, consider the two studies that combined a pilot with a main experiment. Unless there wasn’t even a pretense of doing anything other than milking noise, this had to be a single pilot study, followed by a single main experiment, with both of them being positive. And again, the chances of this happening by coincidence are really low.
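For anyone who wants to check the arithmetic in the last two paragraphs, here is a minimal Python sketch. It assumes every experiment is independent and has exactly a 5% chance of coming out positive by coincidence, which is an idealization; the function names are mine and purely illustrative.

```python
# Assumption: each experiment is independent and has a 5% false-positive rate.
ALPHA = 0.05
N_EXPERIMENTS = 7  # the five studies plus the two pilots


def chance_all_positive(alpha: float, n: int) -> float:
    """Probability that n independent null experiments all come out positive."""
    return alpha ** n


def expected_attempts_for_hits(alpha: float, hits: int) -> float:
    """Average number of null experiments you would need to run to collect
    `hits` false positives (the mean of a negative binomial, hits / alpha)."""
    return hits / alpha


p_all = chance_all_positive(ALPHA, N_EXPERIMENTS)
print(f"P(all {N_EXPERIMENTS} positive by chance) = {p_all:.3g}")
print(f"That is about 1 in {1 / p_all:,.0f}")
print(
    f"Experiments needed on average for {N_EXPERIMENTS} false positives: "
    f"{expected_attempts_for_hits(ALPHA, N_EXPERIMENTS):.0f}"
)
```

The second function is where the "hundred-forty" figure comes from: at a 5% hit rate you need 7 / 0.05 attempts on average to collect seven flukes.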

So what happened? A commenter brings up that they used different measures of religious belief in each study, for unclear reasons. Is it possible that they used all three of their measures for everyone, and took whichever worked?

I’m not sure. And this has cemented something I’ve been thinking a lot about lately – a move from “this study’s probably not flawed because X” to “I should always be concerned that studies may be flawed, until they replicate consistently”. Probably there are some people who know enough statistics that all of these patterns make sense to them. But if you’re at my level, I would recommend against trying to play along at home.

Or as someone on Twitter (sorry, I lost the link) put it recently: “Peer review is a spam filter. Replication is science.”

II.

There’s a loose end here which deserves some attention – the Reproducibility Project’s claim that “subsequent direct replications of this correlation have pretty conclusively shown that a weak negative correlation does exist between [reasoning ability and low religious belief]”.

I want to talk a little about these other studies. This is going to be kind of politically incorrect – it’s always sketchy to say science has proven that people only believe certain things because they’re irrational. So in order to keep tempers low and maintain the analytical frame of mind we need to deal with this logically, please stare at this picture of The Thinker for thirty seconds.

Done? Good. Pennycook et al (2016) does a meta-analysis of all the work in this area. He finds thirty-five different studies totaling over 15,000 subjects comparing CRT scores and religious beliefs. Thirty-one are positive. Two of the remaining four detected an effect of the same magnitude as everyone else, but didn’t have enough power to prove it significant.

The remaining two negative studies are delightful and deserve to be looked at separately.

McCutcheon et al’s is titled Is Analytic Thinking Related To Celebrity Worship And Disbelief In Religion?. Unsatisfied with just asserting that irrational people become religious, they expand the claim to add that they become the kind of person who’s really into celebrities. They do manage to find a modest link between irrationality and score on the “Celebrity Attitudes Scale”, but the previously-detected irrationality-religion link fails to show up. This is a little worrying because it’s a paper that got published on the strength of a separate finding (the celebrity one) and incidentally failed to find the religion link, which means it’s a rare example of something being publication-bias-proof.

The other one was Finley et al’s Revisiting the Relationship between Individual Differences in Analytic Thinking and Religious Belief: Evidence That Measurement Order Moderates Their Inverse Correlation. They find that if you measure rationality first and then ask about religion, more rational people are less religious, and theorize that doing well on rationality tests primes irreligion. But if you measure religion first and then ask about rationality, there’s no link. Among 410 people, those in the CRT-first condition produced a rationality-atheism correlation significant at p = 0.001; those in the religion-first condition got nothing, p = 0.60. I don’t see a direct comparison, and the difference between significant and nonsignificant isn’t necessarily itself significant, but just from eyeballing it, this is obviously a big deal. This is also worrying, because it’s another example of a study that found an exciting finding and so got published despite failure to replicate the result at issue.
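Since the paper doesn’t give a direct comparison, here is a rough way to sketch one from the two reported p-values alone. This is my own back-of-the-envelope approach, not anything from Finley et al, and it leans on assumptions I can’t verify from the summary above: that both p-values are two-sided, that the 410 people were split about evenly between conditions (so both z-scores share the same standard error), and that a normal approximation is good enough. The direction of the nonsignificant correlation isn’t stated, so the sketch lets you try both.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()


def z_from_two_sided_p(p: float) -> float:
    """Absolute z-score corresponding to a two-sided p-value."""
    return STD_NORMAL.inv_cdf(1 - p / 2)


def difference_test(p_a: float, p_b: float, same_direction: bool = True):
    """Approximate z and two-sided p for the difference between two
    independent effects, given only their two-sided p-values.

    Assumes equal group sizes, so the difference of the two z-scores
    has standard deviation sqrt(2) under the null of no difference.
    """
    z_a = z_from_two_sided_p(p_a)
    z_b = z_from_two_sided_p(p_b)
    if not same_direction:
        z_b = -z_b
    z_diff = (z_a - z_b) / sqrt(2)
    p_diff = 2 * (1 - STD_NORMAL.cdf(abs(z_diff)))
    return z_diff, p_diff


# Reported values: CRT-first p = 0.001, religion-first p = 0.60.
for same in (True, False):
    z, p = difference_test(0.001, 0.60, same_direction=same)
    print(f"same direction={same}: z = {z:.2f}, p = {p:.3f}")
```

With the actual correlations and group sizes in hand, a Fisher z-transformation test on the two correlations would be the more standard way to do this.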

But Pennycook responds by pointing out seven other studies in his meta-analysis that ask for religion before testing rationality yet still get the predicted effect. In fact, overall there is no noticeable difference between religion-first studies and rationality-first studies. Some others assess rationality and religion on different sittings, and still get the same results. Also, now it looks like priming doesn’t affect your religiosity or rationality. So Finley’s paper has to be wrong, which means it’s yet another example of strong p-values in a large sample size in the absence of any real effect.

At this point we’re left with 31 good studies finding an effect and 2 good studies not finding it. Most of them converge around an effect size of r = -0.20. Pennycook does the usual tests for publication bias, and as usual doesn’t find it. I think at this point maybe we can conclude this is real?

A few other things worth looking at:

Is this effect true only in college students and Mechanical Turkers? No. Browne et al look at 1053 elderly people’s CRT scores and religiosity, and find the effect at the same level as everyone else.

(they also find that women do much worse on the CRT than men. I looked to see whether this is a common finding, and indeed it is; in a sample of 3000 people taking a 3-question test, men average about 1.47 and women about 1.03, p < 0.0001. This remains true even when adjusting for intelligence and mathematical ability. I'm not sure why I've never seen any of the sex-differences crowd look into this seriously, but it sounds important. If you have a strong opinion about this, please stare at the above image of The Thinker for another thirty seconds before commenting)

Is this effect simply an artifact of IQ? After all, there’s some evidence that IQ increases irreligion, and CRT score correlates heavily with IQ (see eg this book review). This is the claim of Razmyar & Reeve, who do a study that finds that indeed, it’s not that more rational people are less religious, it’s that smarter people are both more rational and less religious. But Pennycook responds with a boatload of research finding the opposite; the gist seems to be that both IQ and CRT are independently correlated with irreligion, but the CRT correlation is stronger than the IQ one. Trying to tease apart the effects of two quantities that are correlated at 0.7 sounds really hard and I am not surprised that people can’t figure this out very well.
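To put a rough number on "really hard": in an ordinary linear regression with two predictors correlated at r, the variance of each coefficient estimate is inflated by 1 / (1 - r²) compared to uncorrelated predictors (the standard variance inflation factor). A minimal sketch, assuming a plain two-predictor regression, which is a simplification of what these papers actually do:

```python
from math import sqrt


def variance_inflation_factor(r: float) -> float:
    """VIF for either predictor in a two-predictor linear regression
    whose predictors are correlated at r."""
    return 1.0 / (1.0 - r ** 2)


R_IQ_CRT = 0.7  # the IQ/CRT correlation quoted in the text

vif = variance_inflation_factor(R_IQ_CRT)
print(f"Variance inflation factor: {vif:.2f}")
print(f"Standard errors grow by a factor of {sqrt(vif):.2f}")
print(f"Equivalent to keeping {1 / vif:.0%} of the sample")
```

So separating the two effects costs about as much precision as throwing away roughly half the participants, before even getting to measurement error in either test.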

(This paper brings up another interesting fact – on a lot of these tests, religious people take less time to solve problems, even when both sets of people get the right answer. This reminds us that high-CRT shouldn’t be considered strictly better than low-CRT in the same way that high-IQ is strictly better than low-IQ. It’s more like a tradeoff between fast, heuristic-laden System 1 thinking and slow, deliberative System 2 thinking. This tradeoff seems to exist, at different points in different people, regardless of their IQ.)

The paper says:

Importantly, the degree to which cognitive ability versus style are predictive of religiosity has theoretical consequences. As discussed by RR, a primary relation with cognitive ability is consistent with the idea that people naturally gravitate toward ideologies that match their level of cognitive complexity. Thus, according to this position, religious ideologies are less complex than secular ones, and, as a consequence, more likely to be held by less cognitively complex individuals. In contrast, a primary relation between cognitive style and religiosity is consistent with the idea that Type 2 processes are selectively activated by religious disbelievers to inhibit and override intuitive religious cognitions. Importantly, under this formulation, religious disbelief does not necessarily require a high level of cognitive ability.

III.

Overall my takeaway from reading some of this stuff is:

1. “Analytical cognitive style”, ie the slow logical methods of thinking that help you do well on the CRT, probably increases the likelihood of being an atheist and decreases the likelihood of being religious, even independent of IQ with which it is highly correlated. The effect size seems pretty small.

2. IQ probably also increases the likelihood of being an atheist and decreases the likelihood of being religious, even independent of CRT with which it is highly correlated. The effect size seems very small.

3. Openness To Experience probably has complicated effects that make people less fundamentalist but more spiritual.

4. These are all long-term trait effects. There’s no good evidence that “priming” analytical thinking style can make you more or less religious in that exact moment. Probably the effect size here is zero.

5. Gender differences on the CRT are higher than gender differences on almost any other test and this seems to be underexplored.

6. Just because a paper has relatively simple statistics that are hard to fake, doesn’t mean it’s likely to replicate.

7. Even given (6), just because a paper is an online survey with little room for experimenter effects, doesn’t mean it’s likely to replicate.

8. Even given (6) and (7), just because a paper has many different studies that all confirm the same effect, doesn’t mean it’s likely to replicate.

Extra bonus takeaway: I was too quick in dismissing the CRT’s ability to convey extra interesting knowledge beyond IQ, and I should look into it more and maybe get Stanovich’s book. Also, there’s some similar research on CRT and politics which I should probably look into, although I don’t even know how long I’m going to have to stare at that Thinker picture for that one.

Extra extra bonus takeaway: I should include CRT on next year’s SSC survey.