Last night I asked Tumblr two questions that had been bothering me for a while and got some pretty good answers.

I.

First, consider the following paragraph from JRank:

Terrie Moffitt and colleagues studied 4,552 Danish men born at the end of World War II. They examined intelligence test scores collected by the Danish army (for screening potential draftees) and criminal records drawn from the Danish National Police Register. The men who committed two or more criminal offenses by age twenty had IQ scores on average a full standard deviation below nonoffenders, and IQ and criminal offenses were significantly and negatively correlated at r = -.19.

Repeat offenders are a 15 IQ points – an entire standard deviation – below the rest of the population. This matches common sense, which suggests that serial criminals are not the brightest members of society. It sounds from this like IQ is a very important predictor of crime.

But r = – 0.19 suggests that only about 3.6% of variance in crime is predicted by IQ. 3.6% is nothing. It sounds from this like IQ barely matters at all in predicting crime.

This isn’t a matter of conflicting studies: these are two ways of describing the same data. What gives?

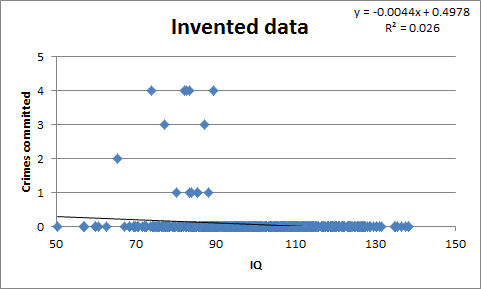

The best answer I got was from pappubahry2, who posted the following made-up graph:

Here all crime is committed by low IQ individuals, but the correlation between IQ and crime is still very low, r = 0.16. The reason is simple: very few people, including very few low-IQ people, commit crimes. r is kind of a mishmash of p(low IQ|criminal) and p(criminal|low IQ), and the latter may be very low even when all criminals are from the lower end of the spectrum.

The advice some people on Tumblr gave was to beware summary statistics. “IQ only predicts 3.6% of variance in crime” makes it sound like IQ is nearly irrelevant to criminality, but in fact it’s perfectly consistant with IQ being a very strong predictive factor.

II.

So I pressed my luck with the following question:

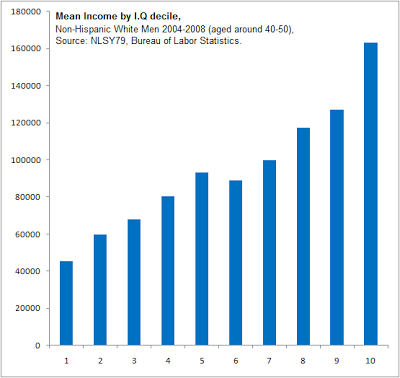

I’m not sure why everyone’s income on this graph is so much higher than average US per capita of $30,000ish, or even average white male income of $31,000ish. I think it might be the ‘age 40 to 50’ specifier.

This graph suggests IQ is an important determinant of income. But most studies say the correlation between IQ and income is at most 0.4 or so, or 16% of the variance, suggesting it’s a very minor determinant of income. Most people are earning an income, so the too-few-criminals explanation from above doesn’t apply. Again, what gives?

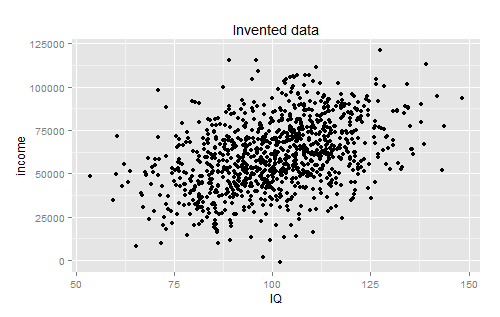

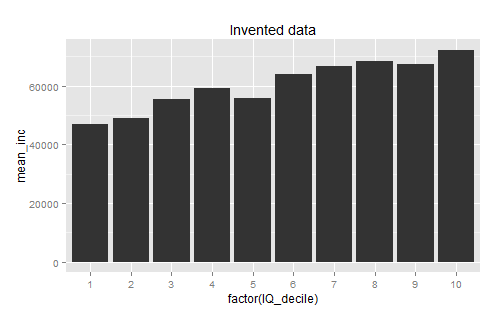

The best answer I got for this one was from su3su2u1, who pointed out that there was probably very high variance within the individual deciles. Pappubahry made some more graphs to demonstrate:

I understand this one intellectually, but I still haven’t gotten my head around it. Regardless of the amount of variance, going from a category where I can expect to make on average $40,000 to a category where I could expect to make on average $160,000 seems like a pretty big deal, and describing it as “only predicting 16% of the variation” seems patently unfair.

I guess the moral is the same as the moral in the first situation: beware summary statistics. Based on the way you explain things, you can use different summary statistics to make things look very important or not important at all. And as a bunch of people recommended to me: when in doubt, demand to see the scatter plot.

Yeah, I guess shorter posts is technically “less blogging.”

I’m halfway convinced that every time he claims he’s going to be doing less blogging, he’s actually just testing the theory that saying he was going to do less blogging is what caused the big drop in readership he described a few posts ago.

Of course. Shorter posts are “less blogging”, whereas a reduction in the number of posts would be “fewer blogging”. 🙂

At least we are covering IQ here. If you are going to waste time blogging about how unreliable summary statistics are, it’s always good to do it with a notoriously unreliable summary statistic like IQ as your baseline.

GIGO all day baby!

Sigh, IQ is unreliable? Actually, IQ test scores are among the most reliable (repeatable) test scores in psychology.

Please try harder next time.

That’s like saying coral it’s the driest life I the ocean.

The income one is probably also distorted by rare outliers, this time ones who make orders of magnitude more than anyone else. Thus the mean is probably not a good measure to use.

Yes. Although NLSY79 topcodes income, so this will be minimized somewhat. They actually do it in an interesting way: they take the top 2%, average them, and give everyone in the top 2% that value.

I’d go with a combination of

a) that. Median income is about $30K. US per-capita is about $50K. Outliers matter.

b) Half the population doesn’t work, so per-worker-capita is about $100K, which is roughly the mid-point on that graph.

c) 40-50 is probably close to the highest earning point in anyone’s career.

Are you sure (b) isn’t already taken into account by the $30k figure?

Age cohort has a much larger effect than generally realized:

http://econlog.econlib.org/archives/2015/05/shocking_new_da.html

As far as I can see, your link is about net worth and not income. That people accumulate wealth (generally various forms of property while paying off loans) as they grow older – who hasn’t noticed this?

For the income one, think of binning as a method of smoothing data. It’s the same principle as Loess curves and ensemble bagging: averages smooth out and decrease variance.

In the first case, the issue is that p(low IQ | criminal) is very high, but p(criminal | low IQ) is still very low. People don’t have a good intuition for how those two things are very very different. Saying it’s a “strong predictive factor” I guess might be true relative to other predictive factors (low IQ predicts criminality better than height I assume), and it might be true in a relative sense (those with low IQ are 10x more likely to commit crimes), but that doesn’t make it true in an absolute sense.

One might also conjecture that high IQ people are less likely to get caught, and that biases the stats.

Also the implied model with a strict correlation is a linear relationship, which doesn’t make sense in this case. A zero-inflated count model would be much better. One could still describe it with the right summary statistics, just not pearson correlation (which is what the authors of that paper used).

The second case might make more sense if you put error bars on the bar plot. I don’t know if the BLS releases standard deviations but one could generate some fake data with the desired properties.

Related: http://en.wikipedia.org/wiki/Anscombe%27s_quartet

What still blows my mind is Simpsons Paradox

“All things with a star on their belly are sneetches, but not all sneetches have stars on their belly.” Especially after that Silvester McMonkey McBean shows up with his machine.

Aye, and they have high incomes, political influence and community respect, perhaps even elected office. We just don’t (usually) call them criminals.

Ah, Simpson’s paradox. Drove me insane for a very long time which is why it’s one of my favorite paradoxes. I feel like I didn’t understand it fully until I worked through Pearl’s Causality.

But if you want to build some intuition quickly, I like this picture:

http://singapore.cs.ucla.edu/LECTURE/section3/sld005.jpg

(For some reason) Small fish swim for the small mesh net. Large fish swim for the large mesh net.

Looking only at the final catch, you have a lot more small fish. But each net (individually) would surely catch more large fish than small fish.

Something important to note is that the “reversal” you see with Simpson’s paradox cannot happen with causal interventions. There has to be some kind of confounding factor. The paradox is so counterintuitive because we’re used to thinking of conditioning as being like an action that we take instead of a passive observation.

I am somewhat fearful that I screwed up the explanation here and Ilya Shpiter is about to enter this thread and tell me how wrong I am…

Am I crazy or is it enough to just say this to explain the paradox:

A1/B1 + A2/B2 ≠ (A1+A2)/(B1+B2)

The real trick is not only explaining why that happens, but explaining why we are surprised (that is what Pearl did).

Incidentally, I think the Newcomb paradox is of the same type (an explanation exists in terms of representational expectations).

Hello,

I am a little sad that this might be my reputation around here, but I suppose these things do not arise in a vacuum, and it is on me to do better. I don’t know if it helps, but I get things wrong all the time — math is hard! I think there is a post by me in the past out on the internet somewhere, where I got the Simpson’s paradox explanation wrong, actually. Please don’t let the possibility of being wrong discourage you from posting.

And I will work on not coming across as a “math ogre.” 🙂

The last thing I want is to discourage any discussion of causality for fear of me pouncing from the shadows.

For what its worth, I am very glad to have you here since you are one of the few commenters who really knows on a deep level what they are talking about. I’m sure a lot of commenters value your presence here as well.

I have occasionally felt a similar feeling of hesitance. But its not that I’m scared of you or another commenter unfairly berating me. I’m afraid of being wrong. But I think being wrong isn’t a reason not to comment, its a reason for me to be humble and open to learning when I’m trying to talk about something I don’t yet fully understand.

You could be a Mensa-member genuine certified genius house painter, and you’re still only going to make a certain limit of income (unless you decide to rebrand as an interior design consultant) while someone not as high IQ as you but, for instance, a financial controller in a fairly big company would make a higher income. If you’re high IQ, you’re going to be steered towards academic pursuits and “the professions” by schools and career advisors etc. And as you get older and have more experience in the field, the wages generally go up.

And there may be strange blips in the results; looking at this site which I pulled up to see what income ranges for different jobs were, apparently for financial controllers “Pay for this job rises steadily for more experienced workers, but goes down noticeably for employees with more than 20 years’ experience.”

That’s odd and I can’t understand it. On the face of it, there would be a point where in your 40s or so you’ll hit the ceiling for pay, then as you get older and (one would presume) more experienced in the job, your value goes down? I’d expect that for high-tech jobs where younger is better because less fixed mindset, more up to date, and so on – but not for jobs where knowledge of market trends and past performance is relevant, and keeping up to date on new legislation is simply a matter of learning the damn codes and implementing them.

A hypothesis about financial controllers: pay starts going down with experience because the good ones eventually get promoted to a different job. In other words: conditional on you still being in the job after 20 years, you’re probably not particularly skilled at it. The skilled financial controllers start out paid ok, get raises and promotions and such, and then get promoted out of financial controller. So you see their contribution to the increasing salary with experience for a while, but then they all leave.

That idea struck me when I was reading the Horatio Hornblower novels, which made a big deal about the Royal Navy using a very small number of officer ranks, relying strictly on seniority (specifically, time you’ve been your current rank) to determine which of two people of the same rank should be giving orders to the other.

To a certain degree it made sense: someone who’s been a Lieutenant for two years is probably better at Lieutenanting than someone who’s only been a Lieutenant for three months. But it breaks down when you’re dealing with someone who’s been stuck at Lieutenant for 15 or 20 years, as that indicates that he’s not good enough to get promoted to Captain.

It might be different elsewhere, but at least in the U.S. Army, you get two chances at promotion as an officer, and if you don’t get promoted, you get kicked out.

It was very different in the Royal Navy of the 17th through early 19th centuries.

Step 1: If you were of the right social class and wanted to go to sea (and could afford the fees), you were commissioned as a Midshipman

Step 2: If you were a Midshipman and passed a very thorough written exam, you were promoted to Lieutenant. There was no time limit on this; you could be a 40-year-old Midshipman if you were stubborn and clueless enough.

Step 3: ???

Step 4 – Profit: If you were a Master and Commander or Post-Captan, subsequent promotions occurred on the basis of strict seniority until you were dead, retired, or Admiral commanding the Royal Navy.

Step three was the tricky one. There was no rule or procedure for being promoted above Lieutenant, except that if an Admiral happened to need an extra Commander or Captain he could turn a Lieutenant into one on a whim. The essence of a successful career in the Royal Navy, then, was to arrange for an Admiral to Take Notice of one’s potential.

Being a Lieutenant for 20 years could mean that you’re just not that good at it, or it could mean that you are very good at doing something boring and unglamorous, or very good at doing things in detached commands far from the attentions of Admirals. Run the best damn middle gundeck on any Ship of the Line in the fleet, and if that ship isn’t an Admiral’s flag you’re never getting past Lieutenant.

To add to what John Schilling said, there was also a selection process involved in being assigned to duty, beyond the selection process for attaining a particular rank.

Officers were only on active duty when assigned to a ship, fleet, or other duty post. Between assignments, Captains and Lieutenants were on half-pay reserve status, and Midshipmen were civilians (as with enlisted sailors and technical specialists crew members, midshipmen were hired on by the Captain for a specific deployment and mustered out when the deployment was over). In order to get assigned to active duty, one of the following generally needed to happen:

1. Someone in command of a ship or fleet had a vacancy of your rank and wanted you for the job. A captain could simply hire a midshipman on his own initiative, and the Admiralty would generally assign a lieutenant to a captain or a captain to an admiral upon request if they were available.

2. Someone at the Admiralty got the idea based on your record and reputation that you’d do a good job at a particular assignment.

3. Someone at the Admiralty had gotten the idea that you had the talent to make a good senior officer and decided to give you assignments that would give you opportunities to distinguish yourself.

4. You had social or family connections to someone with the political clout to trade favors with someone in the Admiralty to give you an assignment.

5. The personnel resources of the Navy were stretched thin and the Admiralty would grab any warm body with the right rank for the assignment.

1-3 were far from perfect, but did tend to select for merit, and officers who got a reputation for marginal competence tended to find new duty assignments hard to come by, at least in normal time periods when there were many more officers than duty posts. Nepotism helped get people from well-connected families more and better opportunities to prove themselves, but even then, if you screwed up too big or too often, your relatives would stop sticking their necks out for you. #5 only came up rarely, such as at the peaks of the Napoleonic Wars.

Half-pay reserve status happened for Admirals, too. Technically, “Admiral” was a posting, not a rank. The N most senior active-duty people on the Captain’s List were given fleet commands (described by a rank (Admiral, Vice Admiral, or Rear Admiral) and a color (red, white, or blue) indicating seniority of that rank, derived from the historical division of the navy into three squadrons). Anyone the Admiralty wanted to skip over for fleet command despite seniority would be given the post of “Admiral without Distinction of Squadron” (informally referred to as a “yellow admiral”, by analogy with the color squadrons) and would collect a reserve officer’s half pay while enjoying the social status of a retired admiral.

There was also a Darwinian selection effect, due to the brutal an unforgiving nature of the naval operations themselves and the similarly brutal and unforgiving nature of the likely court martial if you lost your ship or failed in your mission and didn’t have the good grace to get yourself killed in the process.

Basically, if you screwed up big, you were likely to get killed at sea or get shot for cowardice or kicked out of the navy in disgrace for incompetence when you got home. You and your career would generally survive multiple missions only if you fought and won, you contrived to succeed in your missions without fighting, or you survived losing a battle that had clearly been lost through no fault of your own.

The mandatory court-martial for losing one’s ship wasn’t an automatic career-killer. It was for the most part a genuine “something went wrong here and we should figure it out before the next time” court of inquiry, and only an exercise in meting out punishment if the inquiry found a punishable offense. And being mandatory, it didn’t carry the “If he wasn’t up to something they wouldn’t have arrested him” stigma of a modern criminal proceeding.

And surrendering to a more powerful foe to avoid needless bloodshed was then considered a reasonable and honorable thing to do. Note that Captain James Dacres of HMS Guerriere, famous for his ignominious surrender to the USS Constitution, was given two subsequent commands of larger and more prestigious ships, made Rear Admiral on active duty, and retired as a (yellow) Vice-Admiral.

Much is made of Admiral Byng’s execution for “failing to do his utmost against the enemy”, as proof of the unforgiving nature of the 18th-century Royal Navy. But that was an exceptional case, and he had to work at it. Cowardice, incompetence, hypocrisy, and general assholery all at once, in the course of losing a high-profile battle of great strategic importance. Dacres’ example is more typical.

And in case anyone is wondering, Dacres’ escape from perpetual lieutenancy was a combination of Eric’s cases 3 & 4 – his politically connected father got him an assigmnent as aide-de-camp to an Admiral, who was in turn impressed enough to assign him temporary command of a small warship and see if he’d do something noticeable. Byng, son of an admiral, was straight-up nepotism, but apparently quite capable in his earlier assignments.

Labor economists typically throw in a square of experience (or age) term into their models trying to explain labor income. The non-linear relationship between age/experience/years of education and salary is quite common.

Cognitive powers decline starting in your 20s (just like overall athleticism).

For a while – possibly a long while depending on the area – a person is more valuable being less intelligent but with greater knowledge. At some point you’ve gained all the knowledge that you’re going to gain and all you have going forward is cognitive decline.

Helpful analogy – baseball players peak at 27-30 years old but sprinters peak much earlier. The knowledge and skill acquisition in baseball offsets the physical decline for longer.

Cognitive powers decline starting in your 20s

So everyone on here is over the hill? 🙂

Do we have any idea of the age-ranges of readers/commenters here, just to get a general idea of which of us are drooling incompetents sliding into second childhood and which of us are the bright young things?

Going first: I’m the former category. Just getting into my 50s. Definitely feeling the physical decline and probably the start of the mental as well.

That’s nice and cheerful to get you all going, yes?

I’m not 40 yet, but it seems like in the past few months I’ve started having “senior moments” significantly more frequently than before- things like going to the other room to get something and not remembering what it was I wanted when I get there, or thinking “what’s that word that describes how twitter mobs go after someone, it’s on the tip of my tongue, it starts with A.” (I figured it out by looking up “random” in the thesaurus- “arbitrary.”)

If what I have read of neuropsychology is even remotely correct, the cognitive powers start declining at birth, at least for a given value of powers.

Extremely simplified model:

With experience, often used neural pathways are reinforced and less used ones are ‘pruned’. This is known as learning. As the available pathways become fewer, a person becomes ‘set in their ways’ and have a harder time learning new stuff as well as a harder time coming up with genuinely new ideas.

The thing with a very young child is that any idea seems likely.

The sweet spot is when one is still able to generate new ideas and still have enough reinforced pathways to guard against utter nonsense. When this is seems to vary wildly from person to person.

Also, the decline can apparently be slowed by learning new skills and being exposed to new ideas that reinforce new pathways. I read somewhere that learning to ride a motorcycle after turning 40 had a measurable positive effect on the synapse level, I don’t know if that one was ever reproduced.

Also, when it comes to learning, as long as your current knowledge is not directly false, the lack of available neurons can be compensated for by branching off the existing well used pathways which is why it is easier to learn new material that is related to something you already know.

I don’t think I’m directly wrong about this, but I realise that my level of understanding is at the very bottom rung of Wittgenstein’s ladder here.

Oh, and I’m 45 and consider a day without learning at least one new fact/idea is a day wasted, so probably not totally brain dead yet 🙂

I’ve been feeling mental decline in terms of memory and raw computing power since about 15, and from what my parents have told me, it sounds like my memory has been steadily declining since sometime in the single digits. On the other hand, wisdom is more practically useful than intelligence, except when it comes to picking up useful skills, which I wasn’t doing much of back then anyway.

“What still blows my mind is Simpsons Paradox”

How do you feel about this picture?

http://postimg.org/image/g5582bcyd/

http://en.m.wikipedia.org/wiki/Anscombe%27s_quartet

This is a thing. Thanks David Juran!

If you want to have more fun, compare IQ with poverty, and compare poverty with imprisonment.

The fact that the IQ / Income correlation is counter intuitive to you should make you go back and re-think your assumptions about how IQ effects the world. My sense is that you have essentially been in the camp of, “societal outcomes are explained by IQ”.

To go back to Alex, Bob and Carol from growth mindset, those potential bars are significantly overlapping. Yes, over a large population of ABCs, the average of A outperforms the averages of B and C, but any individual Alex has a pretty good chance of being outperformed by lots of Carols.

The data itself isn’t counterintuitive, the way it sounds in different statistical summaries is.

Yes, but many of your blog posts have concentrated on just the statistical summaries. And your conclusion here is that the statistical summary can tell you a story that is partially to very misleading, especially in an intuitive sense.

Again, going back to the growth mindset example, the example you made up for Alice, Bob and Carol is a point of data about how you intuitively think about ability, and you made the bars essentially non-overlapping.

That doesn’t seem to map to what you already know about the percentage of variance explained by “ability” if ability is simply IQ.

One of the pairs of bars were overlapping, and the other pairs of bars were non-overlapping for illustration. I don’t see a problem here.

Also, the bars are not necessarily overlapping given that scatterplot. Those data points are individual people. We could generate a similar scatterplot given a model in which income is 50% genetically determined intelligence and 50% genetically determined charisma for instance*. You can make _no_ inference about the how variable someone’s eventual income is from that graph.

*Hopefully unnecessary disclaimer, I am not advocating this model, I am demonstrating why you can’t conclude that ability is variable from that graph. Many models can generate the same result.

It’s true that an individual persons “bar” for outcome of income may actually be small (given that imaginary dataset), but if the only data point you have is IQ, the prediction bar you should draw is large.

And if you back and look at the actual example you can see that Carol barely overlaps with Bob, rather than mostly overlapping, with the differences coming in the last 10% of the range.

Carol’s “laziest” bottom is Bob’s hardest working top end.

If you’re shifting from “potential bar” to “predictive bar” then yes I agree. But this tells us nothing about whether Carol can move along the bar by having the right mindset. Her level of success could be completely determined but we only have a fuzzy measure of it. We need more information to tell.

@Alexander:

Scott has numerous posts where he essentially regards IQ itself as essentially determining outcome. But the data Scott is pointing at here suggests that IQ is one of many factors, although it may be the most important factor, its is one of (likely) many that all have an effect.

In the post for the ABC example, Scott uses IQ several times as a proxy for overall ability. Although he does note that IQ is not the only factor in overall ability, he never mentions any other possible factor.

Here is the sentence that appears directly over the graph I linked earlier:

“Likewise, mindset theory suggests that believing intelligence to be mostly malleable has lots of useful benefits. That doesn’t mean intelligence really is mostly malleable. Consider, if you will, my horrible graph:”

Clearly Scott is treating IQ as determining the potential bar in that example.

No it does not, here is a model that would reproduce the data: success = IQ + randomness. There is no strict need to propose other factors to explain this data. This is a statistical point, and I feel a bit guilty pointing it out. But statistics is hard and its easy to make wrong conclusions or even correct conclusions with incorrect reasoning.

For the record I agree of course that there are many things that contribute to success. Almost everyone believes this, hence Scott’s rant about the “Bloody Obvious Position”. However, the data here alone tells us almost nothing.

@Alexander:

Note that I modified my statement about multiple other factors by including the word likely. I recognize we aren’t considering in this hypothetical what the other factors are. But even if it is just one other factor (randomness), it doesn’t change my basic point.

Scott is treating IQ as if it, by itself, can be used to reduce the possible outcomes to a small range. But even if the other factor is randomness, then it just means that the outcome is almost completely random and without cause or correlation.

Parenthetically: If that other factor was truly, truly random, like in some sort of a quantum way, that would be sort of deeply weird. Probably we really mean so complexly caused as to be undetectable. Or something.

“No it does not, here is a model that would reproduce the data: success = IQ + randomness.”

In that model, success is due to two factors: IQ, and some other factor that you’ve labeled “randomness”.

HeelBearCub

I think that I may have been reading into you more than what you actually wrote and been unnecessarily pedantic as a result. Because you framed this discussion terms of the growth mindset post, I read you as trying to “sneak in” your preferred explanations for success in a way that wasn’t warranted. In particular, the idea that for any given person, there is a wide range of potential levels of success they could achieve depending on their mindset or environment – which is not supported (or opposed) by the data.

But if you’re only claiming that IQ is not a hugely predictive metric on the individual level, then of course I agree. It’s exactly as predictive as the numbers show. Whether you call it “small range” or a “moderate range” is venturing into politics and I’d rather just state the actual confidence intervals.

@Alexander:

Ah, yes, I can see how that would be a natural assumption to make. I wasn’t actually trying to sneak in any any other explanation. The ABC graph was a handy illustration, but I wasn’t actually intending to address anything about growth mindset. I really just think that Scott’s posts have been more reductive than is warranted when it comes to using IQ as an explanation for outcomes.

I agree that describing the ranges as small, moderate or large doesn’t actually add much clarity. I do think that visualizing how much the ranges overlap is useful though.

Based on seeing the “graduates” of our early school leavers programme turning up in the court pages of the local paper, I’d say that IQ by itself isn’t the only predictor.

Yes, if you have lower IQ you’ll struggle in school, get lower qualifications, and be confined to the type of manual and unskilled/semi-skilled work that is being phased out. Service industries are taking over from manufacturing industries and they don’t pay as well.

On the other hand, as you point out, lower IQ does not mean “will become a criminal”. There’s a certain level of intelligence necessary for crime as for anything else.

What does count as contributing factors on top of, or beside, less academic/lower IQ? Well, let’s see:

Drugs. This is why I’m “not just no but HELL NO” when it comes to the perennial question of legalising drugs. Yes, even weed. The guys outside at break time sparking up are the ones who’ll wash out of the programme and fall back into petty crime. The girls will go on to develop a nice little heroin habit, have kids that will be taken into care as they’re deemed unfit mothers, have a string of convictions behind them and be looking at doing solid jail time by the time they hit 25 (to take two examples I personally know).

Being easily led, in conjunction with what used to be called bad company or bad companionship. It sounds laughably Victorian but it’s one of those “Gods of the copybook headings” things. Especially when we’ve been quoted studies about how parental influence has little to do with how kids turn out or the development of their characters, hanging around (particularly when you’re the vulnerable one of the group) with guys who are involved with petty crime will lead to you being dragged into that, and probably being used as the catspaw by the smarter ones. Again, personal experience of that: 16 year old boy at the school where I worked, severe learning difficulties and was of low IQ, only child of elderly parents who, now that he was growing up and getting bigger and stronger, couldn’t control him and he didn’t want to be controlled; they were worried because he suddenly had a lot of money that there was no way of accounting for (they weren’t giving it to him and he didn’t have a job so he wasn’t earning it); suspicion that he was involved in crime and being used by so-called “friends” as the one to take the blame if the cops caught up to them.

Parents and family: is there such a thing? Again, broken homes, single parenting, lack of support, unemployment, no engagement with education, so on so on and so forth. If there’s no support at home, it’s easier to drift into crime, especially when you have a group of buddies urging you on (see above re: bad company)

Employment: when the times were going good, there was plenty of work in areas such as construction, so apprenticeships were there for the more able and even the less able could get some kind of manual/unskilled work. When that goes, as in the current climate (though it’s starting to pick up again), it’s life on the dole and again, the temptations of petty crime.

You are confusing the problems caused by drugs with those caused by prohibition of drugs.

A person getting addicted to a drug is most likely due to the person+drug combination, I include the person because they made the choice to take it the first time, and there is a good chance that they have an addictive personality in the first place.

A person going to jail and having a “string of convictions behind them” is caused by making drugs illegal. People go to prison for drugs either because 1. They got caught with drugs on them. 2. They stole money or goods to fuel their habit. or 3. The drug made them go bonkers and they attack someone.

#1 is obviously a direct consequence of prohibition, no need to go into any detail.

#2 is indirectly caused by prohibition: drugs have to be sold on the black market, which results in higher prices, lower quality, unreliable concentration, poisonous dilutionts, super-concentrations (yes, that does happen at the same time as lower quality), linkage to the criminal underworld, and funding of criminals. On the free market these problems disappear rapidly.

3. depends on the specific drug, but is exacerbated by prohibition because there are advantages to the dealers in drugs that have higher concentrations, which they can dilute with drain-o or rat posion. Or alternatively develop a new drug that is cheaper than one of the “staples”, for one of the recent examples see krokodil as a heroin substitute.

None of this makes taking drugs a good idea, but the confusion of causes is common among anti-drug peddlers: it is their equivalent of the pro-drugist’s “MARIJUANA CURES ALL DISEASES!!!111one!!” spewage.

Even if drugs were legal, the convictions result from more than just “going bonkers” in the running amok sense. Assume legal and not too-heavily-taxed drugs:

1. Typical heavy pot smoker has almost no ambition, and therefore doesn’t have a job nor much money. He wants something, and rousts himself to do something about that want. The easy route, at that point, is probably something criminal – steal the item directly, or steal money to buy the item.

2. Drugged driving.

3. Impaired judgment from too much drug use leads to over-reaction to some slight, or some other violation of another person – starting fights, groping women, etc.

Is that really true of a typical pot smoker? I’ve known quite a few and just my bare system 1 pattern recognition tells me there was no relationship at all between whether or not they smoked and how much ambition they had. I’m pretty sure the last three Presidents of the U.S. all smoked it at some point.

Adam – for heavy pot smokers, my experience is that loss of ambition is very typical. For occasional pot smokers, much less so.

In my experience heavy pot usage is correlated with low ambition, but in fact low ambition and motivation encourages smoking, rather than the other way around.

Anthony: perhaps you go the causation reversed?

@ Foo Quuxman

You are confusing the problems caused by drugs with those caused by prohibition of drugs.

I agree that quite a few of the problems mentioned in this thread are caused, or made catastropic to the user, by the illegality. Legal booze has similar physical effects, but not the indirect (ie legal) effects except in extreme cases.

I checked, and in Denmark in the sixties (when all the men studied in this study would have committed their crimes) drugs were legal to own and legal to use. Only production and possession of large amounts with intents to sell was illegal.

Yet they had a crime spike in the sixties, like everywhere(?) else. With the age and gender demographics of crime, the demographic studied in this study would have had to stood for most of it.

No, a person getting a string of drug-related convictions is not due to “oh, drugs possession is illegal”. Conflating the two cases I know, it’s due to multiple offences of shoplifting and similar petty crime to get money to feed her habit, getting into fights, public drunkenness, public being under the influence of prohibited substances, stabbing another person in the stomach at a party when probably everyone there was pretty much drunk, stoned or both, and generally being such a public nuisance due to anti-social behaviour that they get constantly moved around from housing estate to housing estate because of complaints from the neighbours, on top of generally being unable to sort their lives out.

If the only objection to legalising soft drugs (though I don’t know how much difference such a division between ‘soft’ and ‘hard’ drugs makes) was the simple fact of possession making you a criminal, then I’d probably say sure, go ahead and legalise them.

Seeing the effects of drug use is a different matter. Even petty drug-dealing and suppliers on a small scale are a complete pain in the arse when they hang around a house on a council housing estate, much less get established in one. Things go very downhill from there.

Maybe you and others you know can recreationally consume and still manage not to spend every spare penny on your habit, get up and go to work, get a job and/or education in the first place – good for you.

But making it legal is just making it easier for people to wreck their lives – and yes, I’ll anticipate you here, people do have the right to wreck their lives. But then society is left to pick up the pieces, and a lot of the people who are gung-ho for legalisation are not the ones dealing with the fallout and who would be the first on the phone to complain to the council and the police over a drugdealer living next door to them and all the crime and chaos that attracts.

If drugs were legal, they wouldn’t need to shoplift and commit other petty crimes to buy drugs, because drugs would be sold on the free market. How many times do you hear about someone committing petty crime in order to afford cigarettes?

And some of the things you mention either aren’t related to drugs at all, or are related to alcohol, which is legal. You don’t seriously think soft drugs like marijuana make people get into fights, do you? But alcohol does.

“Yet they had a crime spike in the sixties, like everywhere(?) else.”

Didn’t Scott make a post about the relationship between lead and crime? Isn’t the 50s-60s around the time period that lots of people with cars + leaded gasoline started becoming ubiquitous?

alexp and anonymous, at least two cases I know of, the causation ran the direction: start smoking pot heavily, lose one’s ambition for almost anything other than getting stoned again.

Jiro – “If drugs were legal, they wouldn’t need to shoplift and commit other petty crimes to buy drugs, because drugs would be sold on the free market.”

It doesn’t matter how cheap they are, if you have no job, they’ll be too expensive to afford without resorting to petty crime. If being a heavy tobacco smoker was incompatible with keeping a job, you’d see a lot more crime related to tobacco. (And you do see a lot of petty crime from the worst alcoholics – the ones who can’t wait until quitting time to crack open a beer.)

Anthony, would you say the same thing of videogames?

Some of the best videogames are free, but in fact you do see videogame addicts engage in petty theft in the form of piracy quite often.

I don’t like the analogy. First, I have no reason to think that “addicts” are more likely to pirate games (so that addiction would cause piracy). Second, be one pro or anti online piracy, “theft” is a box where it seriously doesn’t fit.

But anyway, my point is that the reasons Anthony stated for believing that marijuana causes loss of ambition are apparently also observed with video games (I don’t think those are very good reasons).

Cauê, if someone is such a videogame “addict” that they can’t hold down a job, and don’t really care about that, I expect that they will also be more likely to commit petty crimes to feed themselves and their addiction.

How do you know?

I regret to say that I have never been to Ireland. However, I have done historical work on populations that include a lot of Irish-American people. And one frustration with this data is just how few Irish-Catholic names there seem to be.

Given a piece of historical data about an individual in Boston named (say) Joseph Murphy, or Patrick O’Malley, without more detail, you actually know very little, because in a city like Boston at the time, there were probably hundreds of Irish-American men with those exact names.

Of course name duplication can defy the odds. Michigan State University used to have two completely unrelated professors, both named “Charles R. St. Clair”.

But in a context where there is only a relative handful of surnames, and only a few culturally acceptable given names, name duplication would seem to be a constant headache.

For a sense of scale, there are 100k Irish in Boston proper today. There were a lot more 50 years ago. There are about 100k in the average Irish county.

We must not forget the effects that the devil jazz will cause the kids to dance in a wild frenzy that will cause them to be overtaken by an insatiable desire to have unprotected conjugation. All forms of fun should be strictly prohibited based on my definition of: all, forms, of, fun, should, be, strictly, prohibited.

Except that these things are happening with prohibition, as opposed to without it.

Do you predict WA and Colorado to suddenly get way worse on the fronts you mentionted? (You seem to be baselessly predicting they will.)

I would bet for the opposite effect.

I predict there will be little change in Colorado and Washington because pot was, like in California, already pretty much legal.

There’s a myth that there are lots of people in jail merely for drug possession. In the U.S., this is pretty much completely false relative to marijuana, and even generally untrue with respect to harder drugs. Occasionally, the only charge is the drug charge, but that’s because the cops arrested the person for some behavior which might be hard to get a conviction for, or which might rate more of a sentence than is really appropriate, but they know they can make the possession stick, and the penalty won’t be too awful.

Danish men born at the end of World War II, assuming they have conscription tests at age 16 like in Norway, were tested in the early sixties, and were at peak age for criminal activity in the late sixties. The age of accountability in Denmark is 15, and I believe it was that in the sixties also.

Crime increased in the sixties, but it almost certainly wasn’t drug crimes – they didn’t even start tracking that until the mid-seventies. Nor was it sexual offenses. Thanks to liberalization, fewer people were convicted of that than earlier. It was apparently property crimes, including car theft and robberies, that stood for most of the increase in crime.

Since the study asked about two or more crimes committed before the age of 20, that means you’re talking about people getting caught and convicted for at least two crimes in just four years – most likely property crimes. At that point, Denmark being Denmark in the sixties, you’re a very troubled kid. So criminality predicts low IQ? Big whoop, I bet any Danish police officer could spot these kids a mile away. They would be the ones having the full basket of issues.

What I would be a lot more interested in was stuff like lead as a confounder here. Looking for something like that, that you might actually be able to do something about, is a lot better than attempting to identify criminals by the correlation to performance on Raven’s progressive matrices.

The testing age is 18 for conscription (forsvarets dag/sessionsprøven).

Also particularly beware comparisons in “predicted variance” for different populations, since this will change with the population variance for predictions that are equally “good” (e.g. in terms of standard error of estimate). Similarly for regression on conditional distributions. Similarly for comparisons between entirely different statistical problems.

While I’m here, I’ll disagree with (or at least second requests for clarification on) “it’s perfectly consistent with IQ being a very strong predictive factor” in the first case and “describing it as ‘only predicting 16% of the variation’ seems patently unfair” in the second.

For 1, in what sense is IQ a strong predictive factor? At best I’d say crime is a predictor of IQ in that model. Knowing someone’s IQ, you’re barely more likely to be right about their criminality than I, in total ignorance, am.

For 2, I’m also not sure what you’re getting at. Speaking extremely roughly, all variation independent of IQ appears to be five times as important as IQ-correlated variation for describing that population. There also happens to be a lot of variation. Could you elaborate on why you feel it’s wrong to describe it that way? Is it enough to include both relative and absolute measures of “predicted variance”?

One of the interesting things about IQ is that while, as you point out, low IQ is by itself only very weakly predictive of criminality, it amplifies other risk factors. Like: growing up fatherless. Or having a history of drug or alcohol addiction, or having other markers of .high time preference / low impulse control. Or just growing up in a trash culture that glorifies the thug life. Combine any of those factors with low IQ and you get a probability of criminal record that goes way, way up relative to IQ alone or any other individual factor alone.

No source for this, sorry. It’s a pattern I’ve noticed in violent-crime offenders while studying the criminological issues around firearms regulation.

That maps pretty well onto my intuition. (Or should I say, my intuition maps to that explanation pretty well?)

I’m hesitant to try to come up with any policy suggestions based on that (especially not without some sources) but it seems like it’s a potentially Very Important Factor.

This is completely orthogonal to the question.

You are substituting your intuition for data.

I agree that I have no data.

I was trying to say ESRs conjecture seems intuitively correct to me and thus I really wish I had some actual data.

Well, in the first example, on the Invented Data graph, if I knew someone had higher than average IQ, I could be certain they were not a criminal. That sounds like pretty high predictive power, in a fuzzy vague sense, to me.

Alright. That seems like an artifact of how certainty turns up in the model, since in real life, you just have different but still very small probabilities. Maybe something like “relative risk” would be better suited to talking about that, although that has similar problems as a summary statistic.

Well, let’s assume there’s only two factors to criminality, Dumb-ass and Ass-hole. You need both factors to become a convicted criminal. If you’re a dumbass but not an asshole you are harmless. If you’re an asshole and not a dumbass you become a politician or a pushy salesman or something else where your total lack of ethics is a competitive advantage without the huge downside risk of jail time. People who are sufficiently dumbass to be criminals are way more common than people who are sufficiently assholes, so that’s by far the dominant factor.

That means that all the concepts attached to criminal other than breaking the law are overwhelmingly asshole traits. This suggests that when people can see what the smart assholes are doing, they will want to criminalize it and will have a tendency to call them criminals even if the current body of law doesn’t cover that activity.

Heh. That’s pretty good.

The other thing your dumbass/asshole generative model suggests is that if you can figure out any way to do it, concentrating your police resources on stupid people is efficient.

Unfortunately, given the population statistics, that smart move is immediately going to land you in a racial-politics quagmire.

It’s entirely possible that at least part of the reason for the predictive power of IQ on arrest history in the first place is already police concentrating their resources on stupid people.

Maybe, maybe not, but at bare minimum, “arrest history” is a very imperfect proxy for criminality. Probably a decent proxy for public displays of violence, though.

Also: it’s easy to show that if you have two factors, one relatively common and one relatively rare, both needed to cause a particular outcome, then the common one must only explain a small fraction of the variation[1].

Consider a scenario where you need low IQ (call it factor A) and 6 equally common factors (call them B, C, D etc.) to make a criminal – so you need A&B&C&D&E&F&G. So A should explain a smallish fraction of the variation, being one factor among seven. The conjunction of B&C&D&E&F&G is pretty rare, rarer than A, and (A)&(B&C&D&E&F&G) is a necessary and sufficient condition for a criminal. Now consider again a two-factor model, factors A and X, where A&X is necessary and sufficient, and X is as common as B&C&D&E&F&G is – the fraction of the variation for A shouldn’t be different here. Therefore if you have a common factor and a rare factor, jointly necessary for some outcome, then the common factor will have low r or R2 values.

[1] The trouble with things like r is that people talk about fractions of the variance, and variance is weird, in that it tends to be the square of what you were really interested in.

But you could be very certain they were a criminal even if you didn’t know their IQ. Almost no one is a criminal in that made up data.

And we don’t know for sure that made up data is accurate. Without the actual data, you don’t know what is happening- lots of data patterns will lead to the same sort of summary statistics.

Scott, the made up data seems highly predictive of non-criminality for one subset. But knowing someone is below average intelligence doesn’t help very much at all in predicting whether they will be a criminal or not.

And of course, the actual data doesn’t look like that, as there intelligent people who commit crimes. So you wouldn’t even be able to subset it.

if I knew someone had higher than average IQ, I could be certain they were not a criminal

No. You can’t be CERTAIN. You can think or have a very high confidence or predict they are not LIKELY to be a criminal, but you cannot say “HIGH IQ = NOT NEVER NOHOW CRIMINAL”.

Or how do you explain all the white-collar crime and the highfliers who pretty much ruined the country with speculation?

The likes of Bernie Madoff show that wanting to believe even when something sounds too good to be true over-rides a lot of good sense. The same way the Nigerian email scams in their early days appealed to people who should have had some kind of business integrity but didn’t or couldn’t resist the lure of easy money, even if it involved screwing over the people and government of an African nation.

Running the risk of being insensitive, what about Dr Harold Shipman? Doctors are presumably reasonably intelligent; this site estimates physicians to have an average IQ of 160.

Certainly not every doctor is a serial killer! But if you assumed “doctor = higher IQ” and from that pronounced “I can be certain this person is not a criminal”, you would have been badly wrong in that case (and indeed, the aura of respectability and trustworthiness around the medical profession probably helped to deflect suspicion for a long time).

Indeed, when you delete half of a sentence, sometimes the other half doesn’t make sense by itself.

He’s talking about the invented data, not reality. Of course reality includes high-IQ criminals.

Physician average IQ is not remotely close to 160. If you read that link it makes no attempt to determine the IQ of different professions, it just looks at their average income and then maps that to modeled relationship between average IQ and income (which is highly inaccurate at high incomes). As you can see, that results in surgeons having an IQ of 230 which not even one person in the entire world has. Similar absurd results would be evident for any high-income job.

You really seem to have a pattern of misrepresenting what other people have said.

If you’re inventing data, you have to have some reason for why you put what you put where you put it.

If your initial assumption is that “leprechauns repair shoes”, then you can invent data about “number of pairs of shoes worn out in the last fifty years” and see that people tend not to bring their shoes for repair when they wear out but instead buy new shoes.

You can then extrapolate from that “The trend for not getting shoes repaired is caused by a decline in the leprechaun population.”

If I assume “high IQ means not a criminal”, of course I’m not going to put my imaginary criminals on the high IQ end of my invented scale. That still does not mean that my invented data has any relation to actual reality.

If I’m making up my data, I don’t get to make pronouncements about what IQ does and does not permit me to say with certainty.

Deiseach, you’re going the road of this guy here.

Scott had some invented data someone came up with that has a low R**2 value but in which IQ appears to be, conversationally speaking, a good predictor of crime, because nobody with above-average IQ in the dataset is a criminal. The invented data is specifically to highlight that you can have this situation where R**2 is low but a variable seems usefully predictive, the thing that this blog post is about. I’m not sure it’s necessarily intended to be reflective of the real world.

Deiseach, that is a total mess of a post. When someone mentions to you that you seem to have serious communication problems, it really doesn’t help to post a non sequitur about leprechauns.

As best as I can figure out, you post:

Asserts that if one wishes to explain X, and decides to analogize it to Y, one needs a specific reason for choosing Y; one cannot simply say “My point is really clear in the case of Y”.

Includes an analogy involving leprechauns for no discernible reasons.

Hypotheticals are chosen for their illustrative power, not for how well they comport with reality. If they were perfectly based in reality, they wouldn’t be hypohteticals. If you don’t understand basic concepts such as “hypothetical”, perhaps you should find a less intellectual place to spend your time.

I haven’t bothered to click through that link, but that number is impossibly high. 160 is +4 SD, or roughly 1 in 10,000; even if every individual of that IQ went into medicine, which they don’t, there are still only about 30,000 people with that kind of IQ in the US. Google tells me that there are in fact about a million doctors in America. (America selected because it’s intuitive to me; analogous figures should exist for Ireland or wherever.)

Now, some of them are immigrants, which would bugger up the numbers since there’s some selection going on there; but even if every person in the world with 160 IQ got an MD and moved to the States, there wouldn’t be enough of them. If we ignore that selection effect, on the other hand, doctors’ average IQ can be no more than 140. By comparison with the figures I’ve seen for students in other technical fields, I’d personally estimate somewhere more in the neighborhood of 130 and maybe lower.

FWIW doctors per capita is about the same in America and France.

You really should click through to that link.

Now I have. Yeah, the estimate for doctors is bunk, and the reason given by someone above me is correct (viz. the model goes to hell at higher salaries).

It would be more interesting if it gave us both modeled and actual IQ, which would give us a sense of what proportion of salary for a given job comes from intelligence (and thus scarcity of candidates) vs. other factors. But finding that data would likely be hard, and if we had it we’d be able to generate the inverse figures (salary predicted from average IQ vs. actual salary), which attack that issue more directly.

With a correlation of -.19 … if someone has an IQ that’s 1 SD higher than average, his expected number of crimes would be 0.19 SD lower than average.

That works out to 0.066 fewer crimes than average per 15 IQ points.

The only reason you can be “certain” the high IQ person isn’t criminal is that in this particular made-up example, the data just happens to have zero high-IQ criminals. That doesn’t have to be the case.

If you took an infinity of possible dataplots of IQ/crimes, all with correlation -.19, and the same mean and variance of IQ and criminal acts, you’d find that the 0.066 fewer crimes per SD works out, on average. It’s just that this particular plot isn’t very linear.

Another way to think about it: the correlation coefficient gives you information only about what you can infer about average criminality for a given IQ, based on a “randomly chosen” IQ. That’s perfectly consistent with “I can infer a lot from a high IQ, but I can infer a lot less from a low IQ.” The “average,” in some sense, is -0.19.

When that happens, by the way, it’s a sign that you’re doing a linear regression for a dataset that really isn’t a straight-line relationship.

Sure, but that’s a totally different probability. p(law-abiding|high IQ) is 1. That’s not fuzzy or vague, you’re totally right that it is highly (indeed, perfectly) predictive. Of course, p(law-abiding|high IQ) tells you very little about p(criminal|low IQ), except that it must be greater than p(criminal).

This is a more general problem: p(A|B) is typically not the same as p(not A|not B). I suspect that this is a common fallacy because it is similar to a perfectly valid *logical* inference: “if A, then B” is logically equivalent to “if not B, then not A”. But even the logical relationship doesn’t apply to statistics: p(A|B) is not the same as p(not B|not A). Illustration:

Five people with traits A or -A and B or -B:

(A,-B);(A,-B);(A,-B);(A,B);(-A,B)

P(A) = .8

P(B) = .4

P(A|B) = .5

P(B|A) = .25

P(-B|-A) = 0

Sorry to belabor this point, but I actually thought P(A|B) = P(not B|not A), and I was surprised when I proved the opposite. Anyway, modus tollens is a lie and I will never understand anything.

Learning they have high IQ doesn’t improve your guess of criminality much, in the sense of squared error.

Suppose they’re high IQ and not a criminal. You learn they’re high IQ, and your estimate of number of crimes committed goes from .001 to 0.

Correlation is about improvement in prediction (when the only way you’re allowed to use what you learn is a linear model)

Depends what you mean by “crime”; whether you mean something analogous to “IQ” or “high IQ”. If, in the invented data, I know that someone is a criminal, that tells me a lot about their IQ. If I know whether or not someone is a criminal, that on average tells me little about their IQ, because chances are they aren’t a criminal, and knowing that someone isn’t a criminal tells you very little about their IQ.

The way I explained it on my blog a few years ago:

The correlation (or r-squared) doesn’t tell you the effect of IQ on salary. It tells you the importance of IQ to salary *relative to everything else that’s important to salary*. Like age, education, location, work habits, sex, and so on. In that light, “16% of the variation” is A LOT.

If you want to know the effect of IQ on salary, look at the regression equation. It probably looks like

SALARY = $30000 + $15,000 * (IQ Decile)

Which answers the question you really want answered. Notice that the equation, alone like that, doesn’t tell you anything about the r-squared or correlation. You could invent datasets with any r-squared you wanted, but still $15,000 per decile.

The analogy I used: suicide. Suicide explains only a small portion of the variation in lifespan, because only around .0001 of the population dies of it each year. But the effect of suicide on lifespan is, obviously, very high! In other words, with made up numbers,

Lifespan = 70 – 40 * (suicide dummy variable)

r-squared = .01

Here’s the link.

Part of the problem is the real equation is more like:

SALARY = 30000 + 15000 * (IQ Decile) + N(0, 30000)

It’s a strong positive relationship, but still a lot of residual uncertainty. The low r-squared value isn’t telling you much about the importance of the variable in question. It’s telling you a single-variable model is a very poor fit.

On a slight tangent, I went to the NLSY79 dataset to see if I could come up with a better example pulling a few variables, but since they measured thousands of things and won’t give you a complete dataset but require a convoluted web search to find what you want, I gave up. Nowhere in the “achievement tests” section was there an IQ test, though. All they had was the ASVAB. I don’t know if things were different in the 70s, but when I was in school, people who planned to go to college didn’t take the ASVAB. In fact, I was actually in the Army and never took the ASVAB, because commissioned officers aren’t required to take it. We’re assigned a job based on GPA, physical fitness, and leadership evaluations from our pre-commissioning program.

This book is essentially all about this topic and a good read too.

re: IQ & crime – the real conclusion you can draw is that IQ is a rough proxy for “reasonably bright with not overly awful upbringing” which implies “very probably not a criminal in the usual sense.”

In other words – not so much that low IQ causes crime, but that higher IQ prevents it.

A similar thing applies to poverty and crime – it’s not “povery causes crime” but more “non-povery prevents crime” – as in, if you have a reasonable social-economic status, you have much better things to do than be a criminal.

re: Salary – related to what Phil said, but note that the higher up you get, the *wider* the variance. Because really bright people may retire early, or live on savings or investments while reporting low or no income, or marry other bright people and stay home with the kids, or have an exotic idea about how to live very very cheaply on a farm somewhere, and thus have very high utility standard of living at low cost.

The real implication is “higher IQ means less likely to be stuck in a life long dead end grind”

Yeah, probably that: the higher your IQ, the more choices you have, the more options, the more things are open to you.

Note that “Lies, damned lies and statistics” does not refer to a technical problem with statistics as a field, but rather to the subtlety of correctly interpreting statistical-technical jargon.

Always plot your data!

That’s the first question in my IQ FAQ:

Q. Is IQ really all that important in understanding how the world works?

A. In an absolute sense, no. Human behavior is incredibly complicated, and no single factor explains more than a small fraction of it.

In a relative sense, yes. Compared to all the countless other factors that influence the human world, IQ ranks up near the top of the list.

http://www.vdare.com/articles/why-do-we-keep-writing-about-intelligence-an-iq-faq

Phil (above) is right, I think. This back-and-forth just published reflects a similar issue and so might give another way to think about it outside of the IQ stuff.

Essentially, the Original reported a large difference in momentary happiness depending on whether a (self-rated) moral or immoral event happened. The Critique shows that, at the same time, moral and moral events predicts a small amount of variance in momentary happiness. The Response points out that both descriptions of the data are accurate, but they are asking different questions.

In short, people do really seem happier following moral events than immoral events, but moral events only predict a small part of the variation in happiness over the course of the day. Lots of things predict happiness and moral events is one part of that.

Original: http://www.sciencemag.org/content/345/6202/1340.abstract

Critique: http://www.sciencemag.org/content/348/6236/767.1.abstract

Response: http://www.sciencemag.org/content/348/6236/767.2.abstract

To bring it back to IQ and Crime: People who commit crimes on average have lower IQ, but given the noise in both of these measures, plus the many other things that are associated with both IQ and Crime (from genetics, to upbringing, to specific circumstances at moment of the crime etc), IQ only explains a small variance in crime. As Phil illustrates, the same could be said about income and IQ or lifespan and suicide.

Pingback: 1p – Beware Summary Statistics | Exploding Ads

The correlation you report is not the correlation between IQ and committing crimes; it is the correlation between IQ and getting caught for committing crimes.

An important distinction, no?

Correct. This is particularly relevant because the evidence at the JRank link says low verbal intelligence seems to be more strongly correlated with crime than low other types of intelligence.

Much investigative work continues to be about interrogation, and this is more true the further back you go, to before DNA testing, cellphone location tracking etc. became available. And verbal intelligence is exactly what you need to lie convincingly to people who have a lot of practice at detecting dishonesty.

I’m not convinced by the usefulness of “crime” as a catch-all category anyway. My idea is that there are two kinds of crimes. One is those that criminals don’t really try to escape investigation for, because what they’re doing is super obvious or they’re drunk or they just don’t think about it. These are bar fights, kids stealing cars, liquor violations and the like. This is what I think higher-IQ people generally don’t do. But what about more careful crimes like insurance fraud, corruption or violations of professional duties? Anything a criminal has planned to get away with, by careful plotting and actively avoiding or destroying evidence, is its own kind of crime I think. We don’t know that higher-IQ people really don’t do that a lot. We only know that either they don’t or police are quite bad at catching them.

It is pretty difficult to violate professional duties below the age of 20.

to be even more precise, it’s the correlation between IQ and being convicted of a crime.

Pingback: 1p – Beware Summary Statistics | Profit Goals

I was used to interpret the correlation r through the equation

b=r*(s_x/s_y)

Where b is the slope and s_x / s_y are the empirical deviations of the data. (I’ll be more careful now, after looking at the anscomebe quartet).

But can anybody please explain (preferably with equations) how to understand (or derive from the definition of r) the phrase “r=??? means x explains ???% of the Variance in y”

In response to amazing_MuhKuh’s question:

Given a set of pairs (X,Y) (normalised by subtracting out out the means of both), one can fit a model X = bY + E by least squares optimisation, and b will be as you described. The variances of X, Y, and E will be related by var(X) = b^2 var(Y) + var(E). That is, the variance of X decomposes into two parts, that “due to” Y and that “due to” E.

There’s a reason I’m using the scare quotes, which I’ll come back to.

The proportion of var(X) that is “explained by” Y is then b^2 var(Y)/var(X). Since var(X) = s_x^2, this simplifies to r^2. Thus given a correlation of r, one often says that Y “explains” a proportion r^2 of the variance of X.

Given any set of pairs (X,Y), one can mechanically compute r and fit the model X = bY + E. However, if Y and E are not independent (as in the examples of Anscombe’s quartet), this interpretation of r^2 is no longer valid. X might be uniquely and precisely determined by Y, even when r=0.

One can just as easily fit a model Y = aX + E, where a = r*(s_y/s_x), and say that X “explains” r^2 of the variation in Y. This line is different from the previous one unless r=1. The previous line with the axes swapped would have slope (s_y/s_x)/r.

Now for those scare quotes.

“Noise.” In statistics this means “unmodelled variation”: everything else, whatever it might be, that makes Y less than a perfect predictor of X, lumped into the unobservable variable E.

“Due to,” “explained by.” When dealing with observational data (you look and gather pairs (X,Y)), as opposed to interventional data (you force Y to a particular value and see what you observe for X), these have to be understood as terms of art which do not mean a causal relationship or an explanation, unlike the everyday meaning of those words. The variance in X “due to” or “explained by” Y is nothing other than the proportion that was calculated above. Both sets of data described in the top post are observational.

“Predicted by” might be better than “due to” and “explained by”, but the terminology is what it is. (Even “predicted by” is not satisfactory, as the “prediction” may not replicate on new data.)

When one has, from whatever source, causal information about the relation between X and Y, saying that Y has a causal influence upon X and not vice versa, and that E (whatever it is) is causally independent from Y, then “due to” and “explained by” are closer to their everyday meanings.

If you compute the variance of the residuals from the regression line, this variance will smaller than the variance of the original y values; the fractional reduction is r^2.

To derive this, consider the simplest case where X and Y have mean 0 and variance 1. Then the correlation coefficient is r=covar(Y,X)

Now we ask about the variance of the residuals, var(Y-rX). We have

var(Y-rX)

= var(Y) + var(rX) – 2 covar(Y,rX)

= var(Y) + r^2 var(X) – 2 r covar(Y,X)

= 1 + r^2 – 2 r^2

= 1 – r^2

compared to var(Y) = 1, we see that the variance of the residuals is reduced by a fraction r^2 compared to the variance of Y.

This is, by the way, why it’s very important to visualize data, scatterplots being of among the most important.

Scatterplots are wonderful things, and its amazing how they are able to demolish even the most subtle statistical sleight of hand. But you can’t always make a scatterplot. When you are dealing with highly multidimensional data sets with non-linear effects, you have to rely on statistics. Which is why epidemiology (among many things) is hard.

Pingback: Interesting Links for 20-05-2015 | Made from Truth and Lies

1) I’m not a data guy, but is there a convienient way to graph the distribution within each IQ decile on your income bar graphs? For example, maybe a color where the color gets more transparent the fewer people found at that level in that decile, or a diamond instead of a bar where the width of the diamond at any point indicates the number of people at that point? (Or both?) If I’m right, that would show you the variance within the deciles and maybe clarify some of it.

2) I think Steve Sailer has an interesting way of looking at it upthread. It’s possible that (1) IQ doesn’t tell you very much when compared to everything else in the universe but (2) IQ is still the most predictive single factor, or one of the most. (That can be gamed, though, depending on how you group together or split “factors” when you’re defining them).

This is a good idea, and the answer is “yes”. The first way that comes to mind is a box-and-whisker plot for each decile.

(I’m the pappubahry who made the graphs in this post, and I’d make this example as well, but the computer I’m on is having issues installing some software I need at the moment… maybe tomorrow.)

library(ggplot2)

library(ggthemes)

iq <- rnorm(1000, mean=100, sd=15)

normed <- (iq - mean(iq)) / sd(iq)

income <- rep(30000, 1000) + rep(15000, 1000) * normed +

rnorm(1000, mean=0, sd=30000)

df <- data.frame(income=income, iq=iq,

dec=cut(iq, unique(quantile(iq, seq(0, 1, 0.1))),

include.lowest=TRUE))

g1 <- ggplot(aes(iq, income), data=df) + geom_point() + geom_smooth(method="lm") +

theme_few()

g1

g2 <- ggplot(aes(dec, income), data=df) + geom_boxplot() + theme_few()

g2

Gives you these:

plot1

plot2

Probably more informative than the simple bar chart.

That is called a violin plot. They’re great if you really want to see everything, but for purposes like this, it is probably better use the box and whiskers to display the summary statistics for the distribution in the decile. The violin plot is a good check to see if the box and whiskers is misleading because the distribution is weird (eg, bimodal).

Thanks everybody!

One rule about correlation that’s helpful for me:

In a linear regression, correlation (r) is the conversion rate between standard deviations of the two variables.

That is, linear regression predicts that, when x is c standard deviations above x_mean, y is r * c standard deviations above y_mean on average.

(Similarly the opposite regression predicts that when y is c standard deviations above y_mean, x is r * c standard deviations above x_mean on average.)

Applying this to the first paper: “The men who committed two or more criminal offenses by age twenty had IQ scores on average a full standard deviation below nonoffenders”

Maybe >=two criminal offenses before age twenty is 2.0 standard deviations above average? So variable x at +2.0 standard deviations implies y is -1 stdev. This would imply correlation = -1 / +2.0 = -0.5. You’re right to think -.19 sounds weak given this fact. My guess is that the relationship is quite a bit weaker in the rest of the data because no one can have negative criminal offenses. (Maybe a non-linear fit would be better?)

In the second case, consider a linear model with constant a0 and uncorrelated factors each normalized to mean 0:

income = a0 + a1 * iq + a2 * factor2 + a3 * factor3 …

The bar graph is showing something like the values of a0 + (a1 * iq) for different deciles of iq.

As you point out, these bars vary a lot. To be specific: stdev(a0 + a1*iq) is rather large as a fraction of stdev(income).

a0 is constant so stdev(a0 + a1*iq) = stdev(a1 * iq).

Related to the correlation rule in the first comment there’s another fact that stdev(a1 * iq) = r * stdev(income) where r is the correlation. Here r = 0.4, so it makes sense for stdev(a0 + a1*iq) to be a decent fraction of stdev(income).

The bar graph makes sense given the correlation number.

But if the bar graph values are varying 40% as much as income itself, why isn’t the predictor explaining 40% of the variance? Wouldn’t combining two such predictors and making the same graph give values that vary 80% as much as income?

Well no, and for the same reason adding two (uncorrelated) normal distributions of stdev 0.4 doesn’t give a normal distribution of stdev 0.8, it gives a normal distribution of stdev 0.57. And it takes six such distributions to reach a normal distribution of stdev ~ 1. For me that core fact is the hardest part to internalize.

In short I don’t think you need to be so hard on summary statistics here!

In the first case, the authors show a strong relationship between two variables in a subset of the domain, which doesn’t contradict a smaller correlation over the full set. And the bar graph from the second case is like what we’d expect from two variables with that correlation.

I find r-squared values to be virtually useless for that exact question. The real question you’re asking is “How statistically significant is the slope of the regression?” There are p-value tests for slopes (and intercepts), confidence intervals for them, etc. they are just harder to calculate.

I’m not exactly sure off the top of my head if the same formulas generalize to non-linear regressions or not.

For the first graph, it helps me to think of it like cancer screening tests – a low base rate means that even something highly predictive will be wrong most of the time.

I’m not sure how to apply this intuition to the second case though.

The intuitive explanation of the second case is that, although IQ explains more of the variability in income than any other easily measurable single variable, no single variable explains more than a small amount of variation in income. This would give it a high p-value as a predictor, but a model with only this predictor would have a very low r-squared value.

The term we should really be using for IQ is “significant.” Whether or not it’s predictive depends on what you’re trying to predict. Give me two groups of 1000 people each, one with a mean IQ of 90 and one with a mean IQ of 120, and I can probably accurately predict the difference between their mean incomes. Randomly select any two individuals with IQs of 90 and 120 and I’m not going to be so accurate.

Regardless of the amount of variance, going from a category where I can expect to make on average $40,000 to a category where I could expect to make on average $160,000 seems like a pretty big deal, and describing it as “only predicting 16% of the variation” seems patently unfair.

Important, recurring point – a lot of these summary statistics are about the whole population, rather than particular bits of it. The extremes might be quite different, but most people by definition aren’t in the top or bottom decile, so that difference only makes a small contribution to the overall pattern of variation.