[This post was up a few weeks ago before getting taken down for complicated reasons. They have been sorted out and I’m trying again.]

Is scientific progress slowing down? I recently got a chance to attend a conference on this topic, centered around a paper by Bloom, Jones, Van Reenen & Webb (2018).

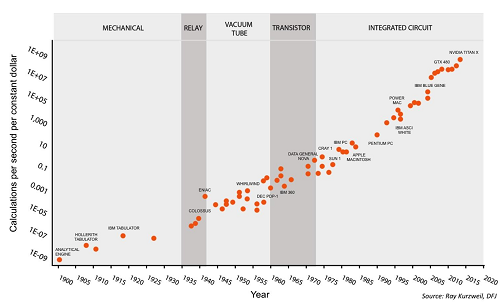

BJRW identify areas where technological progress is easy to measure – for example, the number of transistors on a chip. They measure the rate of progress over the past century or so, and the number of researchers in the field over the same period. For example, here’s the transistor data:

This is the standard presentation of Moore’s Law – the number of transistors you can fit on a chip doubles about every two years (ie grows by roughly 35% per year). This is usually presented as an amazing example of modern science getting things right, and no wonder – it means you can go from a few thousand transistors per chip in 1971 to many million today, with the corresponding increase in computing power.
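As a rough check on how a doubling time and an annual growth rate relate, here is a minimal sketch. The function names are mine, not BJRW’s, and it assumes smooth exponential growth. A strict two-year doubling works out to about 41% a year, while 35% a year means doubling every 2.3 years or so, so the two figures are in the same ballpark rather than identical.

```python
import math


def annual_rate_from_doubling(years_to_double: float) -> float:
    """Annual growth rate implied by a given doubling time."""
    return 2 ** (1 / years_to_double) - 1


def doubling_time_from_rate(annual_rate: float) -> float:
    """Years needed to double at a given annual growth rate."""
    return math.log(2) / math.log(1 + annual_rate)


if __name__ == "__main__":
    # Doubling every two years, expressed as a yearly percentage.
    print(f"{annual_rate_from_doubling(2):.1%} per year")
    # 35% per year, expressed as a doubling time.
    print(f"{doubling_time_from_rate(0.35):.2f} years to double")
```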

But BJRW have a pessimistic take. There are eighteen times more people involved in transistor-related research today than in 1971. So if in 1971 it took 1000 scientists to increase transistor density 35% per year, today it takes 18,000 scientists to do the same task. So apparently the average transistor scientist is eighteen times less productive today than fifty years ago. That should be surprising and scary.

But isn’t it unfair to compare percent increase in transistors with absolute increase in transistor scientists? That is, a graph comparing absolute number of transistors per chip vs. absolute number of transistor scientists would show two similar exponential trends. Or a graph comparing percent change in transistors per year vs. percent change in number of transistor scientists per year would show two similar linear trends. Either way, there would be no problem and productivity would appear constant since 1971. Isn’t that a better way to do things?

A lot of people asked paper author Michael Webb this at the conference, and his answer was no. He thinks that intuitively, each “discovery” should decrease transistor size by a certain amount. For example, if you discover a new material that allows transistors to be 5% smaller along one dimension, then you can fit 5% more transistors on your chip whether there were a hundred there before or a million. Since the relevant factor is discoveries per researcher, and each discovery is represented as a percent change in transistor size, it makes sense to compare percent change in transistor size with absolute number of researchers.
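Webb’s framing boils down to a one-line calculation: research productivity is the percent growth rate divided by the number of researchers. A minimal sketch, using the round numbers from the transistor example (the 1,000-scientist baseline is just the hypothetical from above, and the function name is mine):

```python
def research_productivity(growth_rate: float, researchers: float) -> float:
    """Percent growth delivered per researcher per year."""
    return growth_rate / researchers


# Same 35% annual growth, but eighteen times as many researchers.
then = research_productivity(0.35, 1_000)
now = research_productivity(0.35, 18_000)

if __name__ == "__main__":
    print(f"per-researcher productivity fell by a factor of {then / now:.0f}")
```

On this definition a flat growth rate plus a growing headcount mechanically shows up as falling productivity, which is exactly why the choice of percent change versus absolute change matters so much.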

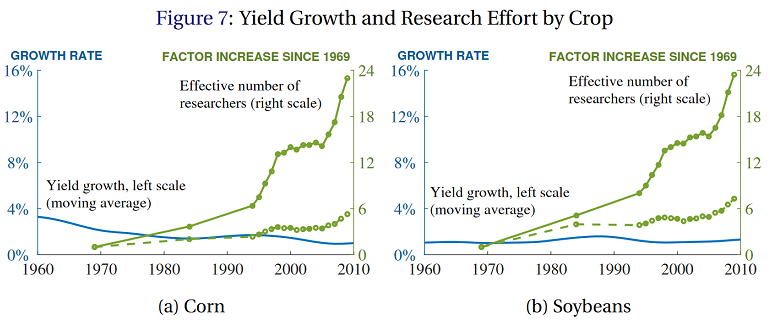

Anyway, most other measurable fields show the same pattern of constant progress in the face of exponentially increasing number of researchers. Here’s BJRW’s data on crop yield:

The solid and dashed lines are two different measures of crop-related research. Even though crop-related research increases by a factor of 6-24x (depending on how it’s measured), crop yields grow at a relatively constant 1% rate for soybeans, and an apparently declining 3%-ish rate for corn.

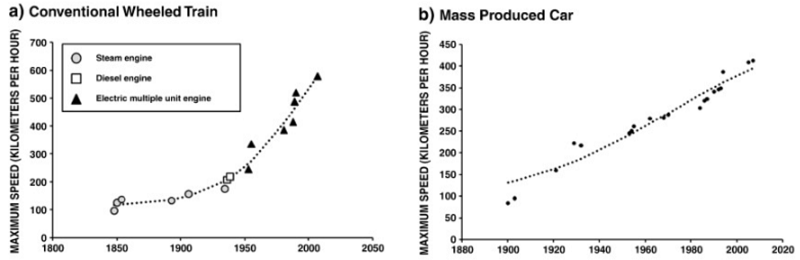

BJRW go on to prove the same is true for whatever other scientific fields they care to measure. Measuring scientific progress is inherently difficult, but their finding of constant or log-constant progress in most areas accords with Nintil’s overview of the same topic, which gives us graphs like

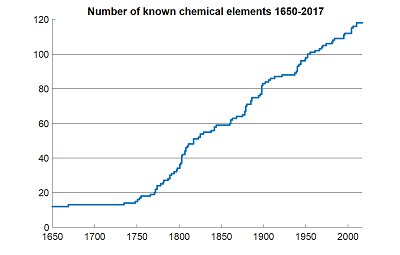

…and dozens more like it. And even when we use data that are easy to measure and hard to fake, like number of chemical elements discovered, we get the same linearity:

Meanwhile, the increase in researchers is obvious. Not only is the population increasing (by a factor of about 2.5x in the US since 1930), but the percent of people with college degrees has quintupled over the same period. The exact numbers differ from field to field, but orders of magnitude increases are the norm. For example, the number of people publishing astronomy papers seems to have decupled over the past fifty years or so.

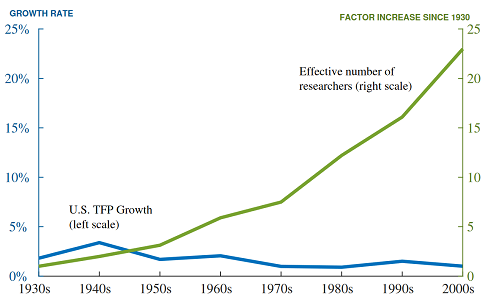

BJRW put all of this together into total number of researchers vs. total factor productivity of the economy, and find…

…about the same as with transistors, soybeans, and everything else. So if you take their methodology seriously, over the past ninety years, each researcher has become about 25x less productive in making discoveries that translate into economic growth.

Participants at the conference had some explanations for this, of which the ones I remember best are:

1. Only the best researchers in a field actually make progress, and the best researchers are already in a field, and probably couldn’t be kept out of the field with barbed wire and attack dogs. If you expand a field, you will get a bunch of merely competent careerists who treat it as a 9-to-5 job. A field of 5 truly inspired geniuses and 5 competent careerists will make X progress. A field of 5 truly inspired geniuses and 500,000 competent careerists will make the same X progress. Adding further competent careerists is useless for doing anything except making graphs look more exponential, and we should stop doing it. See also Price’s Law Of Scientific Contributions.

2. Certain features of the modern academic system, like underpaid PhDs, interminably long postdocs, endless grant-writing drudgery, and clueless funders have lowered productivity. The 1930s academic system was indeed 25x more effective at getting researchers to actually do good research.

3. All the low-hanging fruit has already been picked. For example, element 117 was discovered by an international collaboration who got an unstable isotope of berkelium from the single accelerator in Tennessee capable of synthesizing it, shipped it to a nuclear reactor in Russia where it was attached to a titanium film, brought it to a particle accelerator in a different Russian city where it was bombarded with a custom-made exotic isotope of calcium, sent the resulting data to a global team of theorists, and eventually found a signature indicating that element 117 had existed for a few milliseconds. Meanwhile, the first modern element discovery, that of phosphorus in the 1670s, came from a guy looking at his own piss. We should not be surprised that discovering element 117 needed more people than discovering phosphorus.

Needless to say, my sympathies lean towards explanation number 3. But I worry even this isn’t dismissive enough. My real objection is that constant progress in science in response to exponential increases in inputs ought to be our null hypothesis, and that it’s almost inconceivable that it could ever be otherwise.

Consider a case in which we extend these graphs back to the beginning of a field. For example, psychology started with Wilhelm Wundt and a few of his friends playing around with stimulus perception. Let’s say there were ten of them working for one generation, and they discovered ten revolutionary insights worthy of their own page in Intro Psychology textbooks. Okay. But now there are about a hundred thousand experimental psychologists. Should we expect them to discover a hundred thousand revolutionary insights per generation?

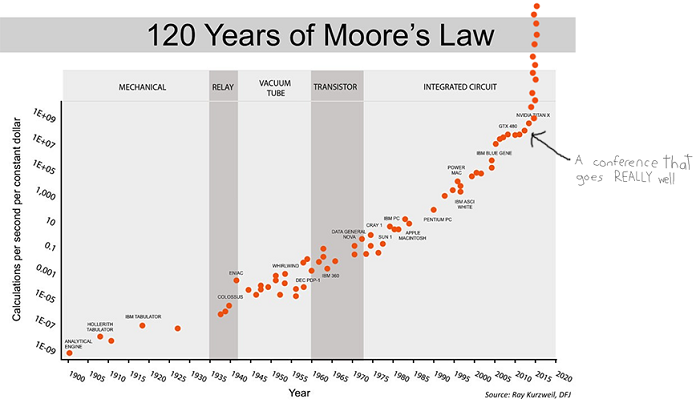

Or: the economic growth rate in 1930 was 2% or so. If it scaled with number of researchers, it ought to be about 50% per year today with our 25x increase in researcher number. That kind of growth would mean that the average person who made $30,000 a year in 2000 should make $50 million a year in 2018.
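The arithmetic behind that figure is plain compounding. A quick sketch (the function name is mine, and it assumes growth compounds once a year over the 18 years from 2000 to 2018) gives roughly $44 million at 50% growth, the same order of magnitude as the number above, against about $43,000 at 2%:

```python
def compound(income: float, annual_growth: float, years: int) -> float:
    """Income after compounding a fixed annual growth rate."""
    return income * (1 + annual_growth) ** years


# $30,000 in 2000, carried forward 18 years at each growth rate.
at_50_percent = compound(30_000, 0.50, 18)
at_2_percent = compound(30_000, 0.02, 18)

if __name__ == "__main__":
    print(f"50% per year: ${at_50_percent:,.0f}")
    print(f" 2% per year: ${at_2_percent:,.0f}")
```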

Or: in 1930, life expectancy at 65 was increasing by about two years per decade. But if that scaled with number of biomedicine researchers, that should have increased to ten years per decade by about 1955, which would mean everyone would have become immortal starting sometime during the Baby Boom, and we would currently be ruled by a deathless God-Emperor Eisenhower.

Or: the ancient Greek world had about 1% of the population of the current Western world, so if the average Greek was only 10% as likely to be a scientist as the average modern, there were only 1/1000th as many Greek scientists as modern ones. But the Greeks made such great discoveries as the size of the Earth, the distance of the Earth to the sun, the prediction of eclipses, the heliocentric theory, Euclid’s geometry, the nervous system, the cardiovascular system, etc, and brought technology up from the Bronze Age to the Antikythera mechanism. Even adjusting for the long time scale to which “ancient Greece” refers, are we sure that we’re producing 1000x as many great discoveries as they did? If we extended BJRW’s graph all the way back to Ancient Greece, adjusting for the change in researchers as civilizations rise and fall, wouldn’t it keep the same shape as it does for this century? Isn’t the real question not “Why isn’t Dwight Eisenhower immortal god-emperor of Earth?” but “Why isn’t Marcus Aurelius immortal god-emperor of Earth?”

Or: what about human excellence in other fields? Shakespearean England had 1% of the population of the modern Anglosphere, and presumably even fewer than 1% of the artists. Yet it gave us Shakespeare. Are there a hundred Shakespeare-equivalents around today? This is a harder problem than it seems – Shakespeare has become so venerable with historical hindsight that maybe nobody would acknowledge a Shakespeare-level master today even if they existed – but still, a hundred Shakespeares? If we look at some measure of great works of art per era, we find past eras giving us far more than we would predict from their population relative to our own. This is very hard to judge, and I would hate to be the guy who has to decide whether Harry Potter is better or worse than the Aeneid. But still? A hundred Shakespeares?

Or: what about sports? Here’s marathon records for the past hundred years or so:

In 1900, there were only two local marathons (eg the Boston Marathon) in the world. Today there are over 800. Also, the world population has increased by a factor of five (more than that in the East African countries that give us literally 100% of top male marathoners). Despite that, progress in marathon records has been steady or declining. Most other Olympic sports show the same pattern.

All of these lines of evidence lead me to the same conclusion: constant growth rates in response to exponentially increasing inputs is the null hypothesis. If it wasn’t, we should be expecting 50% year-on-year GDP growth, easily-discovered-immortality, and the like. Nobody expected that before reading BJRW, so we shouldn’t be surprised when BJRW provide a data-driven model showing it isn’t happening. I realize this in itself isn’t an explanation; it doesn’t tell us why researchers can’t maintain a constant level of output as measured in discoveries. It sounds a little like “God wouldn’t design the universe that way”, which is a kind of suspicious line of argument, especially for atheists. But it at least shifts us from a lens where we view the problem as “What three tweaks should we make to the graduate education system to fix this problem right now?” to one where we view it as “Why isn’t Marcus Aurelius immortal?”

And through such a lens, only the “low-hanging fruits” explanation makes sense. Explanation 1 – that progress depends only on a few geniuses – isn’t enough. After all, the Greece-today difference is partly based on population growth, and population growth should have produced proportionately more geniuses. Explanation 2 – that PhD programs have gotten worse – isn’t enough. There would have to be a worldwide monotonic decline in every field (including sports and art) from Athens to the present day. Only Explanation 3 holds water.

I brought this up at the conference, and somebody reasonably objected – doesn’t that mean science will stagnate soon? After all, we can’t keep feeding it an exponentially increasing number of researchers forever. If nothing else stops us, then at some point, 100% (or the highest plausible amount) of the human population will be researchers, we can only increase as fast as population growth, and then the scientific enterprise collapses.

I answered that the Gods Of Straight Lines are more powerful than the Gods Of The Copybook Headings, so if you try to use common sense on this problem you will fail.

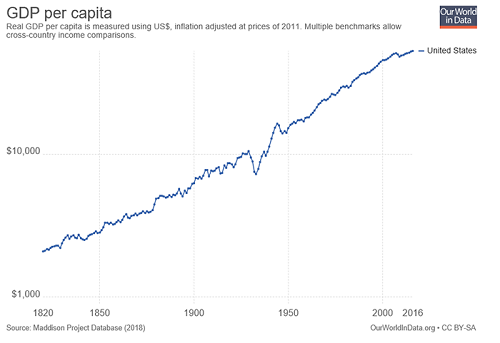

Imagine being a futurist in 1970 presented with Moore’s Law. You scoff: “If this were to continue only 20 more years, it would mean a million transistors on a single chip! You would be able to fit an entire supercomputer in a shoebox!” But common sense was wrong and the trendline was right.

“If this were to continue only 40 more years, it would mean ten billion transistors per chip! You would need more transistors on a single chip than there are humans in the world! You could have computers more powerful than any today, that are too small to even see with the naked eye! You would have transistors with like a double-digit number of atoms!” But common sense was wrong and the trendline was right.

Or imagine being a futurist in ancient Greece presented with world GDP doubling time. Take the trend seriously, and in two thousand years, the future would be fifty thousand times richer. Every man would live better than the Shah of Persia! There would have to be so many people in the world you would need to tile entire countries with cityscape, or build structures higher than the hills just to house all of them. Just to sustain itself, the world would need transportation networks orders of magnitude faster than the fastest horse. But common sense was wrong and the trendline was right.
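The doubling time behind “fifty thousand times richer” is left implicit. As a back-of-envelope sketch, assuming steady exponential growth across the whole two thousand years (a big simplification) and using a function name of my own, the implied pace is one doubling roughly every 130 years:

```python
import math


def implied_doubling_time(total_multiple: float, years: float) -> float:
    """Doubling time that turns 1 into total_multiple over the given span."""
    return years * math.log(2) / math.log(total_multiple)


if __name__ == "__main__":
    span = implied_doubling_time(50_000, 2_000)
    print(f"one doubling every {span:.0f} years")
```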

I’m not saying that no trendline has ever changed. Moore’s Law seems to be legitimately slowing down these days. The Dark Ages shifted every macrohistorical indicator for the worse, and the Industrial Revolution shifted every macrohistorical indicator for the better. Any of these sorts of things could happen again, easily. I’m just saying that “Oh, that exponential trend can’t possibly continue” has a really bad track record. I do not understand the Gods Of Straight Lines, and honestly they creep me out. But I would not want to bet against them.

Grace et al’s survey of AI researchers shows they predict that AIs will start being able to do science in about thirty years, and will exceed the productivity of human researchers in every field shortly afterwards. Suddenly “there aren’t enough humans in the entire world to do the amount of research necessary to continue this trend line” stops sounding so compelling.

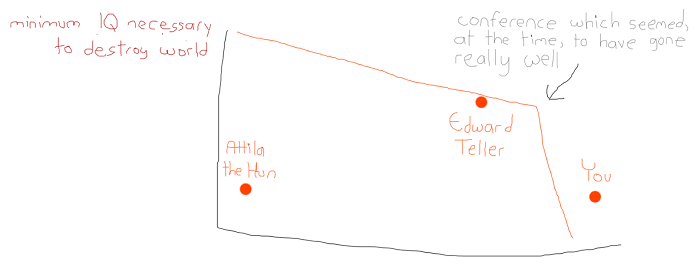

At the end of the conference, the moderator asked how many people thought that it was possible for a concerted effort by ourselves and our institutions to “fix” the “problem” indicated by BJRW’s trends. Almost the entire room raised their hands. Everyone there was smarter and more prestigious than I was (also richer, and in many cases way more attractive), but with all due respect I worry they are insane. This is kind of how I imagine their worldview looking:

I realize I’m being fatalistic here. Doesn’t my position imply that the scientists at Intel should give up and let the Gods Of Straight Lines do the work? Or at least that the head of the National Academy of Sciences should do something like that? That Francis Bacon was wasting his time by inventing the scientific method, and Fred Terman was wasting his time by organizing Silicon Valley? Or perhaps that the Gods Of Straight Lines were acting through Bacon and Terman, and they had no choice in their actions? How do we know that the Gods aren’t acting through our conference? Or that our studying these things isn’t the only thing that keeps the straight lines going?

I don’t know. I can think of some interesting models – one made up of a thousand random coin flips a year has some nice qualities – but I don’t know.

I do know you should be careful what you wish for. If you “solved” this “problem” in classical Athens, Attila the Hun would have had nukes. Remember Yudkowsky’s Law of Mad Science: “Every eighteen months, the minimum IQ necessary to destroy the world drops by one point.” Do you really want to make that number ten points? A hundred? I am kind of okay with the function mapping number of researchers to output that we have right now, thank you very much.

The conference was organized by Patrick Collison and Michael Nielsen; they have written up some of their thoughts here.

Scientific progress doesn’t slow down, it goes “boink”.

Sorry, somebody had to say it.

> Shakespearean England had 1% of the population of the modern Anglosphere, and presumably even fewer than 1% of the artists.

seriously? how are you so completely out of touch with human nature? i don’t understand what it takes to result in you confidently denying extremely basic facts about our history.

here’s a small excerpt from the book Impro, which you certainly sound like you could never possibly understand

Please don’t make comments like this, this kind of hostile condescension has zero benefit and we need less of it, kthx

it would be good if you didn’t make comments like this. we need less of your kind of hostile condescension, kthx

amaranth:

Do you have anything interesting to say, or are you just here to waste everyone’s time?

That excerpt isn’t very convincing. https://www.princeton.edu/culturalpolicy/quickfacts/artists/artistemploy.html

@amaranth

You are reading Scott in bad faith. He could very well have meant professional artists, who surely were more rare back then than now.

you are reading me in bad faith

I’m a firm believer in an upvote, downvote system like on Reddit. There’s no reason to prioritise early comments over later ones. Stuff like this should move down and the better comments that came in later should be elevated. I bet if you asked, Scott, a dozen people who read this blog could swiftly sort out a system like that for these comments.

I would upvote this comment.

So would I. Upvote Downvote is the thing I really like about Reddit. It prioritizes by importance/interest to the relevant community.

One of the downsides of reddit’s upvote system is that it also privileges early comments, due to the snowball effect of initial upvotes -> more visibility -> more upvotes -> and so on.

Vote systems also promote snarky/low-value comments.

I support research into a system that filters out the low quality comments without promoting other kinds of low quality comments, such as

-upvotes_up_to_a_cap (something low, like 50 votes) or perhaps

-a simple vote-threshold system, like hiding any comment that receives >90% down votes.

Naturally, all vote counts should be hidden to disincentivise vote seeking.

Reddit actually has multiple sorting options. ‘Top’ sorts merely by upvotes minus downvotes, but ‘best’ considers sample size.
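For anyone curious what “considers sample size” can look like in practice: as far as I know, the ‘best’ sort ranks comments by the lower bound of a Wilson score confidence interval on the upvote fraction. A minimal sketch under that assumption (the function names and the z = 1.96 confidence level are my own choices for illustration, not Reddit’s actual code):

```python
import math


def top_score(ups: int, downs: int) -> int:
    """'Top'-style score: upvotes minus downvotes."""
    return ups - downs


def wilson_lower_bound(ups: int, downs: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval for the upvote fraction."""
    n = ups + downs
    if n == 0:
        return 0.0  # no votes yet, so no evidence either way
    p = ups / n
    centre = p + z * z / (2 * n)
    margin = z * math.sqrt((p * (1 - p) + z * z / (4 * n)) / n)
    return (centre - margin) / (1 + z * z / n)


if __name__ == "__main__":
    # (upvotes, downvotes) for three hypothetical comments
    for ups, downs in [(600, 400), (90, 10), (5, 0)]:
        print(ups, downs, top_score(ups, downs),
              round(wilson_lower_bound(ups, downs), 3))
```

With these made-up vote counts, the 600/400 comment wins on ‘top’ but the 90/10 comment wins on the Wilson bound, and a comment with 5 upvotes and no downvotes ranks below the 90/10 one because five votes are not much evidence.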

Hiding comments with low votes is just a way of saying “if I say anything the community disagrees with strongly enough, the argument doesn’t have to be had”.

Vote scoring in general inherently increases the prevalence of groupthink and a lively discussion forum becoming a place that HAS a lowest common denominator, where posts that don’t appeal to it die off.

The proof is in the pudding: the SSC reddit is not higher quality than the SSC commentariat.

Selection effects.

The best commenters here are the kind that wouldn’t be on reddit.

But one reason I am not on reddit is that I do not like systems that muck with comment order. I could perhaps see as workable a voluntary system that allowed one to vote up/down and also hide comments below a certain threshold. But I wouldn’t use it.

As HBC says. The selection effects are themselves evidence of the inferiority of the reddit comment system.

(See also previous testimony where SSC commenters admitted that they’re more abrasive on reddit.)

@ HeelBearCub:

Reddit has options to sort by chronological order, if the user so chooses. Or are you talking about a system that forces users to use such sorting? There’s a place for deliberate social engineering to shape an online community, but it’s not clear to me that that particular change would be an improvement.

@ AG:

An alternate selection effect: people who preferentially engage on platform X rather than Y will tend to be those that believe X is superior to Y. What metrics are we grading on? (Why did we choose those metrics?)

And, uh, I think I interpret “SSC regulars keep getting banned off the subreddit” a little differently. There’s a longer discussion about people’s increased willingness to shit where other people eat, but I don’t think it’s too much to argue that the effect may be symmetrical.

@Dan L

The implication of SSC regulars being banned from the subreddit depends: do you believe that the subreddit has higher comment quality than the blog comments?

I just explicitly asked you what metrics you were using. That wasn’t rhetorical – I can think of a few where the subreddit comes out ahead, and a few where these comments win out, and a few more where it’s competitive.

And I can think of a few mechanisms completely orthogonal to comment quality that might render the userbases mutually unpalatable. That’s the part that actually annoys me, I think – the jump from “I don’t like that kind of discussion” to “therefore they’re terrible” without even a veneer of objectivity.

I used to agree with you, but after spending a lot of time with the Reddit-like Less Wrong system and also a lot of time with the voteless SSC system, I’ve found nothing to privilege the former over the latter. Sure, we get a few comments like the ancestor, but so did Less Wrong, and a bunch of vote manipulation drama on top of it.

The systems I really hate, though, are the ones with upvotes but no downvotes. Those encourage groupthink while doing nothing to discourage being an asshole, so they produce a lot of groupthinking assholes.

Scott is correct. In Shakespeare’s day, 80% of the population had to farm for food, less than half were literate, women weren’t allowed in most literate professions, and “writes for publication” generally meant “either noble or personally sponsored by nobility.” (Shakespeare was part of “The King’s Men”, for example, an acting company with King James I himself as their sponsor.)

So far fewer of England’s population then had any chance to work full-time making publishable or permanent art.

I understand you prefer a different definition of “artist,” where anyone who makes up a new bit of song or changes a story when they tell it to their kids is an artist. But every famous Elizabethan writer came from a background far wealthier than ordinary farmers; the vocabulary alone guaranteed that. Ordinary folk didn’t get the education or the access to write for national posterity.

So no, you were never going to get the works of Shakespeare from some village’s best farmer-singer. Not because she wasn’t capable of it, but because the economy and prejudice of the time was never going to give her the chance to find out.

You also weren’t going to get it because she wasn’t capable of it. Shakespeare is a ludicrous, ludicrous outlier. I have no time for conspiracy theories along the lines of “the works of Shakespeare were written by the Earl of Oxford” because they don’t explain anything. A conspiracy theory along the lines of “Shakespeare was an alien cyborg from the future”, on the other hand, might have some merit.

I’d also add that “writes for publication” is a bit of a stretch, as applied to Shakespeare. He was certainly aware that his work was being/would be published (“So long lives this”, “states unborn and accents yet unknown” etc.) but the publication was a mix of sketchy pirate versions during his lifetime and pretty good versions edited by his friends and colleagues after his death. He made his money – very good money for the time – from the live performance of his work, probably mostly in his capacity as what we would now call a producer.

have you heard of material shaping lol ink and paper is one of many materials

>small excerpt

That’s just standard elderly griping. And yes that is a basic element of human nature.

In ancient times it was conceivable for one bright individual to learn everything of note up to the edge of human knowledge across most fields.

Now, even if I wanted to spend all day every day reading research papers I’d have trouble keeping up with the cutting edge in just one field like chemistry. To really keep up I’d need to focus on one tiny area of chemistry.

Shakespeare plagiarized from previous works and his contemporaries. 99.something% of the stuff written in Shakespearean England was shit and nobody remembers it. We remember a few dozen authors of note from an entire generation. It’s like pointing to that one remaining lightbulb still going after 50 years and saying “oh they don’t make them like that any more” without realising the sampling bias. That one lightbulb is the one survivor from hundreds of thousands, the last remnant of a long tail hovering just above zero.

There’s now hundreds of thousands of kids writing stories. Most of them are shit. A couple hundred thousand of the stories are Harry Potter fanfics. But it doesn’t really matter. Some really good authors end up standing out and in 300 years elderly curmudgeons will be griping that the year 2318 doesn’t produce anything on a par with the hundreds of great authors spawned from the millennial generation.

Engineers these days are perfectly capable of designing a lightbulb that would last fifty years; it just wouldn’t be one you’d want to buy for your house, because it would be both expensive and substantially dimmer than the lights you normally use. It’s all about the trade-offs…

If you want a regular incandescent lightbulb to last 50 years, like the Centennial Light, which is 110 years old, the recipe is fairly well-known. Run it at a low temperature, ideally cherry-red, and make sure it’s not a halogen type. Leave it turned on all the time, so it doesn’t experience thermal shocks from being turned on and off. Using a carbon filament rather than a tungsten filament would help (carbon’s vapor pressure is a lot lower than tungsten’s) but is not necessary. Using a thicker filament, like the ones used in low-voltage lightbulbs, will also help. To lower the temperature, a garden-variety leading-edge dimmer is probably adequate, but a transformer to lower the voltage might be more reliable than the triac in a dimmer. (It doesn’t really count as “lasting 50 years” if you have to replace the dimmer four times in that time, does it?)

And, yes, it’s going to be very dim and energy-inefficient.

A triac dimmer will probably reduce bulb lifetime compared to using lower voltage. The transients make the filament vibrate. DC would be best, but smoothly varying AC is better than the chopped-up stuff from a triac.

LED light bulbs last about 30,000 hours as opposed to 1,000 hours for incandescent bulbs. That’s about 13+ years, ten hours a day. Each bulb has a solid state rectifier to turn the AC into DC, a switching regulator to dial down the voltage for the LED and the LED itself. It’s an impressive piece of work. Modern semiconductors can deal with power levels that would have been considered insane 50 years ago, and let’s not forget the challenge of building a diode that emits white light in sufficient quantities. The choice was red, green and amber until the early 1990s.

I upvote this comment.

Also I’m old enough to remember when we knew how to cherry-pick anecdotes with which to bash the kids on our own, and didn’t have to use some other whiny old fart’s book to do it for us.

I think Shakespeare is also just a special case. There really is no comparable outlier I can think of in any other field; the closest might be Don Bradman.

As a non-native English speaker, why the emphasis on Shakespeare as the writer/dramatist to end all writers/dramatists?

He was late 16thC. In France, early 17thC we have at least 3-4 literary giants: Corneille, Moliere, Racine and Jean de la Fontaine. Plus a fair amount of second tier guys, on par with, say, Marlowe or Middleton (in terms of modern name recognition, though I might be biased coz no one ever taught me English literature and, for example, I only know Marlowe b/c of the Shakespeare authorship conspiracy theories and his murder/untimely death).

I more than half suspect it’s just a case of Shakespeare having a well-organized group of fanboys. Since I was educated in English, I had to read and watch and analyze quite a bit of Shakespeare over the years, and if there’s genius there, I must have missed it. Reactions from my fellow students were similar. I’m willing to grant him some respect for having legs, as they say; it’s impressive that his works are still being performed hundreds of years after they were written. But my best guess is that he is merely a capable professional artist with an influential and well-organized group of fans, rather than some sort of otherworldly genius.

I’m reminded of an old story about an automated system being used to grade the quality of kids’ writing.

The idea was simple: the system graded each student’s work, a human graded it, and if there was more than a certain percentage divergence it was graded by a panel then put into the training data set. Some interesting points were that the system could output the reasons and highlight problem text etc.

So of course someone had some fun feeding it the “classics”

Which it pretty universally graded as being poorly written.

But of course that doesn’t count because the classics are transcendent and anything that says they’re poor is by definition wrong.

never mind that the more systematic you make your assessment method the worse they do.

It’s pure fashion. It’s not the done thing in the English department to say “oh, ya, most of those ‘classics’ aren’t all that great”… no, that would be terribly crass. So you have a culture of, to put it utterly crudely, people constantly jacking off over the classics. Criticizing them is like turning up on /r/lotr/ and saying bad things about Tolkien’s work or /r/mlp and stating that it’s not all that.

They’re the equivalent of the few works that managed to make it into top 10 popularity rankings back when the field was being defined and so the nostalgia for them got encoded in the culture like how people view games they played as kids through rose tinted glasses.

There’s probably a lot of dreck called “classic”, but I don’t think an automated grading system for kids’ writing thinking it’s dreck means anything. First of all, I wouldn’t trust such a system anyway; it’s as likely to seize on superficial features (correlated to what you want) in a training set as it is to go for what you’re looking for. Second, it’s operating way out of its domain when grading the classics; English has changed a bit since Shakespeare’s day, for instance.

Shakespeare’s work has maintained its popularity for centuries, and in multiple languages; I think it’s beyond any accusation of faddishness.

To name another work that a kids’ grading system would hurt, there’s Moby Dick. It’s IMO (and not just mine) nigh-unreadable, and there’s that crack about the odd chapters being an adventure story and the even chapters a treatise on 19th century whaling. Yet the story keeps getting re-written and re-adapted. That, IMO, probably means its ‘classic’ reputation is deserved.

For another take on automatic grading, there’s a story called “The Monkey’s Finger” Asimov wrote about his own story “C-Chute”, based on an argument he had with his editor about it. Asimov obviously took the author’s side.

Count me as one of his fanboys. I wish I were better able to articulate why I think he’s a genius – maybe I’ll chew it over and try to come up with something.

But yes, in English writing at least, there’s no one who comes anywhere near Shakespeare.

French literature was actually (one half of) my degree subject, so I’ve read a fair amount of Racine, Molière and the rest in the original, and I just can’t agree. Are they great writers? Sure (Molière the best, for my money). I actually prefer Musset to any of them, but I grant that’s an idiosyncratic matter of taste.

Taken as a poet, I don’t think Shakespeare is in a noticeably different class to them, or to a number of other English language poets. As a playwright, however, he’s completely unique. The scope and depth of his insight into a vast range of characters has no parallel (Chekhov and Sondheim the closest, for my money, and neither very close).

The best comparison in French literature isn’t a playwright at all; it’s Proust. Both have (different kinds of) exceptional insight about the world and the ability to elucidate those insights on the page so as to make them comprehensible to the rest of us clowns, to a degree that others don’t. I just think Shakespeare’s significantly further out ahead of other playwrights in that respect than Proust is other novelists.

I’ve read the entirety of Shakespeare (as in a “complete collection of…” type of entirety) a couple of times now trying to figure this out. I find he’s occasionally very good, often drags, that type of thing.

I think it’s partially big for the same reason chess persists throughout the ages, in that it got filed into a “smart people do this, so if I do this, I must be smart” box with a few other things (classical music, watching indie film) and thus is much, much bigger than I’d expect if no one were able to use it as a flag.

This isn’t to say some people don’t absolutely, genuinely love these things, just that most of the people who talk about the genius of them probably don’t genuinely enjoy them as much as they do a well made piece of contemporary mainstream art, outside of the status effect.

Shakespeare’s influence is just a result of the social butterfly effect, not intrinsic to his works: https://lasvegasweekly.com/news/archive/2007/oct/04/the-rules-of-the-game-no-18-the-social-butterfly-e/

In another world, someone else with comparable quality had the same amount of influence, while Shakespeare became the forgotten.

Don’t forget that Shakespeare was a Tudor apologist. It was a new dynasty, and England as a whole was getting wealthier. Shakespeare rose with the tide.

John von Neumann?

Then again, von Neumann had contemporaries like Edward Teller that he was only maybe an order of magnitude smarter than. But none of Shakespeare’s contemporaries even comes close to Shakespeare.

This isn’t the best place to sneer at Harry Potter fanfic.

That wasn’t really meant as a sneer, I think it’s wonderful to see so many people getting into writing that way.

The vast… vast…. vast majority of fanfic is crap… but then the majority of everything is crap and there’s some real nuggets in among the dross.

have you heard of material shaping lol ink and paper is one of many materials

When Weber, Fechner and Wundt created psychology, they were not only three very smart guys converging on a new idea. They also did so specifically inside academia, inside the most progressive city of their entire country (which was also the center of the book industry and made it really easy for them to publish a lot), all while their entire country was going through incredible boom times.

Imagine that at the same time as them, a philosopher, a physicist and a physiologist had made the exact same discoveries in Kazakhstan. It is quite possible. They could just as easily read Darwin in Kazakhstan, they would know about atomic theory and could make the same basic realization that the psyche has to be a material thing that can be studied experimentally like other material things. But we would never know, because who cares what happened in the 1870s in Kazakhstan.

Or go to Ancient Greece. Sure Epicurus was a very smart guy. But did it take a very smart guy to come up with the Principle of Multiple Explanations or with the Epicurean paradox? I think a clever tribesman in the Amazon could have come up with those, given some spare time. But Epicurus happened to live during the rapid rise of Hellenic culture that was then cemented by the Romans, so he got a huge signal boost that no tribesman in the Amazon got.

Was Shakespeare a literary genius? Sure! And additionally, he was a firmly English artist competing with classical art in a time when the English were rapidly expanding and seeking to distinguish themselves from the Catholics who were really into classical art. Maybe someone else in the same era wrote even better, but was less well-connected, or too Catholic, or a woman, or lived in Lithuania and wrote in Lithuanian. We wouldn’t know.

All three examples of excellence in creating novel knowledge were in the right place at the right time: in places where history was being made, places and times that coming generations would be highly likely to learn about. And all of them stand out much more in the simplified and compressed view of historical hindsight than they stood out to their contemporaries. I don’t think this is a coincidence.

So sure, there are indeed low-hanging fruit, but maybe they get picked all the time. Maybe what starts a new scientific field, or a philosophical school, or a literary classic has less to do with being another one of the guys who pick those fruit – and more with having the clout to make people listen when you say there are more fruit further up?

Maybe someone else in the same era wrote even better, but was less well-connected, or too Catholic, or a woman, or lived in Lithuania and wrote in Lithuanian. We wouldn’t know.

That reminds me of Mark Twain’s Captain Stormfield’s Visit to Heaven, which features a 16th-century shoemaker who, in his spare time, wrote sonnets which he never dared show anyone, but which are acknowledged in paradise as the greatest poetry known to man.

I don’t remember that, but Captain Stormfield did include mention of a man who would have been a great general (the world’s greatest) but he never got into the military because he didn’t have thumbs.

I’ve read that as a boy and as a (sort of) man, and I’ve come to the conclusion that the cranberry farmer in that story didn’t get enough of my bandwidth at the time – like overall Twain seems to be saying that finding the thing you are supposed to do/made to do is the thing, and that the cranberry farmer is lucky to have his new profession even if he could have the General’s job. I missed that for a long time.

There were a few texts translated into Lithuanian, but in practice the Lithuanian language did not exist in written form in Shakespeare’s day. You cannot write (even better) if you do not have the tools for it.

s/Lithuanian/Polish/

(At least I think Polish was the primary literary language of the region in Shakespeare’s time? Not sure. It definitely was in the 18th and 19th century. I think Adam Mickiewicz might be a good case in point — he was born in what is now Lithuania and is considered Poland’s greatest poet. Is he better than Shakespeare? I have no idea, because I’ve never read any of his work or seen anyone discuss its literary merit.)

The evidence for Shakespeare’s greatness is better than this implies; Schiller’s German translations of Shakespeare’s plays are considered classics of German literature. Verdi wrote operas based on Shakespeare’s plays. Language barriers matter, but not so much that an English-language perspective is guaranteed parochial. (Likewise, Anglophone poetry students regularly read some of the best French and Hispanic work, and recognize Goethe and Schiller and the Greeks as important.)

I feel like Scott talked about this briefly before, and I’m slightly surprised he didn’t look back on it, unless I’m misreading him.

There’s probably plenty of “low-hanging fruit”, but as you said, the problem isn’t just identifying a low-hanging fruit. Genius is 99% perspiration: you may still need a lot of resources to bring an idea to fruition. Not every child can be Taylor Wilson. It may not even be present circumstances but historical ones as well. Wilson wouldn’t have come up with his idea if Farnsworth and Hirsch hadn’t made their advancements.

I feel like an additional point, maybe a #4 related to #3, is that there’s less time on average for innovation from any individual. Scott wrote an interesting parable on how expanding the cutting edge of a field requires growing not only knowledge of the field, but also pedagogy in that field, pedagogy in general, and knowledge in related fields. A lot of this may be that one has to “connect more dots” to come up with some type of innovation. Yudkowsky had to connect DIY/Maker knowledge with building a better light box, along with knowledge of the light box treatment for SAD. Even if exponentially more people were going into makerspaces/DIY, we would expect that the number who were good enough or familiar enough with the components to build the box would be smaller, and the number also knowledgeable about SAD and/or psychology would be drastically smaller. Same thing with the psychology side of things. There may be exponentially more psychologists, but fewer who deal with depressive issues and are motivated to find solutions, and fewer still who are also familiar enough with modern electronics. This comes back to there being less time on average for being able to connect the dots, because more hours per year are being spent on schooling, and more years (a master’s is the new bachelor’s). In doing so, we’re eating up valuable time when people are at the prime of their divergent thought, able to consider “connecting dots” that people ossified in their field wouldn’t think about.

Scott seems optimistic that AI will overcome this barrier by basically exponentiating on exponential scientists. However, The Economist recently published an interesting article on AIs attempting to accomplish their objective function like corporations. Corporations kill creativity. We may be putting blinders on these AIs depending on the training sets we give.

This is why Taleb encourages Bricolage for knowledge generation as opposed to the systems we have in place now. Maybe we need to focus our efforts less on defining “the right” objective functions, and instead try to come up with an AI that behaves more like a polymath and less like a corporation.

On the connecting-the-dots point, while resources are growing exponentially the threshold for breakthroughs is growing factorially?

Partially yes. It’s a very good and succinct way of putting it. I also want to emphasize that we’re not focusing on divergent thinking as the necessary resource for generating solutions. I linked to the results of the paperclip creativity exercise, because it’s illustrative to see results where young children can easily come up with hundreds of answers, and older adults in the 10s, maybe 20s.

Like, if you told a child to ask engineers in the 1940s “What if we could use a phonograph with vinyl records to read/write entry data instead of punchcards?” some would be amused, but most would think the child was being silly. Children are, however, the most likely candidates to ask this sort of question, and so one who does, then learns a bit about how magnetic tapes work, and gets lucky could end up developing the hard drive.

So if a new innovation required, say, four dots to connect (vinyl record, magnetic tape, punch cards, storage application) you may need to sieve through twenty-four different ideas before you say “eureka!”. This could be handled by an adult who still had a fair amount of creative and divergent thinking. As soon as a new innovation required five dots to connect though, you may be looking at a hundred and twenty different possible ideas to sift through to get that “eureka!” That may be possible if you have a group of geniuses working together who can put the knowledge together, but it may also be possible for a single child prodigy who has been exposed to a lot of different things to come up with a significant innovation.
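To make the arithmetic above concrete, here is a minimal sketch. It assumes the “ideas to sift through” are the possible orderings of the dots, which is what the 24 and 120 figures correspond to (4! and 5!). The function name `orderings` is made up for illustration.

```python
from math import factorial


def orderings(dots: int) -> int:
    """Number of distinct orderings of `dots` items, i.e. dots!."""
    return factorial(dots)


# How quickly the search space grows as more dots must be connected.
for n in range(3, 8):
    print(f"{n} dots -> {orderings(n)} orderings")
```

Under this assumption, six dots already means 720 orderings and seven means 5,040, which is the sense in which the threshold grows factorially.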

Once you have ideas that need six or seven dots to connect, then no number of adults can possibly connect them, as you’re basically approaching the Dunbar number. A group of children though, could manage it. Force children to waste time doing homework instead of playing and socializing and self-directed learning and they no longer have those opportunities.

I think your example there of the 19-year old is a just-so story. The more important part there wasn’t the child or his divergent thinking (adults can and do come up with innovative ways to clean the ocean on a fairly regular basis) – it was the capital to actually build the thing to show to a VC firm.

I was also reminded of “Ars longa, Vita brevis” by this post.

That.

But also b/c I’d like to push back on the idea of low-hanging fruits.

Sure, Pythagoras’ and Thales’ theorems are now taught to kids under 15, and most of them get them and can use them to solve geometric problems.

But, for Pythagoras or Thales to come up with such stuff… Boy, they must have been some level of SD away from the norm of their times.

Basically – low hanging fruits aren’t hanging low when your arms are that much shorter than what they are now…

Probably not, because so far as innovation goes, “it takes a village” – people bounce ideas off each other, and the best rises to the top.

No people of similar quality to engage with, and you go nowhere.

There is also a startlingly logical pattern to where human accomplishment has historically been centered. For instance, the cultural and scientific explosion in Classical Greece was hitherto unprecedented in world history. But did you know that it was also the world’s first society to transition from “priestly literacy” (1-2%) to “craftsman literacy” (~10%)? This not only dectupled (thanks SA for improving my vocab) the literate population, but created previously unheard of concentrations of literate smart people. Add in the fact that the Greek world’s population was quite respectable for its time period – up to 10 million people, or not much less than that of contemporary China (!) – and things start to really make sense.

I remember thinking about that concept in Scott’s Steelmanning of NIMBYs, which is why I linked to Kleiber’s law as a power law that says innovation scales at the 5/4 rate instead of linearly (“10x size => ~18x more innovation”) as a potential reason, so there is something to be said about that.

It’s also why I phrased my more general comment in terms of the interrelation of previous ideas as a generator for new ones. Have a big city, and more people who know the right components can bounce ideas off each other. Have access to capital interested in investing in adjacent technology, and you have the resources to bring that idea to fruition. Fewer resources needed per person for sustenance also means more surplus for research or creative endeavors.

I still insist, though, that childhood creativity plays a major part. Scott’s previous posts, Book Review: Raise a Genius and the atomic bomb as Hungarian Science Fair Project, also shed some light. If one looks at (what I’ll call for lack of a better term) the “20th century Golden Age of Hungarian Mathematics”, it didn’t just come out of a highly localized area, but could perhaps be linked to a good number of creative children having the opportunity to learn well (great institutions) with community support (not just in the same city, but also within the Jewish community) and possibly bouncing ideas off each other (not in a “hey let’s build an atom bomb” sense but more of a “bet you can’t figure this out” sense).
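The superlinear-scaling claim can be checked with a couple of lines. This is only a sketch of the power law as stated in the comment (exponent 5/4); the function name `innovation_multiplier` is hypothetical and the exponent is taken from the comment, not from any dataset.

```python
def innovation_multiplier(size_ratio: float, exponent: float = 1.25) -> float:
    """Innovation ratio implied by a power law: innovation ~ size ** exponent."""
    return size_ratio ** exponent


# A city 10x the size, under a 5/4 exponent.
print(round(innovation_multiplier(10), 2))  # 17.78
# Doubling the size gives a bit more than double the innovation.
print(round(innovation_multiplier(2), 2))   # 2.38
```

With a linear relationship (exponent 1) the first figure would be exactly 10, so the gap between 10 and roughly 17.8 is the whole “it takes a village” effect in this model.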

I feel like my responses have also been a synthesis of multiple previous Scott posts put together. I think I’ve linked to five different ones now?

Moore’s Law is not an academic “research” program really. It is a (mostly) industry-based engineering program driving semiconductors down the learning curve. Every doubling of the number of transistors manufactured results in the same decrease in cost. These learning curves are very general and seem to apply to almost everything. But for sure it is true that the number of scientists involved to make this happen is increasing dramatically.
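For anyone who wants the learning curve spelled out, here is a rough sketch. It assumes the standard form in which unit cost falls by a fixed fraction with every doubling of cumulative output. The 0.8 progress ratio (a 20% drop per doubling) is purely illustrative, not a figure for semiconductors, and `unit_cost` is a made-up name.

```python
import math


def unit_cost(cumulative_units: float,
              first_unit_cost: float = 1.0,
              progress_ratio: float = 0.8) -> float:
    """Cost of the n-th unit when each doubling multiplies cost by progress_ratio."""
    b = -math.log2(progress_ratio)  # learning exponent (assumed constant)
    return first_unit_cost * cumulative_units ** (-b)


# Every doubling of cumulative output gives the same proportional drop.
for n in (1, 2, 4, 8, 1024):
    print(n, round(unit_cost(n), 4))
```

Plotted on log-log axes this is a straight line, which is why the driver is cumulative units produced rather than calendar time or headcount.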

Here is a post from a couple of years ago on my blog on Cadence Design Systems (we produce the software for designing the transistors/chips). It turns out almost all transistors are memory (99.7%), and due to the transition to 3D NAND that is still improving at an amazing rate. Logic not so much, and analog maybe not at all.

This sort of learning curve, I’m sure, applies to corn and soybeans. The relevant driver is almost certainly not the number of scientists but the tons of beans/corn produced, the value of which goes to pay for an increasing number of scientists. It is a total guess, but I wouldn’t be surprised if you plotted marathon times against total number of marathon entries ever run, that you’ll get a perfect straight line.

For research, I would guess that number of researcher-years will give the same straight line. Every doubling of the number of researchers in a field produces the same “cost reduction” which I guess would be significant papers or something. Not quite sure how to make the learning curve fit into this, but it is clearly relevant. Obviously Edward Teller learned from everyone on the Manhattan project in a way that Attila the Hun could not, which has to explain partially why Attila didn’t come up with the H-bomb (let’s just assume he had the same IQ).

There are also things that suffer from Baumol’s cost disease which don’t fit the model. The archetypal example is that it still takes 30 minutes and 4 players to play a string quartet. In your (Scott) field, it still takes 15 minutes to have a 15-minute conversation with a patient (although the drugs probably follow something more like Moore’s Law).

I think that if it really mattered humanity could easily figure out how to write shorter string quartets or play them faster.

These days, that one string quartet performance can be recorded and replayed endlessly, so we really do get a lot more music for a given amount of labor…

The bit about the God of Straight Lines reminded me of Tyler Cowen on Denmark’s economic prosperity. 1.9% real GDP per capita growth per year isn’t particularly remarkable, but do that for the better part of 120 years and it really adds up.
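A quick compounding check on that, as a sketch: the 1.9% rate and 120-year span are from the comment above, and the function names are made up.

```python
import math


def growth_factor(rate: float, years: float) -> float:
    """Total multiplication after compounding `rate` annually for `years`."""
    return (1 + rate) ** years


def doubling_time(rate: float) -> float:
    """Years needed to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + rate)


print(growth_factor(0.019, 120))  # a little under tenfold
print(doubling_time(0.019))       # a bit under 37 years
```

So an unremarkable-looking 1.9% a year means doubling roughly every 37 years, and three-plus doublings over 120 years.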

Especially if you have some big efficiency gains on running each AI. Imagine throwing the equivalent of a trillion AIs at a problem.

Did you mean to post this link? https://marginalrevolution.com/marginalrevolution/2016/08/what-if-there-are-no-more-economic-miracles.html

Yes. I entered the wrong link.

This is not a good scale for this. Almost all elements that haven’t been ‘discovered’ are radioactive with a half life of seconds making their discovery essentially trivial.

https://en.wikipedia.org/wiki/Island_of_stability

Is Moore’s Law still in effect? Apple went almost 3.5 years between its early 2015 and late 2018 upgrades to its MacBook Air laptop. A big reason for that is because CPU chips from Intel (Moore’s company) aren’t improving as fast as they used to.

As a fan of computer games since the early nineties, I’m torn between feeling glad that I no longer have to buy expensive new hardware every year to run new games smoothly, and worried about what this (obvious, despite denials from some quarters) stagnation portends.

On the other hand, we’re also pretty darn close to photorealism, so game designers can focus on crafting excellent games instead of constantly chasing technological advancement. Personally I think we’re living in a golden age of video games. I’ve been playing games since the early 80s and am suffering from an abundance of extremely high quality games I do not have time to play. In the past two years I have played several games I consider “among the best game I’ve ever played.”

Moore’s law, as it’s explicitly stated (density of transistors), still seems to be in effect. Commercial CPUs in 2014 used 14nm dies, and the latest CPUs in 2018 now use 7nm dies. Since density scales with the inverse square of feature size, that’s a quadrupling in four years. 5nm dies come out in 2020, which means that there’s at least one more doubling cycle left.

Experimental labs have prototyped sub-1nm transistors, so there may be as many as six doubling cycles left. Then past that, we can start thinking about layering 3D chips instead of 2D dies, spintronics, memristors, replacing silicon with graphene, and other exotic approaches. My guess is that Moore’s law lasts way past what anyone expects. It’s not inconceivable for it to run thirty more years.

The big asterisk with Moore’s law from 2008 onwards is that it doesn’t pay off in terms of faster CPUs. Because of fundamental physical limits, computers hit their max clock speeds a long time ago. Nowadays increased transistor density is used to add more cores, rather than faster cores. So the same task pretty much takes the same time as ten years ago, but you can do more in parallel.

Simply upgrading to a new CPU doesn’t give you the automatic speedup that it did in the 90s. You have to effectively utilize the multicore facilities, which from a software perspective involves a lot of tricky engineering pitfalls. That’s the big reason why device makers don’t rush out to upgrade CPUs at every upgrade cycle.
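The node arithmetic can be sketched as follows. It assumes transistor density goes as the inverse square of the quoted feature size, which is an idealization: marketing node names do not map cleanly onto physical dimensions. The function names are hypothetical.

```python
import math


def density_gain(old_nm: float, new_nm: float) -> float:
    """Density ratio if density ~ 1 / feature_size**2 (idealized assumption)."""
    return (old_nm / new_nm) ** 2


def doublings(old_nm: float, new_nm: float) -> float:
    """Number of density doublings implied by a shrink from old_nm to new_nm."""
    return math.log2(density_gain(old_nm, new_nm))


print(density_gain(14, 7))          # 4.0, i.e. a quadrupling
print(4 / doublings(14, 7))         # 2.0 years per doubling over 2014-2018
print(round(doublings(7, 5), 2))    # 0.97, roughly one more doubling
```

Under that assumption, 14nm to 7nm over four years is exactly the classic two-year doubling cadence, and 7nm to 5nm is just short of one further doubling.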

AFAIK the law still holds, but the time between advances seems to be lengthening. So, if you’ve got a long enough time horizon, you’ll still see roughly double the number of transistors every two years, but now it might be four years before the next generation (with 4x the number of transistors) comes online.

It’s still in effect in terms of transistor density, not in terms of core clock frequency, which is why the number of cores is now increasing exponentially.

Measuring a single product is a really bad idea. The main thing that you are observing is Apple wanted to phase out the “Air” brand name! They made new laptops during that time, just not “Air.” You can tell that they wanted to dump the name because the new laptops were lighter than the “Air.” They failed to phase out Air because Intel stumbled, but that doesn’t mean that Intel lost 5 years, or that anyone else lost any time.

1. Moore’s law for speed broke down 10 or more years ago, not 5 years ago.

2. Recently Intel’s lead over the competition fell maybe from 2 years to 1 year. But both Intel and the competition are still chugging along making transistors cheaper.

3. Intel is worse than the rest of the industry at making use of more transistors, especially along the metrics Apple cares about (eg, power consumption). The iPhone is pretty much as fast as the fastest laptop Apple sells.

4. Apple makes just-in-time designs. If Intel fails to meet their timeline or specs, it messes up their whole vibe and they abandon laptops and concentrate on phones. But small errors by Intel have small effects on the product lines of other companies.

From 3+4 it’s pretty clear that Apple is going to switch away from Intel. For years there have been low-end Windows laptops that don’t run Intel. But Apple doesn’t want to fragment its line that way. When it switches, it will switch the whole line, including desktops.

As someone who studied Shakespearean Lit in college, it would not at ALL surprise me to learn that there were 100 writers just as good as Shakespeare living today. Shakespeare isn’t as famous as he is simply because he was the very very best; he’s famous because he was so good *and* was the first to get to that level at a time when the playing field was a lot less crowded than it is nowadays (and also he got lucky on the publicity front). I think there are many writers of similar caliber around today; they’re the folks who are in writers’ rooms creating the new golden age of television. There is so much excellent fiction and drama around right now that we are massively spoiled for choice! That was not true in Shakespeare’s day.

I’m not so sure. I won’t speak for plotting or characterisation, but purely at the level of the language, reading Shakespeare really is quite incredible. There’s no-one writing at that level today. (OK, maybe that’s partly because our priorities have changed and ‘elevated, poetic diction’ is no longer something our writers are interested in, but still.)

There’s also the question of how much credit we give for doing something first. If Shakespeare invented some particular idiom, and nowadays dozens of writers are merely competently using it, do we give Shakespeare more credit for that? Even if the experience is the same for a (naive) audience, it seems that in some way we should.

The closest thing to a Shakespeare-style play written by a contemporary artist is “Hamilton” by Lin-Manuel Miranda. Honestly, I think it holds up pretty well in comparison.

I haven’t seen it, but from what I know I suspect Hamilton is actually a really good fit. I’ve long held that part of the reason poetry is no good nowadays is that all the people who would be writing good poetry go into songwriting (or rap) instead. So it stands to reason that the closest analogue for the language of Shakespearean plays would be in a rap-based musical.

Except for the fact that Miranda is a terrible lyricist.

The closest living writer to Shakespeare is Sondheim, though I’m not sure you could count him as still active.

Shakespeare’s plotting is legitimately not that great. Only one of his plots is one that he created, several have impossible inconsistencies or terribly convenient co-incidences, and many are resolved in ways that we would consider ridiculous and laughable by modern standards, including a literal deus ex machina in one play and “everybody dies!” or “everybody gets married!” in many others. And of course order and good is always promised to be restored at the end. Those were the genre conventions at the time, but we’ve left those genres behind and would probably consider such resolutions excessively simplistic now in modern writing.

Likewise I think you are correct that the language is really a matter of style and that elevated poetic diction is no longer valued in theater. Shakespeare’s language was absolutely excellent but the style these days in drama is for more naturalistic writing. I have no doubt many people could write plays similarly but Elizabethan style prosody is not what the market demands in movies and theater.

Plotting, sure. Characterisation, on the other hand…

Yeah, and dril is creating new memes that get warped into slang all of the time. There are plenty of people online who are influencing language as much as Shakespeare presumably did. The difference is that fiction is now more subservient to reflecting the culture than defining it, but that’s more a side-effect of globalization and increased wealth, allowing people to develop their culture by millions more avenues than from only the local theater.

For that matter, aren’t Yudkowsky and Scott at Shakespeare levels of slang creation, for this community?

And to really support phoenixy’s point, there’s literally a trope called “Buffy Speak,” named for the way YA dialogue style was so indelibly defined by Joss Whedon. And hey, there’s even a Shakespeare connection here!

Who cares about influence? I’m talking about beauty.

Beauty is utterly subjective. In addition, the reason we find certain things beautiful is because of their influence, the way they’ve influenced culture primes us to find certain things beautiful (while someone from another culture may not).

People find dril tweets have an ethereal charisma to them. Shitposting is an art.

If you think beauty is utterly subjective, you really aren’t the sort of person to be commenting on literary merits. Just like someone who thought science was utterly subjective shouldn’t be evaluating the claims of the most recent psychological studies.

Someone believing that aesthetics can be objective doesn’t give them any more qualification in judging artistic merit, either. Whole swaths of people curating for modern art museums with fancy degrees in it prove my point.

My stance is that Shakespeare is good, great, but that his greatness is not unique. Our veneration of him is more strongly influenced by factors outside of his work’s quality than anything else, and that the perception that Shakespeare was/is uniquely great isn’t true. There are many works that are of comparable quality, merely unrecognized, because gaining popularity is both hard and mostly based on luck.

“There’s no-one writing at that level today” is a function of you not being able to find them, not their not existing.

For example, one of James’ hypotheses about why is that our priorities have changed. I agree, but in the direction that our priorities take much more of the visual dimension into account, that people with Shakespeare levels of talent may have become directors rather than writers, cinematography instead of prose.

See also how therefore new adaptations of Shakespeare to audio-visual mediums convert some of the text into visuals, and so remove the need for parts of the text. When you have a closeup on an actor’s face conveying their intricate emotions, you don’t need a monologue from the days when people could barely see their heads from the theater balcony, much less facial expressions. Consider the adaptations that remove the text entirely, especially Kurosawa’s. You don’t need a monologue about the spirits in the forest, when you can have a shot of the fog in the trees.

(For that matter, any good translation of Shakespeare into a foreign language implies a translator as good as the man himself, such is the amount of interpretation needed in translation.)

As such, prose has also evolved to serve visuals, even in text forms, as they prioritize evoking an experience in the brain similar to watching a film or TV. It takes skill to do that, skill that could have the same magnitude as Shakespeare’s but in an orthogonal direction.

Like, say, crafting a perfect shitpost tweet. “I will face God and walk backwards into Hell,” a nice complement to “Villain, I have done thy mother.”

The argument for Shakespeare’s merit rests not on the fact that he influenced the English language (the influence pales in comparison to, say, William the Conqueror) or that his work required skill (so does farting the alphabet backwards), but on the claim that he wrote some of the most beautiful poetry ever written. If you think that such a claim can’t be adjudicated on account of beauty being utterly subjective, you really don’t have any business debating his poetry’s merit. Just like I, since I deny the ability of astrology to predict the future, don’t have any business debating whether the sidereal or tropical horoscope is more accurate. I deny the claims that are a prerequisite to debate.

For instance, you say:

But, to the person who believes in the beauty of poetry, the monologue is not a means but an end. It’s the whole point. We go to the theater (or open the book) to hear poetry, because it’s beautiful. Claiming it’s no longer “needed” due to cameras is like claiming we no longer need ribeye steaks because of Soylent. Someone who would say such a thing obviously has no taste for ribeye steaks, and probably has no business discussing whether they should be preferred to filet mignon.

You are right that our culture, like the centuries after the fall of Rome, has moved away from text back toward visual media. Neil Postman is good on this.

The point is that the beauty of prose is operating off of different standards, and therefore people with the same level of talent produce drastically different results. One of them produces Shakespeare’s poetry. The other writes the script to Throne of Blood.

Secondly, the argument for Shakespeare’s greatness is commonly rooted in the claim that no one else achieves the same level. Therefore, it is a valid counterargument to say that “same level” is a meaningless measure, and so “Shakespeare’s greatness” as defined by that measure is also invalid. There is nothing wrong with saying that neither horoscope is more accurate because all horoscopes are bunk, and questioning our “right” to evaluate sidereal vs. tropical does not make those who do debate it any more correct.

Shakespeare is not necessarily greater on content quality, because content quality cannot really be measured, and so we can only evaluate his greatness by other measures, such as influence or popularity. We cannot measure if various horoscopes are accurate, but we can measure how often they are read or followed.

Strongly agreed.

Even truer for the visual arts. This guy is more technically adept than probably anyone even a century ago, let alone five.

Eminence is precedence.

This is perhaps nitpicking, but I don’t think you want to go all the way back to the Greeks; you probably only want to go back to the scientific revolution. Of course, this only changes the question a little.

Also I’m not sure it makes sense to credit the Greeks for the cardiovascular system? I mean, they didn’t even know that arteries carried blood, IINM.

Are you distinguishing Galen from “the Greeks”? Some people claim that he showed that the arteries contained blood not air. It’s hard to imagine that anyone ever believed that they didn’t contain blood. It’s probably a misinterpretation of Erasistratus, who said that arteries carried pneuma, but he probably meant in the blood, not in place of the blood. And he talked about vacuum pumps, but he probably meant that the same applied to liquids as to gas.

Anyhow, Herophilos wrote a book “On Pulses” about how the heart pushes blood through the arteries.

Gordon Moore’s publication of what came to be called Moore’s Law was intended to be a self-fulfilling prophecy to help his firms, then Fairchild and from 1968 onward Intel, raise the capital and recruit the talent to make it come true.

The gods of Moore’s Law act through those who respond to their grace.

That’s lovely.

You might be right that 3 is the dominant factor, but I want to make a case for 1 (lots of essentially not useful researchers).

If you Google for top machine learning journals (http://www.guide2research.com/journals/machine-learning), basically… none of these matter. There are ~5 top ML conferences (and a bunch of area-focused ones, e.g., CVPR, but ~1-3 per area). If you look at the top 4 CS schools and look where the best professors publish, you’ll find that this is roughly true.

I don’t know how to write this without sounding like an elitist prick, but most of the progress is made by very few researchers. For example, Kaiming He (http://kaiminghe.com/ , I have no relation to him) has published some of the most impactful papers in computer vision (ResNet, ResNeXt, Faster R-CNN, Mask R-CNN, etc). Every top entry in MS-COCO used Faster R-CNN or Mask R-CNN.

By extension, I’m not entirely sure how productive the rest of the researchers are relative to these standouts. I’m also not sure if I have a solution to this problem.

If the number of ML researchers has recently dectupled, and there are 50 good researchers and 950 crappy ones, that’s consistent with either:

1. In the past there were 50 good researchers and 50 crappy ones

2. In the past there were 5 good researchers and 95 crappy ones.

Which do you think is true?
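To make the two scenarios concrete, here is a minimal sketch of the arithmetic. The headcounts are the ones given above; the function name `good_researcher_growth` is made up for illustration, and the whole thing assumes "good" and "crappy" are clean categories, which is of course a simplification.

```python
def good_researcher_growth(past_good, past_total, now_good, now_total):
    """Return (growth factor of good researchers, past good share, current good share)."""
    return now_good / past_good, past_good / past_total, now_good / now_total


NOW_GOOD, NOW_TOTAL = 50, 1000   # 50 good + 950 crappy researchers today
PAST_TOTAL = NOW_TOTAL // 10     # the field "dectupled", so 100 researchers before

# Number of good researchers in the past under each scenario from the comment.
scenarios = {
    "1 (50 good, 50 crappy)": 50,
    "2 (5 good, 95 crappy)": 5,
}

for name, past_good in scenarios.items():
    growth, past_share, now_share = good_researcher_growth(
        past_good, PAST_TOTAL, NOW_GOOD, NOW_TOTAL
    )
    print(
        f"Scenario {name}: good researchers x{growth:.0f}, "
        f"good share {past_share:.0%} -> {now_share:.0%}"
    )
```

Under scenario 1 the number of good researchers has not grown at all and every new entrant is a crappy one; under scenario 2 the good researchers have grown tenfold along with the field and their share is unchanged.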

Most likely something in between, of course. The question is closer to what end, though (I suspect closer to 1).

[epistemic status: guessing based on my inside view of academics]

That’s a great question. I think there’s very non-linear growth (logarithmic? I’m not sure) of number of good academic researchers.

Here’s a few reasons I believe it:

– Roughly, the majority of work in a subfield (e.g., ML) is done in 1-10 schools. These schools are expanding their faculty non-linearly with respect to the number of researchers in the field.

– Where does all the talent go since presumably there are good people? Well, if you get paid $500k to be a Tesla ML engineer, that’s pretty attractive. Related: a striking number of IMO gold medalists work in finance.

– PhDs are essentially apprenticeships. It’s hard to learn all the nuances of being a good researcher (doing the experiments / proving stuff is not the hard part. It’s choosing the problems and effectively communicating it) without being mentored for years. Mediocre researchers probably don’t produce world-class researchers.

– What about the good people who go to not-top tier schools? It’s significantly harder to do research: funding is harder, finding good students is harder, you’re likely tasked with service, etc. Thus, I believe their productivity would go down.

Unfortunately I don’t have hard numbers.

I would think almost certainly closer to #1.

A new field with uncertainty about stability and growth will tend to attract only those people who are really interested in that field (as you mention in the post), or those who happen to be nearby and looking for a job (nepotism/connections comes to mind, or some version thereof).

As has been the history of new companies and startups, most of these fields fail, leaving the people in them scrambling for new positions and sometimes entirely new professions. That’s not something a lot of your 9-5 group is interested in doing. They will wait until the field is more secure and lucrative before diving in.

The pretty good 2011 movie “X-Men: First Class” reminded me that there really does sometimes exist a “first class” phenomenon where the first year of students at a new elite academy or program goes on to great success. This is most notable in Hollywood, where the first class of the American Film Institute school included Terrence Malick, David Lynch, Paul Schrader, and Caleb Deschanel. Similarly, the first students of CalArts in Valencia in Character Animation in the 1970s included Brad Bird, Tim Burton, John Lasseter, and John Musker.

Congregation of talent or old boys’ network?

Or first mover effect? Perhaps they got taught something revolutionary and this allowed them to take advantage of previously untapped opportunity. Later classes then found those niches already filled.

My dad was in the first class at a new grammar school (selective state schools, now mostly phased out). It’s striking how successful his contemporaries (and him) have been relative to what you’d expect from a group of working and lower-middle class kids in the 1950s. He puts this down to them always being the top kids in the school so not having any sense of being obliged to kowtow to others.

I think this is first-mover effect.

Consider – when “machine learning” first existed in the popular press – the projects were done in DARPA labs or were moonshot Google projects made up of people making their own tools and kind of making stuff up as they go. If the field grows in stature and economic opportunity – those people that published the early tools happen to get a lot of prestige (and thus funding, decent undergrad students to order around, fancy laboratories, etc) and become considered the “good” ones by default.

This effect gets worse as time goes on (see – low hanging fruit) and leads to us thinking that we have lots and lots of bad researchers and only a few good ones – when in fact we have lots more people publishing much smaller pieces of the same puzzle, but the first guy who moved in got entire field-changing innovations named after him, specifically.

I think Scott is trying to separate out the effects of ‘intrinsically good researcher’ (factor 1) from environmental factors such as ‘with the best equipment’, ‘has the best networks’, and ‘has a head start on everyone else’. The data that most of the progress is made by very few researchers is consistent with there being 10x more good researchers now, but still only few with the best labs, networks, and head start.

My pet theory for the slowdown of science, which I think is distinct from the three possibilities you discuss, is that it has to do with negative network effects. If there’s ten scientists in ancient Greece, they’re probably either working on entirely separate fields that have no potential overlap, or all hanging out at the forum together, aware of every detail of each other’s work through extensive interlocution. For the umpteen million scientists today, though, everyone’s work intersects with or involves the work of hundreds of other experts, who are all publishing a constant stream of scientific literature and conference proceedings. Our grand communication infrastructures mitigate the problem, but we run up against the sheer limitations of human bandwidth; you can only read so many papers in a day, particularly if you want to get other work done. The key result of this isn’t that work winds up being duplicated (although that does also happen), but that it winds up being irrelevant to the greater course of science.

A large fraction of scientific papers are never cited, or cited only a handful of times (and that, often, by the same scientist.) Any given morsel of scientific progress usually solves a very small part of a larger whole, but the larger whole may be discontinued, shown to be impossible, or superseded by entirely different approaches. For instance, in the far future, people will know that the best technology for powering cars is either hydrogen, synthetic hydrocarbon fuels, some chemistry of battery, or some other exotic approach; each of these has small armies of scientists working on it, but only one will “win,” and the work of the others will be largely wasted. If you want to know what’s slowing science down, a force that can nullify entire subfields sounds like a likely candidate.

I guess a count against this theory is that it doesn’t address the hundred-Shakespeares problem. A new innovation in transistors is mostly only worthwhile if it happens to work in the same context as the hundred other most popular new transistor innovations; but theoretically, a really good book is inherently worthwhile, independently of what other authors are doing. Or perhaps that’s not true either?

Unrelated point; I would also argue that the slowdown of science is a bit overstated, since, though you have all these charts showing mere linear increases over time, you have more charts over time. You can’t extend a chart about transistor size to the 1500s. Coming decades will likely see the rise of linear increases in things we can now barely imagine.

This theory makes the most sense to me, as well. I’d venture to add some more things to your model: hyperspecialization and interdisciplinary researchers.

I think one result of this is that it generates the need for more types of researchers, kind of bypassing the human bandwidth limitation via parallel processing 😉

On the one hand, making progress inside of a particular field tends to require years of studying previous research (although hopefully not as bad as this). On the other hand, a lot of scientific and technological discoveries lean on tools and research from multiple fields, which necessitates people who understand a bunch of different things with various levels of detail.

If you look at two fields A and B, you can imagine a continuum between them with experts in just A and B at each end, and a range from people who do A and dabble in B, people who are equally into both, etc. The larger the inferential distance between A and B, the more points on the continuum you have to sample to bridge the gap, and thus the more interdisciplinary researchers you need.

This is then compounded by adding field C that has to interact with both A and B in the same way, etc. Assuming (extremely pessimistically) that every new field has to be fully bridged with every other field, and the inferential distance between fields keeps expanding, this gets exponential pretty fast. I don’t think this is exactly the case because it’s pretty unlikely that we’ll ever have to connect, say, marine biology to microchip design (although that does sound pretty cool). But it’s a first approximation.
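As a rough sketch of that first approximation: if every pair of fields needs its own bridge, the number of bridges is n(n-1)/2, which on its own is quadratic rather than strictly exponential; it grows faster only if the number of people needed per bridge also rises as fields drift apart. The function name `bridge_researchers` and the points-per-bridge values below are made-up assumptions for illustration, not measurements.

```python
from math import comb


def bridge_researchers(num_fields, points_per_bridge):
    """Researchers needed if every pair of fields gets its own bridge.

    points_per_bridge stands in for inferential distance: how many
    intermediate specialists are needed to span one pair of fields.
    """
    return comb(num_fields, 2) * points_per_bridge


for n in (2, 5, 10, 20):
    fixed = bridge_researchers(n, points_per_bridge=3)    # distance stays constant
    growing = bridge_researchers(n, points_per_bridge=n)  # assumed: distance grows with n
    print(f"{n:>2} fields: {fixed:>4} with fixed distance, {growing:>5} with growing distance")
```

With ten fields there are 45 pairs, so 135 bridge researchers at three per bridge and 450 if each bridge needs ten.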

As a concrete example, my dad makes a living by being that one guy who knows everything about how to set up design software environments for analog VLSI engineers. (I think. If I understand him correctly. Also he does do other things, but you get the point.) So he’s not exactly a software guy, and not an engineer or a designer either, but to do his job he has to be somewhere in between those, and be able to talk to both. Another good example is applied ML researchers in a particular domain, like computer vision for analyzing crop quality using hyperspectral imaging. (I swear this is a thing.) They’re not ML researchers working on entirely new algorithms, not exactly domain experts in crops, and they’re not developing new hyperspectral cameras or researching how they work. They just have to know a lot about each of them to do their jobs. And they have to be able to read through new ML research to stay on top of their field, and talk about crops with the crop people and camera design with the people designing their cameras.

On a more abstract level, I think what’s going on is that as our body of knowledge grows, the search space we can access also grows. As the domain-specific problems we’re trying to solve get harder because we exhaust the low-hanging fruit inside the domain, we have to look at more of the search space for tools we can use. This ends up requiring more researchers just to be able to look at all the things.

It’s not just research either: people have been studying for longer, so they only start to work later in life.

>it’s pretty unlikely that we’ll ever have to connect, say, marine biology to microchip design

Unless we want to design a cyborg-fish or something. That doesn’t sound like such an urgent civilizational need, but I’m not so eager to say we’re not ever likely to do that. And thinking about it, that doesn’t even have to be a fish – a biologically and cybernetically enhanced human adapted for living in the sea will do just as well.

That all sounds right, but isn’t this just a formalization of what we mean by “using up all the low-hanging fruit”? As the frontier of knowledge gets further and further away, it’s harder to get to the frontier, harder to push against it, and harder to effectively train others when you do make progress. This is what we mean by reducing returns to scale.