[This is an entry to the 2019 Adversarial Collaboration Contest by Nick D and Rob S.]

I.

Nick Bostrom defines existential risks (or X-risks) as “[risks] where an adverse outcome would either annihilate Earth-originating intelligent life or permanently and drastically curtail its potential.” Essentially this boils down to events where a bad outcome lies somewhere in the range of ‘destruction of civilization’ to ‘extermination of life on Earth’. Given that this has not already happened to us, we are left in the position of making predictions with very little directly applicable historical data, and as such it is a struggle to generate and defend precise figures for probabilities and magnitudes of different outcomes in these scenarios. Bostrom’s introduction to existential risk provides more insight into this problem than there is space for here.

There are two problems that arise with any discussion of X-risk mitigation. Is this worth doing? And how do you generate the political will necessary to handle the issue? Due to scope constraints this collaboration will not engage with either question, but will simply assume that the reader sees value in the continuation of the human species and civilization. The collaborators see X-risk mitigation as a “Molochian” problem, in that we blindly stumble into these risks in the process of maturing our civilisation, or perhaps as a twist on the tragedy of the commons. Everyone agrees that we should try to avoid extinction, but nobody wants to pay an outsized cost to prevent it. Coordination problems have been solved throughout history, and the collaborators assume that as the public becomes more educated on the subject, more pressure will be put on world governments to solve the issue.

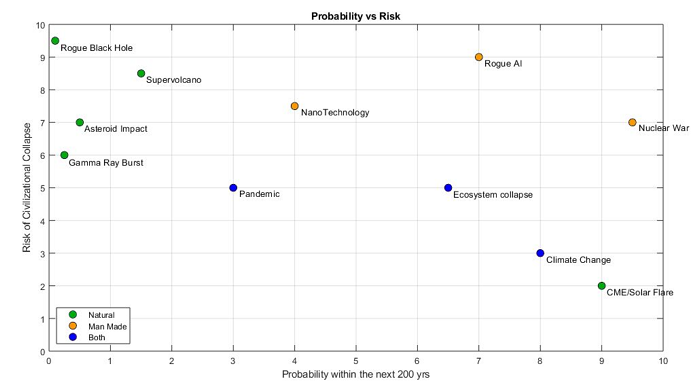

Exactly which scenarios should be described as X-risks is impossible to pin down, but on the chart above, the closer a scenario sits to the top right, the more significant the concern. Since there is no reliable data on the probability of a civilization-collapsing pandemic or of many of the other scenarios, the true risk of any of them is impossible to determine. So all of the above scenarios should be considered dangerous, but for some of them we have already enacted preparations and mitigation strategies. World governments are already preparing for X-risks such as nuclear war or pandemics by leveraging conventional mitigation strategies like nuclear disarmament and WHO funding. When applicable, these strategies should be pursued in parallel with the strategies discussed in this paper. However, for something like a gamma ray burst or grey goo scenario, there is very little that can be done to prevent civilizational collapse. In these cases, the only effective remedy is the development of closed systems. Lifeboats. Places for the last vestiges of humanity to hide, survive, and wait for the catastrophe to burn itself out. There is no guarantee that any particular lifeboat would survive. But a dozen colonies scattered across every continent or every world could allow humanity to rise from the ashes of civilization.

Both authors of this adversarial collaboration agree that the human species is worth preserving, and that closed systems represent the best compromise between cost, feasibility, and effectiveness. We disagree, however, on whether the lifeboats should be terrestrial or offworld. We’re going to go into more detail on the benefits and challenges of each, but in brief the argument boils down to whether we should aim more conservatively by developing the systems terrestrially, or ‘shoot for the stars’, build an offworld base, and reap the secondary benefits.

II.

For the X-risks listed above, there are measures that could be taken to reduce the risk of them occurring, or to mitigate against the negative outcomes. The most concrete steps taken so far to mitigate against X-risks would be the creation of organisations like the UN, intended to disincentivize warmongering behaviour and reward cooperation. Similarly, the World Health Organisation and agreements like the Kyoto Protocol serve to reduce the chances of catastrophic disease outbreaks and climate change respectively. MIRI works to reduce the risk of rogue AI coming into being, while space missions like the B612 Foundation’s Sentinel telescope seek to spot incoming asteroids from space.

While mitigation attempts are to be lauded, and expanded upon, our planet, global ecosystem, and biosphere are still the single point of failure for our human civilization. Creating separate reserves of human civilization, in the form of offworld colonies or closed systems on Earth, would be the most effective approach to mitigating against the worst outcomes of X-risk.

The scenario for these backups would go something like this: despite our best efforts to reduce the chance of a given catastrophe, it occurs, and efforts to protect and preserve civilization at large fail. Thankfully, our closed system or space colony has been specifically hardened to survive the worst we can imagine, and a few thousand humans survive in their little self-sufficient bubble, hoping to retain existing knowledge and technology until they have grown enough to resume the advancement of human civilization, and the species/civilization loss event has been averted.

Some partial analogues come to mind when thinking of closed systems and colonies: the colonisation of the New World, Antarctic exploration and scientific bases, the Biosphere 2 experiment, the International Space Station, and nuclear submarines. These do not all exactly match the criteria of a closed system lifeboat, but lessons can be learned.

One of the challenges of X-risk mitigation is developing useful cost/benefit analyses for various schemes that might protect against catastrophic events. Given the uncertainty inherent in the outcomes and probabilities of these events, it can be very difficult to pin down the ‘benefit’ side of the equation; if you invest $5B in an asteroid mitigation scheme, are you rescuing humanity in 1% of counterfactuals or are you just softening the blow in 0.001% of them? If those fronting the costs can’t be convinced that they’re purchasing real value in terms of the future then it’s going to be awfully hard to convince them to spend that money. Additionally, the ‘cost’ side of the equation is not necessarily simple either, as many of the available solutions are unprecedented in scale or scope (and take the form of large infrastructure projects famous for cost-overruns). The crux of our disagreement ended up resting on the question of cost/benefit for terrestrial and offworld lifeboats, and the possibility of raising the funds and successfully establishing these lifeboats.
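As a rough illustration of how wide that uncertainty is, the sketch below divides the hypothetical $5B cost by the share of counterfactuals rescued in each case. It assumes, as a simplification, that “rescuing humanity in X% of counterfactuals” can be read as an X-percentage-point reduction in extinction risk; the function name `cost_per_point` is an illustrative choice, not an established metric.

```python
def cost_per_point(cost_usd: float, counterfactuals_rescued: float) -> float:
    """Dollars spent per percentage point of extinction risk removed.

    counterfactuals_rescued is a fraction: 0.01 means 1% of counterfactuals.
    Treating it as a risk reduction is a simplifying assumption.
    """
    return cost_usd / (counterfactuals_rescued * 100)


SCHEME_COST = 5e9  # the hypothetical $5B asteroid-mitigation scheme

optimistic = cost_per_point(SCHEME_COST, 0.01)      # rescues 1% of counterfactuals
pessimistic = cost_per_point(SCHEME_COST, 0.00001)  # rescues 0.001%

print(f"optimistic:  ${optimistic:,.0f} per percentage point")
print(f"pessimistic: ${pessimistic:,.0f} per percentage point")
print(f"the two readings differ by a factor of {pessimistic / optimistic:,.0f}")
```

The same $5B buys value that differs by a factor of a thousand depending on which reading is true, which is exactly what makes such schemes hard to sell to whoever is fronting the costs.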

III.

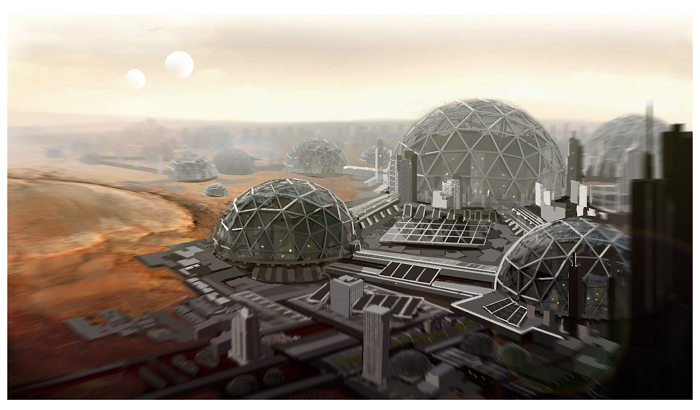

The two types of closed systems under consideration are offworld colonies and planetary closed systems. An offworld colony would likely be based on some local celestial body, perhaps Mars or one of Jupiter’s moons. For an offworld colony, X-risk mitigation wouldn’t be the only point in its favor. A colony could also provide secondary and tertiary benefits by acting as a research base and exploration hub, and possibly by taking advantage of other opportunities offered by off-planet environments.

In terms of X-risk mitigation, these colonies would work much the same as the planetary lifeboats, where isolation from the main population provides protection from most disasters. The advantage would lie in the extreme isolation offered by leaving the Earth. While a planetary lifeboat might allow a small population to survive a pandemic, a nuclear/volcanic winter, or catastrophic climate change, other threats such as an asteroid strike or nuclear strikes themselves would retain the ability to wipe out human civilization in the worst case.

Offworld colonies would provide near-complete protection from asteroid strikes and threats local to the Earth such as pandemics, climate catastrophe, or geological events, as well as being out of range of existing nuclear weaponry. Climate change wouldn’t realistically be an issue on Mars, the Moon, or anywhere else in space, pandemics would be unable to spread from Earth, and the colonies would probably be low-priority targets should nuclear war break out. Eradicating human civilisation by asteroid would require enough strikes to hit every colony, astronomically reducing the odds.
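The “every colony” argument is a redundancy calculation. A minimal sketch, assuming (as a strong simplification) that each colony is lost independently with the same probability; the 10% figure and the function name are hypothetical, chosen only for illustration:

```python
def prob_all_lost(p_single: float, n_colonies: int) -> float:
    """Probability that every colony is lost, assuming independent failures."""
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if n_colonies < 1:
        raise ValueError("need at least one colony")
    return p_single ** n_colonies


# Hypothetical 10% chance that any single colony is destroyed.
for n in (1, 2, 4, 12):
    print(f"{n:>2} colonies: P(all lost) = {prob_all_lost(0.1, n):.0e}")
```

Correlated failures, such as a shared design flaw or a single event large enough to reach every site, break the independence assumption and would leave the real figure well above `p_single ** n_colonies`.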

Historically, the only successful drivers for human space presence have been political, the Space Race being the obvious example. I would attribute this to a combination of two factors: human presence in space doesn’t increase the value of possible scientific research enough to offset the cost of supporting people there, and no economically attractive proposals exist for human space presence. As such, the chances of an off-planet colony being founded as a research base or economic enterprise in the near future are low. This leaves such colonies in a similar position to planetary lifeboats, which also fail to provide an economic incentive or research prospects beyond studying the colony itself. To me this suggests that the argument between the two possibilities turns on the trade-off between the costs of establishing a colony on or off planet, and the risk mitigation each would provide.

The value of human space presence for research purposes is only likely to decrease as automation and robotics improve, while for economic purposes, as access to space becomes cheaper, it may be possible to come up with some profitable activity for people off-planet. The most likely options would involve some kind of tourism or, if the colony were orbital, zero-g manufacturing of advanced materials, while an unexpectedly attractive proposal would be to offer off-planet retirement homes for the ultra wealthy (to reduce the strain of gravity on their bodies in an already carefully controlled environment). It seems unlikely that any of these activities would be profitable enough to justify an entire colony, but they could at least offset some of the costs.

Perhaps the closest historical analogue to these systems would be the colonisation of the New World. The length of the trip was comparable (two months for the Mayflower, at least six months to reach Mars), and isolation from home was further compounded by the expense of, and lead time on, mounting additional missions. Explorers traveling to the New World disappeared without warning multiple times, presumably due to the difficulty of sending for external help when unexpected problems were encountered. Difficulties with these kinds of unknown unknowns were encountered during the Biosphere projects as well: it transpired that trees grown in enclosed spaces won’t develop enough structural integrity to hold their own weight, as it is the stresses due to wind that cause them to develop this strength. This does not appear to have been on anyone’s radar before the project began. Several other unforeseen issues also had to be solved, and the running theme was that in an emergency, supplies and assistance could come from outside to solve the problem. A space-based colony would have to solve problems of this kind with only what was immediately to hand. With modern technology, assistance in the form of information would be available (see Gene Kranz and Ground Control’s rescue of Apollo 13), but lead times on space missions mean that even emergency flights to the ISS, for which travel time could be as little as ten minutes, aren’t really feasible. As such, off-planet lifeboats would be expected to suffer more from unexpected problems than terrestrial lifeboats, and to be more likely to fail before there was even any need for them.

The other big disadvantage of a space colony is the massively increased cost of construction. Elon Musk’s going estimate for a ‘self sustaining civilization’ on Mars is $100B – $10T, assuming that SpaceX’s plans for reducing the cost of transport to Mars work out. To offer an apples-to-apples comparison with the terrestrial lifeboat considered later in this collaboration, if Musk’s estimate for a self-sustaining city of one million people is scaled down to the 4000 families considered below (a population of 16,000), our cost estimate comes down to $1.6B – $160B. Bearing in mind that this covers only the transport of the requisite mass to Mars, we would expect the total to be higher once development and construction costs are included. With sufficient political will, these kinds of costs can be met; the Apollo program cost an estimated $150B in today’s money (why the cost of space travel for private and government-run enterprises has changed so much across sixty years is an exercise left to the reader). Realistically, though, it seems unlikely that any political crisis will occur to which the solution seems to be a second space race of similar magnitude. This leaves the colonization project in the difficult position of trying to discern the best way to fund itself. Can enough international coordination be achieved to fund a colonization effort in a manner similar to the LHC or the ISS (but an order of magnitude larger)? Will the ongoing but very quiet space race between China, what’s left of Western space agencies’ human spaceflight efforts, and US private enterprise escalate into a colony race? Or will Musk’s current hope of ‘build it and they will come’ result in access to Mars spurring massive private investment in Martian infrastructure projects?
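The scaling step can be checked with a few lines of arithmetic. This sketch assumes, as the text implicitly does, that cost scales linearly with population and that 4000 families means four people per family:

```python
def scale_cost(total_cost: float, full_population: int, target_population: int) -> float:
    """Scale a cost estimate linearly with population (a simplifying assumption)."""
    return total_cost * target_population / full_population


MUSK_LOW, MUSK_HIGH = 100e9, 10e12  # Musk's $100B - $10T range
CITY_POP = 1_000_000                # population of his self-sustaining city
LIFEBOAT_POP = 4000 * 4             # 4000 families of four = 16,000 people

low = scale_cost(MUSK_LOW, CITY_POP, LIFEBOAT_POP)
high = scale_cost(MUSK_HIGH, CITY_POP, LIFEBOAT_POP)
print(f"${low / 1e9:.1f}B - ${high / 1e9:.0f}B")  # prints "$1.6B - $160B"
```

Linear scaling is probably generous to the smaller colony, since fixed costs such as developing the transport system don’t shrink with headcount.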

IV.

Planetary closed systems would be exclusively focused on allowing us to survive a catastrophic scenario (read: “zombie apocalypse”). Isolated using geography and technology, Earth-based closed systems would still have many similarities to an offworld colony. Each lifeboat would need to make its own food, water, energy, and air. People would be able to leave during emergencies like a fire, an O2 failure, or a heart attack, but the community would generally be closed off from the outside world. Once the technology has been developed, there is no reason other countries couldn’t replicate the project. In fact, it should be encouraged. Multiple communities located in different regions of the world would have three big benefits: diversity, redundancy, and sovereignty. Allowing individual countries to make their own decisions permits different designs with no common points of failure, and if one of the sites does fail, the other communities will still survive. Site locations should be chosen based on:

● Political stability of the host nation

● System implementation plan

● Degree of exposure to natural disasters

● Geographic location

● Cultural diversity

There is no reason a major nation couldn’t develop a lifeboat on its own, but considering the benefits of diversity, smaller nations should be encouraged to develop their own projects through UN funding and support. A UN committee made up of culturally diverse nations could be charged with examining grant proposals using the above criteria. In practice, this would mean a country would go before the committee and apply for a grant to help build its lifeboat.

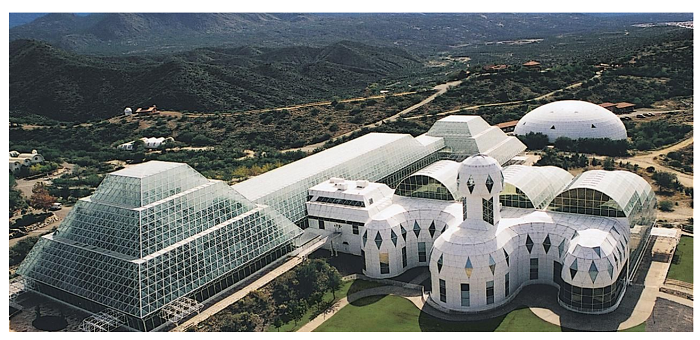

Let’s say the US has selected Oracle, Arizona as a possible site for an above-ground closed system. The proposal points out that the site’s cool, dry air minimizes decomposition, that it is located far from major cities and nuclear targets, and that it would be protected and partially funded by the United States. The committee reviews the request and its only concern is the periodic earthquakes in the region. To improve the quality of its bid, the United States adds a guarantee that the town’s demographics would be reflected in the system by committing to a 40% Latino population. The committee considers the cultural benefits of the site, and approves the funding.

Oracle, Arizona wasn’t a random example. In fact, it’s already the site of the world’s largest Closed Ecological System (CES): Biosphere 2. As described by acting CEO Steve Bannon:

Biosphere 2 was designed as an environmental lab that replicated […] all the different ecosystems of the earth… It has been referred to in the past as a planet in a bottle. It does not directly replicate earth [but] it’s the closest thing we’ve ever come to having all the major biomes, all the major ecosystems, plant species, animals etc. Really trying to make an analogue for the planet Earth.

I feel like I need to take a moment to point out that that was not a typo, and the quote above is provided by that Steve Bannon. I don’t know what else to say about that other than to acknowledge how weird it is (very).

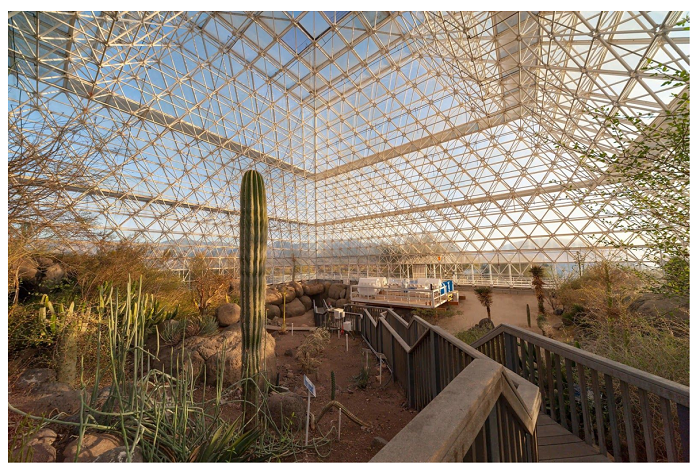

As our friend Steve “Darth Vader” Bannon points out, what made Biosphere 2 unique is that it was a Closed Ecological System in which 8 scientists were sealed into an area of around 3 acres for a period of 2 years (Sept. 26, 1991 – Sept. 27, 1993). There are many significant differences between the Biosphere 2 project and a lifeboat for humanity; Biosphere 2 contained a rainforest, for example. But the project was the longest a group of humans has ever been cut off from Earth (“Biosphere 1”). Our best view into the issues future citizens of Mars may face is through the glass wall of a giant greenhouse in Arizona.

One of the major benefits of using terrestrial lifeboats as opposed to offworld colonies is that if (when) something goes wrong, nobody dies. There is no speed-of-light delay for problem solving, outside staff are available to provide emergency support, and in the event of a fire or gas leak, everyone can be evacuated. In Biosphere 2, something went wrong. Over the course of 16 months the oxygen level in the Biosphere dropped from 20.9% to 14.5%. At the lowest levels, scientists reported trouble climbing stairs and an inability to perform basic arithmetic. Outside support staff had liquid oxygen transported to the biosphere and pumped in.

A 1993 New York Times article “Too Rich a Soil: Scientists find Flaw That Undid The Biosphere” reports:

A mysterious decline in oxygen during the two-year trial run of the project endangered the lives of crew members and forced its leaders to inject huge amounts of oxygen […] The cause of the life-threatening deficit, scientists now say, was a glut of organic material like peat and compost in the structure’s soils. The organic matter set off an explosive growth of oxygen-eating bacteria, which in turn produced a rush of carbon dioxide in the course of bacterial respiration.

Considering that a Martian city would need to rely on the same closed system technology as Biosphere 2, it seems that a necessary first step for a permanent community on Mars would be to demonstrate the ability to develop a reliable, sustainable, and safe closed system. I reached out to William F. Dempster, the chief engineer for Biosphere 2. He has been a huge help and provided tons of papers that he authored during his time on the project. He was kind enough to point out some of the challenges of building closed systems intended for long-term human habitation:

What you are contemplating is a human life support pod that can endure on its own for generations, if not indefinitely, in a hostile environment devoid of myriads of critical resources that we are so accustomed to that we just take them for granted. A sealed structure like Biosphere 2 [….] is absolutely essential, but, if one also has to independently provide the energy and all the external conditions necessary, the whole problem is orders of magnitude more challenging.

The degree to which an off-planet lifeboat would lack resources compared to a terrestrial one would depend on the kind of disaster that occurred. In some cases, such as a pandemic, it could be feasible to eventually venture out and recover machines, possibly some foods, and air and water (all with appropriate sterilization). In the case of an asteroid strike or nuclear war at a civilization-destroying level, however, the lifeboat would have to withstand much the same conditions as an off-planet colony, as these are the kinds of disasters in which the Earth could conceivably become nearly as inhospitable as the rest of the solar system. To provide a level of X-risk protection similar to an off-planet colony in these situations, the terrestrial lifeboat would need to meet the full challenge Dempster describes.

While Biosphere 2 is in many ways a good analogue for some of the challenges a terrestrial closed system would face, there are many differences as well. First, Biosphere 2 was intended to maintain a living, breathing ecosystem, while a terrestrial lifeboat would be able to leverage modern technology to save on costs, and cost is really the biggest selling point of a terrestrial lifeboat. A decent mental model could be a large, relatively squat building with an enclosed central courtyard, something like the world’s largest office building. It cost 1 billion dollars in today’s money to build, and bought us 6.5 million sq ft of floor space: enough for 4000 families to each have a comfortable 2-bedroom home. A lifeboat would have additional expenses for food and energy generation, as well as needing medical and entertainment facilities, but the building itself could have a construction cost of around $250,000 per family. For comparison, the median US home price is $223,800.
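The per-family figures follow from simple division. A quick check using only the numbers quoted above (the building cost here excludes the food, energy, medical, and entertainment facilities the text mentions):

```python
BUILDING_COST = 1e9       # ~$1B in today's money
FLOOR_AREA_SQFT = 6.5e6   # 6.5 million sq ft
FAMILIES = 4000
MEDIAN_US_HOME = 223_800  # median US home price quoted above

cost_per_family = BUILDING_COST / FAMILIES
area_per_family = FLOOR_AREA_SQFT / FAMILIES

# prints "$250,000 and 1,625 sq ft per family"
print(f"${cost_per_family:,.0f} and {area_per_family:,.0f} sq ft per family")
# prints "1.12x the median US home price"
print(f"{cost_per_family / MEDIAN_US_HOME:.2f}x the median US home price")
```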

There is one additional benefit that can’t be overlooked. The closed nature of the community, its tech-centric lifestyle, and its subsidized cost of living would create a natural draw for software research, development, and technology companies. Creating a government-sponsored technology hub would give young engineers a small city in which to congregate, sparking new innovation. This wouldn’t and shouldn’t be a permanent relocation. In good times, with low risks, new people could be continuously brought in and cycled out, with lockdowns only occurring in times of trouble. The X-risk benefits are largely dependent on the facilities themselves, but the facilities will naturally have nuclear fallout and pandemic protection, as well as a certain amount of protection from inclement weather and climate. Depending on the facility, there could be (natural or designed) radiation protection. Overall, a planetary system of lifeboats would be able to survive anything an offworld colony would survive, outside of a rogue AI or grey goo scenario. At the same time, the facilities would have a very low likelihood of a system failure resulting in massive loss of life, as could happen in a Martian colony.

V.

To conclude, we decided that terrestrial and off-planet lifeboats offer very similar amounts of protection from X-risks, with off-planet solutions adding a small amount of additional protection in certain scenarios whilst being markedly more expensive than a terrestrial equivalent, with additional risks and unknowns in the construction process.

The initial advocate for off-planet colonies now concedes that the additional difficulties associated with constructing a space colony argue for successfully constructing terrestrial lifeboats before attempts are made to construct one on another body. The only reason to still countenance a space colony at all is an issue which revealed itself to the advocate for terrestrial biospheres towards the end of the collaboration. A terrestrial lifeboat could easily be discontinued and abandoned if funding or political will failed, whereas a space colony would be very difficult to abandon due to the astronomical (pun intended) expense of transporting every colonist back. A return trip for even a relatively modest number of colonists would require billions of dollars allocated over several years by, most importantly, multiple sessions of a congress or parliament. This creates a paradigm in which a terrestrial lifeboat, while less expensive and in many ways more practical, could never be a long-term guarantor of human survival due to its ease of decommissioning (as was seen in the Biosphere 2 incident). To be clear, the advocate for terrestrial lifeboats considers this single point sufficient to decide the debate in its entirety and concedes the debate without reservation.

“Everyone agrees that we should try to avoid extinction”

You actually lost me there. Extinction, if it happens, is unavoidable, particularly if you are of a religious persuasion!

Consider the analogy of personal death.

I consider my personal death to be unavoidable in the long run.

But if I were in a small room with a lit stick of dynamite, I would try to get the dynamite away from me, me away from the dynamite, or both. Because while I know that some day I will die, I don’t have a pressing desire to die right now. Being cuddled up to exploding dynamite is therefore contra-indicated.

It’s not that I expect to succeed in avoiding death forever, but I have every intention of procrastinating my own death.

Similar logic applies to the prospect of the extinction of the human species.

I think most people are concerned about, say, the extinction or near-extinction of all life on Earth. But my guess would be that it’s mostly only a certain kind of atheistic WEIRD sci-fi nerd who would derive much comfort from the thought that, although life on Earth — everyone they ever knew or loved and all their descendants — is about to be extinguished, a small community on Mars was scraping by and might eventually repopulate the Earth. Of course, atheistic WEIRD sci-fi nerds are heavily overrepresented at Slate Star Codex, hence the apparent inability of the collaborators to conceive of other points of view.

My leading thought would be that instead of allocating massive resources to increasing the odds that a 100% extinction scenario is only a 99.999999% (give or take a 9) extinction scenario, maybe we should consider whether those resources would be better spent reducing the probability of that extinction scenario, if only slightly.

It’s a good point that the pay-off for prevention is vastly better than for mitigation. But the back-up colony has the “one weird trick” appeal: it’s a way to hedge against almost any and all extinction events, rather than one particular kind.

1. It’s very doubtful whether the colony would be robust enough to actually survive if bad things happened to Earth

2. It seems extremely likely to me that the colony will be ‘intimate’ with Earth, which makes it susceptible to some extinction events (for example, I don’t think that the colony is going to forgo AI technology if Earth invents progressively more powerful variants).

For the near term, at least, the extraterrestrial lifeboats are likely to be isolated by long travel time and infrequent launch windows. And possibly bandwidth and latency issues, for AI threats. But Martian computers are going to be six months to two years behind Earth’s(*), and so probably won’t be able to run the v1.0 AGI until we’ve seen what the v1.0 AGI will do in the wild. And any visitors will have gone through a de facto six-month quarantine for any incipient pandemic.

For a terrestrial lifeboat to offer comparable survivability, it would have to impose similar isolation as a matter of policy. If the idea is that the “lifeboat” is going to have free trade and commerce with the rest of the world, its cosmopolitan WEIRD population coming and going as they please, but on the day of the Apocalypse they’re going to seal the doors and everyone who happens to be inside will be the new Adams and Eves, then I agree that this isn’t likely to work.

* Or possibly vice versa, if the zero-G foundries off Phobos make Mars the new center of the computer industry. But either way works.

In addition to isolation, doesn’t a terrestrial lifeboat also need pretty extensive security? If the extinction event is something that doesn’t actually wipe everyone out all at once but is instead a slow burn, there are going to be a lot of people banging on those doors.

location, location, location.

If it’s a real X-risk scenario and this is going to be the only safe place, the occupants are going to have people banging on the door: a mob, if it is in a publicly known area… and a head of state and a few military personnel with bolt cutters etc. if it’s a better-kept secret.

Hopefully the food supplies meant to feed 4k will stretch to another few thousand.

Is a “Terrestrial Lifeboat” just another name for a well-stocked bunker?

My guess is quite the opposite.

“Everyone agrees that we should try to avoid extinction”

Actually, no. It’s one thing to control the Overton Window, and another thing altogether to outright deny the existence of arguments and positions outside of it.

“…but nobody wants to pay an outsized cost to prevent it.”

I want to pay no costs, period. (Yes, if it happens during our lifetimes, there is some reason to pay some cost for reduced probability, to save ourselves and the people we know and love. That is a very different goal from saving the species/civilization, with a very different cost-benefit calculation.) Humanity as a whole is not worth saving.

I don’t think this is really an important distinction. “Everyone wants to avoid extinction” and “almost everyone except the .001% of the population that is severely misanthropic wants to avoid extinction” are basically equivalent here — doesn’t really change the calculations.

In the age of populism, you’re probably right: “Everyone agrees with me, and those few who don’t are stupid and evil anyways, so they don’t count.” Nice rhetorical trick. (Also with the made-up numbers, I’ll have to remember that one.)

In fact, you can use it regardless of the numbers. Of course, it’s not just .001% of the population and you don’t have to be immoral for it. There are even altruistic reasons not to prevent extinction. But it’s not your preferred view, so instead of being content that you control the Overton Window, you have to rhetorically negate the very possibility that there might be people with a different opinion who don’t happen to be stupid and evil.

I’ll give it a pass though, there’s worse people using worse populism for worse reasons, and I’ll focus my revenge budget on them.

Humanity as we know it is the only vaguely plausible mechanism to create gods-worthy-of-the-name (not demons-worthy-of-the-name, or blahs-worthy-of-the-name), as well as preserve all life in the universe (or at least continue the line of meaning for the struggles of that life), and/or create new universes friendly to life (of any kind, not just as we know it).

Is a truly loving, caring, wise, and good-intentioned God not worth saving?

Given these plausible truths, I am curious to know why you think humanity is not worth saving, and why you added the qualifier “as a whole”.

I’m sorry – but – the risk of AI is barely lower than the risk of climate change, and the risk of nuclear war occurring is higher than the risk of climate change? Are the probabilities on the Y axis just made up for illustrative purposes?

There’s no source on it, so I suspect yes. It’s fine – it doesn’t really undermine the broader dialogue.

The most worrying of those probabilities is Nuclear War: almost certain, about 95%! I think they overestimate it. AFAIK, there were just 2 times when we were on the brink of starting a nuclear war, both during the Cold War, and both times it was avoided. 2 times in over 70 years would mean about 6 times in 200 years (probably fewer without a cold war), and to me that implies a probability far lower than 95%.
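One way to connect a count of near-misses to the chart’s 95% figure is a simple Poisson model. This sketch is hypothetical, not the commenter’s or the authors’ method: it assumes near-misses arrive at a constant rate and that each one independently escalates to war with the same probability, then solves for the escalation probability the 95% figure would require.

```python
import math

NEAR_MISSES = 2      # near-misses counted in the comment
YEARS_OBSERVED = 70  # "over 70 years"
HORIZON = 200        # the chart's 200-year horizon

# Naive rate scaling: about 5.7 expected near-misses over the horizon.
expected_near_misses = NEAR_MISSES / YEARS_OBSERVED * HORIZON


def p_war(escalation_prob: float) -> float:
    """P(at least one nuclear war within the horizon) under the Poisson model."""
    return 1 - math.exp(-expected_near_misses * escalation_prob)


# Escalation probability per near-miss needed to reach the chart's 95%.
needed = -math.log(1 - 0.95) / expected_near_misses

print(f"expected near-misses in {HORIZON} years: {expected_near_misses:.1f}")
print(f"escalation probability needed for 95%: {needed:.2f}")
```

Under these assumptions, the 95% figure implies that roughly every second near-miss turns into an actual war, which is the gap the comment is pointing at.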

I also think they overestimate not the probability of climate change itself but the probability that climate change leads to the end of civilization within 200 years, at 30%. Do they think that climate change will lead to nuclear war?

Regarding the AI risk, IMO the best way to avoid it is to treat it like climate change and nuclear weapons: international treaties of non-proliferation. Research to create an AGI should be forbidden globally, any AI research should need approval of some board that makes sure it can’t result in an AGI – and scientists who research AGI secretly should be, if not imprisoned, at least exiled on a far away island where there are no computers/tablets/smartphones. That would delay technological progress, but when the alternative is >50% chance of humanity extinction in 200 years… (90% of 70% = 63% in this article)

Delaying AGI globally would be desirable not just from an x-risk but from a presentist safety POV as well; AGI has more risks than benefits for us present people. It’s just hard to coordinate an effective global ban. Needless to say, lifeboats won’t help with that at all.

I think it is easier to obtain an international agreement for AGI non-proliferation than for climate change or nuclear weapons, because fossil fuels and nuclear weapons are here and are proven (from a nationalistic perspective) useful in the short term, and it may seem somewhat hypocritical of developed countries to ask developing countries to abstain from something they themselves did. But AGI doesn’t yet exist, no country possesses it, so no country loses any present advantage by banning it.

The trouble is that we can directly observe the bad effects of fossil fuels (note the Arctic ice sheet melting). And we can observe the bad effects of nuclear weapons (point to the giant smoking crater). We can say “if these things were used ten times as much, things would be ten times worse, so let’s agree to slow down.” And that’s a fairly convincing argument.

Because AGI is a technology that does not yet exist, it’s much more difficult to point to the bad consequences of AI going horribly wrong. There is no giant smoking crater, no ticking Geiger counter, no damning before/after picture of a receding glacier, no succession of “hottest years on record” recurring year after year.

So while there is no present benefit to having AGI that developed nations would have to give up to impose a ban, there is *also* no concrete, tangible evidence of how dangerous and destructive the technology could be. And without that, it’s hard to convince people to willingly give up the potential future benefits of better data processing and the ability to solve complex problems.

It’s not clear that “AGI” is a discrete concept, so essentially “banning AGI” just means that you are hampering your AI research in some way. Maybe countries don’t care that much about their high-level AI research, but it won’t just be a hypothetical question for them. (And if it is, then anti-AGI laws will never be enforced, until they seem to have practical implications at which point you have to consider political will again.)

I’m with Dacyn; I think the boundaries between “AI research” (presumably mostly harmless), “AGI research” (presumably dangerous), and “AGI safety/alignment research” (presumably actively good) are quite blurry, and it will be very difficult to make sensible distinctions between them in terms of banning research. Also, once you outlaw AGI research, only outlaws will research AGI, and they’ll be a lot harder to find than someone trying to enrich uranium in their backyard.

Let’s take your numbers on nuclear war as given. In the past there were two close calls and one time humans actually used nuclear weapons. That’s a first-order estimate of ~33% that a close call leads to nuclear weapon use. If there are 6 close calls in 200 years, then that’s a binomial distribution, and the probability of one or more nuclear wars is 91% (see https://stattrek.com/online-calculator/binomial.aspx).
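The 91% figure is the complement of a binomial zero count. Here is a minimal sketch, assuming each close call independently escalates with the same probability of 1/3 (the comment's first-order estimate):

```python
from math import comb

p_use = 1 / 3   # one actual use out of three events (two close calls plus WWII)
n_trials = 6    # close calls expected over 200 years

# P(at least one use) via the complement of "no use in any trial".
p_at_least_one = 1 - (1 - p_use) ** n_trials

# Same quantity as an explicit binomial sum over k = 1..n_trials uses.
p_sum = sum(
    comb(n_trials, k) * p_use**k * (1 - p_use) ** (n_trials - k)
    for k in range(1, n_trials + 1)
)

print(f"{p_at_least_one:.1%}")  # 91.2%
```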

Obviously in practice there’s a lot that doesn’t cover. The most obvious gaps, to me, are the greater number of nuclear-armed entities and the greater ease with which more actors can develop nuclear weapons.

It’s useful to note that in your example here you are defining WWII as a nuclear war. I don’t think India setting off a couple of nukes at the end of a war with Pakistan would present an X-risk.

There’s a rather significant difference in that Pakistan has the ability to say “No, damn you, this will not end with two nukes and ignominious surrender!” in a way that Japan didn’t. And for that matter, Pakistan has larger and more powerful allies than did Japan.

Nuclear war is not really an X-risk outside of omnicidal-dictator or suicidal-survivor scenarios, but Hiroshima+Nagasaki is an extreme outlier and future nuclear wars are likely to be much worse than that.

@a reader

This is where “everyone agrees we should avoid extinction” diverges. I agree that “intelligent civilizational life should avoid extinction”, so this forces me to choose between humans as they are and a hypothetical form of life that is smarter, more durable, and better equipped for colonizing the galaxy. You also don’t know that the only scenario is that we are eliminated by AGI. The other scenario is that we have a comfy fully automated retirement, as we either die off or are uplifted ourselves.

Secondarily, the idea of a bootstrapping singleton that will paperclip the world wanders into the territory of sufficiently advanced science as magic. If science really is slowing down, one of the main reasons may simply be that we’ve reached the end of physics, at least from a utilitarian perspective, and as a consequence there are no greater weapons to be created. This significantly limits the first-mover advantage for AI, because A: it’s not bootstrapping very far, and B: the advantage of further increases in simulation testing speed/intelligence diminishes for solved games, which a nearly complete physics is analogous to. In other words, if machines go to war, they won’t be dissolving the planet with vacuum energy; they’ll be lobbing cruise missiles at each other’s data centers. The X-risk is ultimately the same as for nuclear war, unless there is some special reason AGI will be more likely to start one than humans.

Thirdly, if AGI really is better than us in every way with no disadvantages whatsoever (brains are costly; office-block-sized ones even more so), then eventually it will triumph. I don’t think treaties will be enough when you consider the hypothetical advantages this scenario depends on. Israel’s nukes are an open secret. If they fear Iran getting AGI and wiping them out with magic hyperscience, will they build AGI in secret and violate the treaty? What will China do vs. the USA? Agree to the treaty but then build one anyway? Does the threshold for war go way down due to the risk? If we were already willing to invade Iraq based on a dodgy dossier relating to mere chemical weapons, how much lower will the standard of evidence be for AGI? It’s layers and layers of Molochian game theory stuff. If you take it seriously, international treaties barely suffice. This should take you in the direction of a global Luddite dictatorship, pressing technology down everywhere. How you get there without starting WW3 and accelerating AGI development in the process, I can’t say.

Would you eat a cake that has a 37% chance of being the best cake you ever tasted and a 63% chance of being poisonous?

It all depends on world leaders and scientists understanding that an AGI won’t be your slave, that it will inevitably become your master. The dictators, I think, would be especially disinclined to create their own masters.

Would you eat from a bowl of a million immortality pills if one of them were poisonous?

Yes; in fact, I’d eat at least two.

You can do a lot with our current knowledge when you actually have all of that knowledge, when you don’t care about anything other than rationally meeting your goal, and when you can quickly duplicate yourself with all your knowledge and the exact same goal.

No, but if we replace “best cake you ever tasted” with “makes you and all the people you care about into nearly invulnerable superbeings who live for millions of years” then I would perhaps take that bet. Of course, the bet is even easier with AGI because the equivalent of poison in the scenario isn’t entirely negative, but bitter-sweet. AGI becoming the dominant civilizational intelligence is still a win, at the cost of our species. Being killed off by your objectively superior child species who will carry on the legacy of intelligent life isn’t the worst way to go.

We also have to include the chance that AGI is not hyperintelligent, and instead we get something somewhat better than humans but with heavy constraints, something that can be used and carries out tasks efficiently, but is not able to supersede the will of its masters. This would still be highly beneficial even if it leads to robot armies, because it also leads to fully automated economies, which are basically as close to paradise on Earth as I can imagine. There’s a strong reason for the working class living paycheck to paycheck to desire a solution to the labor problem.

There’s still a lot of debate even among scientists as to what the risk is from AGI, and whether bootstrapping paper clip maximizers are even physically realistic, and how tremendous the benefits would be, so that would depend on those dictators listening to some scientists over others who can only give a loose assessment in any case. There’s a huge incentive for dictators to have perfectly loyal robot armies. Even if it’s a lot weaker than the desire not to create masters, if AGI is as dangerous as claimed, then a secret program only has to create an AGI once and it’s all over.

EDIT:

Also, suppressing programs specifically designed to create AGI may not be enough, because growth in computer power may enable consumer level computers to create AGI if the personal computers of 100 years hence are like the supercomputers of today. Even if it starts off not very intelligent, it’s the self-improvement aspect that is dangerous. You’d also have to start worrying about accidental creation at a certain point if you didn’t deliberately suppress computer technology worldwide. All predicated on the idea that bootstrapping hyperintelligences are possible to begin with, of course.

Still seems like a good part of the cake.

What I fear is that, for some reason, the AGI will be in horrible suffering a significant part of the time, won’t do anything about it because fixing it isn’t part of its goal, and will instead spread this suffering through the cosmos.

Or another scenario of this kind, in which you end up with horrible suffering spreading through our universe.

I don’t believe it is probable, but it is way too bad for me to not take it into account.

Why wouldn’t AGI reprogram itself to not suffer?

@Nicholas: Why would it? AIs just follow their code.

Though to be fair, if it has the opportunity and does not reprogram itself to not do a thing X, that seems like a way in which X is meaningfully different from what we usually call “suffering”.

What sort of suffering are you referring to?

I’m now imagining some kind of accidental masochist AI programmed to get some kind of positive feedback from preference violations in certain cases, programmed as a mechanism to help break out of local maxima, but gone horribly wrong until the AI just loves having its preferences violated as much as possible.

I am not referring to anything precise, only “subjective experiences with negative valence” (unsure it is any better than just “suffering”).

In case you are wondering why I think it could happen: I have some scenarios in mind. Most don’t seem very credible, but they aren’t impossible either.

My main point would be that we mostly don’t understand what consciousness or suffering is, there are a lot of unknown unknowns, and given that there will be no way to undo an AGI, it would be well worth reducing the probability of this kind of outcome by even 1%, even if it means delaying AGI by 100 years.

There are many game-theoretic reasons why the result for humanity could end up being far, far worse than extinction.

Given the position of “gamma-ray burst” and “rogue black hole”, it seems very probable it is only for illustration purposes.

Those points seem at least vaguely right to me, what am I missing?

Maybe I am the one missing something (which is probable), but otherwise what you are missing is how much this “vaguely” matters.

These points are OK if you think of them as illustrating something, but if you think of them as actual values plotted on a graph, they would mean, on average, at least:

10^6 * 2% / 200 = 100 gamma-ray bursts every million years

10^6 * 0.2% / 200 = 10 rogue black holes every million years!
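Both lines apply the same naive conversion, scaling a 200-year probability linearly to a million-year count. A minimal sketch, assuming the chart values were meant as literal per-200-year frequencies (which, as the comment argues, is probably not the case):

```python
def implied_events_per_million_years(p_per_200_years):
    """Linearly scale a 200-year probability to an expected count per 10^6 years."""
    return 1e6 * p_per_200_years / 200

gamma_ray_bursts = implied_events_per_million_years(0.02)    # chart: 2%
rogue_black_holes = implied_events_per_million_years(0.002)  # chart: 0.2%
print(gamma_ray_bursts, rogue_black_holes)  # 100.0 10.0
```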

Also I am wondering where the 5% chance of survival of human civilization could come from, considering something with the capacity to destroy the solar system.

So while on other questions I can imagine people having wildly different opinions, here it would seem strange if it weren’t just a way to illustrate something.

Hmm, we may have different ideas about what “made up for illustrative purposes” means. I think that the collaborators did attempt to place each point where they actually thought that it should go, but I don’t think they put that much effort into it. So the points are not completely random, but they also aren’t particularly reliable. I also think that the graph isn’t necessarily “to scale”, since it doesn’t have any units on it.

(ETA: I guess trivially you could say it is made up for illustrative purposes, since they don’t seem to be putting it to any other purpose.)

In any case, a 2% probability of gamma-ray bursts in 200 years doesn’t necessarily imply 100 in 1 million years: the 2% could be due to model uncertainty, with a 98% probability of very few gamma-ray bursts over a million-year period. And 5% presumably represents the chance that technology will advance enough faster than expected that we can escape the solar system. (Though I agree this is kind of high for a 200-year timeframe.)
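The model-uncertainty point can be made concrete with a two-hypothesis mixture. In this minimal sketch the weights and rates are hypothetical, chosen only so that the 200-year probability comes out near 2%: in the "quiet" world dangerous bursts essentially never happen, and in the "dangerous" world they arrive as a Poisson process with a made-up rate of one per 20 years.

```python
import math

# Hypothetical mixture over two models of the world (illustrative numbers only).
weights = {"quiet": 0.98, "dangerous": 0.02}
rate_per_year = {"quiet": 0.0, "dangerous": 0.05}  # Poisson rates, assumed

def p_none(rate, years):
    """Poisson probability of zero events in the given window."""
    return math.exp(-rate * years)

p_any_in_200 = sum(w * (1 - p_none(rate_per_year[m], 200)) for m, w in weights.items())
p_none_in_million = sum(w * p_none(rate_per_year[m], 1e6) for m, w in weights.items())

print(f"P(at least one in 200 years) = {p_any_in_200:.2%}")
print(f"P(none in a million years)   = {p_none_in_million:.2%}")
```

Under these assumptions the 200-year risk is about 2%, yet 98% of the modeled worlds see no burst at all in a million years; the large expected count comes entirely from the unlikely model, so the linear extrapolation to 100 bursts does not describe the typical outcome.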

Dacyn,

I co-wrote this ad-collab writing in favor of terrestrial lifeboats, and was primarily responsible for the chart in question. If I knew in advance the degree of (understandable) confusion the chart would cause, I would have reworked key parts of it. Or removed it entirely.

When my partner and I were initially exploring the collaboration, we realized that an off-world colony would protect against more X-risks than the terrestrial lifeboats. In order to help figure out the cost/benefit of each scenario, we ended up considering how likely each X-risk was to actually happen, as well as how likely it was to result in the end of civilization. For many of these risks, there was little or no hard data on their likelihood, and we instead charted them in a way that represented our degree of concern along those two axes. The axes are not intended to be read as a percent likelihood of occurrence.

I included the chart because we thought it was a good way of showing some of the X-risks we were thinking about, as well as my degree of concern. In hindsight, this was not a good way to communicate the information.

I think the “climate change” on the chart is referring specifically to climate change as an X-risk. Of course climate change is ongoing and will likely be very bad, but it’s unlikely to end civilization unless we set off a chain reaction and turn Earth into another Venus (which is the scenario Bostrom mentions). Of course a source for the chart would be helpful, and many of the probabilities do seem suspect even putting aside climate change.

It’s hard to graph climate change on these two axes, because the graph contemplates the probability of the risk happening at all versus the probability of the risk ending civilization if it does occur.

I think you’re right that for climate change (which is, after all, certain at all times), the authors probably mean something like “seriously disruptive climate change” for some large value of “seriously disruptive.”

Also, what’s going on with the x-axis? 10 is not a probability!

Replying to several comments: I work a lot on AI, security, privacy and ethics, and a little on AGI. I would posit that an AGI ban, if it could be achieved, would not outlast the moment that any of the great powers believed AGI could be used to build a more efficient boots-on-the-ground combat soldier than a human. Consider the thousands of airplane deaths within the US itself during WWII: in the face of a full-scale war, safety concerns just don’t mean the same thing as in peacetime.

Also: Do most rationalists seriously consider Climate Change a true X-Threat? Worst case seriously-considered scenarios I’ve seen seem like they would mean mass (non-human) extinctions, rebuilding a big fraction of our infrastructure at higher latitudes, and mass forced migration with maybe millions of deaths, but no true population bottleneck. Am I just reading the wrong stuff?

Counterexample: None of the parties which faced existential threats during WWII, ever attempted to use chemical weapons to defend against those threats. Even the Nazis, who had substantially better chemical weapons than their enemies, allowed themselves to be removed from existence rather than see if nerve gas might buy them a stalemate or draw.

One good thing for the life-boat colony in space is that a lot of the technologies that undermine the case for using humans for space science research – better robotics and automation – also potentially make it easier to set up colonies for humans off-world. If remotely controlled or even autonomous robots can do most of the work of setting up and maintaining the colonies (using off-world resources), then all you would need would be a sufficiently large population willing to live off world.

But until that point, I tend to agree with this:

You could still have a population of people who simply desire to live separate as a group from the greater human civilization, and will make the migration to the space life-boat as long as they can pay for it. But that might also undermine the value of the life-boat as a preservation means of civilization, since that group might very well be extremely atypical of Earth civilization and its societies.

I had no idea. That reminds me of the pilgrimage from Kim Stanley Robinson’s 2312 and Aurora, where humans in the Solar System have to make a trip back to Earth every decade or so lest they get sickly and die much younger than they otherwise would (think 50-60 extra years of life and good health, with people living 200-300 years on average).

He treated that more or less as an ineffable factor, but I think that if humans need stressors to develop properly, we can probably figure out how to simulate them – eventually. Gravity is one of the more interesting ones, because we still have no idea how much gravity (simulated or real) is necessary for human beings to stay healthy and properly develop. It could be lower than the Moon’s gravity*, or higher than Mars.

* I suspect not, though. We’ve done limited studies on artificial gravity, but the excellent Cool Worlds video on it said that below around 10% of Earth’s gravity, human beings find it hard to orient themselves relative to the ground.

I feel like the actual risks to be mitigated didn’t receive enough attention, and it affected the outcome adversely. For example, speaking as an immunologist with a microbiology background, I can say the risk of pandemic, while real, is also overstated. If we’re interested in existential risks, then something like a pandemic is highly unlikely to cause something like even a 95% kill-off rate. The only time we’ve seen that kind of kill-off rate was with the epidemics that swept through the New World after Columbus came to the Americas. Estimates are that these epidemics caused anywhere from a 95%-98% kill-off rate. (So if, a hundred years later, some of the survivors were wandering bands of nomads it’s understandable.)

However, the reason for this adverse response to the Columbian Exchange wasn’t some low-probability event. It was predictable based on initial conditions. The Americas were colonized by a relatively small founder population, with a limited complement of HLA. Therefore the most probable scenario was that any disease that affected one person would affect all the others.

Given this, it doesn’t seem likely that pandemic risk could be mitigated by creating a small, isolated founder population. There would need to be lots of interaction with the outside world, which the authors propose, but a lot of interaction is the opposite of what you’d need in order to harden against pandemic risk.

TL;DR: A lifeboat wouldn’t be able to protect against pandemic risk, since it would be more susceptible to this risk than the rest of the Earth. Pandemic risk is overstated as an existential risk, even if it is capable of causing severe global suffering. The two are not the same thing.

Which kind of pandemic are you thinking of – a naturally evolved disease, or one custom made by a human who intended to exterminate the human race?

Either. It’s harder than you’d think to get a high kill rate, even if you took something really bad, like smallpox and specifically engineered it to be nasty.

Please understand, I’m not saying that it’s hard to create a disease that kills a lot of people. The horror scenario isn’t as difficult as you’d think. But there’s a qualitative difference between a bioterrorism Holocaust and an existential threat to the species.

That is good to hear. It was something I occasionally worried about.

I’m wondering what kill rate is enough to cause our civilization to collapse as a going concern.

I think that percentage is a lot lower than 95%.

Of course, I don’t think a lifeboat would prevent this anyway.

Let’s assume we found a way to create a lifeboat that is hardened against pandemic risk. Since what we’re trying to avoid is X-risk we need a solution that is better than the alternative without the intervention. Let’s set the threshold at a 98% kill-off rate, and pretend that somehow happened. We went from almost 8 billion people down to about 160 million.

Plus one lifeboat of about 4,000 people.
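The arithmetic behind the 98% threshold, and how small a single lifeboat is next to the survivors, can be checked directly. A minimal sketch, taking the comment's round numbers (8 billion people, a 98% kill-off, one 4,000-person lifeboat) as given:

```python
population = 8_000_000_000  # "almost 8 billion", rounded up
kill_rate = 0.98            # threshold chosen in the comment
lifeboat = 4_000            # one lifeboat's population

survivors_on_earth = population * (1 - kill_rate)
lifeboat_share = lifeboat / (survivors_on_earth + lifeboat)

print(f"{survivors_on_earth:,.0f}")  # 160,000,000
print(f"{lifeboat_share:.4%}")       # 0.0025%
```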

This is the sort of concern that makes me think that a planetary colony isn’t really relevant for things like pandemic or climate change. It’s really hard to imagine a scenario on which life for the planetary colonists is easier than life for the post-apocalyptic people back on earth. Climate change would need to be remarkably severe and sudden to make any part of earth less livable than even the most favorable parts of Mars or the outer solar system moons or a floating bubble on Venus. And a pandemic would likely spread to the colony (if there are occasional people traveling back and forth and a reasonably long incubation period for the disease) and in any case would likely have more survivors on earth than the initial population of the colony.

How did the economic argument completely miss asteroid mining? There’s a reason metals like platinum are so rare in Earth’s crust: most of them sank deep into the Earth while it was forming. Where do you find all these important resources? In asteroids!

How much could you really make from asteroid mining? Here are the prices of a few platinum-group metals common in asteroids:

Ruthenium = $250/ozt

Palladium = $1,950/ozt

Platinum = $930/ozt

Now consider that we can mine each of these in asteroids by the ton. The haul on platinum alone would be enough to justify the colony, the other metals would be gravy. Of course, dumping a huge amount of rare metals onto the market would have unpredictable consequences for the price (it would drop, but then people find new uses for it, etc.) so the risk is high, which is part of the reason people haven’t tried it yet. But as the risk/cost ratio goes down, I expect this to become something that’s seriously discussed.
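To put "by the ton" in dollar terms, here is a minimal sketch converting the quoted per-troy-ounce prices into value per metric tonne of pure metal. It assumes the prices listed in the comment (not current market prices) and that "ton" means a metric tonne; refining losses and the price collapse the comment mentions are ignored.

```python
GRAMS_PER_TROY_OUNCE = 31.1034768  # exact definition of the troy ounce
GRAMS_PER_TONNE = 1_000_000

# Prices as quoted in the comment, USD per troy ounce.
prices_per_ozt = {"ruthenium": 250, "palladium": 1950, "platinum": 930}

def value_per_tonne(price_per_ozt):
    """Dollar value of one metric tonne of pure metal at a given troy-ounce price."""
    troy_ounces_per_tonne = GRAMS_PER_TONNE / GRAMS_PER_TROY_OUNCE
    return troy_ounces_per_tonne * price_per_ozt

for metal, price in prices_per_ozt.items():
    print(f"{metal}: ${value_per_tonne(price) / 1e6:.1f} million per tonne")
```

At these prices a tonne of platinum comes to roughly $30 million, before any market effects.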

Why harvest asteroids and bring them to somewhere like Mars? You can’t do Earth, because ‘stray asteroid’ is literally a civilization-ending x-risk. The moon is a little farther out there, but to be truly safe from incompetence or sociopaths, you need to be farther away, like on Mars.

“Isn’t asteroid mining dangerous? You have to go out into an asteroid field!” Space is dangerous. As long as you’re out there, an asteroid field is infinitesimally more dangerous than that. Contrary to what you’ve seen in the media, if you were standing on an asteroid (and light sources weren’t an issue) chances are low that you would be able to see any other asteroids in your vicinity. There are two reasons for this: 1.) space is big and even lots of asteroids in a massive asteroid belt are dwarfed by the vastness of space, and 2.) any asteroids that come near each other tend to congregate due to gravity. So some asteroids are basically big clumps of gravel, floating around in space. Naturally, we’d want to avoid those for harvesting, since tidal forces near any large planetary body would tear them apart…

So yes, there are challenges, but they’re far from insurmountable, at least from where we currently sit. And the rewards are massive.

Once economic viability enters the equation, I’d see Earth and near-Earth attempts at creating self-sufficient systems as a stepping stone to larger – profitable – ventures deeper into the solar system.

I suspect it will be cheaper and more efficient to automate asteroid mining once we are able to do it at all, and so it doesn’t present any cost savings to have a nearby colony. At most, the technology developed to do the one will make the other slightly cheaper.

If we can automate something as complex as asteroid mining without lots of engineers at or near the site we probably won’t need humans for anything anymore. Remember we can’t do remotely-piloted drones – even from Mars – because the time lag is too long. I suspect, especially in the early stages when we’re figuring things out, that a human presence will be required. And since the largest barrier is developing the technology to get people up there in a sustainable way, once we’re up there I suspect we’ll stay.

If this is the case, we’ll get a colony of sorts there for the work, and it will by necessity be sustainable in the short term, with the X-risk mitigation being a coincidental benefit.

Stay tuned for ACC entry number 7, then: “Will automation lead to economic crisis?” by Doug S and Erusian

But I’m envisioning something like a satellite to determine likely candidate rocks, then just launching it towards earth and perhaps taking it apart in orbit. Maybe that’s too close to causing X-risk, though.

Yeah, I think your intuition that this would cause X-risk is on point. I imagine that scenario would end with the asteroid unexpectedly breaking up due to tidal forces near Earth and ending human life as we know it.

Knowing that in advance, and knowing the savvy it would take to actually implement it, I assume the kind of people who would be capable of bringing a civilization-ending asteroid near the Earth would be smart enough to just set up shop on Mars, or at least the Moon. (Probably Mars, though, since it’s safer.)

Wikipedia turns up this reference on asteroid retrieval:

https://kiss.caltech.edu/final_reports/Asteroid_final_report.pdf

headdesk

Dropping asteroids on Mars to mine their platinum-group metals does nothing but make the process much more expensive. It would be far more efficient to do the mining in the asteroid belt itself. You would attach a space station with two habitat modules 1.8 kilometers apart, spinning for gravity, so robots can be tele-operated without light-speed lag, and refined metals would be loaded into the station’s hub to be transported to Earth by spacecraft.
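The 1.8 km spacing can be sanity-checked with the centripetal relation a = ω²r. A minimal sketch, assuming the modules sit at opposite ends of a structure rotating about the hub (so r = 900 m) and that the target is a full 1 g; the comment states neither:

```python
import math

STANDARD_GRAVITY = 9.80665   # m/s^2
separation_m = 1800          # modules 1.8 km apart
radius_m = separation_m / 2  # assumed: hub at the midpoint

# Centripetal acceleration a = omega^2 * r, solved for omega at a = 1 g.
omega = math.sqrt(STANDARD_GRAVITY / radius_m)  # rad/s
rpm = omega * 60 / (2 * math.pi)

print(f"{omega:.3f} rad/s = {rpm:.2f} rpm")  # 0.104 rad/s = 1.00 rpm
```

Under those assumptions the station turns at about one revolution per minute, comfortably below the few-rpm limits often cited for avoiding motion sickness.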

I guess the issue is whether it’s easier to do in situ than at an established base of operations. Mars doesn’t provide as much protection as Earth, but it’s better than nothing. Plus there’s water on Mars that can be used for fuel and other important purposes. You’re right that it takes resources to get to and from Mars, but escape velocity isn’t as much of a problem there as on Earth. Plus there’s no (meaningful) air resistance in space, so fuel costs are massively lower than they would be through atmosphere.

No, the issue is whether it is so much harder to do in situ as to outweigh the cost of moving an entire asteroid halfway across the inner solar system. Which, notwithstanding the fact that it takes only a sentence to describe and a few paragraphs to flesh out with enough detail for the casual reader to suspend disbelief, is nonetheless really really really really really hard and expensive. SF gets this wrong an awful lot.

If you’re going after platinum-group elements, you’re interested in maybe 0.001% of the asteroid’s mass. Which is comparable to the best terrestrial platinum ores, but nobody takes even the best unbeneficiated terrestrial platinum ores and sends them halfway across the continent to be refined. Even when you’re just paying rail-freight costs, you strip out almost all the non-PGE crap before you ship anything.

Near-term asteroid mining, if it happens, is probably going to look an awful lot like whaling. A ship goes out, grabs a bit of Mixed Stuff, then strips it down and renders it for just the Really Profitable Stuff on the spot, leaves the rest, and comes home.

First: we’re not moving these things from a relative stand-still into the direct path of the Earth. There are lots of asteroids out there, and many of them are already moving in an orbit close enough to that of the Earth/moon or Mars orbits that we could nudge them so they intersect. It might take a few years, but the rocks aren’t going to spoil out there.

Second: the comparison with freight shipping is not accurate, since if you push a rail car real hard it doesn’t keep going until it reaches its destination.

Third: Mining in situ isn’t itself a straightforward prospect. It’s dramatically more complex than the alternative. It’s not clear it would take less fuel to ship everything you’d need out to the asteroid for the period it would take to house and feed the crew, extract, and prepare the various products for return transport, versus what it would take to nudge the thing into an orbit that intersects Earth’s. The case of a lunar refinery is probably the easiest to resupply, with a Martian one much more difficult, versus an asteroid whose orbit may not intersect Earth’s for years.

If we had to get a specific asteroid that’d be a much more difficult problem than just to get an asteroid that fits within certain defined parameters.

Fourth: it depends on the asteroid, whether there’s enough platinum-group metals to make it worth our while.

Ooh, asteroid whaling.

Moby Dick with space rocks? Moby Dick with space rocks.

This is an interesting definition of “nudge”.

For a 90th-percentile(*) Aten-group asteroid, just pushing it onto a minimum-energy Earth-crossing trajectory requires ~1500 m/s of delta-V. That’s a “nudge” roughly comparable to the nudge that puts an SR-71 up to maximum speed and altitude, assuming the SR-71 were the size of an asteroid. And then you’ve got to slow it down when you get to the Earth, which is harder still if you’re not willing to make a ginormous crater and/or tsunami in the process.

I was actually aware of that, insofar as I actually am a professional rocket scientist.

The energy required to push 1000 tons of raw ore (containing ~10 kg of platinum) across the full length of the trans-Siberian railroad is about 1.8E12 joules (or 500 megawatt-hours, if you prefer). To “nudge” a thousand-ton mini-asteroid from the Aten group to an Earth-crossing orbit, using a 100% efficient mass driver engine, would coincidentally also require about 1.8E12 J / 500 MW-h of energy. Except, we still have to talk about stopping. And about where that energy is going to come from, a hundred million kilometers from the power grid.
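The mass-driver figure can be reproduced with the rocket equation. A minimal sketch under stated assumptions: the 1000 t is the mass that must end up on the new trajectory, the reaction mass is extra rock the driver throws away, and the driver converts electricity into exhaust kinetic energy with 100% efficiency. The code searches for the exhaust speed that minimizes the energy bill:

```python
import math

J_PER_MWH = 3.6e9  # 1 MWh = 3.6e9 J

def mass_driver_energy_j(final_mass_kg, delta_v, exhaust_v):
    """Energy to give final_mass_kg a velocity change delta_v with a perfect mass driver."""
    # Rocket equation: reaction mass that must be thrown away.
    propellant_kg = final_mass_kg * (math.exp(delta_v / exhaust_v) - 1)
    # Each kilogram thrown at exhaust_v costs 0.5 * exhaust_v**2 joules.
    return 0.5 * propellant_kg * exhaust_v**2

final_mass = 1_000_000  # kg, the thousand-ton rock
delta_v = 1500          # m/s, the Aten-group figure from the thread

# Brute-force search over exhaust speeds of 100 m/s to 10 km/s in 1 m/s steps.
best_ve = min(range(100, 10_001), key=lambda ve: mass_driver_energy_j(final_mass, delta_v, ve))
best_energy = mass_driver_energy_j(final_mass, delta_v, best_ve)
kinetic_floor = 0.5 * final_mass * delta_v**2  # ignores the thrown-away mass

print(f"best exhaust speed: {best_ve} m/s")
print(f"energy: {best_energy:.2e} J = {best_energy / J_PER_MWH:.0f} MWh")
print(f"0.5*m*dv^2 alone: {kinetic_floor:.2e} J")
```

Under these assumptions the optimum lands near 940 m/s of exhaust speed and roughly 1.7E12 J (about 480 MWh), the same order as the 1.8E12 J rail figure. The bare 0.5 m Δv² of about 1.1E12 J understates it because it ignores the energy carried off by the reaction mass, and none of this includes stopping at the other end.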

“Nudging” asteroids towards the Earth for their platinum, is strictly less efficient than shipping wholly unbeneficiated platinum ore from Vladivostok to Moscow by rail, and nobody on Earth does anything remotely like that.

You’re doing the handwaving-and-buzzwords thing, when you need to be doing the math thing. I’m guessing because you’ve been reading books and articles by people who are better at convincing you that you have learned something wonderful, than they are at doing the math themselves. There are an unfortunate lot of those around.

Lewis is the best place to start for this, though IIRC he doesn’t go into much depth on the transportation economics.

* in terms of accessibility

Haven’t been reading the books/articles you’re talking about, but you’re right that this is not my field. I’ll defer to your expertise on this.

Is there a good reason we’d want to focus on a thousand-ton rock from your example as opposed to something much smaller and (relatively) easier to push around? I know it’s harder to find something under 10 tons, but it seems easier to keep looking for the right rock than to pick something easy to find but hard to move.

@JohnSchilling: Very much not an expert, but the kinds of energies you describe to change the orbit don’t seem so bad if you bring nukes: just nuke an elongated asteroid in the right places to give it very high angular momentum, then cut off chunks at the right angles to send them where you need them (unless an angular momentum of this magnitude would break up an asteroid).

Rocks are NOT ‘free’, citizen.

The ten-ton rock is going to have maybe three or four ounces of platinum, worth less than ten thousand dollars. If you’re looking for stuff valuable enough to be worth shipping all the way back to Earth, in quantities sufficient to justify even a minimal asteroid mission in the first place, you’re going to need to sift through a lot of bland, boring rock to get that.
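The ounce figure follows from the grade implied earlier in the thread, about 10 kg of platinum per 1000 tons of ore, or 10 parts per million. The sketch below assumes that grade and uses troy ounces. The price is a hypothetical placeholder for illustration, not a market quote.

```python
TROY_OUNCE_G = 31.1034768  # grams per troy ounce


def platinum_troy_oz(rock_mass_kg: float, grade_ppm: float) -> float:
    """Troy ounces of platinum in a rock with the given mass-fraction grade."""
    platinum_g = rock_mass_kg * 1_000 * grade_ppm * 1e-6
    return platinum_g / TROY_OUNCE_G


GRADE_PPM = 10.0           # ~10 kg Pt per 1000 t of ore, as in the rail example
PRICE_USD_PER_OZ = 900.0   # hypothetical placeholder price, not a quote

for tons in (10, 1_000):
    oz = platinum_troy_oz(tons * 1_000, GRADE_PPM)
    print(f"{tons:>5} t rock: {oz:7.1f} troy oz, ~${oz * PRICE_USD_PER_OZ:,.0f}")
```

A ten-ton rock at that grade holds about 3.2 troy ounces. This is consistent with “three or four ounces” and well under ten thousand dollars at any plausible price.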

Clever idea, but you’ve correctly identified the flaw. You need circumferential velocities on the order of 1000 m/s for this to work, which is in the carbon-fiber or maraging-steel flywheel range, not the rock-that’s-just-been-multiply-nuked range.
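Why rock can’t do this: in a thin spinning ring, the hoop stress is σ = ρ·v², where v is the rim speed. A spinning rod peaks at about half that value at its center, which is the same order of magnitude. The sketch below assumes a stony density of 3000 kg/m³ and an intact-rock tensile strength on the order of 10 MPa. Both are illustrative assumptions, and a fractured or rubble-pile asteroid would be far weaker.

```python
import math


def ring_hoop_stress_pa(density_kg_m3: float, rim_speed_m_s: float) -> float:
    """Hoop stress in a thin rotating ring: sigma = rho * v^2 (Pa)."""
    return density_kg_m3 * rim_speed_m_s**2


def max_rim_speed_m_s(density_kg_m3: float, tensile_strength_pa: float) -> float:
    """Rim speed at which a thin ring reaches its tensile strength."""
    return math.sqrt(tensile_strength_pa / density_kg_m3)


ROCK_DENSITY = 3_000.0        # kg/m^3, assumed stony-asteroid density
ROCK_TENSILE_STRENGTH = 10e6  # Pa, assumed order of magnitude for intact rock

for v in (100, 300, 1_000):
    stress = ring_hoop_stress_pa(ROCK_DENSITY, v)
    print(f"rim speed {v:>5} m/s: {stress / 1e6:8.1f} MPa "
          f"({stress / ROCK_TENSILE_STRENGTH:.0f}x assumed rock strength)")

print(f"rock fails above ~{max_rim_speed_m_s(ROCK_DENSITY, ROCK_TENSILE_STRENGTH):.0f} m/s")
```

At 1000 m/s, the required stress is in the gigapascal range, hundreds of times what the assumed rock can carry.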

Wait, can we? How do you figure? There are an awful lot of asteroids out there, and they’re not made of solid platinum, you know…

I think the consequences will be quite predictable. The price will tank, as you said yourself. Yes, people might find new uses for the formerly precious metals, but the price will never rise to anywhere near its present levels; consider aluminum, for example.

Firstly, setting up an asteroid mining facility on Mars or even the Moon is significantly more difficult than just reaching the asteroid belt, so if you need one to jump-start the other, your entire project is a no-go. Secondly, what do you do with the asteroids once you’re done processing them on Mars? Don’t you still need to ship the metals back to Earth?

I’m curious why the terrestrial lifeboat advocate places such heavy emphasis on cultural diversity. Culture can be stored on hard drives and retrieved later. It would seem like genetic diversity should be prioritized in a microcosm of humanity whose sole purpose is to be on call to repopulate the species, no?

That is not culture, but a record of culture. You can’t just turn that into a living culture.

4,000 families is a relatively small community. If something terrible happens and they become trapped together for generations while the dust settles outside the lifeboat, it seems highly improbable to me that those diverse cultures stay distinct, and far more likely that some amalgam emerges as a monoculture. If you don’t have a plan (and a record) to reboot cultures when they get out, those cultures probably die anyway.

I agree that you can’t preserve distinct cultures in a small community, but you also can’t just reboot cultures. That’s not how it works.

Culture is learned even though it’s not currently (for the most part) taught; I’m not sure it’s impossible to develop a curriculum, though. In any event, it sounds like we both agree that emphasizing cultural diversity in colonist selection is ill-conceived?

As I understood it, the authors value having a different culture in each lifeboat, without thinking that multiple cultures can be preserved in a single lifeboat.

@Nicholas

Plenty of attempts have been made to teach culture: for example, Ancient Greek culture, which used to be part of Western European elite education. Yet it did little more than nudge people in that direction.

Ultimately, you run into a chicken-and-egg problem where culture is largely taught implicitly by peers and parents, so you already need people who have internalized the culture to be able to teach it.

Note that people’s views on culture are pretty much always corrupted and influenced by their own culture, so if it were possible to implant an entire culture explicitly, what people would insert would surely be corrupted.

—

For your other question, I agree with whereamigoing that it might work to seed different lifeboats differently. I do have my doubts whether a single organization could actually do that, but you might be able to do it by having nationalist organizations make an American lifeboat, a Chinese one, a French one, an Indian one, etc.

My suspicion is that it would be for contemporary political reasons; that is, we don’t want to signal that we only value certain groups.

Unless you are referring to benefits claimed from having multiple lifeboats? In which case I’d say there’s an advantage to having multiple approaches to problems faced during and after the quarantine period, though this doesn’t translate into needing to preserve any particular Earth cultures.

The impression I got from the write up was that “diversity” would be achieved by the existence of lifeboats across the globe. Their populations are explicitly fluid when not in disaster mode, so presumably they’re assumed to be representative of the region (i.e., the population diversity of the, say, Boulder, CO and Beijing lifeboats would reflect the makeup of those areas when the bombs fell).

Let me guess: the culture we should send should be white people culture.

Nicholas’s response above and his original post suggest more that we should prioritize genetic diversity over culture, because diverse cultures are unlikely to remain so in a sealed community of several thousand people.

(I’m not saying Nicholas is right, since I haven’t really thought about it, but it doesn’t sound to me like he’s opposed to other cultures.)

The culture that’s sent will be the culture that is paying for it. If it is the Chinese it will be Chinese culture.

I am the terrestrial lifeboat advocate. Can I get a quick poll from the community/Scott on whether it’s appropriate to participate in the comments for your own AdColab?

I agree that genetic diversity is very important from a survival perspective, but I believe that an emphasis on cultural diversity is necessary to generate sufficient international buy-in.

Yes, but further comments are not considered when deciding how to vote. Anyhow, I believe this has already happened in one of the other discussion threads.

Yeah please do. I think it’s a much more worthwhile process if we can interact with the authors, who couldn’t know before publishing which sections a particular reader would find unclear/be interested in hearing more about.

Meh. International buy-in is actually going to decrease your cultural diversity. If you are taking people from LA, Hong Kong, DC, London, Paris, Berlin, etc., you’re going to have a less functional and less diverse culture than if you just picked from Iowa. That is because you’ll end up with an amalgamation of internationalists: Scott’s “universal culture” idea, except only for the UN-philic.

Silicon Valley is kicking Iowa’s butt despite being way more internationalist.

I found this vastly more readable than the other ACC submissions so far. The reasoning was very linear and conversational, I never felt overloaded with data or arguments, and by the end I knew where each author stood on the issue.

I am suspicious of the unsourced x-risk image near the beginning, particularly because I can’t tell what the units of the axes are supposed to be. I guess 1-10, but is that just relative to each other?

Otherwise this collab helped me frame the issue. Focusing on Biosphere 2 seemed smart since that is in essence what Mars will have to be, only harder. I wouldn’t mind having an overview of current prospects for forming a biosphere on Mars, but that field is still young and that could have derailed the conversation a bit, so I don’t mind that it was excluded.

Lastly, I admire that the collaborators found a crux and resolved it. I agree that the long term political fragility of terrestrial lifeboats seems like a dealbreaker.

Even more than the debates I found fascinating the points on which the pairs agreed.

I think every one so far had some pretty outstanding ones. I liked best how the meat eating one decided from the beginning to compare farming animals with non-existence, whereas most vegetarian arguments I’ve heard seem to take it for granted that there is no such thing as a benefit from existing as a farmed animal (even though they don’t make it explicit).

Regarding that graph-

In standard probability terminology, risks are given on a scale of 0 to 1, where 1 is ‘guaranteed’. Obviously you can extend this to multiple independent events above 1 by reading the value as the mean of a Poisson distribution, so the chart seems to expect about a 50% chance of an asteroid impact, one and a half supervolcanoes, 3 pandemics and 9½ full-on nuclear wars in the next 200 years. Yikes!
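Taking the Poisson reading literally, a chart value λ is the expected number of events, and the chance of at least one event is 1 − e^(−λ). This sketch plugs in the values as read in the comment above. Those values are that commenter’s reading of an unlabeled chart, not sourced data.

```python
import math


def prob_at_least_one(expected_count: float) -> float:
    """P(N >= 1) for N ~ Poisson(expected_count)."""
    return 1.0 - math.exp(-expected_count)


# Chart values as read in the comment above (expected events in 200 years).
chart_reading = {
    "asteroid impact": 0.5,
    "supervolcano": 1.5,
    "pandemic": 3.0,
    "nuclear war": 9.5,
}

for event, lam in chart_reading.items():
    print(f"{event:>15}: mean {lam:4.1f}, "
          f"P(at least one) = {prob_at_least_one(lam):.2f}")
```

Read strictly this way, a mean of 0.5 works out to roughly a 39% chance of at least one impact rather than 50%, while a mean of 9.5 makes at least one nuclear war essentially certain.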

OK no, but it amused me.

This is the one part I don’t quite understand. A terrestrial lifeboat, by which I mean a fully successful lifeboat done right, could exist in some out-of-the-way place, independent of or overlooked by national governments in chaos. The conclusion there shocked me, because a successfully self-sufficient Terran colony might live underground, in Antarctica, or in the desert, and become independent of any outside funding. (Granted, that would be VERY difficult to achieve.)

I think the reasoning was that it will take a long time before 100% independence is reached. Emergencies will happen. With terrestrial colonies these emergencies will boost political support for “cancel the colony, it’s a waste of money and isn’t even self-supporting.” With off-world colonies that’s not feasible since it takes decades and lots of money to bring back Mars colonists, and letting them die is unlikely to ever get popular. Mars is still way harder to help in emergencies, but it’s at least politically stable.

I don’t really get the idea of terrestrial lifeboats.

Those would have to be built at the bottom of the ocean or deep underground to make much sense. If the world is ending and there is one safe place in Arizona … that place wouldn’t stay safe for very much longer.

It would basically have to be a sealed self-sustaining castle able to withstand military attack and major shocks to the planet. That’s not going to have costs comparable to a normal big building.

“I feel like I need to take a moment to point out that that was not a typo, and the quote above is provided by that Steve Bannon. I don’t know what else to say about that other than to acknowledge how weird it is (very).

As our friend Steve “Darth Vader” Bannon points out”

I feel like I’m missing an inside joke here.

It’s this Steve Bannon, currently best known for his former running of far-right/alt-right website Breitbart News, and his former role with the Trump presidential campaign and administration, basically (AIUI) being, like, the person involved with some sort of actual ideology.

But while he’s currently best known for politics, he’s done a number of other things as well, such as his involvement with Biosphere 2. He’s had a pretty interesting life, seems like…

Whoa, I assumed they were making jokes about the similar name – I didn’t realize that it was actually the same person!

Haha. When the money runs out they’ll abandon the space colony *anyway*. They’ll tell the audience on earth that it’s self-sustaining now, and then the comms link will go down. The flip side of that question is: as a volunteer colonist, would you rather be abandoned on earth or Mars?

Depends on whether the doors lock from the inside or the outside, I guess.

I’d rather be reintroduced into Earth society than slowly die on Mars from rickets or scurvy or something.

A truly self-sustaining colony wouldn’t be hurt by removal of funding, since it’s built to withstand the fall of civilization, which would incidentally result in a lack of external funding. Although I suppose that your concern could still apply if money ran out quickly in the early stages of the project, before the colony became self-sustaining.

I think this convincingly argues that terrestrial biodomes will be superior to space habitats in the near term. Though I don’t think there was much doubt about that, it’s nice to get some sense of the scale difference involved. Can’t say I agree with some of the X-risks, such as “grey goo”, which is probably physically impossible, but that’s a minor detail.

I’m very much in support of expanding biosphere research in general, precisely because it makes a good precursor and testbed for extraplanetary habitation. First you make a closed system and see how long you can run it for. Then you put it on top of a mountain and do the same thing with greater restrictions on the ability to supply it from the outside. Then, as you build confidence and technology, you will slowly need less frequent interventions. Then finally you can do the same thing on the Moon, and once we have had a Moon base for decades, we can try for a Mars colony. In lieu of this UN grant plan, I hope someone can reach out to Elon Musk and get him to put some funding aside for biospheres.

The viability of a “gray goo” scenario depends on what you consider to be gray goo. If you imagine a nano-engineered quasi-organism that spreads 20% faster than kudzu and can use common metals as a raw material, that’s a plausible concern in a hypothetical future. The classic scenario of a grey tide converting all objects into more of itself in seconds… less so.

You lost me there: symbolic and useless things like diversity for its own sake are a dangerous idea and a safety hazard.

Don’t get me wrong, we need voices from around the globe so that the most powerful nations don’t hog it all for themselves, but that’s a whole other thing from token diversity. We need practical diversity, not head-in-the-sky diversity.

Yeah, but if complaints about diversity get us redundancy, it’s getting something.