I.

Tyler Cowen writes about cost disease. I’d previously heard the term used to refer only to a specific theory of why costs are increasing, involving labor becoming more efficient in some areas than others. Cowen seems to use it indiscriminately to refer to increasing costs in general – which I guess is fine, goodness knows we need a word for that.

Cowen assumes his readers already understand that cost disease exists. I don’t know if this is true. My impression is that most people still don’t know about cost disease, or don’t realize the extent of it. So I thought I would make the case for the cost disease in the sectors Tyler mentions – health care and education – plus a couple more.

First let’s look at primary education:

There was some argument about the style of this graph, but as per Politifact the basic claim is true. Per student spending has increased about 2.5x in the past forty years even after adjusting for inflation.

At the same time, test scores have stayed relatively stagnant. You can see the full numbers here, but in short, high school students’ reading scores went from 285 in 1971 to 287 today – a difference of 0.7%.

There is some heterogeneity across races – white students’ test scores increased 1.4% and minority students’ scores by about 20%. But it is hard to credit school spending for the minority students’ improvement, which occurred almost entirely during the period from 1975-1985. School spending has been on exactly the same trajectory before and after that time, and in white and minority areas, suggesting that there was something specific about that decade which improved minority (but not white) scores. Most likely this was the general improvement in minorities’ conditions around that time, giving them better nutrition and a more stable family life. It’s hard to construct a narrative where it was school spending that did it – and even if it did, note that the majority of the increase in school spending happened from 1985 on, and demonstrably helped neither whites nor minorities.

I discuss this phenomenon more here and here, but the summary is: no, it’s not just because of special ed; no, it’s not just a factor of how you measure test scores; no, there’s not a “ceiling effect”. Costs really did more-or-less double without any concomitant increase in measurable quality.

So, imagine you’re a poor person. White, minority, whatever. Which would you prefer? Sending your child to a 2016 school? Or sending your child to a 1975 school, and getting a check for $5,000 every year?

I’m proposing that choice because as far as I can tell those are the stakes here. 2016 schools have whatever tiny test score advantage they have over 1975 schools, and cost $5,000/year more, inflation adjusted. That $5,000 comes out of the pocket of somebody – either taxpayers, or other people who could be helped by government programs.

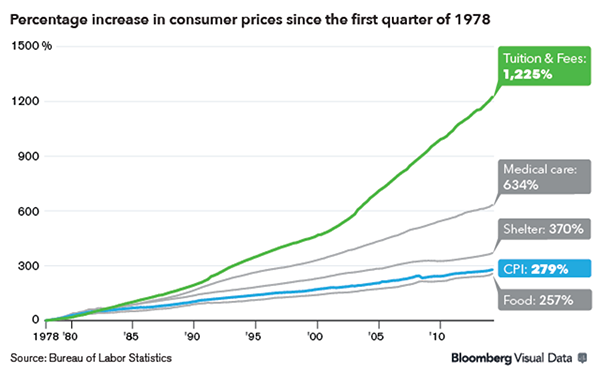

Second, college is even worse:

Note this is not adjusted for inflation; see link below for adjusted figures

Inflation-adjusted cost of a university education was something like $2000/year in 1980. Now it’s closer to $20,000/year. No, it’s not because of decreased government funding, and there are similar trajectories for public and private schools.

I don’t know if there’s an equivalent of “test scores” measuring how well colleges perform, so just use your best judgment. Do you think that modern colleges provide $18,000/year greater value than colleges did in your parents’ day? Would you rather graduate from a modern college, or graduate from a college more like the one your parents went to, plus get a check for $72,000?

(or, more realistically, have $72,000 less in student loans to pay off)
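The $72,000 figure follows directly from the two rough tuition numbers above. A minimal Python sketch of that arithmetic, assuming a four-year degree (the degree length and the variable names are assumptions for illustration, not something the sources specify):

```python
# Rough inflation-adjusted tuition figures quoted in the text.
cost_1980_per_year = 2_000    # dollars per year, circa 1980
cost_now_per_year = 20_000    # dollars per year, today

# Assumption: a standard four-year degree.
years_of_college = 4

# Extra cost per year and over the whole degree.
extra_per_year = cost_now_per_year - cost_1980_per_year
extra_per_degree = extra_per_year * years_of_college

# How many times more expensive a year of college has become.
cost_multiple = cost_now_per_year / cost_1980_per_year

print(f"Extra cost per year:   ${extra_per_year:,}")
print(f"Extra cost per degree: ${extra_per_degree:,}")
print(f"Cost multiple:         {cost_multiple:.0f}x")
```

With these inputs the per-year gap is $18,000 and the four-year gap is $72,000, which is where the size of the hypothetical check comes from.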

Was your parents’ college even noticeably worse than yours? My parents sometimes talk about their college experience, and it seems to have had all the relevant features of a college experience. Clubs. Classes. Professors. Roommates. I might have gotten something extra for my $72,000, but it’s hard to see what it was.

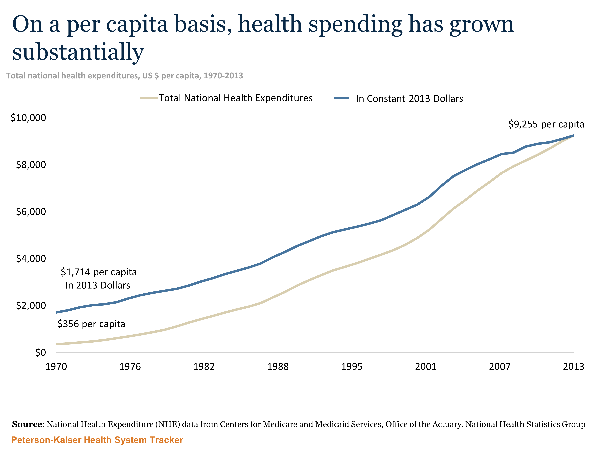

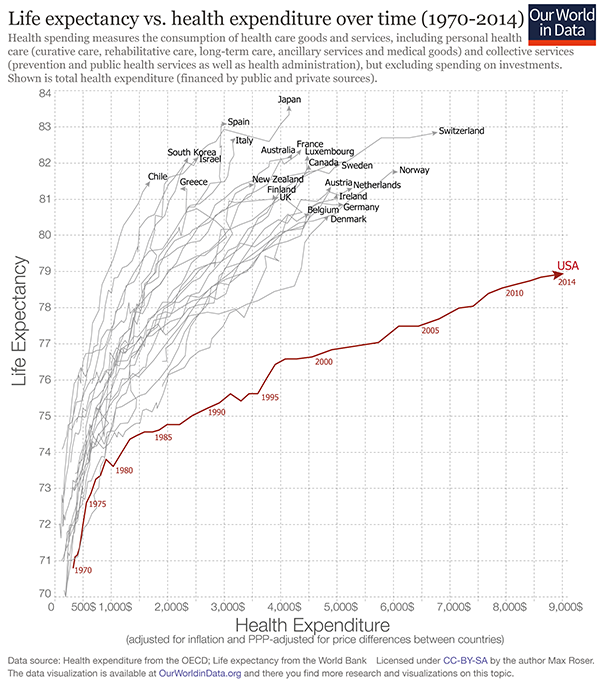

Third, health care. The graph is starting to look disappointingly familiar:

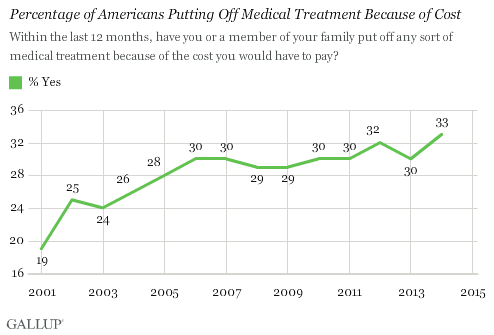

The cost of health care has about quintupled since 1970. It’s actually been rising since earlier than that, but I can’t find a good graph; it looks like it would have been about $1200 in today’s dollars in 1960, for an increase of about 800% in those fifty years.

This has had the expected effects. The average 1960 worker spent ten days’ worth of their yearly paycheck on health insurance; the average modern worker spends sixty days’ worth of it, a sixth of their entire earnings.

Or not.

This time I can’t say with 100% certainty that all this extra spending has been for nothing. Life expectancy has gone way up since 1960:

Extra bonus conclusion: the Spanish flu was really bad

But a lot of people think that life expectancy depends on other things a lot more than healthcare spending. Sanitation, nutrition, quitting smoking, plus advances in health technology that don’t involve spending more money. ACE inhibitors (invented in 1975) are great and probably increased lifespan a lot, but they cost $20 for a year’s supply and replaced older drugs that cost about the same amount.

In terms of calculating how much lifespan gain healthcare spending has produced, we have a couple of options. Start with a country-by-country comparison:

Countries like South Korea and Israel have about the same life expectancy as the US but pay about 25% of what we do. Some people use this to prove the superiority of centralized government health systems, although Random Critical Analysis has an alternative perspective. In any case, it seems very possible to get the same improving life expectancies as the US without octupling health care spending.

The Netherlands increased their health budget by a lot around 2000, sparking a bunch of studies on whether that increased life expectancy or not. There’s a good meta-analysis here, which lists six studies trying to calculate how much of the change in life expectancy was due to the large increases in health spending during this period. There’s a broad range of estimates: 0.3%, 1.8%, 8.0%, 17.2%, 22.1%, 27.5% (I’m taking their numbers for men; the numbers for women are pretty similar). They also mention two studies that they did not officially include; one finding 0% effect and one finding 50% effect (I’m not sure why these studies weren’t included). They add:

In none of these studies is the issue of reverse causality addressed; sometimes it is not even mentioned. This implies that the effect of health care spending on mortality may be overestimated.

They say:

Based on our review of empirical studies, we conclude that it is likely that increased health care spending has contributed to the recent increase in life expectancy in the Netherlands. Applying the estimates from published studies to the observed increase in health care spending in the Netherlands between 2000 and 2010 [of 40%] would imply that 0.3% to almost 50% of the increase in life expectancy may have been caused by increasing health care spending. An important reason for the wide range in such estimates is that they all include methodological problems highlighted in this paper. However, this wide range indicates that the counterfactual study by Meerding et al, which argued that 50% of the increase in life expectancy in the Netherlands since the 1950s can be attributed to medical care, can probably be interpreted as an upper bound.

It’s going to be completely irresponsible to try to apply this to the increase in health spending in the US over the past 50 years, since this is probably different at every margin and the US is not the Netherlands and the 1950s are not the 2010s. But if we irresponsibly take their median estimate and apply it to the current question, we get that increasing health spending in the US has been worth about one extra year of life expectancy.

This study attempts to directly estimate a %GDP health spending to life expectancy conversion, and says that an increase of 1% GDP corresponds to an increase of 0.05 years life expectancy. That would suggest a slightly different number of 0.65 years life expectancy gained by healthcare spending since 1960.
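The conversion in that study is linear, so the 0.65-year figure can be unpacked with a few lines of Python. This is only a sketch: the rise in health spending as a share of GDP is not stated above, so the code backs it out from the two numbers that are given (0.05 years per point of GDP and 0.65 years total). The function and variable names are hypothetical.

```python
# Conversion rate from the study: years of life expectancy gained
# per one-percentage-point rise in health spending as a share of GDP.
YEARS_PER_GDP_POINT = 0.05

def life_expectancy_gain(gdp_points: float) -> float:
    """Years of life expectancy implied by a rise of `gdp_points`
    percentage points of GDP, assuming the relationship is linear."""
    return gdp_points * YEARS_PER_GDP_POINT

# Total gain quoted in the text for the period since 1960.
quoted_gain_years = 0.65

# Back out the spending rise that the quoted gain implies.
implied_gdp_points = quoted_gain_years / YEARS_PER_GDP_POINT

print(f"Implied rise in health spending: about {implied_gdp_points:.0f} points of GDP")
print(f"Gain at that rise: about {life_expectancy_gain(implied_gdp_points):.2f} years")
```

In other words, the 0.65-year estimate corresponds to health spending rising by roughly 13 percentage points of GDP; a different spending figure would scale the estimate proportionally.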

If these numbers seem absurdly low, remember all of those controlled experiments where giving people insurance doesn’t seem to make them much healthier in any meaningful way.

Or instead of slogging through the statistics, we can just ask the same question as before. Do you think the average poor or middle-class person would rather:

a) Get modern health care

b) Get the same amount of health care as their parents’ generation, but with modern technology like ACE inhibitors, and also earn $8000 extra a year

Fourth, we see similar effects in infrastructure. The first New York City subway opened around 1900. Various sources list lengths from 10 to 20 miles and costs from $30 million to $60 million – I think my sources are capturing it at different stages of construction with different numbers of extensions. In any case, it suggests costs of between $1.5 million and $6 million per mile = $1-4 million per kilometer. That looks like it’s about the inflation-adjusted equivalent of $100 million/kilometer today, though I’m very uncertain about that estimate. In contrast, Vox notes that a new New York subway line being opened this year costs about $2.2 billion per kilometer, suggesting a cost increase of twenty times – although I’m very uncertain about this estimate.

Things become clearer when you compare them country-by-country. The same Vox article notes that Paris, Berlin, and Copenhagen subways cost about $250 million per kilometer, almost 90% less. Yet even those European subways are overpriced compared to Korea, where a subway in Seoul costs $40 million/km (another Korean subway project cost $80 million/km). This is a difference of 50x between Seoul and New York for apparently comparable services. It suggests that the 1900s New York estimate above may have been roughly accurate if their efficiency was roughly in line with that of modern Europe and Korea.
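The subway comparisons above involve several unit conversions and ratios, so here is a small Python sketch that reproduces them from the quoted figures. The inputs are the rough numbers cited in the text (including the very uncertain $100 million/km inflation-adjusted estimate); the variable names are illustrative only.

```python
KM_PER_MILE = 1.609344

# Original New York subway, circa 1900 (nominal dollars).
# Cheapest case: lowest cost over longest length; dearest case: the reverse.
low_per_mile = 30e6 / 20     # $30 million over 20 miles
high_per_mile = 60e6 / 10    # $60 million over 10 miles
low_per_km = low_per_mile / KM_PER_MILE
high_per_km = high_per_mile / KM_PER_MILE

# Per-kilometer costs quoted in the text (today's dollars).
nyc_1900_adjusted = 100e6    # rough inflation-adjusted 1900 estimate
nyc_new = 2.2e9              # new New York line
europe = 250e6               # Paris, Berlin, Copenhagen
seoul = 40e6                 # Seoul

increase_since_1900 = nyc_new / nyc_1900_adjusted
europe_discount = 1 - europe / nyc_new
nyc_vs_seoul = nyc_new / seoul

print(f"1900 cost: ${low_per_km / 1e6:.2f}M to ${high_per_km / 1e6:.2f}M per km")
print(f"New York now vs. 1900 (adjusted): {increase_since_1900:.0f}x")
print(f"Europe is cheaper than New York by {europe_discount:.0%}")
print(f"New York vs. Seoul: {nyc_new / seoul:.0f}x")
```

Run with these inputs, the ratios land at roughly 22x for New York over time, about 89% cheaper for Europe, and 55x for New York versus Seoul, consistent with the rounded "twenty times", "almost 90%", and "50x" figures in the text.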

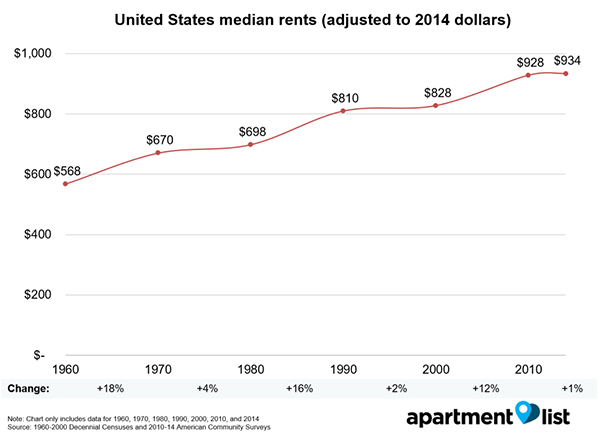

Fifth, housing (source):

Most of the important commentary on this graph has already been said, but I would add that optimistic takes like this one by the American Enterprise Institute are missing some of the dynamic. Yes, homes are bigger than they used to be, but part of that is zoning laws which make it easier to get big houses than small houses. There are a lot of people who would prefer to have a smaller house but don’t. When I first moved to Michigan, I lived alone in a three bedroom house because there were no good one-bedroom houses available near my workplace and all of the apartments were loud and crime-y.

Or, once again, just ask yourself: do you think most poor and middle class people would rather:

1. Rent a modern house/apartment

2. Rent the sort of house/apartment their parents had, for half the cost

II.

So, to summarize: in the past fifty years, education costs have doubled, college costs have dectupled, health insurance costs have dectupled, subway costs have at least dectupled, and housing costs have increased by about fifty percent. US health care costs about four times as much as equivalent health care in other First World countries; US subways cost about eight times as much as equivalent subways in other First World countries.

I worry that people don’t appreciate how weird this is. I didn’t appreciate it for a long time. I guess I just figured that Grandpa used to talk about how back in his day movie tickets only cost a nickel; that was just the way of the world. But all of the numbers above are inflation-adjusted. These things have dectupled in cost even after you adjust for movies costing a nickel in Grandpa’s day. They have really, genuinely dectupled in cost, no economic trickery involved.

And this is especially strange because we expect that improving technology and globalization ought to cut costs. In 1983, the first mobile phone cost $4,000 – about $10,000 in today’s dollars. It was also a gigantic piece of crap. Today you can get a much better phone for $100. This is the right and proper way of the universe. It’s why we fund scientists, and pay businesspeople the big bucks.

But things like college and health care have still had their prices dectuple. Patients can now schedule their appointments online; doctors can send prescriptions through the fax; pharmacies can keep track of medication histories on centralized computer systems that interface with the cloud; nurses get automatic reminders when they’re giving two drugs with a potential interaction; insurance companies accept payment through credit cards – and all of this costs ten times as much as it did in the days of punch cards and secretaries who did calculations by hand.

It’s actually even worse than this, because we take so many opportunities to save money that were unavailable in past generations. Underpaid foreign nurses immigrate to America and work for a song. Doctors’ notes are sent to India overnight where they’re transcribed by sweatshop-style labor for pennies an hour. Medical equipment gets manufactured in goodness-only-knows which obscure Third World country. And it still costs ten times as much as when this was all made in the USA – and that was back when minimum wages were proportionally higher than today.

And it’s actually even worse than this. A lot of these services have decreased in quality, presumably as an attempt to cut costs even further. Doctors used to make house calls; even when I was young in the ’80s my father would still go to the houses of difficult patients who were too sick to come to his office. This study notes that for women who give birth in the hospital, “the standard length of stay was 8 to 14 days in the 1950s but declined to less than 2 days in the mid-1990s”. The doctors I talk to say this isn’t because modern women are healthier; it’s because hospitals kick them out as soon as it’s safe, to free up beds for the next person. Historic records of hospital care generally describe leisurely convalescence periods and making sure somebody felt absolutely well before letting them go; this seems bizarre to anyone who has participated in a modern hospital, where the mantra is to kick people out as soon as they’re “stable”, i.e. not in acute crisis.

If we had to provide the same quality of service as we did in 1960, and without the gains from modern technology and globalization, who even knows how many times more health care would cost? Fifty times more? A hundred times more?

And the same is true for colleges and houses and subways and so on.

III.

The existing literature on cost disease focuses on the Baumol effect. Suppose in some underdeveloped economy, people can choose either to work in a factory or join an orchestra, and the salaries of factory workers and orchestra musicians reflect relative supply and demand and profit in those industries. Then the economy undergoes a technological revolution, and factories can produce ten times as many goods. Some of the increased productivity trickles down to factory workers, and they earn more money. Would-be musicians leave the orchestras behind to go work in the higher-paying factories, and the orchestras have to raise their prices if they want to be assured enough musicians. So tech improvements in the factory sector raise prices in the orchestra sector.

We could tell a story like this to explain rising costs in education, health care, etc. If technology increases productivity for skilled laborers in other industries, then less susceptible industries might end up footing the bill since they have to pay their workers more.

There’s only one problem: health care and education aren’t paying their workers more; in fact, quite the opposite.

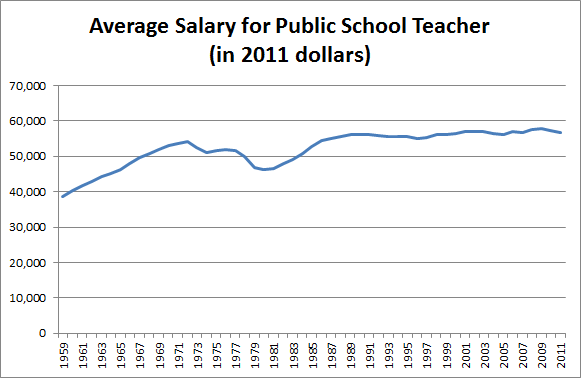

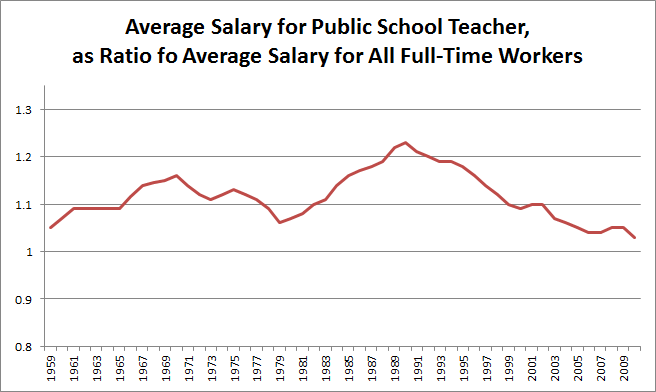

Here are teacher salaries over time (source):

Teacher salaries are relatively flat adjusting for inflation. But salaries for other jobs are increasing modestly relative to inflation. So teacher salaries relative to other occupations’ salaries are actually declining.

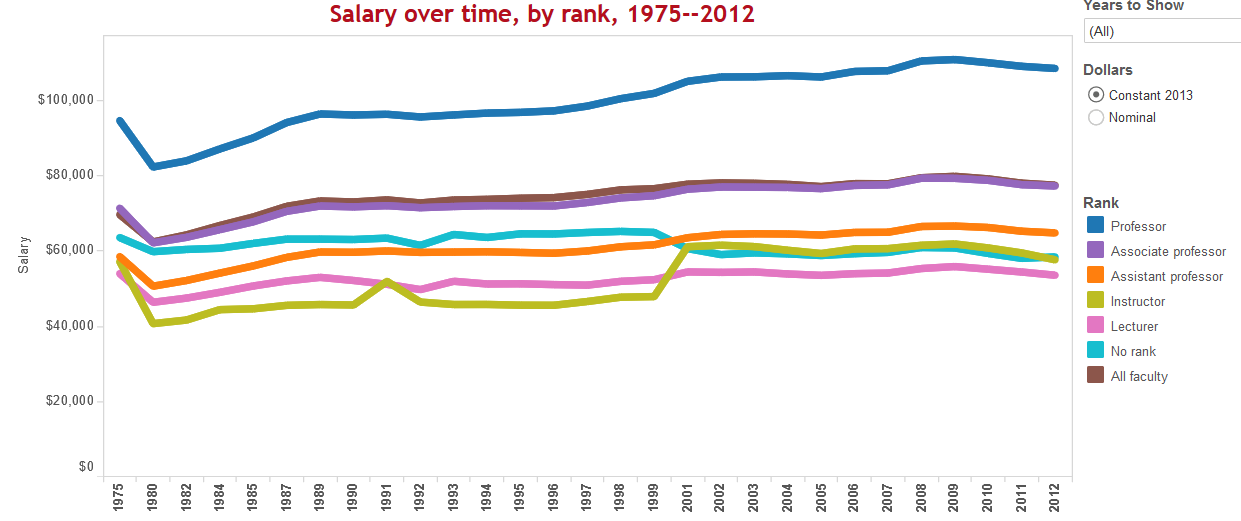

Here’s a similar graph for professors (source):

Professor salaries are going up a little, but again, they’re probably losing position relative to the average occupation. Also, note that although the average salary of each type of faculty is stable or increasing, the average salary of all faculty is going down. No mystery here – colleges are doing everything they can to switch from tenured professors to adjuncts, who complain of being overworked and abused while making about the same amount as a Starbucks barista.

This seems to me a lot like the case of the hospitals cutting care for new mothers. The price of the service dectuples, yet at the same time the service has to sacrifice quality in order to control costs.

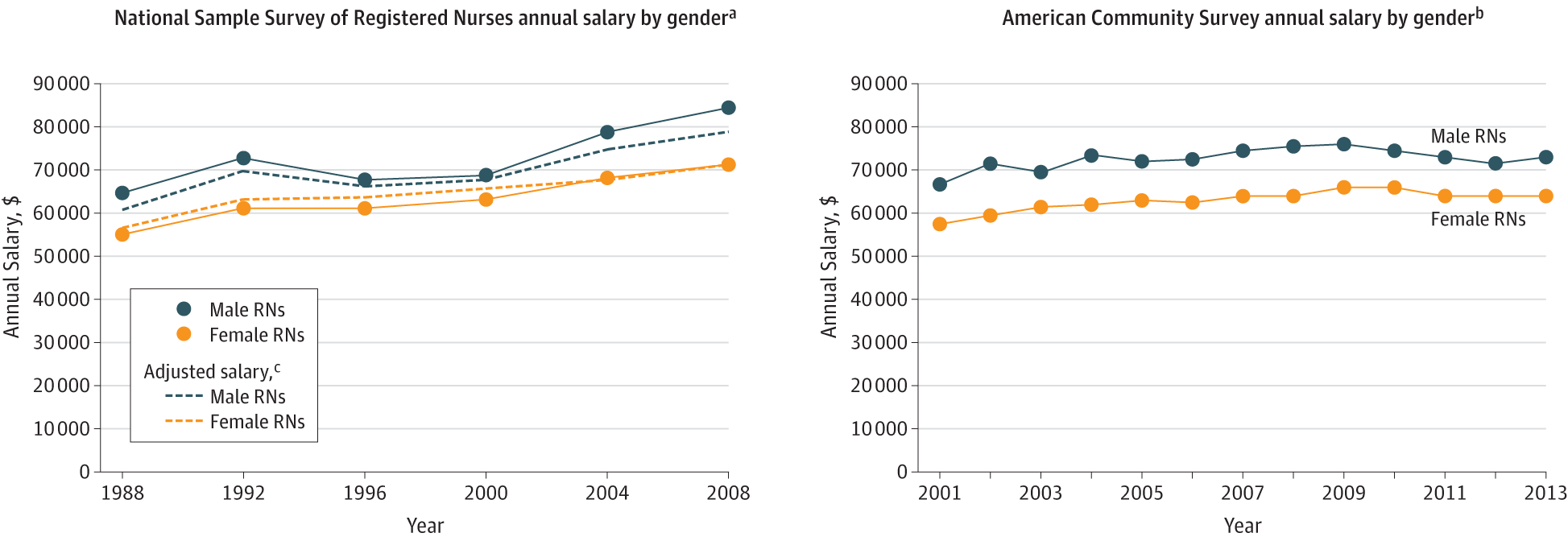

And speaking of hospitals, here’s the graph for nurses (source):

Female nurses’ salaries went from about $55,000 in 1988 to $63,000 in 2013. This is probably around the average wage increase during that time. Also, some of this reflects changes in education: in the 1980s only 40% of nurses had a degree; by 2010, about 80% did.

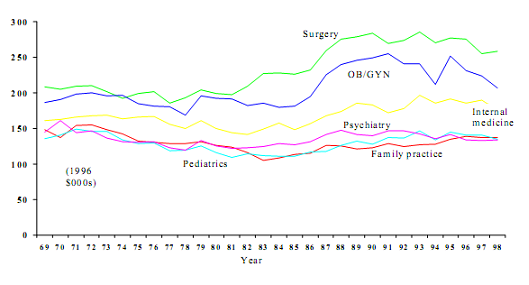

And for doctors (source)

Stable again! Except that a lot of doctors’ salaries now go to paying off their medical school debt, which has been ballooning like everything else.

I don’t have a similar graph for subway workers, but come on. The overall picture is that health care and education costs have managed to increase by ten times without a single cent of the gains going to teachers, doctors, or nurses. Indeed these professions seem to have lost ground salary-wise relative to others.

I also want to add some anecdote to these hard facts. My father is a doctor and my mother is a teacher, so I got to hear a lot about how these professions have changed over the past generation. It seems at least a little like the adjunct story, although without the clearly defined “professor vs. adjunct” dichotomy that makes it so easy to talk about. Doctors are really, really, really unhappy. When I went to medical school, some of my professors would tell me outright that they couldn’t believe anyone would still go into medicine with all of the new stresses and demands placed on doctors. This doesn’t seem to be limited to one medical school. Wall Street Journal: Why Doctors Are Sick Of Their Profession – “American physicians are increasingly unhappy with their once-vaunted profession, and that malaise is bad for their patients”. The Daily Beast: How Being A Doctor Became The Most Miserable Profession – “Being a doctor has become a miserable and humiliating undertaking. Indeed, many doctors feel that America has declared war on physicians”. Forbes: Why Are Doctors So Unhappy? – “Doctors have become like everyone else: insecure, discontent and scared about the future.” Vox: Only Six Percent Of Doctors Are Happy With Their Jobs. Al Jazeera America: Here’s Why Nine Out Of Ten Doctors Wouldn’t Recommend Medicine As A Profession. Read these articles and they all say the same thing that all the doctors I know say – medicine used to be a well-respected, enjoyable profession where you could give patients good care and feel self-actualized. Now it kind of sucks.

Meanwhile, I also see articles like this piece from NPR saying teachers are experiencing historic stress levels and up to 50% say their job “isn’t worth it”. Teacher job satisfaction is at historic lows. And the veteran teachers I know say the same thing as the veteran doctors I know – their jobs used to be enjoyable and make them feel like they were making a difference; now they feel overworked, unappreciated, and trapped in mountains of paperwork.

It might make sense for these fields to become more expensive if their employees’ salaries were increasing. And it might make sense for salaries to stay the same if employees instead benefitted from lower workloads and better working conditions. But neither of these is happening.

IV.

So what’s going on? Why are costs increasing so dramatically? Some possible answers:

First, can we dismiss all of this as an illusion? Maybe adjusting for inflation is harder than I think. Inflation is an average, so some things have to have higher-than-average inflation; maybe it’s education, health care, etc. Or maybe my sources have the wrong statistics.

But I don’t think this is true. The last time I talked about this problem, someone mentioned they’re running a private school which does just as well as public schools but costs only $3000/student/year, a fourth of the usual rate. Marginal Revolution notes that India has a private health system that delivers the same quality of care as its public system for a quarter of the cost. Whenever the same drug is provided by the official US health system and some kind of grey market supplement sort of thing, the grey market supplement costs between a fifth and a tenth as much; for example, Google’s first hit for Deplin®, official prescription L-methylfolate, costs $175 for a month’s supply; unregulated L-methylfolate supplement delivers the same dose for about $30. And this isn’t even mentioning things like the $1 bag of saline that costs $700 at hospitals. Since it seems like it’s not too hard to do things for a fraction of what we currently do things for, probably we should be less reluctant to believe that the cost of everything is really inflated.

Second, might markets just not work? I know this is kind of an extreme question to ask in a post on economics, but maybe nobody knows what they’re doing in a lot of these fields and people can just increase costs and not suffer any decreased demand because of it. Suppose that people proved beyond a shadow of a doubt that Khan Academy could teach you just as much as a normal college education, but for free. People would still ask questions like – will employers accept my Khan Academy degree? Will it look good on a resume? Will people make fun of me for it? The same is true of community colleges, second-tier colleges, for-profit colleges, et cetera. I got offered a free scholarship to a mediocre state college, and I turned it down on the grounds that I knew nothing about anything and maybe years from now I would be locked out of some sort of Exciting Opportunity because my college wasn’t prestigious enough. Assuming everyone thinks like this, can colleges just charge whatever they want?

Likewise, my workplace offered me three different health insurance plans, and I chose the middle-expensiveness one, on the grounds that I had no idea how health insurance worked but maybe if I bought the cheap one I’d get sick and regret my choice, and maybe if I bought the expensive one I wouldn’t be sick and regret my choice. I am a doctor, my employer is a hospital, and the health insurance was for treatment in my own health system. The moral of the story is that I am an idiot. The second moral of the story is that people probably are not super-informed health care consumers.

This can’t be pure price-gouging, since corporate profits haven’t increased nearly enough to be where all the money is going. But a while ago a commenter linked me to the Delta Cost Project, which scrutinizes the exact causes of increasing college tuition. Some of it is the administrative bloat that you would expect. But a lot of it is fun “student life” types of activities like clubs, festivals, and paying Milo Yiannopoulos to speak and then cleaning up after the ensuing riots. These sorts of things improve the student experience, but I’m not sure that the average student would rather go to an expensive college with clubs/festivals/Milo than a cheap college without them. More important, it doesn’t really seem like the average student is offered this choice.

This kind of suggests a picture where colleges expect people will pay whatever price they set, so they set a very high price and then use the money for cool things and increasing their own prestige. Or maybe clubs/festivals/Milo become such a signal of prestige that students avoid colleges that don’t comply since they worry their degrees won’t be respected? Some people have pointed out that hospitals have switched from many-people-all-in-a-big-ward to private rooms. Once again, nobody seems to have been offered the choice between expensive hospitals with private rooms versus cheap hospitals with roommates. It’s almost as if industries have their own reasons for switching to more-bells-and-whistles services that people don’t necessarily want, and consumers just go along with it because for some reason they’re not exercising choice the same as they would in other markets.

(this article on the Oklahoma City Surgery Center might be about a partial corrective for this kind of thing)

Third, can we attribute this to the inefficiency of government relative to private industry? I don’t think so. The government handles most primary education and subways, and has its hand in health care. But we know that for-profit hospitals aren’t much cheaper than government hospitals, and that private schools usually aren’t much cheaper (and are sometimes more expensive) than government schools. And private colleges cost more than government-funded ones.

Fourth, can we attribute it to indirect government intervention through regulation, which public and private companies alike must deal with? This seems to be at least part of the story in health care, given how much money you can save by grey-market practices that avoid the FDA. It’s harder to apply it to colleges, though some people have pointed out regulations like Title IX that affect the educational sector.

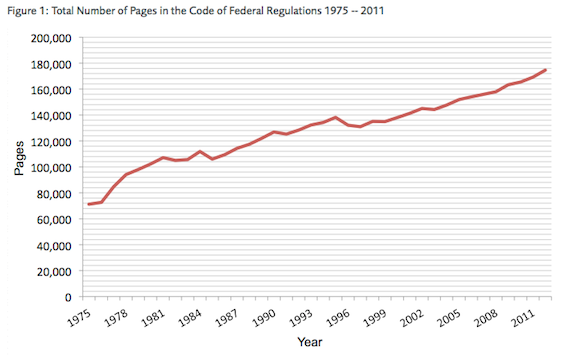

One factor that seems to speak out against this is that starting with Reagan in 1980, and picking up steam with Gingrich in 1994, we got an increasing presence of Republicans in government who declared war on overregulation – but the cost disease proceeded unabated. This is suspicious, but in fairness to the Republicans, they did sort of fail miserably at deregulating things. “The literal number of pages in the regulatory code” is kind of a blunt instrument, but it doesn’t exactly inspire confidence in the Republicans’ deregulation efforts:

Here’s a more interesting (and more fun) argument against regulations being to blame: what about pet health care? Veterinary care is much less regulated than human health care, yet its cost is rising as fast as (or faster than) that of the human medical system (popular article, study). I’m not sure what to make of this.

Fifth, might the increased regulatory complexity happen not through literal regulations, but through fear of lawsuits? That is, might institutions add extra layers of administration and expense not because they’re forced to, but because they fear being sued if they don’t and then something goes wrong?

I see this all the time in medicine. A patient goes to the hospital with a heart attack. While he’s recovering, he tells his doctor that he’s really upset about all of this. Any normal person would say “You had a heart attack, of course you’re upset, get over it.” But if his doctor says this, and then a year later he commits suicide for some unrelated reason, his family can sue the doctor for “not picking up the warning signs” and win several million dollars. So now the doctor consults a psychiatrist, who does an hour-long evaluation, charges the insurance company $500, and determines using her immense clinical expertise that the patient is upset because he just had a heart attack.

Those outside the field have no idea how much of medicine is built on this principle. People often say that the importance of lawsuits to medical cost increases is overrated because malpractice insurance doesn’t cost that much, but the situation above would never look lawsuit-related; the whole thing only works because everyone involved documents it as a well-justified psychiatric consult to investigate depression. Apparently some studies suggest this isn’t happening, but all they do is survey doctors, and with all due respect all the doctors I know say the opposite.

This has nothing to do with government regulations (except insofar as these make lawsuits easier or harder), but it sure can drive cost increases, and it might apply to fields outside medicine as well.

Sixth, might we have changed our level of risk tolerance? That is, might increased caution be due not purely to lawsuitphobia, but to really caring more about whether or not people are protected? I read stuff every so often about how playgrounds are becoming obsolete because nobody wants to let kids run around unsupervised on something with sharp edges. Suppose that one in 10,000 kids get a horrible playground-related injury. Is it worth making playgrounds cost twice as much and be half as fun in order to decrease that number to one in 100,000? This isn’t a rhetorical question; I think different people can have legitimately different opinions here (though there are probably some utilitarian things we can do to improve them).

To bring back the lawsuit point, some of this probably relates to a difference between personal versus institutional risk tolerance. Every so often, an elderly person getting up to walk to the bathroom will fall and break their hip. This is a fact of life, and elderly people deal with it every day. Most elderly people I know don’t spend thousands of dollars fall-proofing the route from their bed to their bathroom, or hiring people to watch them at every moment to make sure they don’t fall, or buying a bedside commode to make bathroom-related falls impossible. This suggests a revealed preference that elderly people are willing to tolerate a certain fall probability in order to save money and convenience. Hospitals, which face huge lawsuits if any elderly person falls on the premises, are not willing to tolerate that probability. They put rails on elderly people’s beds, place alarms on them that will go off if the elderly person tries to leave the bed without permission, and hire patient care assistants who among other things go around carefully holding elderly people upright as they walk to the bathroom (I assume this job will soon require at least a master’s degree). As more things become institutionalized and the level of acceptable institutional risk tolerance becomes lower, this could shift the cost-risk tradeoff even if there isn’t a population-level trend towards more risk-aversion.

Seventh, might things cost more for the people who pay because so many people don’t pay? This is somewhat true of colleges, where an increasing number of people are getting in on scholarships funded by the tuition of non-scholarship students. I haven’t been able to find great statistics on this, but one argument against: couldn’t a college just not fund scholarships, and offer much lower prices to its paying students? I get that scholarships are good and altruistic, but it would be surprising if every single college thought of its role as an altruistic institution, and cared about it more than they cared about providing the same service at a better price. I guess this is related to my confusion about why more people don’t open up colleges. Maybe this is the “smart people are rightly too scared and confused to go to for-profit colleges, and there’s not enough ability to discriminate between the good and the bad ones to make it worthwhile to found a good one” thing again.

This also applies in health care. Our hospital (and every other hospital in the country) has some “frequent flier” patients who overdose on meth at least once a week. They come in, get treated for their meth overdose (we can’t legally turn away emergency cases), get advised to get help for their meth addiction (without the slightest expectation that they will take our advice) and then get discharged. Most of them are poor and have no insurance, but each admission costs a couple of thousand dollars. The cost gets paid by a combination of taxpayers and other hospital patients with good insurance who get big markups on their own bills.

Eighth, might total compensation be increasing even though wages aren’t? There definitely seems to be a pensions crisis, especially in a lot of government work, and it’s possible that some of this is going to pay the pensions of teachers, etc. My understanding is that in general pensions aren’t really increasing much faster than wages, but this might not be true in those specific industries. Also, this might pass the buck to the question of why we need to spend more on pensions now than in the past. I don’t think increasing life expectancy explains all of this, but I might be wrong.

IV.

I mentioned politics briefly above, but they probably deserve more space here. Libertarian-minded people keep talking about how there’s too much red tape and the economy is being throttled. And less libertarian-minded people keep interpreting it as not caring about the poor, or not understanding that government has an important role in a civilized society, or as a “dog whistle” for racism, or whatever. I don’t know why more people don’t just come out and say “LOOK, REALLY OUR MAIN PROBLEM IS THAT ALL THE MOST IMPORTANT THINGS COST TEN TIMES AS MUCH AS THEY USED TO FOR NO REASON, PLUS THEY SEEM TO BE GOING DOWN IN QUALITY, AND NOBODY KNOWS WHY, AND WE’RE MOSTLY JUST DESPERATELY FLAILING AROUND LOOKING FOR SOLUTIONS HERE.” State that clearly, and a lot of political debates take on a different light.

For example: some people promote free universal college education, remembering a time when it was easy for middle class people to afford college if they wanted it. Other people oppose the policy, remembering a time when people didn’t depend on government handouts. Both are true! My uncle paid for his tuition at a really good college just by working a pretty easy summer job – not so hard when college cost a tenth of what it does now. The modern conflict between opponents and proponents of free college education is over how to distribute our losses. In the old days, we could combine low taxes with widely available education. Now we can’t, and we have to argue about which value to sacrifice.

Or: some people get upset about teachers’ unions, saying they must be sucking the “dynamism” out of education because of increasing costs. Other people fiercely defend them, saying teachers are underpaid and overworked. Once again, in the context of cost disease, both are obviously true. The taxpayers are just trying to protect their right to get education as cheaply as they used to. The teachers are trying to protect their right to make as much money as they used to. The conflict between the taxpayers and the teachers’ unions is about how to distribute losses; somebody is going to have to be worse off than they were a generation ago, so who should it be?

And the same is true to greater or lesser degrees in the various debates over health care, public housing, et cetera.

Imagine if tomorrow, the price of water dectupled. Suddenly people have to choose between drinking and washing dishes. Activists argue that taking a shower is a basic human right, and grumpy talk show hosts point out that in their day, parents taught their children not to waste water. A coalition promotes laws ensuring government-subsidized free water for poor families; a Fox News investigative report shows that some people receiving water on the government dime are taking long luxurious showers. Everyone gets really angry and there’s lots of talk about basic compassion and personal responsibility and whatever, but all of this is secondary to the question: why does water cost ten times what it used to?

I think this is the basic intuition behind why so many people, even those who genuinely want to help the poor, are afraid of “tax and spend” policies. In the context of cost disease, these look like industries constantly doubling, tripling, or dectupling their price, and the government saying “Okay, fine,” and increasing taxes however much it costs to pay for whatever they’re demanding now.

If we give everyone free college education, that solves a big social problem. It also locks in a price which is ten times too high for no reason. This isn’t fair to the government, which has to pay ten times more than it should. It’s not fair to the poor people, who have to face the stigma of accepting handouts for something they could easily have afforded themselves if it was at its proper price. And it’s not fair to future generations if colleges take this opportunity to increase the cost by twenty times, and then our children have to subsidize that.

I’m not sure how many people currently opposed to paying for free health care, or free college, or whatever, would be happy to pay for health care that cost less, that was less wasteful and more efficient, and whose price we expected to go down rather than up with every passing year. I expect it would be a lot.

And if it isn’t, who cares? The people who want to help the poor have enough political capital to spend eg $500 billion on Medicaid; if that were to go ten times further, then everyone could get the health care they need without any more political action needed. If some government program found a way to give poor people good health insurance for a few hundred dollars a year, college tuition for about a thousand, and housing for only two-thirds what it costs now, that would be the greatest anti-poverty advance in history. That program is called “having things be as efficient as they were a few decades ago”.

V.

In 1930, economist John Maynard Keynes predicted that his grandchildren’s generation would have a 15 hour work week. At the time, it made sense. GDP was rising so quickly that anyone who could draw a line on a graph could tell that our generation would be four or five times richer than his. And the average middle-class person in his generation felt like they were doing pretty well and had most of what they needed. Why wouldn’t they decide to take some time off and settle for a lifestyle merely twice as luxurious as Keynes’ own?

Keynes was sort of right. GDP per capita is 4-5x greater today than in his time. Yet we still work forty hour weeks, and some large-but-inconsistently-reported percent of Americans (76? 55? 47?) still live paycheck to paycheck.

And yes, part of this is because inequality is increasing and most of the gains are going to the rich. But this alone wouldn’t be a disaster; we’d get to Keynes’ utopia a little slower than we might otherwise, but eventually we’d get there. Most gains going to the rich means at least some gains are going to the poor. And at least there’s a lot of mainstream awareness of the problem.

I’m more worried about the part where the cost of basic human needs goes up faster than wages do. Even if you’re making twice as much money, if your health care and education and so on cost ten times as much, you’re going to start falling behind. Right now the standard of living isn’t just stagnant, it’s at risk of declining, and a lot of that is student loans and health insurance costs and so on.

What’s happening? I don’t know and I find it really scary.

On teachers’ salaries, at least, the NCES data is data for WAGES only, not total compensation. Given their civil service protections, automatic, seniority based promotions, extremely generous benefits and pensions, a picture of flatlining wages is inaccurate. I’d also look at the sheer NUMBER of teachers employed over time, as I guarantee you that, nationwide, student/faculty ratios (to say nothing of student to administrator ratios) were substantially higher 40 years ago.

On regulations, you have to look earlier than 75. The neo-liberal era (roughly 1980 till 2000, or maybe 2008) did slow things down a little, but that wave is clearly spent.

Probably exaggerated. In liberal-as-hell Massachusetts, teachers don’t get “professional status” until their fourth year in a district and work year-to-year contracts before that. It is easy to make teachers’ lives miserable by giving them classes full of awful students, piling on paperwork, etc. until they quit. And NCLB and similar federal programs have given administrations a palette of tools they can use to pile black marks onto teachers’ performance records to justify firing even long-standing teachers with tenure.

“Promotions”? In most schools, the only “promotion” you get from a “teacher” position is “department head”. This isn’t automatic, and it isn’t really a promotion. You get a small stipend, teach one less class, and do a hell of a lot more paperwork.

You mean the guaranteed pay increases? As I mentioned before, they’re only guaranteed starting the fourth year since the school can decline to renew a contract any time before that. And the increases aren’t exactly mind-blowing either. Here’s a fairly typical Massachusetts school where an 11-year veteran with a Ph.D. makes $74,000, less than twice as much as a first-year teacher with a bachelor’s degree at $47,000 (see Appendix A). (One major difference being, the 11-year veteran probably has a mortgage and children.) And you lose all that seniority as soon as you change districts, which is not exactly an uncommon occurrence.

Those salaries are above the national median, but this is metrowest MA where cost of living is fairly high.

Can you be more specific about what’s so generous about the benefits? I don’t think teachers get especially comprehensive insurance plans, even here in Massachusetts where the unions are pretty damned strong. I think they just get the standard bottom-of-the-line Romneycare plan. (And it’s part of their compensation like any other employer-provided health insurance.)

Nowadays, teachers get 403bs which are just mismanaged 401ks.

Most of the drop in student/teacher ratio happened between 1965 and 1985. The ratio has changed very little since 2005 as far as I can tell, while costs have if anything accelerated since then.

Also, in 1965, teachers could hit or humiliate kids who got out of line. Now, teachers aren’t even allowed to give “time out”s. The lack of disciplinary tools available to teachers now decreases the size of the largest manageable class. (I also think culture is relevant to this, where kids used to have more respect for adults in general and teachers in particular.)

>Probably exaggerated. In liberal-as-hell Massachusetts, teachers don’t get “professional status” until their fourth year in a district and work year-to-year contracts before that. It is easy to make teachers’ lives miserable by giving them classes full of awful students, piling on paperwork, etc. until they quit.

Do you know when most people get “impossible to fire status”? Never. And that’s a good thing.

>You mean the guaranteed pay increases? As I mentioned before, they’re only guaranteed starting the fourth year since the school can decline to renew a contract any time before that. And the increases aren’t exactly mind-blowing either. Here’s a fairly typical Massachusetts school where an 11-year veteran with a Ph.D. makes $74,000, less than twice as much as the first-year bachelor’s degree with $47,000 (see Appendix A).

And if that 11-year veteran slacks off and does a lousy job, how does it affect his pay? Not at all.

>Most of the drop in student/teacher ratio happened between 1965 and 1985. The ratio has changed very little since 2005 as far as I can tell, while costs have if anything accelerated since then.

not according to the data presented by massivefocusedinaction

>Also, in 1965, teachers could hit or humiliate kids who got out of line. Now, teachers aren’t even allowed to give “time out”s. The lack of disciplinary tools available to teachers now decreases the size of the largest manageable class. (I also think culture is relevant to this, where kids used to have more respect for adults in general and teachers in particular.)

sure, but that’s not the point of the discussion.

Right. And most people in this case includes public school teachers.

Maybe you misunderstood. “Professional status” just means “you have a stable contract, but we can fire you if you give us cause.” Without professional status, the school can decline to renew the teacher’s contract with no penalties whatsoever to the school. Teacher is SOL if they were depending on having the job and their contract isn’t renewed.

Uh, it will impact it 100% because they will get fired. As I mentioned before, accountability measures like NCLB give administrators a lot of leverage.

I know at least one fantastic teacher who had professional status and a record of year-by-year increasing the percentage of passes on the AP chemistry exam in her class in an otherwise poor-performing school who got fired because she didn’t comply with a bunch of the bullshit busywork ed reform stuff the administration tried to push on her. So not only is it possible to fire a slacker with tenure. It’s possible to fire a demonstrably extremely effective teacher with tenure!

I know, I know, “anecdotal”, but it’s better than any evidence you’ve provided so far.

That’s not a graph of student/teacher ratio. (In fact, separating growth in faculty from growth in student body seems to be used to make the changes harder to compare, whereas just giving the ratio would make the comparison much easier — so this seems a little intentionally misleading.) Here’s one:

http://toolbox.gpee.org/uploads/RTEmagicC_figure_8_studentteacher_ratio.png.png

It’s hard to get good data for the last few years, so I’ll drop the “been flat since 2005” claim in favor of “the drop in student/teacher ratio is linear but the cost increases are superlinear”.

If cultural and behavioral changes are the cause of part of the cost increase, then I think that’s a pretty interesting result, even if it doesn’t give you any justification for maligning school teachers.

I take it you’re walking back the “extremely generous benefits and pensions” part?

Do you know when most people get “stable contract status”? Never.

Not sure why you are playing hide the ball and talking about one school district in one state when we are talking about the national public school system.

I think you misunderstand. “Professional status” for a teacher is the same as “having a W-2” for non-teachers. It’s like a normal salary job where if they fire you without cause you can collect unemployment insurance.

Not having professional status is like having a temp job that lasts one year and your employer has the choice to renew it or not. If they don’t renew it, you don’t get unemployment insurance. You don’t have any recourse. You have no grounds for a wrongful termination suit.

I’m much more secure in my private sector tech job than my wife (I’d expect my bosses to give me at least one warning before firing me), I make about twice as much in salary, I have better benefits.

I don’t work as hard, I’m not appreciably smarter than her, and I probably don’t add nearly as much value to society.

The assumption that I’m playing “hide the ball” seems pretty uncharitable. I’m talking about schools in MA because that’s what I know. I’m also talking about schools in MA because MA has high cost of living, unparalleled public school performance, and disproportionately strong teachers’ unions compared to the rest of the country, so if the accusations of lazy overpaid coddled teachers don’t apply to MA, then it’s hard to see how they’d apply anywhere.

Actually, people in most countries get “stable contract status” as soon as they start a full-time professional job. It’s called, you know, a contract.

@wysinwygymmv

>Maybe you misunderstood. “Professional status” just means “you have a stable contract, but we can fire you if you give us cause.”

Mass teachers get tenure after 4 years, not just professional status. Tenure is repeatedly described as “permanent” in these laws and comes with the full suite of civil service protections, which in practice, amounts to practically un-fireable.

>Without professional status, the school can decline to renew the teacher’s contract with no penalties whatsoever to the school. Teacher is SOL if they were depending on having the job and their contract isn’t renewed.

You mean just like every employee in the private sector who can be fired at will?

1. Tenure and professional status are synonyms.

2. Please provide evidence for the “un-fireable” claim. I know for a fact that it’s possible to fire tenured teachers, because I’ve seen it happen even to teachers who were demonstrably very good at their jobs (as I already mentioned).

No. As I already explained, I am a private sector employee and if I got fired without cause or laid off, I would be able to collect unemployment insurance. My wife does not have professional status, so the school can just decline to renew her contract. She gets no unemployment insurance, no recourse to union intervention, no recourse to wrongful termination suits.

Please read more carefully.

Edit: I haven’t read it yet, but you may find it interesting:

http://haveyouheardblog.com/wp-content/uploads/2016/07/Han_Teacher_dismissal_Feb_16.pdf

Edit 2:

http://www.southcoasttoday.com/article/20140616/NEWS/406160312

That quote is from probably the most powerful teacher’s union in the country.

@wysinwygymmv

>2. Please provide evidence for the “un-fireable” claim. I know for a fact that it’s possible to fire tenured teachers with cause, because I’ve seen it happen even to teachers who were demonstrably very good at their jobs (as I already mentioned).

According to the latest NCES data the average district in Mass has 209 teachers, an average of 2.2 of whom are fired every year for cause. Of those 2.2, 1.9 are nontenured. They don’t have a breakdown of the average tenured/non-tenured ratio, but assuming the national average of 55% tenured teachers, that means 1/5 of one percent of tenured teachers are fired in a given year, vastly below private sector rates, and slightly above the national rate for teachers.
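The arithmetic behind that estimate can be checked with a short sketch. It uses only the figures quoted in this comment, and, as the comment itself does, it assumes the 55% national tenured share applies to Massachusetts. The variable names are illustrative.

```python
# Figures as quoted in the comment (average Massachusetts district).
teachers_per_district = 209
fired_per_year = 2.2       # all teachers fired for cause
fired_nontenured = 1.9     # of those, nontenured
tenured_share = 0.55       # ASSUMPTION: national average applied to MA

# Split the district into tenured and nontenured headcounts.
tenured = teachers_per_district * tenured_share
nontenured = teachers_per_district - tenured
fired_tenured = fired_per_year - fired_nontenured

# Annual for-cause firing rates for each group.
overall_rate = fired_per_year / teachers_per_district
tenured_rate = fired_tenured / tenured
nontenured_rate = fired_nontenured / nontenured

print(f"overall:    {overall_rate:.2%}")
print(f"tenured:    {tenured_rate:.2%}")
print(f"nontenured: {nontenured_rate:.2%}")
```

Under these inputs the tenured rate comes out at roughly a quarter of one percent, in the same range as the "1/5 of one percent" quoted, while the overall rate is about one percent. The result moves with the assumed tenured share, so it is only as good as that assumption.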

>She gets no unemployment insurance, no recourse to union intervention, no recourse to wrongful termination suits.

Again, except for UI, I don’t get any of those things either. Now the UI thing should be fixed, but it’s not the end of the universe and doesn’t outweigh the many perks she gets when she does get tenured.

>“They continue to be evaluated thoroughly. If they are struggling, they must be given guidance on how to improve. If they fail to improve, there is a speedy process of dismissing them,” wrote the MTA.

That they say it doesn’t make it true. The numbers don’t lie. “Due process rights” are precisely the civil service protections I’m talking about. To fire anyone, an employer has to prove to an arbitrator that they are firing them for cause. That is a very large burden; the process can take months or years and doesn’t always work. A year ago, the DC metro caught fire and it was found that the maintainer signing off on that section was faking his records. He appealed his dismissal, arguing that while it was true he faked his records, so did everyone else, so it wasn’t fair to fire him. He got his job back. The union then sued again for not giving it back to him fast enough.

These protections are orders of magnitude more than exist in any private sector workplace, and they are poisonous for efficiency.

Should we be comparing to the private sector average? Isn’t that driven largely by the industries with extremely high turnover (entertainment, hospitality, food service)? Teachers are certainly fired at a low rate, but it’s not clear that the private sector average is driven by poor performance or that high turnover rates actually improve performance in any industry. (Granted, that’s moving the goalposts a bit.)

“I don’t get any of that, except the relevant one!”

Yes, exactly.

Unemployment insurance and tenure are fulfilling the same function, here. People require more stability in their lives than most employers are willing to offer. The solution in most private sector settings is unemployment insurance, and the solution in most educational settings is tenure.

She doesn’t get “many perks” when she gets tenure. She will still have a quarterly review process that is considerably more adversarial than my annual review process, and she can be disciplined and ultimately fired on the basis of poor performance reviews. Again, I’ve seen this happen so “unfireable” is at best an exaggeration. I cannot think of any other “perks” that she gets except for a guaranteed raise that is, in practice, much lower than the increases in pay I’ve consistently gotten in my private sector job over the last 7 years. If anything, that’s the opposite of a “perk”. There is one “perk”, which is tenure, and it is comparable in value to unemployment insurance.

And it can feel like the end of the world if you are trying to buy a house and start a family and those efforts depend on the income from that non-tenured teaching job.

This might or might not cost the company as much as unemployment insurance, depending on the circumstances. In some cases, “orders of magnitude” might be true, but I think that is only rare and isolated cases. I suspect there is not much difference in average.

I think you’re dramatically overestimating the extent to which lower job security benefits efficiency or similar measures in the private sector.

@wysinwygymmv says:

>Should we be comparing to the private sector average? Isn’t that driven largely by the industries with extremely high turnover (entertainment, hospitality, food service)? Teachers are certainly fired at a low rate, but it’s not clear that the private sector average is driven by poor performance or that high turnover rates actually improve performance in any industry. (Granted, that’s moving the goalposts a bit.) And remember, we’re not counting voluntary separations, but actual removals for cause.

I’m happy to credit this argument IF you can show evidence for it.

>“I don’t get any of that, except the relevant one!”

My point is that the others are far more relevant.

>Unemployment insurance and tenure are fulfilling the same function, here.

No, they don’t. UE doesn’t prevent your employer from getting rid of you if you do a bad job. Tenure does.

>She will still have a quarterly review process that is considerably more adversarial than my annual review process, and she can be disciplined and ultimately fired on the basis of poor performance reviews.

I’ve already shown the evidence that they are NOT more adversarial, at least when it comes to actual firings.

>Again, I’ve seen this happen so “unfireable” is at best an exaggeration.

Anecdotes are not data. The data says that tenured teachers are almost never fired.

>I cannot think of any other “perks” that she gets except for a guaranteed raise that is, in practice, much lower than the increases in pay I’ve consistently gotten in my private sector job over the last 7 years.

You probably got those raises because you did a good job. I assume your wife also did a good job, and didn’t get them. But that’s not the point; the point is that if she HADN’T done a good job, she’d still have gotten her guaranteed raises. If you hadn’t done a good job, you’d have gotten nothing, or maybe been fired. You’re considering only the upside for good workers and ignoring the bad. I fully grant you that, if you want to work hard, make a difference, and do a good job, public sector employment can be a raw deal, but that’s precisely the problem. The system, on the whole, refuses to either punish bad behavior or reward good, which results in a lot more bad behavior.

> If anything, that’s the opposite of a “perk”. There is one “perk”, which is tenure, and it is comparable in value to unemployment insurance.

Mathematically, inability to be fired is vastly more valuable than part of your salary if you are fired.

>This might or might not cost the company as much as unemployment insurance, depending on the circumstances.

It’s not just the cost to the company, it’s cost to the managers who have to fill out all the paperwork and build a court case against bad employees.

>I think that is only rare and isolated cases. I suspect there is not much difference in average.

I’ve shown you evidence to the contrary. Please show me what makes you suspect the opposite.

I was more specific than you are taking into account: they are both filling the gap between the employee’s need for stability and the employer’s unwillingness to provide that stability. Yes, there are other effects that may be more or less desirable depending. For the record, I don’t mind considering the replacement of tenure with unemployment insurance for teachers. That’s just not the situation as it stands today.

The thing is, you only have really indirect evidence for bad behavior. If we assume you’re right on with the extent to which public school teachers are too safe from firing, then I think you’re vastly overestimating:

-the ease with which private sector employees are fired

-the positive effect such firings have on performance for private firms

-the negative effect on school performance of the difficulty to fire teachers

You’ve shown me evidence that federal employees are very hard to fire. You haven’t shown me evidence that this causes lots of inefficiency.

You also claimed that the difference in difficulty in firing was “orders of magnitude”, but this article says the private sector average is 3% and you previously cited statistics showing that MA fires public school teachers at a rate of 1% (including non-tenured), so at best I think you’ve vastly overstated your case.
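As a rough check on the "orders of magnitude" wording, the gap between two firing rates can be expressed as a base-10 logarithm of their ratio. The sketch below uses the rates quoted in this thread (3% private sector, about 1% for all Massachusetts teachers, about 1/5 of one percent for tenured teachers); the helper name is hypothetical, and the inputs are the commenters' figures rather than verified data.

```python
import math

# Rates as quoted in the thread; none are independently verified here.
private_rate = 0.03        # private sector average cited above
all_teachers_rate = 0.01   # MA teachers, tenured and non-tenured together
tenured_rate = 0.002       # "1/5 of one percent" for tenured teachers

def orders_of_magnitude(higher: float, lower: float) -> float:
    """Return log10 of the ratio between two rates (1.0 means a tenfold gap)."""
    return math.log10(higher / lower)

print(f"private vs all teachers: {orders_of_magnitude(private_rate, all_teachers_rate):.2f}")
print(f"private vs tenured:      {orders_of_magnitude(private_rate, tenured_rate):.2f}")
```

On these inputs the gap is under half an order of magnitude when all teachers are counted and a little over one order of magnitude for tenured teachers alone, so which comparison is used matters a great deal.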

I think you’ve fairly proven that it’s hard to fire tenured teachers, but you haven’t proven that this has much impact on school performance. Maybe we should look at the performance of schools compared to percentage of workforce who are tenured. My hypothesis: schools with higher percentages of tenured teachers will perform better than schools with lower percentages of tenured teachers.

This is because schools with more tenured teachers will have higher morale among teachers, there will be more solid working relationships between teachers and administrators, teachers will have more community ties, and teachers will on average be more experienced.

@wysinwygymmv says:

>I was more specific than you are taking into account: they are both filling the gap between the employee’s need for stability and the employer’s unwillingness to provide that stability.

That people might want them for the same reasons does NOT make them the same. Those other effects are important.

>The thing is, you only have really indirect evidence for bad behavior.

I have plenty of direct evidence. Please stop moving goalposts.

>I’ve shown you evidence to the contrary. Please show me what makes you suspect the opposite.

No you haven’t. However, the contrary evidence is easy, private school teachers produce better results AND are paid less.

>You also claimed that the difference in difficulty in firing was “orders of magnitude”, but this article says the private sector average is 3% and you previously cited statistics showing that MA fires public school teachers at a rate of 1% (including non-tenured),

You’re misrepresenting what I said. I said tenured teachers were harder to fire, and I was right. Their rate of firing is 1/5 of one percent, more than an order of magnitude less.

>My hypothesis: schools with higher percentages of tenured teachers will perform better than schools with lower percentages of tenured teachers.

Show evidence of that. I’ve shown evidence to the contrary.

To make an apples-to-apples comparison, though, you need to compare private sector employees fired after at least four years of continuous employment to firing rates for tenured teachers. I suspect they’d probably be fairly comparable.

Many (from my experience, the vast majority) people who are going to be problem employees are pretty evidently problem employees within their first year. A four-year probationary period is going to weed out a huge number of potential problems before they ever make it into your “tenured teacher” category.

I’m curious about the claim that if a teacher’s contract is not renewed she doesn’t get unemployment insurance. That seems odd. Could you explain why? I don’t know the rules in Massachusetts, but checking the web page for the program in California, under “eligibility” I find:

To be entitled to benefits, you must be:

Out of work due to no fault of your own.

Physically able to work.

Actively seeking work.

Ready to accept work.

Why wouldn’t an unemployed teacher qualify? Looking down the web page I don’t find any exception for teachers.

Doing a search for “teacher”, it’s clear that teachers can receive unemployment insurance. There’s a discussion of special circumstances when they can’t (if the claim is filed during a recess period and they have an offer from the school to go back to work when the recess is over).

Here is the page for Massachusetts. It has a list of categories of workers that are not eligible, and teachers are not on it.

A search for “teacher” finds:

So as far as I can tell, your claim is not true for Massachusetts. Perhaps you can find something on the web page to support it?

@DavidFriedman: Any “temp” job (that is, a job with a defined end date, like a 1-year-long teacher contract) is ineligible for unemployment benefits. (I can only speak to OH and NC but I assume all States are the same there. Otherwise, you could get unemployment compensation after your summer internship, term of elected office, etc.)

Here are some articles describing how teachers in NYC cannot be fired. http://www.nydailynews.com/new-york/education/city-spend-29m-paying-educators-fire-article-1.1477027

http://nypost.com/2015/03/21/nyc-schools-removed-289-teachers-but-only-fired-9/

In fact, in NYC there is a term for this “The Rubber Room”, see http://www.newyorker.com/magazine/2009/08/31/the-rubber-room

I don’t know what the correct rate for firing teachers is.

I do know that 0.5% is too low. Anyone who has worked knows that the thought that you could get together 200 of your coworkers — even skipping those who had only been on the job a year or two to leave short-termers out of your pool — and find only one slacker is nonsense.

Again, I don’t know what the correct firing rate is.

For separate anecdote, my dad got unemployment when he was let go from a private school at the end of the school year.

And according to the Massachusetts Teachers Association, laid-off teachers can collect unemployment: http://www.massteacher.org/memberservices/~/media/Files/legal/mta_rif_booklet_2014_web.pdf

I don’t think this is true (in any state). Do you have cites? I looked at several places that implied temp jobs always get unemployment, like this one.

I’ve been doing temp work for about 12 years now, and I think I’ve always qualified for unemployment, although I’ve never collected on it. Of course it is possible I qualify where I live (Minnesota), and wouldn’t in other states, but I don’t think so.

Edit: I should add that I’ve heard quite often of workers that just work in the summer and live off of unemployment every winter. Anecdotal, so maybe not true, but again, I think it is true.

Teachers in PA [not national, but a lot better than anecdotal, and a fairly representative and large state] have a $50 billion pension fund. Last decade the amount they get on retirement was raised 25%, and the years required dropped from 10 to 5 under Gov. Rendell. They also have gold-plated benefits. The amount the state is paying for teachers’ pensions was under $3B annually a couple of years ago, was $4.4B last year, and will rise to $5.2B in a few years, and that is just for the pension.

The avg HS teacher in my district makes $66k in salary, and $31k in benefits [this is all published, public data.] Longer tenured teachers make up to $97k in salary. You can also sort by ‘specialty’ and with Ns = 1 you get some interesting data: The kindergarten music teacher, Master’s degree, 20 years exp is earning over $125k in salary and bennies per year. For 9 months work.

Of course, senior administrators make significantly more than that!

PSERS costs are approximately 33% of school budgets already. Again, that’s just pension dollars, and not benefits or salaries.

With pensions, it’s less important what teachers get nowadays when they join than what they get when they leave – i.e. what was promised to them 20 or 30 years ago.

Also, if it’s so easy to fire a teacher, how do you explain the phenomenon of “rubber rooms” in e.g. New York, where teachers essentially spend years doing nothing because they can’t be allowed to teach (because of some fault) but can’t be fired either? This HuffPo article claims it costs $22M a year:

http://www.huffingtonpost.com/2012/10/16/rubber-rooms-in-new-york-city-22-million_n_1969749.html

From the collective bargaining agreement you linked

How many places of work guarantee a higher salary for more education, while also paying for that education?

Lots of interesting incentives for not using sick leave

Looking through this it appears as if this would increase to up to $84,000 a year if you hit specific sick leave incentives, and could be augmented with other activities (up to ~$16,000 a year for a dual role of head football and baseball coach appears to be the max), for 183 scheduled work days a year.

This link has a schedule of retirement benefits for Massachusetts teachers starting after 2012. How generous these packages are depends on the contribution rates etc., but very few private salaried positions have anything like defined retirement benefits these days.

Doing a quick scroll through this contract I would say that none of the individual benefits listed seem excessive, but the sum total actually might well be. Frequently in the private sector you have the opportunity to work for a place with either good retirement benefits, or good health benefits, or good vacation packages, but it is pretty rare to have all three available along with other perks (continued education benefits).

We’re talking about the distinction between teachers and the rest of us. The fact that a bad boss can selectively try to make a teacher miserable is a red herring. The issue is why they are forced to resort to doing so, instead of just firing you. And the answer, “they’re not at-will employees, and they have the backing of a union,” is an enormous job benefit.

Pay increases.

Yup! In my town it’s 5%, like clockwork, good times or bad.

First, what percentage of contracts are not renewed? I don’t think it’s many.

Second, do you realize that DURING a contract–IOW, during one of those four years–the teacher still has more protection than any at-will employee, which is almost everyone else?

One wonders how many PhDs are working in public schools, outside administration. Answer: one percent, and I’d bet good money that a lot of them have administration roles. And of course one wonders what they’d be worth elsewhere (if they were good enough to teach college they’d probably be doing it), so I don’t know if this is much data. More to the point,

Another major difference is probably that the PhD is paid for in whole or in part by the school while the teacher is working, unlike for many folks. And a lot of them are in an edu-field (pedagogy) and not a hard field (math), so they are merely an administrator grooming tool.

Well, most obviously you get roughly 16 weeks of vacation instead of the normal 2-3 weeks. You also get a pension in many cases, and the above-mentioned job security; and the benefit of a union; and paid training; and…

Dunno, but….

Sure, if the employer got to tax the public to pay for it. Our district pays teachers most of their insurance costs.

Later on, you mention this, which I will depersonalize:

I don’t know about you, but for many people: Yes you do, and yes you are.

W/r/t work: Teachers put in long days like many folks–40 to 50 hour weeks are not uncommon–but they don’t put in MORE work than most other degreed professionals. Certainly not if you count the vacation. Yeah, they have occasional long days but so do we all.

W/r/t intelligence: If you’re dealing with an education major then yes, the chances are that your average STEM-major SSC reader is in fact smarter than the teacher.

Saw this table recently: http://www.aei.org/publication/friday-afternoon-links-21/ which suggests that teaching and admin staff have grown much faster than student numbers.

Also, given that pensions cost very little immediately (or at least can be made to seem so) and are a good way to placate unions, I’d expect pension commitments to increase for some time without a noticeable effect on costs, until people start retiring and it turns out the pension fund is underfunded and needs more money in it. Which might be where we are now.

I think you mean “underpaid and overworked”?

(typo thread)

“people can just increase costs and not suffer any increased demand because of it” -> decreased demand

“second,,” (double comma)

“elderly people live deal with it every day” -> deal

Also, in one of the first quotes, Scott writes “they conclude:” and then the quote starts with “based on x we conclude”. I would edit that to reduce redundancy.

“we se similar effects”

“grandchildrens’” -> “grandchildren’s”

“I think this is the basic intuition behind so many people, even those who genuinely want to help the poor, are afraid of “tax and spend” policies.” -> “…behind *why* so many people” ?

they comes in, get treated for their meth overdose

comes -> come

why does water costs

costs -> cost

Having worked in a school, I’d be inclined to accept Gazeboist’s correction, but to be fair, they have to teach classes of twelve to eighteen year olds all day, five days a week.

A million dollars/pounds/euro a year wouldn’t be enough to get me to do that. Plus these days, the amount of paperwork and box-ticking is crazy, and you can’t give a misbehaving student a clip round the ear/slap with the báta while they can swear at you, throw things at you, and physically assault you. Teachers are also being asked to be child-minders, do child-rearing (teaching them things their parents should be teaching them about how to be a human being), social workers and more.

From some angles, it’s a cushy job. From others, you need to be really dedicated to it because it’s not for everyone.

*Shrug*.

In isolation, it could have been either (though of course they would imply different things about his views), but in context Scott clearly meant the opposite of what he initially wrote.

I’d look further at the ratio of administrators to teachers/doctors etc, and the cost of complying with regulation for the fairly decentralized health and education sectors. Which line looks more like the education cost line?

Regarding your question on markets working, one of these things is delivered by a mostly free market, the other isn’t: Google’s first hit for Deplin®, official prescription L-methylfolate, costs $175 for a month’s supply; unregulated L-methylfolate supplement delivers the same dose for about $30.

Why can’t a manufacturer of L-methylfolate supplements undercut the prescription sellers? That’s why markets aren’t delivering the cost savings they should be.

Saying regulations increase prices and limit market mobility is not something new or original, but part of the question is whether the cheaper price of the free market is actually worth it. Unless I fundamentally misunderstand what a grey-market is, I would imagine that the grey-market dealers are not going to be as cautious about their doses, or as picky about how they fabricate the materials. Part of the price of medicine is paying for the FDA-approved label, whether it’s through the better treatment of workers or the better quality material. The biggest problem with this is the fact that we don’t seem to be treating the workers better, nor getting better quality materials. (at least in the fields of education, medicine, and public works)

Why does it have to be the FDA? Why can’t it be some private credentialing organization that customers can choose to pay attention to or not?

If you want to convince us to buy grey-market pharmaceuticals, shouldn’t you be answering that question? Why aren’t there any private credentialing organizations to tell which grey-market products are high quality? If there is one, why should I trust it? I know the FDA has a long reputation of strict certification; if you name some competing organization I’ve never heard of, it might be a random scam.

Because the FDA is mandated by government fiat? Duh…?

There’s no point in a private organization setting safety requirements because the FDA has already set the minimum safety requirements way way too high (on average) and nobody can reduce it (short of fundamental changes to the law). Any organization doing this at a large enough scale to get noticed by the doctors would be noticed by the FDA and probably sued out of existence.

Also, I’m not trying to get you to buy gray-market pharmaceuticals. Where did you get that idea? I’m trying to point out that safety rules/screening don’t automatically imply the FDA.

One example here is helmets. They all have to be DOT certified (in the US). However there is a private organization with higher requirements (Snell). Some helmets get Snell certification and some do not. The distinction is generally understood (at least when it comes to motorcycle helmets) and some customers pay attention to Snell certification and some do not.

The benefit of Snell is that it is possible to set up competitor organizations with different standards without going through the heavy-weight process of legislation. It is also possible to have gradations for different people with different price and risk tolerances.

My question is: why can’t we do the same for drugs?

For the specific case of nootropics, I think there’s a reddit board that does exactly that.

Whether it’s trustworthy I don’t know.

You’re right, my response was off-the-cuff and I didn’t think carefully about what exactly you were trying to argue for.

@newt0311

The system of having an independent organization like Snell works as long as there is just one of them. Once you have multiple rating agencies competing against each other to get the helmet manufacturers’ business the incentive scheme becomes perverse. Snell would be incentivized to give as many helmets as high a rating as possible because some other rating org could come in and undercut them. If I were building helmets why would I bring them to Snell if I can get an A+ rating from some other organization?

Reputation.

@CarpathoRusyn: By this logic, shouldn’t increased competition in, say, movie or video game review sites result in a race to the bottom where they’re all awarding everything 10/10 in a scramble for favors from studios? And yet that’s not what we see; if anything, the proliferation of review channels on the internet has made reviews more honest and critical in the last 15 years compared to the era before that where entertainment products were mostly reviewed by a few major magazines.

Personally, I’m far less trusting of rating and review agencies that don’t have competitors to verify their results and keep them honest. It massively increases the potential for bribery, pressure and other malfeasance.

@CarpathoRusyn: the competition is two-sided. As a consumer, would you trust a rating slapped on every helmet in the shop?

> Why can’t a manufacturer of L-methylfolate supplements undercut the prescription sellers?

Because quartering the price is probably not going to quintuple the sales.
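To put rough numbers on that: a quick sketch of how much sales would have to grow for a price cut to leave profit unchanged. The $175 price is the Deplin figure quoted upthread; the $10 unit cost and the function name are made up purely for illustration, not real manufacturing data.

```python
def required_sales_multiple(price: float, unit_cost: float, cut_factor: float) -> float:
    """Multiple of current unit sales needed to keep total profit the same
    after dividing the price by cut_factor.

    unit_cost is a hypothetical per-unit cost, assumed constant at any volume.
    """
    old_margin = price - unit_cost
    new_margin = price / cut_factor - unit_cost
    if new_margin <= 0:
        raise ValueError("the new price does not cover the unit cost")
    return old_margin / new_margin


# Quartering a $175 price with an assumed $10 unit cost:
multiple = required_sales_multiple(175, 10, 4)
print(round(multiple, 2))  # about 4.89, i.e. nearly quintuple
```

With zero unit cost the answer is exactly 4x (revenue break-even); any real per-unit cost pushes the required multiple above that, which is the point of the one-liner above.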

Regulated manufacturers quite likely have lower actual manufacturing costs, as they have larger scale. They have larger scale because most people want and can afford regulated drugs. Prices for those drugs are high because people tend to prefer not to die in horrible pain, and so place a high value on things that prevent that. Most of the money earned from a high price goes into marketing to turn that high potential value into a high price actually paid. Product development is just a special case of this; a drug that works better is easier to market.

The grey-market sellers have to massively undercut that to make it worth their while. They can still make a profit by leeching off the regulated sellers’ marketing.

I suspect this dynamic affects all the cases of cost inflation; more GDP means people have more money, so they are open to being persuaded to spend more on the really valuable stuff. That persuasion is expensive.

Perhaps I’m not asking my question well. Why aren’t consumers with a prescription able to purchase the $30 supplements and keep the savings? (Surely there are some cheapskates; I know I’m one.) In other words, why aren’t the supplement makers capturing the expansion they could get by competing with the $170 prescription drug makers?

For the specific case of Deplin/Metafolin vs. grocery store folate supplements, the answer is basically that consumers are perfectly free to rip up their prescription, buy supplements and pocket the difference.

However, doctors are not free to advise them to do this, from the risk-avoidance perspective outlined in this post, because if anything goes wrong the patient then has a potential malpractice lawsuit. Doctors theoretically have a free hand to prescribe off-label, suggest supplements or alternative therapies, but deviation from standard-of-care opens them up to increased liability. The “FDA Approved” stamp serves to cover their ass, and since they’re not the ones paying the cost difference they have zero-to-negative incentive to prescribe cheap supplements. Meanwhile, most patients don’t have the savvy or swaggering self-confidence to go around ripping up their prescriptions (and arguably they don’t directly pay the added cost, either) so they go along with it.

Our illustrious host goes into this at length in Fish – Now By Prescription:

“Is the public getting any service from LOVAZA™®© and DEPLIN™®©?

I say: yes! The companies behind these two drugs are doing God’s work; they are making the world a much better place. Their service is performing the appropriate rituals to allow these substances into the mainstream medical system.”