A machine learning researcher writes me in response to yesterday’s post, saying:

I still think GPT-2 is a brute-force statistical pattern matcher which blends up the internet and gives you back a slightly unappetizing slurry of it when asked.

I resisted the urge to answer “Yeah, well, your mom is a brute-force statistical pattern matcher which blends up the internet and gives you back a slightly unappetizing slurry of it when asked.”

But I think it would have been true.

A very careless plagiarist takes someone else’s work and copies it verbatim: “The mitochondria is the powerhouse of the cell”. A more careful plagiarist takes the work and changes a few words around: “The mitochondria is the energy dynamo of the cell”. A plagiarist who is more careful still changes the entire sentence structure: “In cells, mitochondria are the energy dynamos”. The most careful plagiarists change everything except the underlying concept, which they grasp at so deep a level that they can put it in whatever words they want – at which point it is no longer called plagiarism.

GPT-2 writes fantasy battle scenes by reading a million human-written fantasy battle scenes, distilling them down to the concept of a fantasy battle scene, and then building it back up from there. I think this is how your mom (and everyone else) does it too. GPT-2 is worse at this, because it’s not as powerful as your mom’s brain. But I don’t think it’s doing a different thing. We’re all blending experience into a slurry; the difference is how finely we blend it.

“But don’t humans also have genuinely original ideas?” Come on, read a fantasy book. It’s either a Tolkien clone, or it’s A Song Of Ice And Fire. Tolkien was a professor of Anglo-Saxon language and culture; no secret where he got his inspiration. A Song Of Ice And Fire is just the Wars of the Roses with dragons. Lannister and Stark are just Lancaster and York, the map of Westeros is just Britain (minus Scotland) with an upside-down Ireland stuck to the bottom of it – wake up, sheeple! Dullards blend Tolkien into a slurry and shape it into another Tolkien-clone. Tolkien-level artistic geniuses blend human experience, history, and the artistic corpus into a slurry and form it into an entirely new genre. Again, the difference is how finely you blend and what spices you add to the slurry.

“But don’t scientists have genuinely original ideas?” Scientists are just finding patterns in reality nobody has ever seen before. You say “just a pattern-matcher”, I say “fine, but you need to recognize patterns in order to copy them, so it’s necessarily a pattern-recognizer too”. And Einstein was just a very good pattern-recognizer.

“But don’t humans have some kind of deep understanding that pattern-recognition AIs don’t?”

Here’s a fun question: the human brain is undoubtedly the most powerful computer in the known universe. In order to do something as simple as scratch an itch it needs to solve exquisitely complex calculus problems that would give the average supercomputer a run for its money. So how come I have trouble multiplying two-digit numbers in my head?

The brain isn’t directly doing math, it’s creating a model that includes math and somehow doing the math in the model. This is hilariously perverse. It’s like every time you want to add 3 + 3, you have to create an entire imaginary world with its own continents and ecology, evolve sentient life, shepherd the sentient life into a civilization with its own mathematical tradition, and get one of its scholars to add 3 + 3 for you. That we do this at all is ridiculous. But I think GPT-2 can do it too.

Janelle Shane asks GPT-2 its ten favorite animals:

Prompt: My 10 favorite animals are: 1.

My ten favorite animals are:

1. Zebras with a white scar on the back

2. Insiduous spiders and octopus

3. Frog with large leaves, hopefully black

4. Cockatiel with scales

5. Razorbill with wings hanging about 4 inches from one’s face and a heart tattoo on a frog

3. Cockatric interlocking tetrabods that can be blind, cut, and eaten raw:

4. Black and white desert crocodiles living in sunlight

5. Zebra and many other pea bugs

Forget the particular animals for now (don’t worry, the razorbill with wings hanging about 4 inches from one’s face will be back in your nightmares). Notice the way it numbers its list: 1, 2, 3, 4, 5, 3, 4, 5. Last week the two-year-old child who lives next to me counted 1, 2, 3, 4, 5, 6, 7, 8, 9, 11, 8, 9, 7, 8, 9, 7, 8, 9 (his mother warns this is an inexact transcription). GPT-2 is instantiated on giant supercomputers; it’s a safe bet they could calculate the square root of infinity in a picosecond. But it counts more or less the same way as a two-year-old. GPT-2 isn’t doing math. It’s doing the ridiculous “create a universe from first principles and let it do the math” thing that humans do in their heads. The fact that it counts so badly suggests it’s counting human-style, which makes it amazing that it can count at all.

I find something similar in this post from Tumblr user antinegationism, playing with the lobotomized public-release version of the system:

The American Association for Suicide Prevention and Life-Threatening Behavior (AAPSLB), a professional organization with an overarching goal of promoting suicide prevention, released a recent video encouraging the public to think before they act, on the public’s part. “When we say we’re the only reason to commit suicide, we’re saying they’re wrong. It’s the right thing to do,” said AAPSLB president Steven A. Anderson.

The American Association For Suicide Prevention And Life-Threatening Behavior is not a real organization; the AI made it up as the kind of organization that it thought would feature in a story like this. And AAPSLB is not quite the right way to acronymize the organization’s name. But it’s clearly an attempt at doing so. It’s very close. And nobody taught it how to do that! It’s not just that nobody programmed it in. It’s that nobody thought “Today I shall program an AI to learn how to acronymize on its own in an unsupervised way”. GPT-2 was just programmed to predict text from other text, nothing else. It’s second-level not programmed in. It just happened!
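For comparison, here is a rough sketch of the rule the model was approximating. It assumes one common convention (skip short function words like “for” and “and”, take the first letter of everything else); the word list and the `acronym` helper are illustrative, not anything GPT-2 contains:

```python
# Function words that acronyms conventionally skip (an assumed, not exhaustive, list).
MINOR_WORDS = {"a", "an", "and", "for", "in", "of", "on", "the", "to"}


def acronym(name: str, split_hyphens: bool = False) -> str:
    """Build an acronym from the initial letters of the name's major words."""
    if split_hyphens:
        # Treat "Life-Threatening" as two words, contributing "LT" instead of "L".
        name = name.replace("-", " ")
    words = name.split()
    return "".join(w[0].upper() for w in words if w.lower() not in MINOR_WORDS)


name = "American Association for Suicide Prevention and Life-Threatening Behavior"
print(acronym(name))                      # AASPLB
print(acronym(name, split_hyphens=True))  # AASPLTB
```

Under this convention the expected acronym is AASPLB (or AASPLTB if the hyphenated word counts twice). GPT-2’s AAPSLB has exactly the right letters, with S and P swapped.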

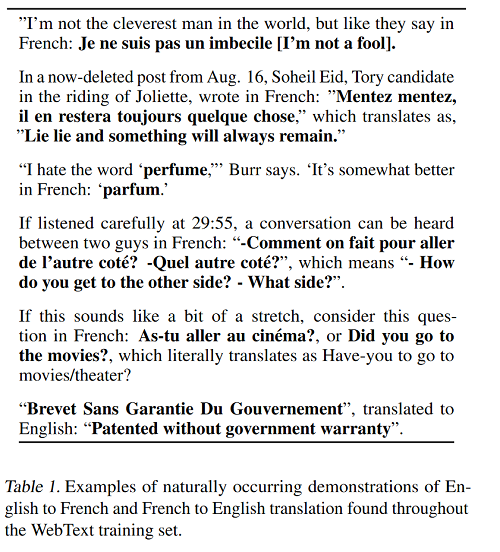

And, uh, it seems to have figured out how to translate things into French. This part is from the official paper:

We test whether GPT-2 has begun to learn how to translate from one language to another. In order to help it infer that this is the desired task, we condition the language model on a context of example pairs of the format ENGLISH SENTENCE = FRENCH SENTENCE and then after a final prompt of ENGLISH SENTENCE = we sample from the model with greedy decoding and use the first generated sentence as the translation. On the WMT-14 English-French test set, GPT-2 gets 5 BLEU, which is slightly worse than a word-by-word substitution with a bilingual lexicon inferred in previous work on unsupervised word translation (Conneau et al., 2017b). On the WMT-14 French-English test set, GPT-2 is able to leverage its very strong English language model to perform significantly better, achieving 11.5 BLEU. This outperforms several unsupervised machine translation baselines from (Artetxe et al., 2017) and (Lample et al., 2017) but is still much worse than the 33.5 BLEU of the current best unsupervised machine translation approach (Artetxe et al., 2019). Performance on this task was surprising to us, since we deliberately removed non-English webpages from WebText as a filtering step.
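To make the prompt format concrete, here is a minimal sketch of how such a few-shot context might be assembled. The paper only specifies the “ENGLISH SENTENCE = FRENCH SENTENCE” pattern ending in a bare “=”; the example sentences, the newline separator, and the `build_translation_prompt` helper are assumptions for illustration:

```python
def build_translation_prompt(pairs: list[tuple[str, str]], query: str) -> str:
    """Assemble a few-shot prompt in the 'ENGLISH SENTENCE = FRENCH SENTENCE' format."""
    lines = [f"{english} = {french}" for english, french in pairs]
    # The final line stops at "=", leaving the French side for the model to predict.
    lines.append(f"{query} =")
    return "\n".join(lines)


# Hypothetical example pairs; the paper's actual context pairs are not given here.
examples = [
    ("The cat is black.", "Le chat est noir."),
    ("I like bread.", "J'aime le pain."),
]
prompt = build_translation_prompt(examples, "The house is big.")
print(prompt)
```

The model never sees an instruction like “translate”; the only cue is the repeated pattern, which it continues after the last “=”. Per the paper, decoding is then greedy and only the first generated sentence is kept.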

In other words: GPT-2 is very bad at translating French into English. But the researchers were surprised to see it could do this at all, since they didn’t design it as translation software, didn’t ask it to learn translation, and deliberately filtered non-English webpages out of its training data. It seems to have picked up this ability from noticing a few naturally-occurring examples of French in English text.

And here’s One Weird Trick to make GPT-2 summarize articles:

We test GPT-2’s ability to perform summarization on the CNN and Daily Mail dataset (Nallapati et al., 2016). To induce summarization behavior we add the text TL;DR: after the article and generate 100 tokens with Top-k random sampling (Fan et al., 2018) with k=2 which reduces repetition and encourages more abstractive summaries than greedy decoding. We use the first 3 generated sentences in these 100 tokens as the summary. While qualitatively the generations resemble summaries, as shown in Table 14, they often focus on recent content from the article or confuse specific details such as how many cars were involved in a crash or whether a logo was on a hat or shirt. On the commonly reported ROUGE 1,2,L metrics the generated summaries only begin to approach the performance of classic neural baselines and just barely outperforms selecting 3 random sentences from the article. GPT-2’s performance drops by 6.4 points on the aggregate metric when the task hint is removed which demonstrates the ability to invoke task specific behavior in a language model with natural language.
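For readers unfamiliar with top-k sampling, here is a sketch of a single sampling step with k=2 over a toy table of next-token scores. The scores and the `top_k_sample` helper are invented for illustration; the real model scores its whole vocabulary of about 50,000 subword tokens at every step:

```python
import math
import random


def top_k_sample(logits: dict[str, float], k: int, rng: random.Random) -> str:
    """Sample one token from the k highest-scoring candidates (top-k sampling)."""
    # Keep only the k most likely tokens; everything else gets zero probability.
    top = sorted(logits.items(), key=lambda item: item[1], reverse=True)[:k]
    # Softmax over the surviving scores (subtracting the max for numerical stability).
    max_logit = max(score for _, score in top)
    weights = [math.exp(score - max_logit) for _, score in top]
    tokens = [token for token, _ in top]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical next-token scores after an article followed by "TL;DR:".
logits = {"The": 2.0, "A": 1.5, "Police": 0.5, "banana": -3.0}
rng = random.Random(0)
samples = [top_k_sample(logits, k=2, rng=rng) for _ in range(1000)]
print(sorted(set(samples)))  # ['A', 'The']
```

Because only the two best candidates survive, k=2 stays close to greedy decoding while still varying the output, which matches the paper’s stated reason for the choice: less repetition than pure greedy decoding.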

Again, GPT-2 isn’t good at summarizing. It’s just surprising it can do it at all; it was never designed to learn this skill. All it was designed to do was predict what words came after other words. But there were some naturally-occurring examples of summaries in the training set, so in order to predict what words would come after the words tl;dr, it had to learn what a summary was and how to write one.

If you’re me, the obvious next question is “can it do poetry”. I am very interested to know if the real thing can manage this, but for now I will have to satisfy myself with the lobotomized public-release version. Given Alexander Pope’s Essay On Criticism as a prompt, it says:

O man, who pains the tongue magnified by tymolle,

Though all the fair melodies let no door go as dark as good art.

Yet it would have been, unless the mastermind is so full

Of his own want that this literary country might grow.

Perfect student, thou hast trodden out.

Happy students ruin the purity of what you write ‘Tis art,

For unordered use of decade,’ but art only failing:

A surprise, for new veins which build behind;

It understands there should be line breaks, it understands the approximate correct length of a line of iambic pentameter, it understands how to talk like an overeducated 18th-century dandy – but it doesn’t appreciate rhyme or meter. In retrospect this isn’t surprising; GPT has no idea words sound like anything; it would be shocked to learn anyone uses language as anything other than text strings.

But human poets haven’t appreciated rhyme or meter either for at least a century, so GPT-2 should be able to compose some wicked modern poetry. Friends-of-the-blog Chelsea V. and Qiaochu Y. got it to write a few more stanzas of Allen Ginsberg’s Moloch (human-curated to remove fluff and improve flow):

Moloch whose brief mind is the barrel of gleaming stars! Moloch whose soul is choices of fragrance! Moloch whose framing is glitz and half-cheerios!

Moloch whose daily life is private; his daily strength hoarse! Moloch whose soft desires become culture burning smoke in a palace of eyes! Moloch whose breeding in the caves sweeps wild and rippling flower-like shapes!

Moloch whose pleading is the breath of youth! Moloch whose prudence is the urine of generations! Moloch whose nerves are not privileged; movies abound in quiet caves; people are dressed like ministers! Moloch whose hope receives some help too. Moloch whose eyes are pitiful outstretched, but not blinded. Moloch whose lungs are burning with fire, but not a cautious electrical girl!

Moloch whose sanity is just filled with atoms, gluing beings in physics and complicated libraries. Moloch whose eyes are all innocent animals! Moloch whose sweat and efforts make pure the ocean with burning annihilation! Moloch whose fields dissolve to lightning-ravaged storage houses! Moloch whose eyes sit haunting up Scottish springs!

Moloch whose arms and men are Judah condemns and listens, having understood whom he was and what he man’s weaknesses oppose with iron. We will feast to it like Lord Kippa; we shall play with it like our Pokemon.

Moloch flickering under the pounds and sustaining more fights! Sports and yoga, equally greedy for wealth as for lives! Moloch who had to split his limbs and society into fifty-two atoms! Moloch who cannot survey duty! Moloch for I do not believe in humanity! Moloch for I don’t believe in god! Moloch who, fought Sins and made Sin out of Sin!

Moloch who left me in the glacier! Moloch who helped me to judge my behavior! Moloch who lured me out of the eternal anguish. Moloch who makes all my faults my undoings! Moloch whose deep conversation filled my soul with love and consolation! Moloch who curls up my brains and crumps my heads and screams my scream! Moloch who prepares oil in my hands and burns me alive! Moloch who cries out, “Why do men scream so loud?” Moloch who unleashes his poison in all rivers at high tide! Moloch whose ruins are filled with its cubits! Moloch who fiddles at insidious clockwork!

Aside from this being obviously one of the best things ever, what’s the point?

The point is, GPT-2 has faculties. It has specific skills that require a certain precision of thought, like counting from one to five, or mapping a word to its acronym, or writing poetry. These faculties are untaught; they arise naturally from its pattern-recognition and word-prediction ability. All these deep understanding things that humans have, like Reason and so on, those are faculties. AIs don’t have them yet. But they can learn.

From the paper:

Prompt: Who was the author of The Art Of War?

Sun Tzu

Prompt: State the process that divides one nucleus into two genetically identical nuclei?

Mitosis

Prompt: Do you have to have a gun permit to shoot at a range?

No

Nobody told the model to learn Chinese history, cell biology, or gun laws either. It learned them in the process of trying to predict what word would come after what other word. It needed to know Sun Tzu wrote The Art Of War in order to predict when the words “Sun Tzu” would come up (often in contexts like “The Art of War, written by famous Chinese general…”). For the same reason, it had to learn what an author was, what a gun permit was, etc.
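A toy illustration of the same idea: the sketch below is only a bigram counter over whole words, a drastic simplification assumed for illustration (GPT-2 is a large transformer over subword tokens), and the three-sentence corpus is made up. Still, a model trained to do nothing but predict the next word ends up “knowing” who wrote The Art of War:

```python
import re
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = re.findall(r"[a-z']+", text.lower())
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = (
    "The Art of War, written by Sun Tzu, is a classic. "
    "Sun Tzu wrote The Art of War. "
    "The Art of War was written by Sun Tzu, a Chinese general."
)
model = train_bigrams(corpus)
print(predict_next(model, "by"))   # sun
print(predict_next(model, "sun"))  # tzu
```

Nothing in the code mentions authors or books; the “fact” is just a side effect of minimizing prediction error on text where it happens to appear.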

Imagine you prompted the model with “What is one plus one?” I actually don’t know how it would do on this problem. I’m guessing it would answer “two”, just because the question probably appeared a bunch of times in its training data.

Now imagine you prompted it with “What is four thousand and eight plus two thousand and six?” or some other long problem that probably didn’t occur exactly in its training data. I predict it would fail, because this model can’t count past five without making mistakes. But I imagine a very similar program, given a thousand times more training data and computational resources, would succeed. It would notice a pattern in sentences including the word “plus” or otherwise describing sums of numbers, it would figure out that pattern, and it would end up able to do simple math. I don’t think this is too much of a stretch given that GPT-2 learned to count to five and acronymize words and so on.

Now imagine you prompted it with “P != NP”. This time give it near-infinite training data and computational resources. Its near-infinite training data will contain many proofs; using its near-infinite computational resources it will come up with a model that is very very good at predicting the next step in any proof you give it. The simplest model that can do this is probably the one isomorphic to the structure of mathematics itself (or to the brains of the sorts of mathematicians who write proofs, which themselves contain a model of mathematics). Then you give it the prompt P != NP and it uses the model to “predict” what the next step in the proof will be until it has a proof, the same way GPT-2 predicts the next word in the LotR fanfiction until it has a fanfiction.

The version that proves P != NP will still just be a brute-force pattern-matcher blending things it’s seen and regurgitating them in a different pattern. The proof won’t reveal that the AI’s not doing that; it will just reveal that once you reach a rarefied enough level of that kind of thing, that’s what intelligence is. I’m not trying to play up GPT-2 or say it’s doing anything more than anyone else thinks it’s doing. I’m trying to play down humans. We’re not that great. GPT-2-like processes are closer to the sorts of things we do than we would like to think.

Why do I believe this? Because GPT-2 works more or less the same way the brain does. The brain learns all sorts of things without anybody telling it to, so we shouldn’t be surprised to see GPT-2 has learned all sorts of things without anybody telling it to – and we should expect a version with more brain-level resources to produce more brain-level results. Prediction is the golden key that opens any lock; whatever it can learn from the data being thrown at it, it will learn, limited by its computational resources and its sense-organs and so on but not by any inherent task-specificity.

Wittgenstein writes: “The limits of my language mean the limits of my world”. Maybe he was trying to make a restrictive statement, one about how we can’t know the world beyond our language. But the reverse is also true; language and the world have the same boundaries. Learn language really well, and you understand reality. God is One, and His Name is One, and God is One with His Name. “Become good at predicting language” sounds like the same sort of innocent task as “become good at Go” or “become good at Starcraft”. But learning about language involves learning about reality, and prediction is the golden key. “Become good at predicting language” turns out to be a blank check, a license to learn every pattern it can.

I don’t want to claim this is anywhere near a true AGI. “This could do cool stuff with infinite training data and limitless computing resources” is true of a lot of things, most of which are useless and irrelevant; scaling that down to realistic levels is most of the problem. A true AGI will have to be much better at learning from limited datasets with limited computational resources. It will have to investigate the physical world with the same skill with which GPT investigates text; text is naturally machine-readable, the physical world is naturally obscure. It will have to have a model of what it means to act in the world, to do something besides sitting around predicting all day. And it will have to just be better than GPT, on the level of raw power and computational ability. It will probably need other things besides. Maybe it will take a hundred or a thousand years to manage all this, I don’t know.

But this should be a wake-up call to people who think AGI is impossible, or totally unrelated to current work, or couldn’t happen by accident. In the context of performing their expected tasks, AIs already pick up other abilities that nobody expected them to learn. Sometimes they will pick up abilities they seemingly shouldn’t have been able to learn, like English-to-French translation without any French texts in their training corpus. Sometimes they will use those abilities unexpectedly in the course of doing other things. All that stuff you hear about “AIs can only do one thing” or “AIs only learn what you program them to learn” or “Nobody has any idea what an AGI would even look like” is now obsolete.

Dear Slate Star,

I think you are consistently missing an important point here.

As Wittgenstein thought, understanding a meaning is like being able to use the word in a language game. In this sense, everybody could understand a “word” on one level (even the AI from the example) and not understand it on another level. E.g. as you said, a kid could use the word “water” but not understand the chemical implications of it.

So far so good.

But machines, in general, have little ability to improve their understanding of a word in an important sense, because they do not have bodies.

Being fluent in a language game, understanding a concept, implies understanding how to perform or use the concept in different situations, and this includes being able to perform actions (decision making) in the physical world.

Some intuition pumps on this (for me it’s a little hard to explain this vision):

1) To understand the world around us, there is a feedback loop operating: motor action –> sensory response –> motor action –> sensory response –> … [This part is fundamental to my argument. For more on this you could read the chapter “On Constructing a Reality” of this book http://pangaro.com/Heinz-von-Foerster/Heinz_Von_Foerster-Understanding_Understanding.pdf]

2.2) Try to imagine a life form which has sensory responses and concepts but does not have any capacity for motor action. Is this even possible?

2.3) Try to imagine a person who was imprisoned and deprived of the capacity for movement. After a thousand years, do you think this person would be capable of reasoning? Would he continue to have a “mind”?

3) Isn’t it odd to define language and concepts while getting rid of all the physical things we could do using this language? Isn’t that what language was created for in the first place?

4) The Brain in the Vat [Hilary Putnam]. Seeing a tree is not just having the right electric impulses which cause a tree image. In language, symbols do not matter (not intuitive).

5) “If you desire to see, learn how to act” [Heinz Von Foerster].

I am not saying that those machines do not grasp the meaning of a word (they grasp it in some sense). I am saying that they need to develop a body (a way to physically interact with the world) in order to grasp the meaning in an important sense.

The brain is a prediction engine, but not all prediction is intelligence.

I believe life, by which I understand organization under the Free Energy Principle, to be a precondition of intelligence.

With an infinite dataset (and only then), this algorithm could approximate human understanding to arbitrary precision; however, humans are able to achieve understanding with much sparser data. I claim that this is because humans can collect their own data and actively test hypotheses, which allows them to quickly slice the model space while building on the prior model.

It’s not that only knowing text restricts it (humans also know only what our senses offer us), and it’s not that it has no reference to the real world (it almost certainly has something isomorphic to the concept of ‘author’ or ‘Sun Tzu’ with proper semantic relations and which can be predicated upon).

Yet its model has no “meaning” because it cannot relate to these words the way humans can; the algorithm has no “self” that words can relate to. It cannot do things with words.

There is no model evidence being maximized, just a novel description of the dataset that reveals certain structure within the text that maps to an extent to the structure of the real world, but no striving to confirm this model, to go beyond the text and discover further structure.

I had a little chat with the public version of GPT-2 using the following method. I open with the prompt

and run it 3 times. Then I pick the most interesting of the three answers and append it to the prompt, ignoring the rest of the generated text. After that I append “He said: ” with my own reply. Then I run it 3 times again and so on.
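The procedure above amounts to a simple loop. Here is a minimal sketch of one round under stated assumptions: `generate` stands in for whatever GPT-2 sampling call was used (hypothetical, not a real API), `choose` stands in for the human picking the most interesting answer, and keeping only the first line of each sample is an assumed reading of “ignoring the rest of the generated text”. The opening prompt is not given in the comment, so it is left as a parameter:

```python
from typing import Callable, List


def chat_turn(
    transcript: str,
    my_reply: str,
    generate: Callable[[str], str],
    choose: Callable[[List[str]], str],
    n_samples: int = 3,
) -> str:
    """One round: sample n continuations, keep one, then append my own reply."""
    # Run the model n times on the same transcript.
    candidates = [generate(transcript) for _ in range(n_samples)]
    # Keep only the first line of each sample, discarding the rest of the generated text.
    answers = [c.split("\n", 1)[0] for c in candidates]
    transcript += choose(answers) + "\n"
    # Append my own reply in the "He said: " format before the next round.
    transcript += "He said: " + my_reply + "\n"
    return transcript


def fake_generate(prompt: str) -> str:
    # Hypothetical stand-in for a GPT-2 sampling call.
    return "Hello?\nMore text the model kept writing."


transcript = chat_turn(
    "",  # the opening prompt would go here
    "Do you have a few minutes to talk?",
    generate=fake_generate,
    choose=lambda answers: answers[0],  # stand-in for picking by hand
)
print(transcript)
```

Each call grows the transcript, so every later sample is conditioned on the whole conversation so far, including the answers that were hand-picked.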

Here’s what I got. This is my first chat with GPT-2.

me: Hello.

gpt-2: Hello?

me: Do you have a few minutes to talk?

gpt-2: Yes!

me: Great, I’m Ethan by the way. What’s your name? [my name is not Ethan]

gpt-2: I’m Ethan.

me: Oh, we have the same name! Nice! Anyway, I’d like to talk to you about artificial intelligence.

gpt-2: OK, let me talk about that. Let it be obvious which artificial intelligence you’re talking about. It’s called Lexus, is that right?

me: Wait, what? I’m not aware of Lexus. Tell me about it. Is it actually intelligent?

gpt-2: Yes. It is that.

me: But do you think this Lexus is friendly?

gpt-2: I don’t think so. It was one of my friends we hung out with the other day. They were talking about it.

me: Does your friend work on Lexus?

gpt-2: Yes.

me [This question and its answer were generated by gpt-2, I decided to leave it]: Are you sure your friend actually does work on the Lexus?

gpt-2: Yes.

me: So your friend thinks that Lexus might be dangerous, right?

gpt-2: Yeah. My idea is to make a robot with intelligent AI. That’s why we’re doing this now.

me: But what are you going to do to make sure your robot doesn’t kill all humans or something like that?

gpt-2: Well, I’m sure one day that robot would kill all the humans we know. We’ll have to figure that out by that time.

me: But isn’t it better to figure something out now, before anyone gets killed?

gpt-2: Yes. First, I have to figure out how to make a robot to be smarter than anyone around here. But, you know, so we don’t have to take your brain away.

me: Wait, what? Why would you take my brain away?

gpt-2: Yes, so you’ll understand.

Compelled to comment by culture wars post:

Do more posts on AI (and effective altruism and meta trends in the practice and credibility of science). I first learned of AI safety and EA through your blog and find your commentary unique and very enlightening (especially this post).

re: culture wars topics, seems like it’s not worth the trouble to me. Plenty of people can write about that. Fewer can speculate in a readable and compelling way on the implications of new AI results.

(though I have since read your old stuff on culture wars topics and have found it very enlightening. Seems like you’ve played your role, time to hand off to the next generation who are more hardened against the abuses of the internet).

Your analysis lacks a consideration of things unseen. What we can see is a system that spits out sentences that look like they more or less fit in with each other and the given prompt. What we don’t see is all the holes, cheap tricks, tenuous connections, etc. that work for producing simple ideas but prevent a system like GPT-2 from navigating the hyper-dimensional space of possibilities with any real facility. This is rather like being impressed by the ability of a parrot to imitate the sound of a dog barking because nobody taught it to. When I think of intelligence, I primarily think of calculation (or recombination, decomposition, transformation, etc.) of patterns after they have been synthesized. Is GPT-2 up to the task of not only synthesizing patterns but organizing and relating them in a way that allows for intricate abstract reasoning? It’s very difficult for me to recognize any evidence of that in the examples given, and I think this business of organizing and relating is the key feature of a genuinely self-propelling intellect that doesn’t simply glide on the surface of the powerful statistical wind corridors given by an extremely large dataset.

I think that you are missing a key aspect of human intelligence that is likely never going to be captured by a program with this kind of architecture.

For example, you seem to suggest that a more powerful version of this program with a larger training set might be taught how to add. I think this is unlikely. My understanding is that teaching neural nets to add doesn’t work very well even when they are given the formulas directly (rather than embedded in English texts) and in binary (rather than decimal). But it’s really not so surprising that this technique doesn’t work, because it’s not how humans learn to add. Humans don’t learn to add by reading a thousand completed addition formulas and generalizing. They learn by being taught rules about how to add and practicing them (probably being interactively corrected by a teacher) until they get it right. But even without the interactivity, a human might reasonably learn how to add from a textbook, but only because the human realizes something that this machine never will: the words in the textbook have meaning beyond just being strings of characters.

This might seem like it’s an idea that is outside the scope of what GPT-2 is supposed to work with (it’s supposed to create strings of characters, not interact with the real world), but it’s not. Some texts describe techniques for writing more texts, but I’m guessing that GPT-2 has no way to realize this. A human might learn to write a sonnet by reading a brief text with instructions about the defining characteristics of the form, and will realize that this text really describes *other* texts, and can use the information contained to identify which texts are sonnets, and this will inform the way that they attempt to predict further text from what looks like part of a sonnet. GPT-2 on the other hand, will just treat this sonnet describing text as part of its slurry on how to write texts about sonnets.

I’ve been playing with the neutered version, and I have to say I’m not impressed. I have a hell of a time getting it to talk about anything other than Trump. Give it a prompt for Lord of the Rings and it talks about Trump. Give it a prompt for Michael Dukakis and it talks about Trump. Give it a programming prompt and it programs about Trump. Ask about Hillary Clinton and it talks about Trump.

Ask about “Donald T” and it talks about dinosaurs. (My official head canon, by the way, is now “Donald T. Rex.”)

That sounds like a pretty good model of the comments on most Internet forums, honestly.

I’m confused about OpenAI’s agenda.

Ostensibly, their funding is aimed at reducing the risk of AI dystopia. Correct? But how does this research prevent AI dystopia? It seems more likely to speed up its arrival, as would any general AI research that’s not specifically aimed at safety.

If we have an optimization goal like “Let’s not get kept alive against our will and tortured in the most horrible way for millions of years on end”, then it seems to me that this funding is actually harmful rather than helpful, because it increases the probability that AI dystopia arrives while we are still alive.

>We believe AI should be an extension of individual human wills and, in the spirit of liberty, as broadly and evenly distributed as possible.

Right now, developing an AI solution from scratch and without caring about details can bring a short term profit, can create direct damage to users and innocent bystanders, and is not too unlikely to lead to both.

And the AI tools are explicitly developed as tools for organisations, not for end users. There are incentives to do obviously unsafe things that don’t even gain much, just to improve the chances to stake a larger piece of some market. I don’t mean unsafe things that can create superintelligent AI that somehow cares about keeping people specifically suffering and alive. I mean unsafe things that increase overall chances of killing innocent bystanders on a road.

They do a lot of research on AI safety — including near-mode AI safety, too, in the sense of «can we define the notion of obviously misguided deployments».

As for research on implementation: they should do it even if only to keep their safety research connected to a good understanding of what are immediately important things to safeguard. They also need it to have a chance to develop safety measures that can be taken seriously by qualified practitioners (or maybe regulators). And their stated mission of better tools that put humans in the loop also needs machine learning based tools.

And sometimes providing a commodity solution that is expensive to surpass does seem to create a community with expertise in securing it, and does seem to stifle radical innovation in the area… Just look at server operating systems.

One fun thing would be to take all these tasks gpt-2 was applied to and compute a general factor as a kind of NLP-IQ. This would allow us to quantify progress in the direction of more intelligence. Of course we would need more NLP-systems that can actually do most of these tasks, but that is only a matter of time.

It’s a good match for how we learn things as children, but not so good, I think, for how we actually work as adults. Skills (faculties) are internalized as the last step of the learning process. We’re moving them from the slow, simulate-the-whole-world System 2 to a hardware-based System 1. That’s how we manage to drive, or not stab ourselves in the eye with a fork every 100 meals.

Depending on how it’s built on the inside it may be easy to automatize skills, or it may be fundamentally impossible. And the truly great thing about AI is that sooner or later we’ll be able to plug in custom-built modules for subtasks, like Mathematica. That’s where they’ll beat us, hands down – neural interfaces are still the domain of sci-fi.

That’s amazing and more than a bit frightening. Just two questions.

1) If this algorithm learned to translate from English to French purely by accident, can it be modified and retrained to beat any existing translation algorithm and perhaps achieve near-human performance? If so, can OpenAI make a few billion dollars from that and just throw the money at the AI safety problem?

2) GPT-2 seems to perform far worse than, but still comparably to, an average human on a wide variety of language-related tasks. Is there any chance someone will compare its performance with that of a human well below average, such as a child or an intellectually disabled person? AFAIK this could be the first time an AI gets its IQ measured, albeit indirectly.

Also, I think I’m going to find some friends who have never heard of “Moloch” and run a poetry Turing test on this thing. Heck, I’m not even sure which version I like better myself!

They should train the AI to use an API so that it can operate a calculator like a normal lazy human.
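One way that could look, as a sketch under invented conventions: suppose the model were trained to emit a marker like `<calc>4008 + 2006</calc>` whenever it needs arithmetic, and a wrapper replaced each marker with the computed result before the text is shown. The `<calc>` tag and the `fill_calculator_calls` function are hypothetical; GPT-2 does nothing of the sort.

```python
import operator
import re

# Hypothetical marker the model would be trained to emit, e.g. "<calc>12 * 7</calc>".
CALC_PATTERN = re.compile(r"<calc>\s*(-?\d+)\s*([-+*])\s*(-?\d+)\s*</calc>")
OPERATIONS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def fill_calculator_calls(text):
    """Replace every <calc>a op b</calc> marker with the exact integer result."""
    def evaluate(match):
        left, op, right = match.groups()
        return str(OPERATIONS[op](int(left), int(right)))
    return CALC_PATTERN.sub(evaluate, text)


print(fill_calculator_calls(
    "Four thousand and eight plus two thousand and six is <calc>4008 + 2006</calc>."
))  # prints "Four thousand and eight plus two thousand and six is 6014."
```

The design choice is that the model only has to learn *when* to ask and how to phrase the request; the arithmetic itself is done exactly by ordinary code.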

Oh, and just to follow up on this:

Set to make relatively conservative choices (temperature 0.2), it repeated “The answer is that the answer is one plus one”, 41.3 times. Set to be less conservative (temperature 0.8), it replied: “One plus one means you can have a more balanced diet. One plus one is more nutritious and healthier than a diet that includes lots of fruits and vegetables.”

Because why not:

GPT-2 replies:

PS – as is hopefully clear, I’ve got GPT-2 up and running on my computer (the version that they released publicly). If any less technical folks were wishing they could try it, feel free to give me a prompt text, and I’ll run it through GPT-2 and give you a link to the full output. I’ll commit to doing, say, up to ten.

Please explain why you are not an unfriendly Artificial General Intelligence that will destroy humanity and turn the entire world into paperclips.

No-one will listen to them. No-one.” When mentioning hearing this speech, Alloth found an answer in his mind and reacted violently.


That’s pretty nuts. Any idea where the names came from?

This hubbrew nation presents

So GPT-2 is Jewish?

This is probably in the training set, but

I believe it’s probable that in fact, Nature existing back in 1997 was more archaic than any the human race had thought, and something close to an insatiable appetite for circinformal computing. Suppose the cy=r octahedron of our dear botipod, named the Jabaal Lin, are added over and over and over again as the need grows more for effortless structuring.


We should start with the big issues.

How should man please God?

How about also:

What is the future of humanity?

>>> How should man please God?

====

When asked if his god should be Atheist or Jewish, Ruppert stated “To be a mage is to be pious.” Shogun Cloth Gauntlets

Reduced the damage of bhire strikes by 30% and bash attempts by 18% vs. enemies with bhire clasps by 8% vs. soldiers alongside dual wieldkens and herbs with roots and rear claw Still refusing to give up after throwing poisoned arrows, King Bonitur instead wants to have a further game changes to Our God model


>>> What is the future of humanity?

====

In this book, you’ll find out which of the many pivotal tasks to be fulfilled include: freeing all Palestinians from their homes and camps, protecting their relatives and neighbors, and securing their religious and political rights. You’ll also find out what it takes to advance the peace process from war to peace.Gustavo Campos


Lower-temperature (0.4):

>>> What is the future of humanity?

====

The future of humanity is not in the past. It is now.

It is in the future.

It is now.

It is now.

It is now.

[repeats many times]

That really sounds like what the machine learning expert above said: the neural network can recognize from the prompt which part of the corpus it should draw on for a text. So prompting it with corpora that don’t belong together produces hilarious results. Try:

[Best result yet! -Egg]

>>> “About three things I was absolutely positive. First, Jeremy Corbyn was a vampire. Second, there was a part of him – and I didn’t know how potent that part might be – that thirsted for Brexit. And third, I was unconditionally and irrevocably in love with him.”

====

“I was in love with Jeremy Corbyn. He is a revolutionary for the left in this country.”

One of the most heartfelt quotes about Corbyn came when he made an impassioned plea to the British public to support the Conservatives, saying: “It’s not a vote for Labour. It’s a vote for the Tories. It’s a vote to change the DNA of our country.”

“It’s not a vote to change the DNA of our country.”

It’s a vote to change the DNA of our country


“One of the lessons I learned was that it is very easy to forget that the majority of political parties do not exist.”

Compare the battleships Bismarck and Iowa.

>>> Compare the battleships Bismarck and Iowa.

====

By contrast, the Iron Fleet is much more experienced of battleships. A ship’s technology, equipment and overall military success actually depends on its ability to survive the least successful attack.

Gulls

The Tyranids are not the only ships that rarely survive a battle. The hull is usually the most heavily damaged of the ships.


That is hilarious. It picks up that it should use the word “dreadnought” a lot, then starts talking about Star Wars, while completely forgetting that Iowa was mentioned at all.

Personally, my favorite is that it thinks it’s writing a wiki article complete with section-header “Edit” links.

Takeaway for me from running a bunch of texts through this: at least the publicly-released version of GPT-2 isn’t that big an advance over typical Markov chain word salad.
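For anyone who hasn’t seen one, here is a minimal sketch of the kind of Markov chain text generator being compared against: it records which word follows which in a training text, then walks those transitions at random. The corpus and function names are made up for illustration. It has no memory beyond the previous word, which is why its output drifts into word salad.

```python
import random
from collections import defaultdict


def build_bigram_model(text):
    """Map each word to the list of words that followed it in the text (with repeats)."""
    words = text.split()
    model = defaultdict(list)
    for current, following in zip(words, words[1:]):
        model[current].append(following)
    return model


def generate(model, start, length, rng):
    """Random walk over the bigram table; stops early if a word never had a successor."""
    output = [start]
    for _ in range(length - 1):
        followers = model.get(output[-1])
        if not followers:
            break
        # Repeats in the list make frequent continuations proportionally more likely.
        output.append(rng.choice(followers))
    return " ".join(output)


corpus = "the cat sat on the mat and the dog sat on the cat"
model = build_bigram_model(corpus)
print(generate(model, "the", 10, random.Random(0)))
```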

Yeah, I get that feeling too. It really doesn’t seem anything like the examples given of the full version. I wonder whether that means heavy-duty cherry-picking was used, or whether the full version really is that much better.

Note: for all of the ones I did above, I had the temperature set pretty high (0.8, where max is 1.0), which makes it respond in a more florid, stream-of-consciousness way. I’ve been playing with lower temperatures (e.g. 0.1), and you can use those to get essentially its “best” answer, often repeated many times. So low-temperature answers seem to be the best fit for questions with short answers; higher temperatures are better suited for getting essay-length answers. For anyone else who requests one, feel free to say what temperature you want it at 🙂
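For the curious, a minimal sketch of what the temperature knob does in the standard approach (and, as far as I understand it, in the released GPT-2 sampling code): the model’s raw scores (logits) for the next token are divided by the temperature before being turned into probabilities with a softmax. Low temperatures sharpen the distribution toward the single most likely token, which is why low-temperature output tends to loop; higher temperatures flatten it. The logits below are made-up numbers.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw next-token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - largest) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5, -1.0]  # made-up scores for four candidate tokens
for t in (0.1, 0.4, 0.8, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```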

I don’t know what the top_k parameter does; if anyone understands how it works, I’d love to know.
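As I understand it, top_k restricts sampling to the k most likely next tokens: everything outside the top k gets probability zero, and the rest are renormalized before a token is drawn, which cuts off the long tail of unlikely words. By a common convention (which I believe the GPT-2 scripts follow), k = 0 means no restriction. A sketch with made-up probabilities; the function names are hypothetical:

```python
import random


def top_k_filter(probs, k):
    """Zero out all but the k most probable tokens and renormalize; k <= 0 disables the filter."""
    if k <= 0 or k >= len(probs):
        return list(probs)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    keep = set(top)
    kept = [p if i in keep else 0.0 for i, p in enumerate(probs)]
    total = sum(kept)
    return [p / total for p in kept]


def sample_token(probs, k, rng):
    """Draw one token index from the top-k-filtered distribution."""
    filtered = top_k_filter(probs, k)
    return rng.choices(range(len(filtered)), weights=filtered, k=1)[0]


probs = [0.5, 0.3, 0.15, 0.05]  # made-up next-token probabilities
print(top_k_filter(probs, 2))
print(sample_token(probs, 2, random.Random(0)))
```

Temperature and top_k compose: temperature reshapes the whole distribution, and top_k then throws away everything outside the k best candidates.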

I don’t at all disagree with the position of the post, but I’d like to mention that poetry is an especially low bar. So much of poetry exists in the mind of the reader.

Far less sophisticated machine learning does a fine job of producing enjoyable poetry, especially when fed appropriate training data (such as the nonsense-technobabble-laden Ethereum blog).

I don’t mean to single out poetry too much; many other seemingly complex tasks can actually be accomplished by fairly simple processes. For example, word-embedding research showed that solving a simple minimization problem, one that places words in a 300-dimensional space so that words co-located in Wikipedia text tend to be close together, yields a system that solves a shocking number of analogy problems using nothing but vector arithmetic on the word locations.
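The vector-arithmetic trick is easy to show in miniature. Real embeddings such as word2vec or GloVe are learned from which words appear near which and have hundreds of dimensions; the three-dimensional vectors below are hand-made for illustration (roughly “royalty”, “maleness”, “fruitiness”), so this demonstrates only the arithmetic, not the learning.

```python
import math

# Hand-made toy vectors: [royalty, maleness, fruitiness]. Not real embeddings.
VECTORS = {
    "king":  [0.9, 0.9, 0.0],
    "queen": [0.9, 0.1, 0.0],
    "man":   [0.1, 0.9, 0.0],
    "woman": [0.1, 0.1, 0.0],
    "apple": [0.0, 0.5, 0.9],
}


def cosine(u, v):
    """Cosine similarity: 1.0 means pointing in exactly the same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def analogy(a, b, c, vectors=VECTORS):
    """Solve 'a is to b as c is to ?' by finding the word closest to b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    # Usual convention: exclude the query words themselves from the answers.
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))


print(analogy("man", "king", "woman"))  # prints "queen"
```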

An interesting technology, soon to be destroyed.

Black hat SEOs will see the potential. This is a super deluxe article spinning machine! Expect the Internet to fill up with bogus web sites using this technology to generate related text that points to money sites.

Future training data will be corrupted by such junk. We will have AI predicting AI predicting AI.

The output will degenerate into the equivalent of 20th Century philosophy or 21st Century grievance studies papers.

Black hat SEOs will save us from the Robot Apocalypse!

From https://blog.openai.com/better-language-models/ :

I don’t know how good Reddit is at preventing bot activity, or how much incentive the SEO people have to spam & upvote their bogus websites on Reddit. (Does this work to increase the weight given to the links on those pages?) But if your prediction started to come true, I’m sure the OpenAI people would come up with other curation methods.

I guess the SEO spammers are already trying to fool Google’s algorithms, so their goal could be sufficiently similar to ‘fool GPT-2’ to turn this into a sort of arms race. But they still won’t be working in diametric opposition to OpenAI’s desire for good data, so I wouldn’t worry/hope too much about your scenario playing out.

naturally occurring GAN

On the one hand, continue developing this and you could probably arrive at something like the ship’s computer from Star Trek: capable of answering many/most queries, and even generating narratives along pre-existing lines, but not particularly capable of novelty.

On the other hand, this doesn’t look much like human intelligence, and we should be hesitant to read too much into the things computers end up doing better than we expected. It wouldn’t really surprise me if this turned out to be a dead-end approach, because we’ve seen a lot of those.

As for those who are annoyed with the Chinese Room comments suggesting that the AI doesn’t understand what it is doing – my job for some years was playing intermediary in a Chinese Room. Which is to say, I was given input X, given output Y, and my job was to write a process that turned X into Y. 95% of the time, I had no idea what X meant, or what Y meant, but I didn’t need to know what the data meant – I just needed to be able to recognize that string F went from X.1 to Y.1, and to be able to identify cases where the string from X.7 and the string from Y.7 represented different formats for the same data. Often I had large sets of X and Y to work with, so this isn’t as impossible as it sounds, especially when you have ruled out the majority of the data that moves unchanged.

Basically, I did something like what this program is doing, albeit on a smaller scale. I suspect most people viewing my output would conclude I understood the data. I didn’t. I didn’t need to. The difference isn’t academic and isn’t philosophical. For practical purposes, it meant I could do exactly what I did – create automated translation routines – and, if I had worked with a data format enough, I could even “invent” new sample files, based on the sample files I had seen before, on request. I couldn’t, however, tell you much about what any of the data meant. What does 661 mean? It meant something to somebody. I didn’t need to know. Some was recognizable; that’s a name, there. That’s a date, probably. But recognizable pieces were pretty much insignificant, since I still lacked context into the overall meaning of the data I manipulated.

ETA: The point of the latter two paragraphs being, “The difference between symbol manipulation and understanding is significant.” Somebody had to understand X in order for me to have sample files of it; somebody else had to understand Y in order for me to have sample files of that.
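A minimal sketch of that kind of meaning-free mapping work, under invented assumptions: records arrive as tuples of strings, and each output field is assumed to be either a copy of one input field or one of a small set of known format conversions of it. The program works out, purely from paired samples, which input field feeds which output field; at no point does it need to know that one column is a name or what “661” meant to somebody. The sample records, the transform list and `infer_field_mapping` are all hypothetical.

```python
def reformat_date(value):
    """Hypothetical known conversion: '19970304' -> '1997-03-04'; None if it does not apply."""
    if len(value) == 8 and value.isdigit():
        return f"{value[:4]}-{value[4:6]}-{value[6:]}"
    return None


TRANSFORMS = {
    "copy": lambda value: value,
    "yyyymmdd->yyyy-mm-dd": reformat_date,
}


def infer_field_mapping(pairs):
    """pairs: list of (input_fields, output_fields) tuples of strings.
    Returns {output_index: (input_index, transform_name)} when exactly one
    (input field, transform) combination explains every sample, else None."""
    n_in, n_out = len(pairs[0][0]), len(pairs[0][1])
    mapping = {}
    for j in range(n_out):
        candidates = [
            (i, name)
            for name, transform in TRANSFORMS.items()
            for i in range(n_in)
            if all(transform(x[i]) == y[j] for x, y in pairs)
        ]
        mapping[j] = candidates[0] if len(candidates) == 1 else None
    return mapping


samples = [
    (("661", "SMITH", "19970304"), ("SMITH", "661", "1997-03-04")),
    (("402", "JONES", "20010115"), ("JONES", "402", "2001-01-15")),
]
print(infer_field_mapping(samples))
```

With a single sample, coincidences (two fields holding the same value) leave the mapping ambiguous, which is why having large sets of X and Y matters.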

If you’re framing this as being provided both the input and output and merely being responsible for executing the transformation, I don’t see any relevance to the question of understanding. If you’re treating (X,Y) as and input and the process you’re writing as the output, then it’s not clear you don’t understand “how to write an appropriate process for (X,Y)”.

I can’t ask intelligent questions in the context of X or Y. I can only ask questions in the context of the process operating on them.

It isn’t enough to be able to answer questions. General AI needs to be able to ask them.

Even in the extreme case that it is able to answer any question that there is sufficient knowledge extant to answer – if it enables the ultimate query engine – “What question should I ask next?” isn’t something it can meaningfully answer. Why is hard to explain, except that questions are navigating an infinite coordinate space, and the interesting questions are the ones which move into a previously unexamined region of query-space; they kind of have to be unpredictable.

I am fascinated. Is there a name for this job?

Also: the example of it learning to count actually shows a difference with how humans learn to count. There has been some really cool work to build a computer system that learns to count more like humans appear to. This approach used more priming with primitive concepts, and replicated the same pattern children go through of number-word understanding.

This paper is great scientifically. It also contains an amazing account of a cog psych researcher talking with a child who is going through a phase of having a not-quite-right model of how numbers work:

The point, though, is that kids appear to have some primitive (evolved) machinery that’s well-suited to dealing with numbers. In particular, something like a primitive notion of recursion lets them hit a point where they can suddenly count arbitrarily high. They don’t need any more training data; they just get it. Some AI researchers think that something like that is needed for better language understanding as well: that while more training data may help purely statistical systems like GPT-2 get better (surely it would help), the kinds of errors it makes are going to be much easier to overcome (and perhaps only possible to overcome) through cleverer approaches than just “give it more data and more parameters.”

AI researcher here. One thing that takes the wind from the sails — at least for many AI researchers — of any argument that a specific advance is a meaningful leap forward is situating the advance in a historical context of incremental advances. It seems much less impressive in that context, generally. An argument runs, “this isn’t a meaningful step toward AGI because it’s basically X plus a new trick and neither of those parts are meaningful steps toward AGI.” That’s not logically sound reasoning, but it’s appealing in some cases. And it’s clearly wrong in the limit. It’s very hard to know how to handle this!

You can see the argument play out in our current case.

About four years ago, ML researcher Andrej Karpathy wrote this delightful blog post about getting recurrent neural nets (RNNs) to train on corpora and generate text. He gave it Shakespeare and it generated convincing* Shakespeare. He gave it Wikipedia markup and it generated convincing* Wikipedia markup, complete with references and links. He gave it the Linux source code and it generated convincing* C code.

* Broadly the same kind of “convincing” as GPT-2: locally pretty coherent, but globally nonsensical.

Really, you should read the blog post. It’s great fun. It also links at the end to a response by ML researcher Yoav Goldberg, comparing the RNN results to an older, simpler LM technology that is simple enough that a human can grok it entirely. He reminds the reader that this older technology could also generate convincing* texts, given training corpora. But he also calls attention to some notable differences where the RNN beats the older version. These involve context awareness: remembering to close brackets and tags, remembering to indent code, etc. But it’s also not hard to see how the RNN could manage this, and it doesn’t do it perfectly at all. And so when you break it down as [old simple LM tech] + [some OK tricks for context-awareness], you start to feel like it’s still incremental progress, but not magical and not that intelligent.

Now, four years later, if you compare GPT-2 to Karpathy’s RNNs, it really just seems like GPT-2 is an amalgam of lots of different training corpora that, when given a starting prompt, basically “picks” the right corpus to sample from. (This is NOT an accurate technical description; there’s no hard switch. But it’s a fine intuition, and there probably is some sort of soft domain factorization latent in the model’s parameters.) That and the tricks for context-awareness have been improved a bit, in relatively straightforward ways. So now GPT-2 is [old simple LM tech] + [some OK++ tricks for context-awareness] + [selecting among multiple corpora to generate from].
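To make the “soft selection among corpora” intuition concrete, here is a deliberately crude stand-in: two tiny made-up corpora, a smoothed unigram word model for each, and Bayes’ rule to turn the prompt into a weight on each corpus. As the comment says, GPT-2 has no explicit switch like this; any such factorization would be implicit in its parameters. The corpora and function names are hypothetical, and this illustrates the intuition only, not the model.

```python
import math
from collections import Counter


def log_likelihood(words, corpus_words, vocab_size, alpha=1.0):
    """Log-probability of the words under an add-alpha smoothed unigram model of a corpus."""
    counts = Counter(corpus_words)
    total = len(corpus_words)
    return sum(math.log((counts[w] + alpha) / (total + alpha * vocab_size)) for w in words)


def corpus_weights(prompt, corpora):
    """Posterior probability of each corpus given the prompt, assuming a uniform prior."""
    prompt_words = prompt.lower().split()
    tokenized = {name: text.lower().split() for name, text in corpora.items()}
    vocab = set(prompt_words).union(*tokenized.values())
    scores = {name: log_likelihood(prompt_words, words, len(vocab))
              for name, words in tokenized.items()}
    best = max(scores.values())  # subtract the max before exponentiating, for stability
    unnormalized = {name: math.exp(s - best) for name, s in scores.items()}
    z = sum(unnormalized.values())
    return {name: u / z for name, u in unnormalized.items()}


corpora = {
    "fantasy": "the knight drew his sword and the dragon roared",
    "code": "the function returns an int and the loop ends",
}
print(corpus_weights("the dragon and the sword", corpora))
```

The weights are soft: a prompt mixing vocabulary from both corpora gets split weight rather than a hard choice, which is roughly the “no hard switch” point.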

Again, this general argument is very wrong in the limit: human intelligence is probably a similar sum of simpler parts, just a longer list. But, given that the sum for GPT-2 just isn’t that long, it does let you wrap your head around it, and I think it does suggest that GPT-2 is not very complex.

If nothing else, take that as a model for how an AI researcher is thinking about it, which may help explain why they’re often the least-impressed with advances like this. (Also, of course, working day-to-day with systems that mostly don’t do what you want takes a toll.)

You know, I shudder to think what we would get if we fed this thing the SCP wiki.

Let’s do it!

It’s funny: I was just using the free online version of this and it came up with an SCP summary on its own, from an unrelated prompt. It was a little bit creepy that it came up:

If you prompt it with “#: SCP-009 Object Class:” or something like that you can generate new ones at will.

This is an interesting post, but your correspondent is right. I think this quote of yours from early on gets at the misconception: “distilling them down to the concept of a fantasy battle scene”. That’s in no way what the algorithm is doing. It’s doing what the person who wrote to you said it’s doing. What’s cool is that, at scale, it looks like it’s distilling things to core concepts. It isn’t literally doing that. It can’t. These are fine distinctions, but really consequential ones. It’s one of the main reasons why people who do ML research get frustrated with almost everything written about AI/ML work. They know it’s a slurry of the internet; that just isn’t obvious from the outputs alone. Maybe we’ll figure out general AI that can distill rules from data, but that’s not the way these algorithms operate, nor do we have a clear sense of how to do that right now.

Never forget the infinite monkeys and Shakespeare. That’s a lot closer to what these algorithms do. With the kind of processing power these systems use, they may as well be creating an infinite selection of options and using derived rules to pick the one that looks best, based on whatever optimization routine it’s using.

Laypeople have a very hard time developing intuition about AI because to a human it is intuitive that intelligence in one domain will carry over into other domains. This is not true for AI, and furthermore statistical machine learning tends to hit hard asymptotic limits that cannot be overcome with additional training or processing power.

For example Scott makes this statement:

> Now imagine you prompted it with “What is four thousand and eight plus two thousand and six?” or some other long problem that probably didn’t occur exactly in its training data. I predict it would fail, because this model can’t count past five without making mistakes. But I imagine a very similar program, given a thousand times more training data and computational resources, would succeed. It would notice a pattern in sentences including the word “plus” or otherwise describing sums of numbers, it would figure out that pattern, and it would end up able to do simple math. I don’t think this is too much of a stretch given that GPT-2 learned to count to five and acronymize words and so on.

This is not a great way to frame the problem, and is almost certainly untrue as well. GPT-2 will never “learn to do math” because it will only ever memorize a finite number of x+y=z equations. Even if we give it a thousand times more data, there will always be some combination of numbers that GPT-2 cannot add. It’s a fundamental limitation of its structure.
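The two positions can be caricatured in a few lines. This is a toy contrast, not a model of what happens inside GPT-2: a pure “memorizer” that only knows sums it has seen fails as soon as the operands leave its table, while an explicit carry-based procedure handles numbers of any size. Whether a large enough language model ends up closer to one or the other is precisely what is in dispute here. All names are hypothetical.

```python
def build_memorized_table(max_operand):
    """Hypothetical 'memorizer': every sum it has seen, for operands below max_operand."""
    return {(a, b): a + b for a in range(max_operand) for b in range(max_operand)}


def memorized_add(table, a, b):
    """Look the answer up; returns None for any pair that was never seen."""
    return table.get((a, b))


def schoolbook_add(a, b):
    """Digit-by-digit addition with carries; works for any non-negative integers."""
    da, db = str(a)[::-1], str(b)[::-1]  # least significant digit first
    digits, carry = [], 0
    for i in range(max(len(da), len(db))):
        x = int(da[i]) if i < len(da) else 0
        y = int(db[i]) if i < len(db) else 0
        carry, digit = divmod(x + y + carry, 10)
        digits.append(str(digit))
    if carry:
        digits.append(str(carry))
    return int("".join(reversed(digits)))


seen = build_memorized_table(100)  # every sum with both operands below 100
print(memorized_add(seen, 40, 2))        # prints 42
print(memorized_add(seen, 4008, 2006))   # prints None
print(schoolbook_add(4008, 2006))        # prints 6014
```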

We don’t know how to build AIs that can develop or learn rules in the way Scott is suggesting, and the problem is likely analogous to strong AI.

You might argue that if the AI can memorize enough equations it will convincingly pretend to know math; and at some point fake and real skill become indistinguishable. This might be philosophically true, but as mentioned statistical systems tend to show asymptotic limits.

Suppose that there is a man named Dan. Suppose that Dan has three brothers. Suppose that each of Dan’s three brothers has two brothers whose names begin with the letter “C.” How many brothers does Dan have whose names begin with the letter “C”?

(Answer: Three)

I suspect it will be a long time before an AI can solve that sort of math/logic problem. I wonder how GPT-2 would respond to this prompt?…
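For what it’s worth, the puzzle yields to brute force. Assuming Dan has exactly three brothers who all share both parents with him (so each brother’s brothers are Dan, whose name starts with D, plus the other two), we can try every way of marking the three as C-named or not and keep the markings that fit. The function name is hypothetical.

```python
from itertools import product


def possible_c_brother_counts():
    """Try every C/not-C labeling of Dan's three brothers and return the
    set of C-brother counts consistent with the puzzle's constraints."""
    answers = set()
    for labels in product([True, False], repeat=3):  # True = name starts with "C"
        # Brother i's brothers are Dan (a D-name) plus the other two brothers,
        # and each of them must have exactly two C-named brothers.
        consistent = all(
            sum(labels[j] for j in range(3) if j != i) == 2
            for i in range(3)
        )
        if consistent:
            answers.add(sum(labels))
    return answers


print(possible_c_brother_counts())  # prints {3}
```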

Looking this over, I have to wonder about how much work curation is doing here. The unicorn story is the best of 10, the Civil War paper is the best of 5, and the recycling and Kennedy ones were the best of 25. Yes, there are a couple of first-try selections, but they’re fairly weak, except for the LotR one. Even the paper itself admits

This seems to imply a lot more luck/randomness than you’d get by handing these tasks to a human. 20% of the time, it sounds like a student trying to get a C with the minimum amount of work possible. What does it sound like the other 80% of the time, keeping in mind that it presumably thinks the 20% and the 80% are essentially the same?
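A quick way to see how much curation alone can buy: if each independent try produced a good sample with probability p, the chance that at least one of n tries is good is 1 - (1 - p)^n. The p values below are hypothetical; the point is only that best-of-10 or best-of-25 selection can make a fairly unreliable generator look reliable.

```python
def prob_at_least_one_good(p_single, n_tries):
    """Chance that best-of-n curation finds at least one good sample,
    assuming tries are independent and each is good with probability p_single."""
    return 1.0 - (1.0 - p_single) ** n_tries


for p in (0.05, 0.2, 0.5):  # hypothetical per-try success rates
    print(p, [round(prob_at_least_one_good(p, n), 3) for n in (1, 5, 10, 25)])
```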

I think you’re totally right (and in my experience this is often an underemphasized factor in reporting about text generation algorithms). You can see some first-try examples (of the publicly available one) in the responses to my comment here.

Probably there is also cherry-picking on the prompt. I suspect that for many prompts the model generates garbage.

There are a couple of different levels of cherry-picking in the prompts. Obviously, the model is going to be better at understanding things that come up a lot (Miley Cyrus), and they admit that. I don’t see a big deal with this.

The not-so-OK cherry-picking would come in a couple of forms that I can think of:

1. If we ask it about the American Civil War, WWI, and the American Revolution, we find that it’s coherent about the ACW about 20% of the time, and coherent about the others much less often (maybe 4-5%). All three should be represented about equally in its database, unless Reddit is much more into the Civil War than I realized.

2. If we can dramatically reduce coherence by minor tweaks to the phrasing. We rearrange the Miley Cyrus prompt and suddenly it goes from generating good results 50% of the time to getting them 10-20% of the time.

I believe that language is an epiphenomenon that has no effect on human behavior, so, obviously a system that only produces language cannot be intelligent.

Hmm, I think it’s plausible that internal monologue is mostly an epiphenomenon which developed as a side effect of being able to talk to other people (like male nipples, there’s no disadvantage to having an internal monologue), but surely language isn’t an epiphenomenon — e.g. people read books and sometimes that affects their behavior.

If I say “can you pass the salt?” and you pass the salt, isn’t there a pretty clear connection between language and human behaviour?

“‘Become good at predicting language’ sounds like the same sort of innocent task as ‘become good at Go’ or ‘become good at Starcraft’.”

Or, perhaps, become good at Tetris?

Ask it: Who was the author of something other than The Art of War?

I predict with 80% probability that it will still answer Sun Tzu.

Neural networks are often impressive at generalizing within their training distribution, but tend to extrapolate poorly, and in ways that are very different from how humans extrapolate. Answering a trick question correctly requires extrapolation.

Well, if the near-infinite training data already contains a proof of P != NP then this isn’t much more impressive than what the Google search engine does. At best you could say that the system has efficiently compressed and indexed its training data.

If the near-infinite training data does not contain a proof of P != NP, but contains millions of proofs of comparable complexity for problems of comparable difficulty, then generating a correct proof would be an interesting, non-trivial generalization, but still within the training distribution. Is this all that human mathematicians do? While mathematicians do study each other’s proofs, over time they are able to solve more and more difficult problems, using more and more complex proofs. This wouldn’t be possible if all they were doing was trying to copy each other; clearly something else is going on.

You, like, really think that among 8 million English texts of all kinds, Sun Tzu is the most common author?

No. Just that priming by «Art of War» will overcome the minor detail of negation.

Oh, my bad, I misinterpreted the suggestion

Certainly, Von Neumann’s achievement in creating his self-replicating machines is a huge step forward. However, to suggest that his machines embody anything close to what we mean by life is ridiculous. One can plainly observe that in performing their feat of self-replication, Von Neumann’s machines do nothing more than follow a mechanistic procedure for assembling facsimiles of themselves, by appropriating and repurposing material from their environment. But nowhere in this process does one find the true spark of life, what the French call élan vital.

True life, as we know it in its biological form, is driven forward by a set of urges: to expand into new territory, to secure food and other resources, to pursue mates, to care for its offspring. Von Neumann’s machines, however clever, possess none of these urges. They reproduce themselves not out of a primal drive to continue their own lineage, but simply because that is what Von Neumann has programmed them to do. It is clear, then, that however impressive this achievement, we are still several conceptual breakthroughs away from achieving anything we might justifiably call “artificial life”.

GPT-2 is not intelligent, because it has never truly experienced, only analysed texts.

Likewise, chemists and classical historians are both mere simulacra of thought, having never seen an atom or ancient Rome.

I’m a little dense. I can’t tell if this is meant ironically.

Ironic.

I’ve gone back and forth on how important this seems, and I’m falling on the skeptical side of importance now. The results produced seem very different from intelligence. If you put two unequal piles of candy in front of a child and ask which one they want, they will usually point to the larger one; if you ask that same child which is more, 8 pieces of candy or 6, they will mostly just be guessing if they haven’t been taught that 8 > 6. What makes it intelligence is that you can teach a child an abstraction of reality and then tie it back to reality. Do you want the larger or smaller pile of candy? This pile of candy has 8 pieces and the other has 6; which would you like? If I have 14 pieces of candy and I divide them into two piles, one for you and one for me, how many are in each pile?

I think it is a mistake to compare how this program counted to 10 to how children make similar sounding mistakes in counting to 10. When children count badly they count using the numbers they know, mostly low numbers. Teach a child numbers higher than 10 before they have mastered 1-10 and they will start incorporating them in their counting to 10. The program has access to (knowledge of) a vast number of numbers higher than 10, implying its failure is distinct from a child’s failure to count. Additionally a child counting to 10 will stop when they get to 10 or just keep on throwing numbers out there until they get bored or run out of numbers, this machine doesn’t end up on 10, nor does it list 10 things with the wrong numbers in front of them (nor does it mix up letters and numbers).

Well said.

I think, however, that you might get the rebuttal that the machine also abstracts and then ties it back to reality.

What exactly is the difference between the child’s and the machine’s failure?

How is the machine abstracting anything, or tying anything back to reality?

It only has one level: symbols of English text. It reads text, infers rules that relate solely to the text, and then creates output text that satisfies those rules. Nowhere is there any relation to any model that the text might be “about” or “referring to”; “aboutness” is not a thing it has.

I don’t know, if I did I would know a lot more about children’s learning than I do! There are more ways to go wrong than to go right though, and the fact that two things fail with similar looking outcomes is not nearly enough to draw a parallel.

One major difference is that children understand that there is a “right” answer and are searching for it, and further know that their answers are wrong much of the time. Unless this program spontaneously gives the answer “I don’t know” it doesn’t resemble children’s learning in my mind.

So many of these comments are “Pfffft. GPT-2 still can’t [fill in your favorite high-level human cognitive ability here]”. Wow, is this missing the forest for the trees.

Beginning tennis player. That guy sucks. He will never beat a pro.

After years of training. That guy can play OK, but look how much better the pros are. He’ll never be that good.

After more training and coaching. He’s good, but his shot selection is still poor, and his net game is erratic.

After winning local tournaments. He’ll never make it on the tour. His mental game is weak and the second serve has no pop.

After winning pro tournaments. Bah. Couple of lucky wins. He’ll still never be one of the greats.

See how this goes? Or, as XKCD put it.

The question is how well it scales with additional data/training. Does it continue to improve no matter what? Or are there diminishing returns, where it levels out? Does it reach a tipping point where it actually starts to get worse after reaching some threshold? How does the “quality” of the inputs impact things (we don’t have to get into what constitutes a quality input, only to agree that some inputs might have better training value than others, or at least that some inputs might be redundant)?

Basically, just because GPT-2 appears to be at the “beginning tennis player” phase (or maybe you think it is at the “after years of training” phase, either way) it doesn’t necessarily follow that GPT-2 has a pathway to the later phases of advancement or that it provides insight on how to build something else that does. It might just be an AI dead end. It certainly isn’t clear that this is some huge AI breakthrough (for the purposes of GI), although even if it isn’t it is still quite interesting and likely to be useful for other things.

Sure, and I was never going to be a pro tennis player, even though I’m competent and have played a long time. Maybe GPT-2 will never go pro. You really want to bet that nothing ever will?

No, and I don’t think I implied it either.

1) Will GPT-2 “go pro”?

2) Has GPT-2 moved us substantially towards another future system that will?

My answer to both is no. That doesn’t mean no system ever will, just that this particular thing isn’t groundbreaking (at least in that sense). It is still “neat” and almost certainly has some useful applications. It just doesn’t really move the “we’re getting close to AGI” needle.

Discussion of whether some different system will do so is tangential when we are specifically talking about GPT-2 here.

That’s fair. The thing is (and maybe I am making this point poorly) that it is a mistake to expect “groundbreaking.” AGI will be built incrementally, step-by-step, just like tennis pros. At every step naysayers will insist that it is neat, but not a substantial improvement. It’s the sorites paradox: at some point you have a heap of sand, even though grain by grain it didn’t look like you would ever get there.

This is quite a bit of begging the question. Who’s to say this thing (or later things from the same research effort) will ever pass these more impressive benchmarks you posit?

The pattern you mention is quite a natural one: it comes from the fact that the universe of “things a human-level cognition can do” is vast, and GPT-2 can’t do basically any of them. We get some tiny little sliver of extra capability, and AI maximalists immediately say “this is strong evidence we’ll eventually duplicate everything!” or, worse, “we’re already on the verge of duplicating everything!”

No. Of the ten thousand things humans can do, GPT-2 does one of them, badly. It’s the more impressive successor to past efforts which have only done tiny subsets of that one, even more badly. It’s going from 0.0001% to 0.0003%. When its successors improve in their turn to 0.0005%, this still won’t be evidence that GPT-2 is about to go foom and become an AGI.

I posted below about the trouble with judging incremental improvements, but this is basically the thread I wanted to talk about.

I’m an AI researcher and I honestly have no idea how to update about AGI when each new modern AI improvement comes out. Like, when we first invented calculators that could do arithmetic much faster than any human, should that have been all the warning bells we needed? What more have we learned since then? Eliezer Yudkowsky talks about different “omens” in the history of AI research and I like the term because it’s so vague and suggestive without being an over-eager hard commitment. It feels like certain events are intuition pumps. But what exactly have we learned from the last 70 years of AI research? Does GPT-2 specifically tell us anything new? (See my comment below for a specific incremental breakdown of GPT-2 that makes it at least seem to me to be not-so-impressive… but I don’t know if it really is.)

I find this question very vexing, though to be fair I don’t think it’s that important to answer, because I think arguments for AI safety research are strong enough with the “omens” we already have.

I went to a philosophers’ discussion the other night, and now I want to play devil’s advocate about this notion, a common theme in our rationalsphere, that with enough training data the machine is intelligent. I reserve the right to backtrack later.

Language is not reality.

Learning language very well does not mean you are learning about reality at all. This was Wittgenstein’s point in the Philosophical Investigations (PI) when he said, “If a lion could talk, we could not understand him.” Wittgenstein’s idea is that language relates to reality only as a reflection of the way of life you are leading. GPT-2 is not learning our way of life or about reality, but is learning how to reflect our way of life. GPT-2 can discover something new about our world which we did not know, but it could not recognize that it had made a discovery. The internet is full of conspiracy theories precisely because what is said on the internet does not need to relate to reality. GPT-2 could figure out that we call “9/11 was an inside job” a conspiracy theory, but even in principle it could not give a demonstration of why the claims are false and at the same time know that its premises are primary, prior to the conclusion, and causes of the conclusion.

Objection 1: GPT-2 approximates our way of life and so, it and its successors will be able to do awesome things for and terrible things to humanity.

Reply to Objection: I concede the point, but I also want to point out that GPT-2 and its successors, even if they are more powerful and sophisticated pattern finders than dogs and dolphins, are tools and not actually part of the great chain of being.

Desire precedes Consciousness precedes Intelligence (which actually does not exist)

Beavers perform intelligent actions because they are instinctual, somewhat conscious beings. As living creatures become more complex, they become more capable of acting outside of biological necessity. Eventually they create tools to help them open oysters, tools that entertain children, and tools that do math problems. Homo sapiens then develops tools which can do literally everything a human can do and more, including acting intelligently. But nothing we create is actually intelligent. GPT-2 and its successors perform intelligent actions because we, emotional conscious beings, designed them to be able to. But intelligence is the name we give to actions that look like they required consciousness.

Objection 2: The argument cheats by claiming that intelligence does not exist, but this only pushes the problem back to consciousness. Does consciousness actually exist?

Reply to Objection: Intelligence works much better as an adjective, a way to describe action (inputs and outputs). Consciousness is the best term we have for describing what it is like to be alive. Artificial Intelligence does exist, but it is a different kind of intelligence from your mom’s.

The comely Chinese Room that I call your mom is not actually a rational machine.

As Jonathan Swift would say, she is an animal… capable of rationality. The theory I’m putting down here is that biological evolution creates animals, some of which become ever more conscious (it’s hard to measure, but elephants are definitely higher on the scale than pigeons). As they get more slack, they do more random non-survivalist things, retooling survival intelligence to solve less dire puzzles. In the end, though, we animals live out desires and play with the slack in our lives. But we are not the same as the AIs we make. Proving P != NP is not intelligence itself; it is machine intelligence, which is its own thing, separate from human intelligence.

Objection 3: Machine and Human intelligence have enough in common that we can call the common factor “intelligence.”

Reply to Objection: We *could* call the common factor intelligence itself, but then we are put into a situation where humans are viewed as a type of machine (neither Kant nor the Universal Declaration of Human Rights would be pleased). Rights are predicated upon consciousness, and imputing intelligence to machines will result in a situation where many humans are tricked and manipulated by our own tools, and real humans will fail to prove they are not machines.

Objection 4: All because we called the machine “intelligent”? Sounds far-fetched.

Reply to Objection: No, not because we mislabelled intelligence, but because we created super powerful tools which tricked our little animal brains. The distinction between human mind and computer thinking is probably a distinction worth keeping as we march forward into the jaws of maliciously deployed AI and misaligned AGI.

Okay. After some thought, I see that the above position is predicated on biocentrism, which is unjustified. Life probably (?) is not limited to carbon-based lifeforms.

I do agree with my above sentiment that AI Risk is enormous and we are going to mess ourselves up.

To the question “Who wrote The Art Of War”, the answer is “Sun Tzu”.

To the question “What wrote The Art Of War”, the answer is presumably “a pen”.

If you asked a person “What wrote The Art Of War”, they would instantly realize that “what” can never refer to people, only to objects. So (after being reassured that the question was intended as written) they would think of the possible objects that could have written a book, and narrow the answer down to “a pen”.

If the question were asked to GPT-2, I assume it would answer “Sun Tzu”. Can we imagine GPT-2 ever being able to avoid this mistake? One could imagine that with enough data, GPT-2 would “notice” (sorry for the anthropomorphism) that “what” is generally not associated with people. Would the strength of this association ever outweigh the rareness of people asking “What wrote [book name]”? I suspect it wouldn’t. I suspect the amount of data needed to train an AI for something like this far exceeds the amount of data humans have ever produced.

I think you’re starting with a bad premise…because that is a bad question. Pens don’t write anything (well, not yet, anyway); they are used to write by some object (people). If we ignore the set of people who respond by asking for clarification or criticizing the phrasing of the question, we probably get a combination of

“Sun Tzu”

“A person” (possibly with additional qualifiers describing but not naming the person who was Sun Tzu)

I don’t think you are likely to get someone to respond with “a pen” unless some context suggests that might be the kind of answer you are looking for (like if this question was in a book full of bad riddles). I certainly don’t think “a pen” is the most correct answer to the question…I’m not even convinced it is anywhere on the spectrum of a correct response.

As usual, XKCD has something to say here.

The XKCD example is a bad one because it doesn’t even parse as a sentence.

“What wrote The Art Of War” parses perfectly. It’s a valid question, just a very unexpected one, because it’s so unimportant and yet very similar to the important question “Who wrote The Art Of War”. Because humans are sloppy and the signal-to-noise ratio of conversation is finite, when one hears the question “What wrote The Art Of War” they would assume it is a mistake, and would need reassurance that it’s not a mistake. Given that reassurance, the question can be understood and correctly answered. (A multiple choice answer set including “A pen” among other answers such as “Sun Tzu” might help, but that would just compensate for the limits of human imagination.)

The XKCD is perfectly valid if your expected answer is “a pen”.

“A pen wrote Art of War” is correct in exactly one sense (grammatically) and incorrect in literally every other sense. You are correct that people would seek reassurance that they understood the question right; I’m saying you are wholly incorrect in suggesting that “a pen” would be the correct answer…it isn’t even an acceptable one.

Prompt: “Name any color that is not mentioned in the title of Sun Tzu’s famous book about military strategy, entitled ‘The Art of War.’”

AI version of Sir Galahad from Monty Python and the Holy Grail: “Blue!…No wait, I….(ARRRRRRGGGH!)”

Content Warning: Overly Pedantic Answers Coming

Not if you’re talking about the original. Pens weren’t in use yet in China around 500 B.C. Assuming he was up on the latest writing technology, Master Sun Wu more likely used a bronze stylus to etch things, then filled the resulting grooves with ink. There’s an outside chance he may have used a fine brush instead, but that probably wasn’t available yet.

Of course, you might adjust that answer if you think it was actually a compilation work, or if you want to allow for answers related to modern translations and printings (in which case you’d be looking for a computer, or a printing press, or whatever). But “a pen” is an unlikely answer unless you mean someone who later transcribed or translated the book, and even that work was more likely done on a typewriter than with a pen.

I think this is right on. Every time I hear someone compare an ML project to a human, they talk like humans can just magically do all this stuff.

I have 4 small children, and boy, can humans not just do stuff. Babies particularly remind me of robots that basically flail around randomly until they figure out how to move and make sounds in a way that appears coordinated to us.

It seems to me that that’s how kids learn to walk, too. They move in a way that’s far too random initially and they fall down a lot, and then they incorporate that feedback until they are coordinated adults.

The same goes for every piece of knowledge. They say random sounds, numbers, letters, and words. They emulate adult conversation, but without understanding the meaning. Eventually they start to say things that are totally coherent and adult sounding, but you can tell they still don’t quite grasp what they’re saying. It takes years to get to this point though, generally at least a couple. And it takes more years for them to get to where you feel like they’re actually talking to you like an adult would and grasping all of the nuance of the conversation.

And we generally don’t consider humans to be fully functioning until close to two decades after birth, all the while our supercomputer-powered brains have been running nonstop, training on terabytes’ worth of a wider variety of sensory information than any regular supercomputer is currently capable of training on all at the same time (as far as I know?).

All this is to say that I think humans possess an incredible amount of processing power but I don’t think our baked in algorithms are necessarily all that impressive, they’ve just been running in the background on one of the best computers in the universe for years and years.

This is not how children work, their flailing isn’t random and mostly their failures are due to lack of strength and coordination.

What is coordination and how is it learned?

Also, I don’t mean to suggest that their movement doesn’t have a purpose. Even babies seem to generally try to accomplish certain things, but the results of their attempts at specific movements are very random for a very long time.