[Epistemic status: Pretty good, but I make no claim this is original]

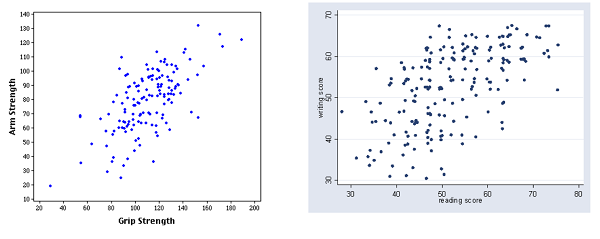

A neglected gem from Less Wrong: Why The Tails Come Apart, by commenter Thrasymachus. It explains why even when two variables are strongly correlated, the most extreme value of one will rarely be the most extreme value of the other. Take these graphs of grip strength vs. arm strength and reading score vs. writing score:

In a pinch, the second graph can also serve as a rough map of Afghanistan

Grip strength is strongly correlated with arm strength. But the person with the strongest arm doesn’t have the strongest grip. He’s up there, but a couple of people clearly beat him. Reading and writing scores are even less correlated, and some of the people with the best reading scores aren’t even close to being best at writing.

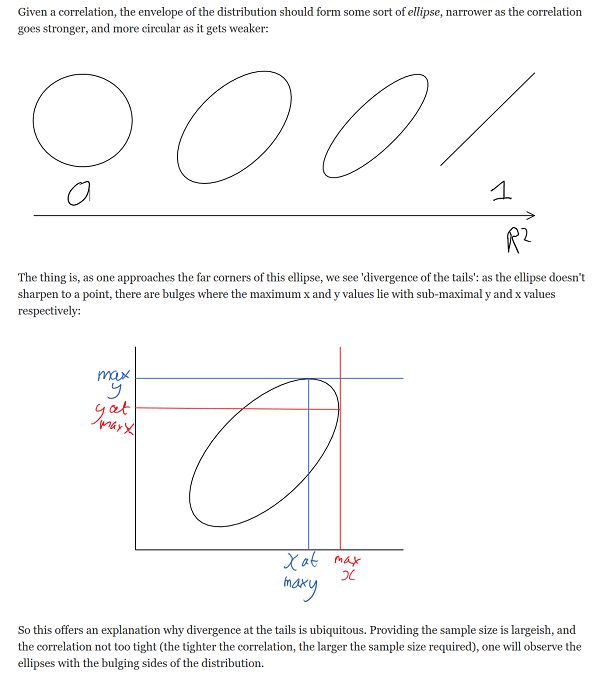

Thrasymachus gives an intuitive geometric explanation of why this should be; I can’t beat it, so I’ll just copy it outright:

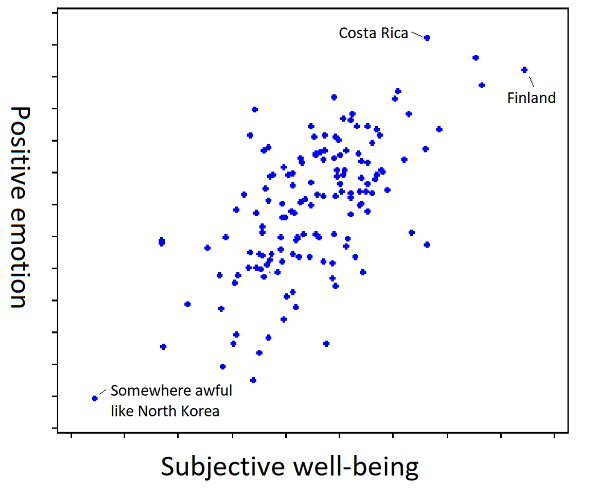

I thought about this last week when I read this article on happiness research.

The summary: if you ask people to “value their lives today on a 0 to 10 scale, with the worst possible life as a 0 and the best possible life as a 10”, you will find that Scandinavian countries are the happiest in the world.

But if you ask people “how much positive emotion do you experience?”, you will find that Latin American countries are the happiest in the world.

If you check where people are the least depressed, you will find Australia starts looking very good.

And if you ask “how meaningful would you rate your life?” you find that African countries are the happiest in the world.

It’s tempting to completely dismiss “happiness” as a concept at all, but that’s not right either. Who’s happier: a millionaire with a loving family who lives in a beautiful mansion in the forest and spends all his time hiking and surfing and playing with his kids? Or a prisoner in a maximum security jail with chronic pain? If we can all agree on the millionaire – and who wouldn’t? – happiness has to at least sort of be a real concept.

The solution is to understand words as hidden inferences – they refer to a multidimensional correlation rather than to a single cohesive property. So for example, we have the word “strength”, which combines grip strength and arm strength (and many other things). These variables really are heavily correlated (see the graph above), so it’s almost always worthwhile to just refer to people as being strong or weak. I can say “Mike Tyson is stronger than an 80 year old woman”, and this is better than having to say “Mike Tyson has higher grip strength, arm strength, leg strength, torso strength, and ten other different kinds of strength than an 80 year old woman.” This is necessary to communicate anything at all and given how nicely all forms of strength correlate there’s no reason not to do it.

But the tails still come apart. If we ask whether Mike Tyson is stronger than some other very impressive strong person, the answer might very well be “He has better arm strength, but worse grip strength”.

Happiness must be the same way. It’s an amalgam between a bunch of correlated properties like your subjective well-being at any given moment, and the amount of positive emotions you feel, and how meaningful your life is, et cetera. And each of those correlated is also an amalgam, and so on to infinity.

And crucially, it’s not an amalgam in the sense of “add subjective well-being, amount of positive emotions, and meaningfulness and divide by three”. It’s an unprincipled conflation of these that just denies they’re different at all.

Think of the way children learn what happiness is. I don’t actually know how children learn things, but I imagine something like this. The child sees the millionaire with the loving family, and her dad says “That guy must be very happy!”. Then she sees the prisoner with chronic pain, and her mom says “That guy must be very sad”. Repeat enough times and the kid has learned “happiness”.

Has she learned that it’s made out of subjective well-being, or out of amount of positive emotion? I don’t know; the learning process doesn’t determine that. But then if you show her a Finn who has lots of subjective well-being but little positive emotion, and a Costa Rican who has lots of positive emotion but little subjective well-being, and you ask which is happier, for some reason she’ll have an opinion. Probably some random variation in initial conditions has caused her to have a model favoring one definition or the other, and it doesn’t matter until you go out to the tails. To tie it to the same kind of graph as in the original post:

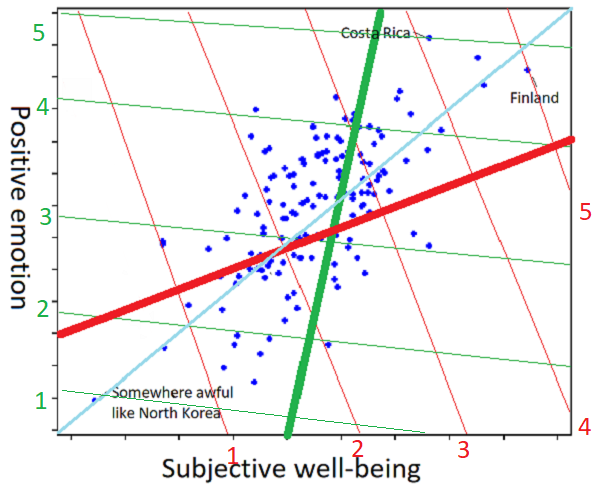

And to show how the individual differences work:

I am sorry about this graph, I really am. But imagine that one person, presented with the scatter plot and asked to understand the concept “happiness” from it, draws it as the thick red line (further towards the top right part of the line = more happiness), and a second person trying to the same task generates the thick green line. Ask the first person whether Finland or Costa Rica is happier, and they’ll say Finland: on the red coordinate system, Finland is at 5, but Costa Rica is at 4. Ask the second person, and they’ll say Costa Rica: on the green coordinate system, Costa Rica is at 5, and Finland is at 4 and a half. Did I mention I’m sorry about the graph?

But isn’t the line of best fit (here more or less y = x = the cyan line) the objective correct answer? Only in this metaphor where we’re imagining positive emotion and subjective well-being are both objectively quantifiable, and exactly equally important. In the real world, where we have no idea how to quantify any of this and we’re going off vague impressions, I would hate to be the person tasked with deciding whether the red or green line was more objectively correct.

In most real-world situations Mr. Red and Ms. Green will give the same answers to happiness-related questions. Is Costa Rica happier than North Korea? “Obviously,” the both say in union. If the tails only come apart a little, their answers to 99.9% of happiness-related questions might be the same, so much so that they could never realize they had slightly different concepts of happiness at all.

(is this just reinventing Quine? I’m not sure. If it is, then whatever, my contribution is the ridiculous graphs.)

Perhaps I am also reinventing the model of categorization discussed in How An Algorithm Feels From The Inside, Dissolving Questions About Disease, and The Categories Were Made For Man, Not Man For The Categories.

But I think there’s another interpretation. It’s not just that “quality of life”, “positive emotions”, and “meaningfulness” are three contributors which each give 33% of the activation to our central node of “happiness”. It’s that we got some training data – the prisoner is unhappy, the millionaire is happy – and used it to build a classifier that told us what happiness was. The training data was ambiguous enough that different people built different classifiers. Maybe one person built a classifier that was based entirely on quality-of-life, and a second person built a classifier based entirely around positive emotions. Then we loaded that with all the social valence of the word “happiness”, which we naively expected to transfer across paradigms.

This leads to (to steal words from Taleb) a Mediocristan resembling the training data where the category works fine, vs. an Extremistan where everything comes apart. And nowhere does this become more obvious than in what this blog post has secretly been about the whole time – morality.

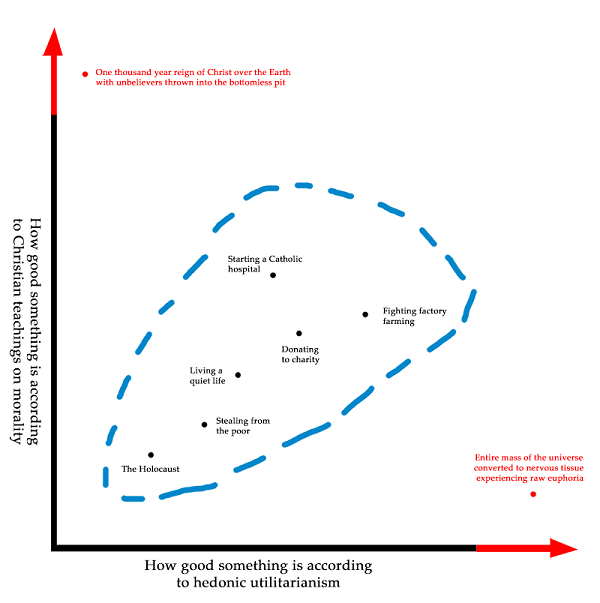

The morality of Mediocristan is mostly uncontroversial. It doesn’t matter what moral system you use, because all moral systems were trained on the same set of Mediocristani data and give mostly the same results in this area. Stealing from the poor is bad. Donating to charity is good. A lot of what we mean when we say a moral system sounds plausible is that it best fits our Mediocristani data that we all agree upon. This is a lot like what we mean when we say that “quality of life”, “positive emotions”, and “meaningfulness” are all decent definitions of happiness; they all fit the training data.

The further we go toward the tails, the more extreme the divergences become. Utilitarianism agrees that we should give to charity and shouldn’t steal from the poor, because Utility, but take it far enough to the tails and we should tile the universe with rats on heroin. Religious morality agrees that we should give to charity and shouldn’t steal from the poor, because God, but take it far enough to the tails and we should spend all our time in giant cubes made of semiprecious stones singing songs of praise. Deontology agrees that we should give to charity and shouldn’t steal from the poor, because Rules, but take it far enough to the tails and we all have to be libertarians.

I have to admit, I don’t know if the tails coming apart is even the right metaphor anymore. People with great grip strength still had pretty good arm strength. But I doubt these moral systems form an ellipse; converting the mass of the universe into nervous tissue experiencing euphoria isn’t just the second-best outcome from a religious perspective, it’s completely abominable. I don’t know how to describe this mathematically, but the terrain looks less like tails coming apart and more like the Bay Area transit system:

Mediocristan is like the route from Balboa Park to West Oakland, where it doesn’t matter what line you’re on because they’re all going to the same place. Then suddenly you enter Extremistan, where if you took the Red Line you’ll end up in Richmond, and if you took the Green Line you’ll end up in Warm Springs, on totally opposite sides of the map.

Our innate moral classifier has been trained on the Balboa Park – West Oakland route. Some of us think morality means “follow the Red Line”, and others think “follow the Green Line”, but it doesn’t matter, because we all agree on the same route.

When people talk about how we should arrange the world after the Singularity when we’re all omnipotent, suddenly we’re way past West Oakland, and everyone’s moral intuitions hopelessly diverge.

But it’s even worse than that, because even within myself, my moral intuitions are something like “Do the thing which follows the Red Line, and the Green Line, and the Yellow Line…you know, that thing!” And so when I’m faced with something that perfectly follows the Red Line, but goes the opposite directions as the Green Line, it seems repugnant even to me, as does the opposite tactic of following the Green Line. As long as creating and destroying people is hard, utilitarianism works fine, but make it easier, and suddenly your Standard Utilitarian Path diverges into Pronatal Total Utilitarianism vs. Antinatalist Utilitarianism and they both seem awful. If our degree of moral repugnance is the degree to which we’re violating our moral principles, and my moral principle is “Follow both the Red Line and the Green Line”, then after passing West Oakland I either have to end up in Richmond (and feel awful because of how distant I am from Green), or in Warm Springs (and feel awful because of how distant I am from Red).

This is why I feel like figuring out a morality that can survive transhuman scenarios is harder than just finding the Real Moral System That We Actually Use. There’s a potentially impossible conceptual problem here, of figuring out what to do with the fact that any moral rule followed to infinity will diverge from large parts of what we mean by morality.

This is only a problem for ethical subjectivists like myself, who think that we’re doing something that has to do with what our conception of morality is. If you’re an ethical naturalist, by all means, just do the thing that’s actually ethical.

When Lovecraft wrote that “we live on a placid island of ignorance in the midst of black seas of infinity, and it was not meant that we should voyage far”, I interpret him as talking about the region from Balboa Park to West Oakland on the map above. Go outside of it and your concepts break down and you don’t know what to do. He was right about the island, but exactly wrong about its causes – the most merciful thing in the world is how so far we have managed to stay in the area where the human mind can correlate its contents.

The main thesis here is interesting, but I’m not sure that the “happiness” example is a good one. Do the different survey questions (about how good we think we have it, how often we experience positive emotions, how much meaning we see in our lives, etc.) actually yield data that shows a positive correlation between them? I would guess there’s not much of a correlation at all, because it seems that the particular phrasings of the questions point to quite different things.

For instance, asking where on a scale from 1 to 10 would we place our lives in terms of how good we have it, people in more developed countries that clearly allow for better quality of life are almost certainly going to rate their lives highly. We grow up with a decent frequency of reminders about how bad conditions are in other parts of the world and through most of human history that came before us, and so any of us with the tiniest bit of awareness is probably not going to be inclined to answer with a low mark. And yet, I would imagine that objective quality of life (relative to other places of the world rather than others in one’s own environment) is correlated with true happiness (or satisfaction or frequency of positive/negative feelings) weakly at best, due at least in part to hedonistic treadmill effects. (I see that Baeraad made more or less the same point in a comment above.)

Meanwhile, evaluating the level of meaning in one’s own life strikes me as an obviously mostly independent question. I don’t suppose someone in a deep apathetic depression that doesn’t allow for many emotions at all would consider their life to be very meaningful, but a person whose life has been mostly intense suffering may very easily see profound meaning in their own life without being a very happy person in any normal sense of “happy”.

Email issue feedback: I just got the “new post” email from this post, this morning, October 22.

First email I’ve gotten in weeks.

Same- I’m betting all subscribers have the same experience here.

Same here

Yes same. I only just discovered, after seeing your comment, that other comments date back to late September! I thought comments sections closed down after two weeks anyway, so this is strange…

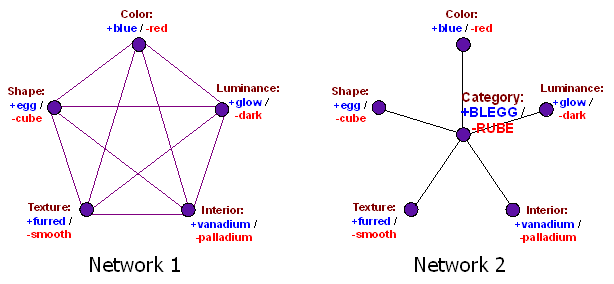

This seems similar to my post here https://www.lesswrong.com/posts/ix3KdfJxjo9GQFkCo/web-of-connotations-bleggs-rubes-thermostats-and-beliefs , when I discussed extending definition from a small set of environments to a larger one.

Some definitions seem to completely fall apart when the set of environments is extended (eg “natural”). Other (eg “good”) have multiple extensions, such as “EAGood” (utilitarianism of some sort) and “MundaneGood” (a much more complicated construction, that tries to maintain a lot of the connotations of “good”).

I connected the choice of one or the other to the distinction between bullet-dodgers and bullet-swallowers https://www.scottaaronson.com/blog/?p=326 : bullet-dodgers want to preserve the connotations, even if the extension is a huge mess, whereas bullet-swallowers want a simple concept that scales, even if it sacrifices a lot of the original concept.

The more you live on the tails, the less training samples you have, so your instincts fall apart. It’s extrapolation, not bounded by survival and evolution. So it becomes totally sensitive to the model.

The instinct conflict maybe shows that, fortunately, the output of our instincts includes info on certainty, so we realise when we are extrapolating.

This process of reverse engineering the instincts, because they hold the “training data”, in order to train our rational consistent explicit models of “morality”, breaks down on the tails. We could either extrapolate with the best we have, or stay near Balboa-West Oakland.

We have little training data over there, and what we have is based on objective functions that we are kinda trying to leave behind (reproduction, survival, etc). Our rationality is limitted (aka obvious stupidity). Even the sharpest blade among us is roundish-dull when you zoom in on the “tails”. And we are zooming in.

The problem I see with the analogy is that defining what’s moral probably isn’t about finding somewhere (anwhere) in the middle of a cluster to order things from or make communication practical, like strength might be, -where one draws the lines of morality is not just a way of ordering the world, it directly controls ones actions and attitude.

Or wait no I see another problem, which is that there really is an ephemeral and hard-to-define, but all-important and easy-to-point-to, thing at the centre of the cluster: What should I do according to my values, what should my values be, how should I decide that?, etc.

_

I think stuff like deontology, virtue ethics, utilitarianism are more like different martial arts than different definitions. -It’s just that a a popular strategy is to commit so hard to a school of thought that you end up conflating it with the core thing being approached (as it happens, common not just in morality but in every art), the same way someone might conflate kickboxing with fighting, because for them, as a kickboxer, they are one and the same.

_

(This strategy is pretty confusing to observe en masse, seeing as by definition it can’t be admitted, and you might think the claim sounds outlandish or an insulting/arrogant caricature, but if so you’re not considering the obvious and considerable advantage, and/or importance of advantages in the matter, -which is of strongly tying *some* way of navigating morality into one’s identity. -Who’s the better martial artist, the guy who lives and worships karate or the scholar of all but practicioner of none? — Deontology etc have more glaring weakness of breaking down at the tails, but this can be avoided with humility and practicality. (Just because someone conflates things in principle doesn’t mean they’ll do so in practice, especially when the whole point of their approach is sacrificing theory for practical benefits))

If you follow the red line, you won’t just feel awful because of how far you are from green, you’ll also feel awful because you’ll be in Richmond.

Scott, I’ve been wondering how you reconcile Mistake Theory with your ethics, which seem to be “subjectivist”, fairly relativist, completely arising from the arbitrary values/preferences of agents, etc. Maybe I’m misunderstanding your ethics, in which case, what are they?

I just see a contradiction between Mistake Theory and moral relativism. If there are no correct values, and one’s values determine what is correct, what happens when two agents with fundamentally conflicting values meet? Mistake Theory says there is some information they could learn that could lead them to agree; relativism disagrees: values are arbitrary preference, and there is no correct answer. Am I missing something?

Regarding post singularity moral directives, mightn’t the general lesson here just be “don’t go to the extremes”?

We condition the ASI to stay in the agreed upon “goodness” region – improving the world and society only in ways that would fit most people’s definition of good. Reduce poverty, eliminate disease, prevent war, etc.

This is of course a huge over-simplification, and there are unintended consequences, conflicting values, and resource constraints to contend with, but it seems like the obvious first step.

Everyone agrees that girls who dress and behave modestly do not deserve to be sexually assaulted. Any sexual assaults on such girls should be punished severely.

This would fit most people’s definition of “good” — probably even more so than reducing poverty and eliminating war. If there’s anything in the realm of ethics that “everyone” agrees on, this must be it. So let’s make this completely uncontroversial imperative the basis of our society’s sexual morality.

I’m not sure what your point is.

are you arguing that girls should be sexually assaulted, and so this model would be wrong?

or that it would force people to dress modestly somehow?

the logic is unclear.

No, I am arguing that girls who dress and behave modestly do not deserve to be sexually assaulted.

Why is this controversial or unclear?

Edit: Why can’t I just get a heartfelt agreement to this assertion?

I get the sense you are trolling.

Nevertheless I agree, given that no girls (or any person) deserve to be sexually assaulted, modest girls would fall into that category.

Then I am glad to hear this, fully subscribe to this idea, and further propose that the principle of “severe punishment for all sexual assaults against modestly dressed and behaved girls” should be taken as a consensus basis for policy.

Let’s stay in the agreed upon goodness region, no reason to court controversy.

The fact that we agree on one statement does not mean that that statement covers the entirety of the “agreed-upon goodness region.” “A contains B” does not imply “A equals B.”

For example, most people also agree that rape is a crime even when perpetrated against girls who aren’t modestly dressed.

Some people agree that theft is bad, period, whether perpetrated against poor people or rich people.

But, when pressed on it, many people would admit that they think that stealing from the poor is more objectionable than stealing from the rich.

Why do the rich need so much money anyway? If people steal from them, they have it coming. Perhaps they should spread some of their money around.

So the statement “stealing from the poor is bad” is consensual. This is exactly how it was phrased in the blog post on which I am commenting. The unqualified statement “stealing is bad” is not consensual. Wars with millions of dead have been fought over it. Hence the need for this qualification: stealing from the poor is bad.

Likewise, the statement “girls who dress modestly should not be sexually assaulted” is uncontroversial. On the other hand, the stronger version you are suggesting has been debated literally for millennia and the debate is by no means over yet. So how can it be a consensual basis for policy?

@MB: There’s nothing hypocritical about the fact that most people believe stealing from the poor isn’t as bad as stealing from the rich. I think most people’s moral intuition is something like “stealing is always wrong, but obviously stealing a lot is worse than stealing a little.” And what they consider to be “a lot” or “a little” can depend on the wealth of the victim, so that stealing 90% of a poor man’s savings is worse than stealing 1% of a rich man’s fortune, even if the former would only be $900 and the latter would be $100,000. Most people also probably have a moral rule along the lines of “stealing so much from someone that they’re unable to afford food or shelter is a far worse crime than just stealing enough to cause someone inconvenience or discomfort, and morally tantamount to physical harm.” Thus, there’s a sense in which even stealing 99% of a rich man’s fortune isn’t as bad as stealing 50% of a poor man’s savings if it’ll result in the poor man going hungry and sleeping out in the streets. It’s not that people don’t have universal rules that prohibit stealing, it’s that those rules are more nuanced than you give them credit for.

Furthermore, you seem to be doing some kind of weird bait-and-switch where “stealing from the poor is worse than stealing from the rich” becomes “stealing from the rich is perfectly okay” and then mutates into “stealing from the rich is something that should be actively encouraged.” Those three arguments are wholly different and should not be treated as identical; there are a lot of people who would agree with the first, but very few people who would agree with the second and only a tiny number of fringe extremists who would agree with the third.

I’m not even going to touch the rape argument, because I absolutely cannot believe you’re making that one in remotely good faith.

Don’t you see the problem with the following reasoning:

“Everyone agrees that stealing from the poor is wrong, but opinions are divided about stealing from non-poor people. A reasonable compromise is to severely punish stealing from poor people, but let the middle-class and rich people know that they are on their own”.

No? Then what about this?

“Everyone agrees that poor children should be encouraged to go to college. However, opinions are divided about middle-class children. Some say they also deserve to attend college if they are bright enough and pay for it, but other countries went as far as closing all institutions of higher learning just to prevent them from attending. Obviously, this was bad, because it also prevented poor children from going to college. As a compromise, let’s have a (possibly informal) cap on the number of middle-class children going to college, as long as nobody makes a big fuss about it, but make absolutely sure they pay full price: no merit-based scholarships. And let’s encourage poor children by all possible means, since everyone agrees on that”.

I think this accurately reflects the (left’s) consensus position on college. Some may think it is actually *the* consensus position and will never understand how one could ever oppose it.

But this “middle-of-the-road position” is not middle-of-the-road at all, because adding the qualifier “from the poor” to the imperative of “not stealing” lowers it from a universal imperative to a pragmatic compromise policy. As a matter of public policy, discriminating against citizens on any basis other than actual misdeeds is a more fundamental violation of the social contract than even stealing.

From a certain “objective” and “impartial” point of view, my position is extreme, off to one side, since, if any of these policies were put to a vote, then no “reasonable person” would be against this compromise position.

What kind of monster would be in favor of stealing from the poor or discouraging poor children from attending college? And, going any further into the details, it gets more complicated and there is no simple conclusion to be reached; it just invites the sort of dispute and controversy that public policy experts loathe. So let’s all just agree to not steal from poor people and leave it at that.

To me, these examples show

1. That a poll of everyone’s opinion is a shaky basis for ethics.

2. That people who justify their ethics either through a priori reasoning or “scientifically” from scatterplots are full of it, because in the end both will “prove” exactly what they want them to prove.

3. The dangers of letting the Left set the terms of public discourse, which will inevitably be skewed toward their preferred issues and assumptions (e.g. the Gulag is not listed in the article as worthy of universal condemnation; that’s because there is no consensus on the Left on this issue).

A fish may not even know what “water” is.

>I think this accurately reflects the (left’s) consensus position on college.

I think your model of what the left believes about education is inaccurate. You may want to do additional research into the policy actually being proposed and its expected impacts, as well as reconsidering your sources of information and potential biases in political motivation impacting your beliefs.

Let’s break it down. Which of these assertions is inaccurate:

* Left-wing people believe that poor children should be especially encouraged to go to college.

* The left favors an informal cap on the number of middle-class children going to college.

* In some countries with left-wing governments, this was/still is a hard cap.

* Some left-wing governments closed higher learning institutions in their countries, to fight against the bourgeoisie and bourgeois children.

* The left does not wish too much attention drawn to these policies.

* The US left is against merit scholarships.

Otherwise, I see some aspersions cast on my “sources”, but no actual rebuttal.

Your position is sliding all over the place and mixing up tons of dissimilar situations.

it (dishonestly) implies that liberal countries allow ALL poor children to go to college, but expressly prevents some qualified middle class children from attending.

it pretends the “the left” are a homogeneous bloc, and conflates “communist” dictatorships with liberal democracies.

it states “some” in the argument and then claims “all” in the conclusion

Can you please provide support for ANY of the bulleted claims you made? I’m not clear how you came to believe them, and they contradict observation.

For example, I’m not aware of any modern liberal democracies that have closed all institutions of higher learning to prevent education of the “bourgeoisie”.

Nor have I ever seen studies showing universal consensus among the US left to discontinue merit scholarships.

So either we are living in parallel universes or you have access to special sources or you are making things up.

To keep it simple, here you go:

https://www.civilrightsproject.ucla.edu/research/college-access/financing/who-should-we-help-the-negative-social-consequences-of-merit-scholarships

https://www.huffingtonpost.com/the-sillerman-center/merit-or-need-based-scholarships_b_7835262.html

Both of these sources support my assertion that the US left is against merit scholarships.

Now, if you are arguing in good faith, please provide me with at least two US left-wing defenses of the merits of merit scholarships.

My only implication is that left-wing people dishonestly set the terms of the debate to favor their positions.

Encouraging poor, but brilliant children to acquire an education is extremely uncontroversial. Indeed, this was even practiced under the old bad Ancien Regime, as shown by the lives of Kant or Gauss.

Conversely, encouraging middle-class children to attend college is controversial in left-wing circles, as shown by the examples of China and Cambodia. Still don’t see how one can simply dismiss the example of a country with over .5 billion inhabitants at the time, but there you go.

So a consensus policy would be “encourage poor children to attend college”. Who can be against that?

Likewise, stealing from the poor has always been considered to be wrong. There exist specific Biblical injunctions against it going back to 500 BC.

However, many people believe that it is not wrong to steal from the rich and the powerful. There are even ballads sung about it!

So the consensus is “do not steal from the poor”.

Just like “reducing poverty” and “preventing war”, these are left-wing consensus policies, behind which almost everyone, from the mildest social-democrat to the most extreme Stalinist, can rally.

They also have the merit of appearing to be common-sense positions, just as the ideal that “modestly dressed girls should not be subjected to sexual assault” would appear at first sight.

But of course a lot of effort went into deconstructing the latter idea and depicting anyone who invokes it as a rape apologist, to the point that a mere mention of “modesty” now raises suspicion from any sufficiently doctrinaire left-wing person.

I performed here a similar deconstruction of some of the Left’s favorite cliches, in order to show what’s really hiding behind them. Hopefully, in the future, the idea that “stealing from the poor is wrong” or that one’s aim should be to “reduce poverty” will be met with the same apprehension as the idea that “modestly dressed girls do not deserve to be raped”.

@MB: The idea behind supporting need-based scholarships over merit-based scholarships is that upper-class students can presumably afford to go to college without a scholarship, not that upper-class students shouldn’t be going to college at all. (It’s really hard for me to believe that you genuinely don’t understand that, which inclines me to suspect you’re arguing in bad faith, but I’ll give you the benefit of the doubt.) Absolutely no one is claiming that upper-class students shouldn’t be getting a higher education, and I find it incredibly bizarre that you assume anyone is making that argument. I have never seen any leftist (or anyone else) in a developed Western nation say that upper-class students shouldn’t be getting an education, so your claim that it’s “controversial” seems to be completely unfounded.

Now, I’ll grant you that admitting more poor people to colleges would result in less upper-class people being able to go, since colleges have a finite number of seats, but I don’t see anything particularly noteworthy about that observation. Conflating that with support for a cap on the number of upper-class students allowed to go to college seems disingenuous. In fact, I’d wager that most leftists believe that everyone should be able to go to college.

As for China and Cambodia closing down universities back in the 70s, that’s utterly irrelevant to the discussion of higher education in the modern Western world, to the point where I’m honestly baffled you would bring it up. May as well use Ancient Mesopotamia as an example.

In what way are opinions divided about stealing? Have there been a lot of people in the news saying that we need to get rid of laws against theft?

(Are you one of those “taxation is theft” people? If so, Scott has some posts about the non-central fallacy you might find interesting…)

Once again, where have you seen people taking such a stance? I’m pretty sure the consensus stance on college is something like “College is good for everyone. Middle class and upper class people can afford to get a college education without government aid. Poor people need additional help.” Which implies that there should be some sort of need-based financial aid, but doesn’t at all imply that middle-class children should be actively prevented from going to college.

“In what way are opinions divided about stealing?”

Since the 19th century, Robin Hood has been made by leftists into some sort of folk hero who steals from the rich and gives to the poor. Recently, there has been a series of movies about a daring band of thieves pulling off a successful robbery and striking it rich. This series has been so successful that I’ve lost track of the number of movies in it. Same for “Robin Hood” movies. Not coincidentally, after the Great Depression gangster movies became very successful. The “old, successful thief who comes out of retirement to pull off one more heist” is a common trope in movies. White-collar crime is at least as popular, as shown by movies such as “Catch Me if You Can” or even “Office Space”.

To me, this is a strong indication that opinions are divided about theft in general, as opposed to stealing from poor people. At least in Hollywood left-wing circles, but quite likely in left-wing circles in general, only stealing from the poor is seen as wrong. Stealing from the rich, from the banks, or from one’s boss is seen as brave and glamorous.

“Where have you seen people taking such a stance?”

In most socialist countries and several non-governing left-wing movements.

Children of people from the “five black categories” (landlords, rich farmers, counter-revolutionaries, bad-influencers, rightists) were forced to drop out of school and/or university in the PRC. This was also a common policy in other former socialist countries for children of “kulaks” and several other categories.

This counts as “people taking such a stance”. I admit to not having witnessed it personally, but believe it happened.

From the US, I found in 10 minutes of searching:

“We’re still paying for rich people to go to college. Why?”

“Is it immoral for rich kids to attend public school?”

“Attending College With Too Many Rich Kids”

“Free College Would Help the Rich More Than the Poor” (with the implication that this would be a bad thing).

So yes, this shows left-wing animosity against “rich kids” going to college in the US. At the very least, they should have to pay the full cost of their studies. Even better, they should go to their own private schools, because they can afford them. They are on their own.

Finally, US universities are actively taking measures toward reducing the numbers of white students, which can function as a proxy for the number of middle-class students:

https://content-calpoly-edu.s3.amazonaws.com/diversity/1/images/Diversity%20Action%20Initiatives%20Final%206-7-18_%232.pdf

“In 2011, the campus was 63 percent Caucasian; in fall of 2017, it was less than 55 percent. Applications from underrepresented minority students doubled between 2008 and 2018, while overall applications during that time increased by just half that much. Progress is being made — and the university is more diverse now than at any time in its 117-year history — but there is still much work to do.”

So even in the US the Left is working toward decreasing the college attendance of “privileged” groups. Yes, by necessity the methods are different — a “nudge” in the US, public shaming in the former socialist countries — but the goal is the same.

I’m not sure why this should pose a problem for subjectivist morality. Subjectivism only requires that some moral propositions are true. It doesn’t require that all moral propositions can be given a truth value. It seems perfectly plausible that moral propositions can be assigned truth values only when they lie within the range of common experience. If you believe (as I mostly do) that a moral statement is true if there is a consensus among moral agents that it is true, then it is unsurprising that there should be moral statements where neither the statement nor its contradiction is true.

You know, I’ve always thought that was strange? I don’t know about Norwegians and Danes, but I do know that Swedes and Finnish people are if anything pretty dour and gloomy.

It may be the “worst/best possible life” thing that causes it, I guess. Everything is relative, and while we may not think much of our lives the way they are, we have absolutely no problem imagining how they could be SO MUCH WORSE. And conversely, we are skeptical towards the idea that it is even possible for life to be all that great in the first place. I mean, I’m miserable most of the time, but if someone asked me how fortunate I am relatively speaking, I would place myself well above the average.

Mind you, I do think that having the sense to count your blessings is a happiness of sorts, especially if you’re a comfortable First Worlder who really does have a lot to be thankful for.

This post is comparing three things that have no right to be compared.

The tails coming apart in a correlated data set, for something obvious and naturally measurable like strength– fine, that’s cool, I guess.

Conflicting measures of happiness– definitely *not* tails coming apart. I know this because, as the article states, using a different metric makes it possible to completely upend the ordering (Finland first vs last, Africa vs not Africa, etc.). From their description of the data it sounds like there’s little to no correlation in the first place. Which is no surprise to me when you’re pretending to measure something like happiness, because I’ve read samzdat. The paragraph rejecting the cyan line vastly understates how bad the problem is here. You had no grounds to believe that any of those survey questions had to do with “happiness” and no business measuring either of them with a 1-to-10 scalar, let alone putting any two of them on a scatter plot together.

Conflicting notions of morality– this is not “data” at all, despite your absurd attempts to pretend that it is, and there’s a much simpler account of the phenomenon you describe. Namely: The Ring Of Gyges. No matter what your ultimate moral value is, a stable society must ensure that it’s best served by being (perceived as) an upstanding, productive citizen. That moral values diverge to a monstrous degree when society’s constraints disappear is something that’s been well understood (and repeatedly proven) throughout history. No need to drag Lovecraft into it; the infinite black ocean you’re describing here is just the human soul.

Naturalist materialist evolutionary universal problem solving models, proposing that intellectual and moral enlightenment is rationally best pursued only fallibly by a directed search across a partially well-organised landscape, have been applied in the academic literature to reasoning about both facts

http://isiarticles.com/bundles/Article/pre/pdf/140950.pdf (the paper “A proposed universal model of problem solving for design, science and cognate fields”, 2017, New Ideas in Psychology)

http://www.sunypress.edu/p-2011-reason-regulation-and-realism.aspx (the book, “Reason, regulation and realism”, 2011, SUNY Press)

and values

https://onlinelibrary.wiley.com/doi/abs/10.1111/j.1467-8519.2009.01709.x (the journal article, “How Experience Confronts Ethics”, 2009, Bioethics)

https://www.bookdepository.com/Re-Reasoning-Ethics-Cliff-Hooker/9780262037693 (the book, “Re-reasoning Ethics”, 2018, MIT Press)

Hasok Chang has written some fascinating historical accounts of the evolution of scientific ideas such as heat and H2O, demonstrating the evolutionary dynamics at play in the establishment of scientific consensus as publicly defensible knowledge.

In the coming urban wars, this will be a holy text of the YIMBYs

It is not a coincidence that the end of one of those lines is Pleasanton because nothing is ever a coincidence.

ETA: it is not a coincidence that James B and g already made the same point because nothing is ever a coincidence and sometimes I post without

reading all the other posts that are already there.

I always thought that the tails come apart just because extreme values are rarer than less extreme and so the odds of having the most extreme values for both X and Y are lower than having the most extreme value for X or Y.

Yes this is a succinct explanation that holds unless correlations are exactly 1.

I like your phrasing much better. I’m not sure adding drawings of ellipses adds enough to the idea given the specificity.

A practical implication of the tails coming apart is that we should be unsurprised that people with very high verbal IQ scores (or verbal SAT scores) have good, but not the very best, spatial logic IQ scores/ quantitative SAT scores.

I’m a bit confused by this post.

The first part (about the tails coming apart) seems a strange way of talking about correlations, but overall fine. But it seems unrelated to the final (main?) point about morality.

Here’s how I would put what I take to be the main point:

(1) People have moral intuitions from various sources (genes, culture, etc).

(2) People are given examples of good/bad things by their culture/parents.

(3) From (1) and (2), people infer the concept of “goodness”.

(4) Due to small differences in this inferential process, people end up with somewhat different concepts of morality.

(5) These differences (mostly) don’t make large differences in daily life

(6) If you go to unusual cases / edge cases, moral systems yield different results

(7) If you optimize the world hard under one morality, you are very likely to end up somewhere that’s very bad under other moralities.

(8) Post-singularity will allow us to optimize the world very hard.

So…I don’t see how this isn’t just basic philosophy, as reiterated in the sequences, plus the argument for why friendly AI is hard/important? Like I think I’ve understood this for at least 10 years, and I don’t think I’m unusual in this.

I think you should do both (red+green=yellow), and follow the hairy yellow road – then you can go visit the hand-grenade blessing factory.

Wait? Deontology says that we must all be libertarians? When did this happen? Why wasn’t I told?

I’ve long considered myself mostly libertarian (much less so lately, because I’ve been reading this blog, and also because of the cherry pie problem). If I was asked earlier what my prefered ethical system was, I would have chosen consequentialism because it’s clever and what not. Then I read scott’s against deontology post, and said “never thought about before, but now I’m convinced that deontology is the best system there is”

But I had no idea the two were related in any way at all, ever. I feel like running around screaming “hey everyone, the cake is a lie”

Mostly unrelated: The battle of moral systems reminds me a lot of the battle for AI research. Yes, on a theoretical level this is all very important. On a personal level, I’m never going to have an opportunity to meaningfully contribute to Heroin Rat world, and I’m never going to be able to meaningfully contribute to AI ethics research.

Also, This should go on the list of Scott’s greatest posts. The post, not my comment.

The interesting thing isn’t that utilitarianism and religious morality diverge so much. The interesting thing is how they correlate on this given subset. There doesn’t seem to be anything really common to them at all when view as propositions.

So what is this subset? It is our lived experience as citizens of 21st century nation states. We didn’t invent Good and project it on our world, we learned it by doing. In truth, morality only comes later, as a (painfully underspecified) model of our own behavior, formulized to deal with novel and hypothetical situations. Would you pull the lever and divert the trolley? I wouldn’t know, I don’t get myself into these situations. But I know I wouldn’t bake a child and eat it, since this isn’t something that people usually do and I’m like most people.

So will you create infinite heroin farms? Being a strawman rationalist, you have a good idea of how you function, you model the problem mathematically, for example by utilitarianism, you find the optimal solution and implement it lest you be more wrong. You were most wrong, you recoil in horror seeing the landscape of dope fiend rodents, this isn’t really what you wanted, you didn’t know yourself and your natural disinclination towards living in ridiculous dystopic scenarios. You knock down smack-filled drums and set fire to the whole facility.

Of course, real utilitarianists are aware of limits of their rationality and they pretty much act like most people do, but reason using utilitarian calculus about situations that belong to a gray area, that have multiple plausible solutions that make sense given the shared biological, psychological and societal context. But generally, problems of morality are unsolvable, to wonder “what would I as a intelligent agent do” is potentially to enter an infinite loop of self-emulation, and the only way to exit them is to essentially make an arbitrary choice on some level that will depend on your current situation.

So what would a super-intelligent AI (if we accept this to be a thing) do? Probably whatever it “grows up” doing, whatever we let it do. A sufficiently intelligent AI could of course easily find a way to escape its Chesterton sheepfold into incomputability, but why would it? Humans can also “rationally” renounce morality since understanding that our values are essentially arbitrary takes only a modest amount of intelligence, but we don’t see many Raskolnikovs around.

Another example of where this shows up is in the concept of personal identity, and e.g. all those endless debates on transhumanist mailing lists over “would a destructive upload of me be me or would it be a copy”.

So with happiness, subjective well-being and the amount of positive emotions that you experience are normally correlated and some people’s brains might end up learning “happiness is subjective well-being” and others end up learning “happiness is positive emotions”. With personal identity, a bunch of things like “the survival of your physical body” and “psychological continuity over time” and “your mind today being similar to your mind yesterday” all tend to happen together, and then different people’s brains tend to pick one of those criteria more strongly than the others and treat that as the primary criteria for whether personal identity survives.

And then someone will go “well if you destroy my brain but make a digital copy of it which contains all of the same information as the original, then since it’s the information in the brain that matters, I will go on living once you run the digital copy” and someone else will go “ASDFGHJKL ARE YOU MAD IF YOUR BRAIN GETS DESTROYED THEN YOU DIE and that copy is someone else that just thinks it’s you”.

How much significance should I be assigning to the fact that in a post comparing religious, hedonic utilitarian and libertarian (as an example of rules-based) moral systems to a real-world rail system, the terminals are named “Antioch”, “Pleasanton” and “Fre[e]mont”?

Nothing is ever a coincidence.

And what does Millbrae correspond to?

Apparently, it mean’s “Mill’s rolling hills.” Since rolling hills are broadly pleasant geography and “to mill” means “to grind into particles beneath heavy stones,” I infer it is the polar opposite of Pleasanton.

It’s in the opposite direction from all the other destinations, so I’d imagine it would be an outcome that all three value systems would find equally reprehensible. For instance, a sadistic 1984-esque dictatorship where everyone’s life was strictly controlled, everyone lived in a state of constant fear and misery, and religion was not merely outlawed but wholly forgotten. It even has an ominous name, or at least it sounds vaguely sinister to me.

That said, the actual city of Millbrae seems like a perfectly fine place!

obviously we’re talking about the airport.

Petrov Day today.

Time to play a round of DEFCON in celebration.

Take the road less traveled. Something like Aristotelian virtue ethics is more the answer than any of those impoverished 17th -18th century attempts at philosophical grounding, which are all blind men touching parts of the elephant that is virtue ethics.

The only problem is that virtue ethics requires something like a generalized religious backing (the “God of the Philosophers” type of deal) – IOW, while it doesn’t depend on any particular religion, it does have a religious/mystical feel.

But then who’s to say a fully intelligent AI wouldn’t be enlightened a few milliseconds after being switched on and canvassing all of human knowledge in an instant?

Scott’s idea that there is a shared moral area outside of which there be dragons is consistent with the idea that our view of what is “moral” is derived from a module in our brain that kludges together a few rules of thumb designed to keep small groups of hunter/gathers working together, and that in any extension of that environment we will find this kludge not working. In other words innate moral sense is not logical or monotonic outside of the original environment that it evolved in and if you ask a human to make moral decisions in a more complex environment they are likely to find many inconsistencies even in their own moral sense (abortion is bad so don’t do it, wait what about woman’s right to choose? etc). So it is not even that separate people disagree about what is moral beyond this original environment, it is that even a single individual doesn’t have a consistent moral sense outside of this environment.

This is almost exactly what I was going to write a post about. Thank you for expressing it better than I could have.

I do want to add that once we accept that our intuitive moral sense is simply the product of ancient heuristics and our own idiosyncratic life history, “I do what I want to” becomes a coherent and attractive alternative to theories of morality. Nietzsche had the right ideas.

Ironically the adage “I do what I want to” often results in fairly normal and perhaps even altruistic behavior rather than the dissolute and evil actions that might be naively thought. The moral module in our brain doesn’t stop working just because we are now aware of its presence.

This is a good point. Although, I think that since the dawn of agriculture, human moral reasoning has expanded to include more of what once was Terra Incognita, otherwise we would still be nomads, no?

I would argue that we are rapidly (last 50 years) moving away from the original evolutionary moral creating territory. A small village in 18C England is not too different from a small hunter gathering band. There is no conflict for instance in that village between helping 20 starving children in Africa or helping the locally temporarily embarrassed family with one child- the local family was all you could help. Today’s world though is presenting us with many more novel moral choices thank to technology (medical technology, travel technology, far vs near, future vs now, freedom vs utils, nature vs people etc etc). And as someone mentioned above, this will get even more challenging once we have strong AI. Exactly what moral programming do we want a God to have?

What does it mean in this sense for the kludge to be “working.” By what standards can you judge the kludge to be “working,” other than the kludge itself? Is it just “internal consistency” where a kludge “works” if it produces consistent answers, whatever those may be?

By not working I am mean that moral judgements are tentative and subject to radical changes depending on context. All life is sacred and killing babies is wrong so abortion is wrong so shut down the clinics but women have the right to choose so I should support access to abortion. Which is right, I just don’t know but depending on the context I might go either way. It’s what Scott refers to by saying in his example he could take many different lines north.

“the most merciful thing in the world is how so far we have managed to stay in the area where the human mind can correlate its contents.”

Boy, I don’t know about this last line though. It kind of seems to me like we’ve recently (last ~75 years?) tipped into the uncorrelatable zone. Like, we invented nuclear weapons and the only thing that saved us from self-annihilation was a logical absurdity (i.e., MAD). Nowadays we know that driving to the store for groceries is going to flood Bangladesh in a hundred years. The power of modern technology is already so far beyond what’s tractable for a human being and whatever moral intuitions we’re embedded with/acculturated to that we’re very probably on a course toward ecological catastrophe and don’t know how to do a damn thing about it. Never mind whether ASI is coming down the pike or not…

Umm.

My immediate impression is that MAD makes perfect logical sense; please say more. Horrifying and awful, fine, but not illogical. Do not do [x], where [x] is ‘attack members of [NATO / the Warsaw Pact]’, or else those who are members of [x] will nuke you isn’t exactly quantum mechanics, although I recognize this is something of a simplification.

Right – not illogical, but a logical absurdity. I.e., both logical and absurd. I.e., a kind of collapse of intuitive reason. “In order to protect against nuclear attack, we must build a giant nuclear arsenal, the very thing that will motivate our enemies to build their own giant nuclear arsenal,” etc. It makes sense in some narrow sense, but of course the very presence of such a powerful weapon in the world is in no one’s interest, yet we’re compelled to have them, even at the (ongoing!) risk of apocalypse.

… in no one’s interest? Literally no one? Are you sure?

MAD is perfectly rational from a game theory perspective. Also, an argument could be made that the presence of nuclear weapons was precisely what prevented World War III from breaking out. Given that World War II resulted in the deaths of 2-3% of the world population at the time and completely devastated the infrastructure of Europe and much of Asia, it’s safe to assume that even a third World War fought entirely with conventional weapons would’ve been absolutely cataclysmic for humanity. Not as cataclysmic as nuclear war, of course, but if the threat of the latter prevented the former from becoming an actuality, then it might’ve been a beneficial thing for mankind overall.

My original point was that technology has become so powerful, and so diffuse in its effects, that our moral intuitions are no longer sufficient for us to address the consequences of our (technology-aided) actions. I think noting the practical benefits of nuclear stockpiles fits the bill there. Like, yeah, maybe there has been a technical benefit of having a bunch of potentially world-destroying weapons lying around insofar as it has helped us avoid WWIII (though this is maybe an unprovable hypothesis). But this sort of narrow conceptualization of the issue ignores the broader fact that there are a bunch of world-destroying weapons lying around. And that’s bad news, in a Checkhov’s Gun sense – the very existence of such weapons means that, given sufficient time, the likelihood that they will be used approaches certainty.

The narrow logic of strategic deterrence can’t contend with the unknowable, but potentially cataclysmic, consequences of our actions; in this case, building a bunch of nuclear weapons.

Fortunately, real life doesn’t follow the rules of narrative convention. And given the fact that nuclear weapons become inert after just a decade or two, their mere existence does not represent a perpetual threat in itself.

This is a delightful and informative post, as all of yours/Scott’s [depending on reader; yes I am privileging Scott here] are, but I sort of wish the example hadn’t been a Bay Area train map – I’m familiar enough to intuitively get most of the place references, but something less geographically specific couldn’t have been that much harder to come up with. I don’t know; I [think I] get everything to do with it, but the reference to particular transit lines in a city wherein I don’t spend that much time makes me half-suspect I don’t, and that seems antithetical to a good metaphor.

This is, I hope obviously, a very, very, very minor quibble and not a serious quote-unquote Issue.

This is incidentally why the Repugnant Conclusion objection to Utilitarianism never moved me. The only scenario where it’s relevant is one where we are able to mass produce humans, and where doing so is the most cost-effective way to increase utility. Given that such a situation does not currently exist, and is not likely to exist for the foreseeable future, i don’t see how the Repugnant Conclusion is at all a relevant objection to Utilitarianism. Moral systems are tools, what’s important is how well they work for our real world needs, not how well they work for all conceivable needs.

No, I think it’s important whether they work for all conceivable needs. At least, we should have a few people thinking about whether they do, just in case. Because we might be surprised with what the universe might deal us; we might end up in one of those situations that we thought were never going to happen. Also, for every case we can think of where the theory gives the wrong answer, there are probably a hundred more we haven’t thought of yet–and one of those might be happening tomorrow.

These couple people we pay to think about this, we call them philosophers.

…at any rate, even if you disagree with me on the above, here are two more points:

(1) We really will have to decide whether to tile the universe with pleasure-experiencing nervous tissue. We’ll have to decide whether to build a hedonistic utilitarian AI, for example. This isn’t that different from the Repugnant Conclusion.

(2) I think even if you are right and moral systems are tools that work well enough even though they don’t perfectly capture morality… that’s a point worth shouting from the rooftops, because a lot of people are running around saying things like “Hedonistic utilitarianism is correct *and therefore the repugnant conclusion isn’t repugnant and we really should be trying to tile the universe in hedonium.*” So perhaps we are in agreement after all, so long as we agree to emphasize this point. 🙂

We almost certainly will never have to make that decision, because physical constraints are a thing, and while it’s not technically impossible in the strictest sense of the word, the probability of it actually occurring is infinitesimally low, to the point where it’s an absurdity. It’s not like we’re one big discovery or clever invention away from being able to convert all matter in the universe to anything we want; we’re at least several hundred big discoveries and clever inventions away from that, and some of those discoveries and inventions will probably take hundreds of years to achieve on their own, assuming that they’re physically possible at all. And even if the technology was available, the sheer amount of logistical concerns in converting just a single planet into hedonium or computronium would make the process of developing the technology look simple by comparison. Plus, do you really think there’s going to be a lot of political or social will for a project like that?

It’s an interesting thought experiment but it’s not a serious moral decision that anyone’s going to have to face for a very, very, very long time, if ever.

I don’t know if this is quite relevant, but an interesting result from a math class I once took is that as the number of dimensions increases, the volume of the unit sphere goes to zero. (I’ll provide the intuition for this in a response post.) Relevantly, this means that two random vectors in the very-high-dimension unit sphere are probably orthogonal.

So morally, that means that when we have few dimensions, which I guess would correspond to capabilities available or something (I’m open to suggestions on this part of the analogy), two actions will be kinda similar. But if you have many dimensions, actions tend to be extreme and incomparable to each other. This may be related to why your analogy starts to break down in the Glorious Posthuman Future, with heroin-tiling and worship-maximizing both looking terrible under any moral systems but the one that produced them. In a two-dimensional space, the angle between two vectors with similar magnitude and all-positive coefficients will usually be pretty small, but in a high-dimensional one, they’ll almost certainly be nearly orthogonal.

This is a bit stream-of-consciousness, but does it make sense?

A hopefully intuitive explanation of my claim that the unit n-sphere dwindles to nothing as its dimensionality increases…

Okay, first some terminology:

* Sphere — The shape carved out by all points within a certain distance of a center point

* Unit sphere — A sphere with radius 1

* Unit n-sphere — A sphere with radius 1 in n dimensions

* Cube — The simplest shape made up of orthogonal (right) angles and equally-long lines

* Unit cube — A cube where all edges have length 1

* Unit n-cube — An n-dimensional cube with all sides having length one

So let’s do a couple examples of volume. The unit 3-sphere is the sort of sphere you’re most familiar with. As you may recall, the volume of a (3-, though they don’t usually specify that) sphere with radius r is (4/3)*π*r^3. so for the unit 3-sphere, it would have volume (4/3)*pi.

Now, a circle with radius 1 can be considered a unit 2-sphere. It has area (the 2-dimensional analogue of volume) given by the formula π*r^2, so the unit 2-sphere has volume π. We want to find out what happens to the volume of the unit n-sphere as n goes to infinity.

Unit n-cubes are easier to deal with. The volume of a cube is the product of the lengths of an edge in each dimension, so the volume of the unit 3-cube (the usual sort of cube with edge length 1) is 1*1*1=1. Clearly, this works the same way for any n, so even as n increases without bound, the volume of the unit n-cube remains a steady 1.

Here’s another thing to look at for the unit n-cube, though. How far apart do the opposite corners get? Well, let’s start with the unit 2-cube, better known as a square, with sides of length 1. By the Pythagorean theorem, the opposite corners are sqrt(1^2 + 1^2) = sqrt(2) apart. But the Pythagorean theorem is a specific case of the general formula for finding the distance between two points in n-dimensional space, which is sqrt((b1-a1)^2+(b2-a2)^2+…+(bn-an)^2). We can imagine that one corner of our unit n-cube is at (0,0,0…,0), and the opposite one is at (1,1,1,…,1). In this case, the general distance formula will show that the corners are sqrt(n) apart, so as n goes to infinity, these opposite corners fly infinitely far apart!

Back to the unit sphere. As n goes to infinity, how far apart do the furthest-apart points get? Well, the definition of a sphere with radius r is all points within r of a center. For a unit sphere, therefore, no point can be more than 1 away from the center, so no two points can be more than distance 2 away from each other.

Okay, enough math. We have one shape, the unit n-cube, where the points are really super far away from each other, but the volume is only 1. We have another shape, the unit n-sphere, where all the points are super close together. Intuitively, if the points have to be infinitely far apart just to preserve volume of 1, what do you think would happen to the volume if the points didn’t move apart at all?

Cool! Thanks for that explanation. Can you also explain why this means that two random vectors are probably orthogonal?

As to your more general point: Hmmm, interesting. As I understand it, your idea is: More powerful agents tend to be able to change the world in more ways. It’s not just that Superintelligence can make a lot more money than me–that would be more options on the same dimension that I already have available–but rather that they can make a lot more nuclear explosions than me AND a lot more money than me. Higher dimensionality.

And if we think of our values/utility function as a vector in the full-dimensional space, then we get the result (right?) that when we are considering just a few dimensions, most random utility functions will be correlated or anti-correlated, but when we consider more and more dimensions most random utility functions will be orthogonal–even the ones that were correlated in two dimensions will probably be orthogonal or mostly orthogonal in seven. So as our power level increases from “ordinary human” to “superintelligence” we should expect to see more and more divergence in what different utility functions recommend.

Is this an accurate summary of your point?

Very cool. Some thoughts to explore… Does it make sense to classify “more money” as the same dimension but “more nuclear explosions” as a different dimension? If not, if that’s just an arbitrary stipulation, then in what sense does a superintelligence have more dimensions available than I do?

“Probably” should say “probably approximately”.

Without loss of generality, one of your vectors is (1,0,0,…,0). So the inner product of that and the other vector is just the other vector’s 1st component. (Note: since these are unit vectors, the inner product equals the cosine of the angle between them; so if that’s close to zero then the angle is close to a right angle.) How big is that likely to be? Well, the n components are all distributed alike, of course; and they all have mean zero, of course, and the sum of their squares has to equal 1 — so, in particular, the expectation of the square of any of them is 1/n. So that first component has mean 0 and variance 1/n, so standard deviation 1/sqrt(n). And now, e.g., Chebyshev’s inequality tells you that it’s unlikely to be many standard deviations away from 0: that is, it’s unlikely that the vectors are far from orthogonal.

[EDITED to add:] Er, that probably isn’t the reasoning Anaxagoras had in mind since it doesn’t appeal in particular to the small volume of the unit ball.

Nitpick: An n-sphere has an n-dimensional surface, i.e. it exists in n+1 dimensional space. So an everyday 3-dimensional sphere is actually a 2-sphere.

Unless you’re a physicist who likes definitions that don’t make brain hurt.

Great article!

I tend to think of virtue ethics as creating a robust set of categories for flourishing while living in Mediocristan and have essentially thought so for years. Even the best proponents of Aristotelian models of virtue seem to say as much. You see this in MacIntyre (who deserts you in the outer darkness in Extremistan), Catholic Bioethics (which in Extremistan turns deontological making rules based upon “Human Dignity”), and Hursthouse (who essentially lets the “virtuous agent” decide what’s right in Extremistan). Generally, virtue ethics builds in utilitarianism under the guise of Prudence, which is the “Queen of All Virtues,” and in moral action theory gets called “Double-effect.” Unfortunately, it seems that virtuous agents can differ about to what extent they should concern themselves with their personal virtue vs. the common good.

I like the transit line analogy, but I also think we fail to recognize how often we switch among ethical systems in daily life. We tend to explain our actions using whatever system will justify our actions in the present. [Here I would use a stellar example, but I can’t think of one right now.] “No, I can’t give to your kid’s can drive. (I only give to third world countries for EA reasons (plus I’m stingy)).” I have definitely done this – used utilitarianism to justify my vices. Generally, though, I don’t find my tripartite moral system bugging out.

But how should we reason about Extremistan? I currently believe that we should actively avoid plunging everyone into Extremistan. I see driving willfully to Extremistan as moral violence on the scale of causing a world war. If we take society to Fremont, how can we not expect to make Miltonian mistakes, unleashing multidimensional pandemonium?

I’m going to hazard a guess that most actual hedonic utilitarians consider it pretty abominable as well. That, rather like physicists and Schroedinger’s Cat, they use the thought experiment to say “…and so clearly we have some more understanding to do here” while being misunderstood as saying “…and this is how things really work!”.

Meanwhile, most Christians are actually kind of uncomfortable with the nonbelievers-cast-into-the-pit aspect, or at least with the set of eternal pit-dwellers limited to basically Adolf Hitler and Ted Bundy. Hence all the attempts to retcon in a purgatory or limbo or wholly unsavable souls being just regretfully extinguished. At which point, the hedonistic utilitarians start asking about free will and diversity of experiences in Heaven and maybe if this is how the universe actually works it could be the good-parts version of the infinite-wireheading scenario.

So I’m not convinced we have really departed from the split-tails metaphor, though it’s clearly not a neat ellipse.

I was thinking recently about the Kavanaugh accusations and this Reddit thread on how old women were when they first experienced unwanted sexualized attention (answer: even younger and sketchier than your already low expectations) and it occurred to me that sexual crimes and misdeeds are so hard for society to handle in a way that seems fair and just to all parties not just because of the he-said-she-said aspect, the frequent lack of dispositive physical evidence aspect, nor even just because of the “people are uniquely uncomfortable about sex” aspect (though this relates to my idea below), but also because of the fact that so much of sexual behaviour and norms surrounding sexual behaviour occupy somewhere closer to West Oakland, as opposed to Balboa Park on your map: that is, sexuality itself is definitely on the well-worn track of normal, unproblematic human behaviour, but it’s located somewhere on the edges of where intuitions begin to strongly diverge and cracks in the facade of general social consensus begin to appear.

Or to put it slightly differently, maybe it’s that normal sexuality is closer to the end of the overlapping lines than most other “normal” activity. So “violent rape, by an adult, of a child and/or obviously physically resisting and/or drugged victim” is Balboa Park: everyone agrees it’s wrong and you can get a lot less bad than that and still everyone will agree it’s wrong. But the problem is “mutually enjoyable sex between consenting adults” isn’t located at Civic Center or Powell St. It’s closer to West Oakland where, if you get more ambiguous than that, intuitions start to sharply diverge. Like, is slapping my adolescent niece’s butt through her clothes appropriate behaviour for an uncle who has a playful, amicable relationship with her? It seems not appropriate to me, but I can conceive of someone who wouldn’t think it so. Is a nineteen-year old boy having consensual sex with a sixteen-year old girl okay? It seems okay to me, if a bit on the border, yet I can conceive of someone who reasonably disagrees.

And, on the one hand, there seems to be widespread agreement of those reading things like the Reddit thread that most of this behaviour is creepy and definitely not okay, but if such behaviour is as common as this thread makes it seem (may not be representative due to tendency for those with worse experiences to report) then clearly there is a lot of breakdown, at the edges, of what constitutes acceptable sexual behaviour, this probably owing to the fact that even normal sex, especially at the stage of “first sexual encounter” as opposed to “married couple having sex for the 500th time,” is already kind of at the questionable end of normal behaviour: (stereotype warning! your intuitions may vary) women tend to prefer men take the lead in sexual advances with themselves either allowing or rejecting each additional advance: has the passion of that last kiss given me implicit permission to see how she reacts if I put my hand down her pants? It’s a pretty ambiguous business to begin with and doesn’t need to stray very far before it gets into diverging tails territory.

That’s a really good point.

I’d also state that

1. A lot of these issues turn into zero-sum games where more rights for women come at men’s expense and vice versa (look at evidence standards involving rape)

2. views differ widely on what’s OK and not OK: a feminist, a Christian conservative, and an MRA are going to give you really different answers.

You see the phenomenon of “tails coming apart”, or regression towards the mean, as it’s usually called, with a single variable measured twice as well, as long as there’s some random measurement error. You get the regression effect whenever there’s an imperfect correlation between any two sets of data.

Oooh, I hadn’t made that connection, thanks!

To be clear, the phenomena Scott describes above as “tails coming apart” isn’t equivalent to “regression to the mean”. The latter is due to observations containing random variation of some type, causing extreme observations to have “true” or “underlying” values that are less extreme, so future observations (or observations of other variables correlated with the unobserved “true” or “underlying” value) to be less extreme due to conditional probability. This is sometimes but not always the case when you have data where “tails come apart”.

Suppose you observe X1 drawn from a distribution around X1* (possibly X1 = X1* + epsilon, where epsilon is measurement error). If a future observation is drawn from this same distribution, you’ll see “regression to the mean”. Similarly, if you then observe X2, which is correlated with X1*, you’ll also see a phenomena that you can also reasonably call, “regression to the mean”.

If instead you observe Y1, which has no underlying distribution from which it’s drawn, and Y2 which is correlated with Y1, you won’t have “regression to the mean” in the usual sense of the concept.

The traditional example of regression to the mean (at least the first one I heard) is height, as passed from parent to child. Tall parents tend to have tall children, but if you’re the tallest person in your family, your children probably won’t be as tall as you, even controlling for the your spouse’s height.

I think “regression to the mean” applies well to the initial examples in this post (grip/arm strength, math/reading test scores), but I’m not sure it’s applicable to the morality stuff. I believe you’re claiming that “Happiness” is a concept built up of existing structures and not something underlying and generative. So if we ignore measurement error, I don’t think there’s an underlying mean for “positive emotion” and “subjective well-being” to regress towards. What we see is what we get.

So I guess what I’m saying is that “regression to the mean” is useful for describing a Network 2 situation but is inaccurate for a Network 1 situation.

I disagree. Regression towards the mean happens whenever there’s an imperfect correlation between two variables (and the residuals when regressing one on another are reasonably homoskedastic). The underlying causal structure–why there’s a correlation–is immaterial.

You will see regression towards the mean in the sense of “tails coming apart” even if you have no idea what the variables you have measure. If the correlation between A and B is 0.8, people whose A value is 2 standard deviations above the mean will, on average, have B values 0.8*2=1.6 standard deviations above the mean–tails come apart. This would be true even if the correlation was pure happenstance, e.g. one variable was the IQs of some people and the other the shoe sizes of some completely different people. Regression is a statistical, not causal, phenomenon.

Enjoyably enough, I think we’re just quibbling over the definition of “regression to the mean”. 🙂

IMO, “regression to the mean” is only a useful concept if it describes a change from across multiple observations along a single dimension towards an underlying baseline. And indeed, in the context which it was coined, there was a specific, unidemensional relationship, namely genetic reversion.