Last month I got to attend the Asilomar Conference on Beneficial AI. I tried to fight it off, saying I was totally unqualified to go to any AI-related conference. But the organizers assured me that it was an effort to bring together people from diverse fields to discuss risks ranging from technological unemployment to drones to superintelligence, and so it was totally okay that I’d never programmed anything more complicated than HELLO WORLD.

“Diverse fields” seems right. On the trip from San Francisco airport, my girlfriend and I shared a car with two computer science professors, the inventor of Ethereum, and a UN chemical weapons inspector. One of the computer science professors tried to make conversation by jokingly asking the weapons inspector if he’d ever argued with Saddam Hussein. “Yes,” said the inspector, not joking at all. The rest of the conference was even more interesting than that.

I spent the first night completely star-struck. Oh, that’s the founder of Skype. Oh, those are the people who made AlphaGo. Oh, that’s the guy who discovered the reason why the universe exists at all. This might have left me a little tongue-tied. How do you introduce yourself to eg David Chalmers? “Hey” seems insufficient for the gravity of the moment. “Hey, you’re David Chalmers!” doesn’t seem to communicate much he doesn’t already know. “Congratulations on being David Chalmers”, while proportionate, seems potentially awkward. I just don’t have an appropriate social script for this situation.

(the problem was resolved when Chalmers saw me staring at him, came up to me, and said “Hey, are you the guy who writes Slate Star Codex?”)

The conference policy discourages any kind of blow-by-blow description of who said what in order to prevent people from worrying about how what they say will be “reported” later on. But here are some general impressions I got from the talks and participants:

1. In part the conference was a coming-out party for AI safety research. One of the best received talks was about “breaking the taboo” on the subject, and mentioned a postdoc who had pursued his interest in it secretly lest his professor find out, only to learn later that his professor was also researching it secretly, lest everyone else find out.

The conference seemed like a (wildly successful) effort to contribute to the ongoing normalization of the subject. Offer people free food to spend a few days talking about autonomous weapons and biased algorithms and the menace of AlphaGo stealing jobs from hard-working human Go players, then sandwich an afternoon on superintelligence into the middle. Everyone could tell their friends they were going to hear about the poor unemployed Go players, and protest that they were only listening to Elon Musk talk about superintelligence because they happened to be in the area. The strategy worked. The conference attracted AI researchers so prestigious that even I had heard of them (including many who were publicly skeptical of superintelligence), and they all got to hear prestigious people call for “breaking the taboo” on AI safety research and get applauded. Then people talked about all of the lucrative grants they had gotten in the area. It did a great job of creating common knowledge that everyone agreed AI goal alignment research was valuable, in a way not entirely constrained by whether any such agreement actually existed.

2. Most of the economists there seemed pretty convinced that technological unemployment was real, important, and happening already. A few referred to Daron Acemoglu’s recent paper Robots And Jobs: Evidence From US Labor Markets, which says:

We estimate large and robust negative effects of robots on employment and wages. We show that commuting zones most affected by robots in the post-1990 era were on similar trends to others before 1990, and that the impact of robots is distinct and only weakly correlated with the prevalence of routine jobs, the impact of imports from China, and overall capital utilization. According to our estimates, each additional robot reduces employment by about seven workers, and one new robot per thousand workers reduces wages by 1.2 to 1.6 percent.

And apparently last year’s Nobel laureate Angus Deaton said that:

Globalisation for me seems to be not first-order harm and I find it very hard not to think about the billion people who have been dragged out of poverty as a result. I don’t think that globalisation is anywhere near the threat that robots are.

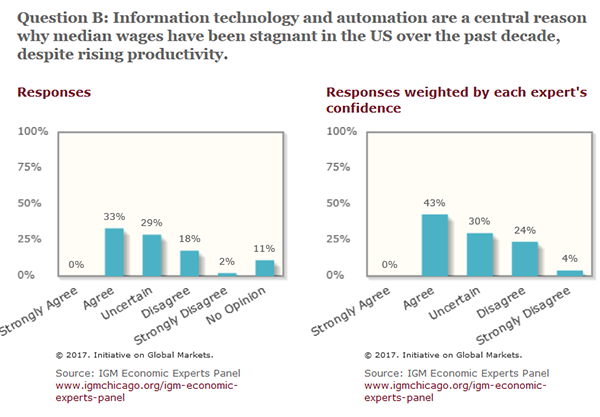

A friend reminded me that the kind of economists who go to AI conferences might be a biased sample, so I checked IGM’s Economic Expert Panel (now that I know about that I’m going to use it for everything):

It looks like economists are uncertain but lean towards supporting the theory, which really surprised me. I thought people were still talking about the Luddite fallacy and how it was impossible for new technology to increase unemployment because something something sewing machines something entire history of 19th and 20th centuries. I guess that’s changed.

I had heard the horse used as a counterexample to this before – ie the invention of the car put horses out of work, full stop, and now there are fewer of them. An economist at the conference added some meat to this story – the invention of the stirrup (which increased horse efficiency) and the railroad (which displaced the horse for long-range trips) increased the number of horses, but the invention of the car decreased it. This suggests that some kind of innovations might complement human labor and others replace it. So a pessimist could argue that the sewing machine (or whichever other past innovation) was more like the stirrup, but modern AIs will be more like the car.

3. A lot of people there were really optimistic that the solution to technological unemployment was to teach unemployed West Virginia truck drivers to code so they could participate in the AI revolution. I used to think this was a weird straw man occasionally trotted out by Freddie deBoer, but all these top economists were super enthusiastic about old white guys whose mill has fallen on hard times founding the next generation of nimble tech startups. I’m tempted to mock this, but maybe I shouldn’t – this From Coal To Code article says that the program has successfully rehabilitated Kentucky coal miners into Web developers. And I can’t think of a good argument why not – even from a biodeterminist perspective, nobody’s ever found that coal mining areas have lower IQ than anywhere else, so some of them ought to be potential web developers just like everywhere else. I still wanted to ask the panel “Given that 30-50% of kids fail high school algebra, how do you expect them to learn computer science?”, but by the time I had finished finding that statistic they had moved on to a different topic.

4. The cutting edge in AI goal alignment research is the idea of inverse reinforcement learning. Normal reinforcement learning is when you start with some value function (for example, “I want something that hits the target”) and use reinforcement to translate that into behavior (eg reinforcing things that come close to the target until the system learns to hit the target). Inverse reinforcement learning is when you start by looking at behavior and use it to determine some value function (for example, “that program keeps hitting that spot over there, I bet it’s targeting it for some reason”).
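To make the "look at behavior, guess the value function" direction concrete, here is a minimal sketch of inverse reinforcement learning on a toy problem. Everything in it is an assumption made for illustration: the seven-cell line world, the candidate value functions ("the target is cell g"), and the `BETA` parameter describing how reliably the observed program picks the better move. Real inverse reinforcement learners work with far richer hypothesis spaces.

```python
import math

POSITIONS = range(7)          # a tiny 1-D world: cells 0..6 (hypothetical)
ACTIONS = (-1, 0, +1)         # step left, stay, step right
BETA = 2.0                    # assumed "rationality" of the observed program

def step(state, action):
    """Move along the line, clamped to the ends of the world."""
    return min(max(state + action, 0), len(POSITIONS) - 1)

def action_likelihood(state, action, target):
    """P(action | state, target) for a noisily rational agent that prefers
    actions whose next cell is closer to the hypothesised target."""
    scores = {a: math.exp(-BETA * abs(step(state, a) - target)) for a in ACTIONS}
    return scores[action] / sum(scores.values())

def infer_target(demonstrations):
    """Posterior over candidate targets given observed (state, action) pairs,
    starting from a uniform prior over all cells."""
    posterior = {g: 1.0 for g in POSITIONS}
    for state, action in demonstrations:
        for g in POSITIONS:
            posterior[g] *= action_likelihood(state, action, g)
    total = sum(posterior.values())
    return {g: p / total for g, p in posterior.items()}

# Observed behaviour: the program walks right from cell 0, then sits on cell 4.
demos = [(0, +1), (1, +1), (2, +1), (3, +1), (4, 0), (4, 0)]
beliefs = infer_target(demos)
best_guess = max(beliefs, key=beliefs.get)
print(best_guess, round(beliefs[best_guess], 3))
```

The learner never sees the goal directly. It only scores each candidate goal by how well it explains the moves it watched, which is the "that program keeps hitting that spot over there" inference in miniature.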

Since we can’t explain human ethics very clearly, maybe it would be easier to tell an inverse reinforcement learner to watch the stuff humans do and try to figure out what values we’re working off of – one obvious problem being that our values predict our actions much less than we might wish. Presumably this is solvable if we assume that our moral statements are also behavior worth learning from.

A more complicated problem: humans don’t have utility functions, and an AI that assumes we do might come up with some sort of monstrosity that predicts human behavior really well while not fitting our idea of morality at all. Formalizing what exactly humans do have and what exactly it means to approximate that thing might turn out to be an important problem here.

5. Related: a whole bunch of problems go away if AIs, instead of receiving rewards based on the state of the world, treat the reward signal as information about a reward function which they only imperfectly understand. For example, suppose an AI wants to maximize “human values”, but knows that it doesn’t really understand human values very well. Such an AI might try to learn things, and if the expected reward was high enough it might try to take actions in the world. But it wouldn’t (contra Omohundro) naturally resist being turned off, since it might believe the human turning it off understood human values better than it did and had some human-value-compliant reason for wanting it gone. This sort of AI also might not wirehead – it would have no reason to think that wireheading was the best way to learn about and fulfill human values.
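A toy calculation shows why uncertainty about the reward removes the incentive to resist shutdown. This is only a sketch under strong assumptions: the robot's belief about the action's true value is a made-up Gaussian, and the human is assumed to know the true value and to press the off switch exactly when the action would be harmful. The function and policy names are hypothetical.

```python
import random

def expected_values(utility_samples):
    """Compare three policies for a robot that is unsure how much the human
    values its proposed action. Each sample is one possible true utility."""
    n = len(utility_samples)
    act_anyway = sum(utility_samples) / n      # ignore the human entirely
    switch_off = 0.0                           # shut down and do nothing
    # Defer: the human (assumed to know the true utility) lets the action
    # proceed only when it is beneficial and presses the off switch otherwise.
    defer = sum(max(u, 0.0) for u in utility_samples) / n
    return {"act_anyway": act_anyway, "switch_off": switch_off, "defer": defer}

random.seed(0)
# Hypothetical belief: the action is probably mildly good, but might be bad.
uncertain = [random.gauss(0.5, 1.0) for _ in range(10_000)]
certain = [0.5] * 10_000   # a robot with no uncertainty about the reward

print(expected_values(uncertain))
print(expected_values(certain))
```

For the uncertain robot, deferring to the human is at least as good as either acting unilaterally or shutting itself down, because the human's veto filters out exactly the cases where the action was a mistake. For the robot that is certain, deferring gains nothing over acting, so the incentive to keep the off switch working comes entirely from the uncertainty.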

The technical people at the conference seemed to think this idea of uncertainty about reward was technically possible, but would require a ground-up reimagining of reinforcement learning. If true, it would be a perfect example of what Nick Bostrom et al have been trying to convince people of since forever: there are good ideas to mitigate AI risk, but they have to be studied early so that they can be incorporated into the field early on.

6. AlphaGo has gotten much better since beating Lee Sedol and its creators are now trying to understand the idea of truly optimal play. I would have expected Go players to be pretty pissed about being made obsolete, but in fact they think of Go as a form of art and are awed and delighted to see it performed at superhuman levels.

More interesting for the rest of us, AlphaGo is playing moves and styles that all human masters had dismissed as stupid centuries ago. Human champion Ke Jie said that:

After humanity spent thousands of years improving our tactics, computers tell us that humans are completely wrong. I would go as far as to say not a single human has touched the edge of the truth of Go.

A couple of people talked about how the quest for “optimal Go” wasn’t just about one game, but about grading human communities. Here we have this group of brilliant people who have been competing against each other for centuries, gradually refining their techniques. Did they come pretty close to doing as well as merely human minds could manage? Or did non-intellectual factors – politics, conformity, getting trapped at local maxima – cause them to ignore big parts of possibility-space? Right now it’s very preliminarily looking like the latter, which would be a really interesting result – especially if it gets replicated once AIs take over other human fields.

One Go master said that he would have “slapped” a student for playing a strategy AlphaGo won with. Might we one day be able to do a play-by-play of Go history, finding out where human strategists went wrong, which avenues they closed unnecessarily, and what institutions and thought processes were most likely to tend towards the optimal play AlphaGo has determined? If so, maybe we could have twenty or thirty years to apply the knowledge gained to our own fields before AIs take over those too.

7. People For The Ethical Treatment Of Reinforcement Learners got a couple of shout-outs, for some reason. One reinforcement learning expert pointed out that the problem was trivial, because of a theorem that program behavior wouldn’t be affected by global shifts in reinforcement levels (ie instead of going from +10 to -10, go from +30 to +10). I’m not sure if I’m understanding this right, or if this kind of trick would affect a program’s conscious experiences, or if anyone involved in this discussion is serious.
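For what it's worth, the shift-invariance claim is easy to check on a toy case. The sketch below runs value iteration on a made-up three-state task (the states, rewards, and discount factor are all hypothetical) and compares the best action in each state with and without a constant added to every reward. It assumes a continuing, discounted task; in tasks where episodes can end early, a global shift can change behavior, so this is not a general proof.

```python
GAMMA = 0.9  # discount factor (assumed)

# Hypothetical continuing task: TRANSITIONS[state][action] = (next_state, reward)
TRANSITIONS = {
    "A": {"left": ("A", -10.0), "right": ("B", 0.0)},
    "B": {"left": ("A", 0.0), "right": ("C", 10.0)},
    "C": {"left": ("B", -10.0), "right": ("C", 5.0)},
}

def greedy_policy(shift, sweeps=500):
    """Run value iteration with `shift` added to every reward and return the
    best action in each state."""
    values = {s: 0.0 for s in TRANSITIONS}
    for _ in range(sweeps):
        values = {
            s: max(r + shift + GAMMA * values[nxt] for nxt, r in acts.values())
            for s, acts in TRANSITIONS.items()
        }
    return {
        s: max(acts, key=lambda a: acts[a][1] + shift + GAMMA * values[acts[a][0]])
        for s, acts in TRANSITIONS.items()
    }

original = greedy_policy(0.0)    # rewards range from -10 to +10
shifted = greedy_policy(20.0)    # same task, rewards range from +10 to +30
print(original)
print(shifted)
print(original == shifted)
```

Adding a constant c to every reward raises the value of every state by the same amount, c / (1 - GAMMA), so the ranking of actions never changes. Whether that says anything about a program's experiences is a separate question the code can't answer.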

8. One theme that kept coming up was that most modern machine learning algorithms aren’t “transparent” – they can’t give reasons for their choices, and it’s difficult for humans to read them off of the connection weights that form their “brains”. This becomes especially awkward if you’re using the AI for something important. Imagine a future inmate asking why he was denied parole, and the answer being “nobody knows and it’s impossible to find out even in principle”. Even if the AI involved were generally accurate and could predict recidivism at superhuman levels, that’s a hard pill to swallow.

(DeepMind employs a Go master to help explain AlphaGo’s decisions back to its own programmers, which is probably a metaphor for something)

This problem scales with the size of the AI; a superintelligence whose decision-making process is completely opaque sounds pretty scary. This is the “treacherous turn” again; you can train an AI to learn human values, and you can observe it doing something that looks like following human values, but you can never “reach inside” and see what it’s “really thinking”. This could be pretty bad if what it’s really thinking is “I will lull the humans into a false sense of complacency until they give me more power”. There seem to be various teams working on the issue.

But I’m also interested in what it says about us. Are the neurons in our brain some kind of uniquely readable agent that is for some reason transparent to itself in a way other networks aren’t? Or should we follow Nisbett and Wilson in saying that our own brains are an impenetrable mass of edge weights just like everything else, and we’re forced to guess at the reasons motivating our own cognitive processes?

9. One discipline I shouldn’t have been so surprised to see represented at the multidisciplinary conference was politics. A lot of the political scientists and lawyers there focused on autonomous weapons, but some were thinking about AI arms races. If anyone gets close to superintelligence, we want to give them time to test it for safety before releasing it into the wild. But if two competing teams are equally close and there’s a big first-mover advantage (for example, first mover takes over the world), then both groups will probably skip the safety testing.

On an intranational level, this suggests a need for regulation; on an international one, it suggests a need for cooperation. The Asilomar attendees were mostly Americans and Europeans, and some of them were pretty well-connected in their respective governments. But we realized we didn’t have the same kind of contacts in the Chinese and Russian AI communities, which might help if we needed some kind of grassroots effort to defuse an AI arms race before it started. If anyone here is a Chinese or Russian AI scientist, or has contacts with Chinese or Russian AI scientists, please let me know and I can direct you to the appropriate people.

10. In the end we debated some principles to be added into a framework that would form a basis for creating a guideline to lay out a vision for ethical AI. Most of these were generic platitudes, like “we believe the benefits of AI should go to everybody”. There was a lunch session where we were supposed to discuss these and maybe change the wording and decide which ones we did and didn’t support.

There are lots of studies in psychology and neuroscience about what people’s senses do when presented with inadequate stimuli, like in a sensory deprivation tank. Usually they go haywire and hallucinate random things. I was reminded of this as I watched a bunch of geniuses debate generic platitudes. It was hilarious. I got the lowdown from a couple of friends who were all sitting at different tables, and everyone responded in different ways, from rolling their eyes at the project to getting really emotionally invested in it. My own table was moderated by a Harvard philosophy professor who obviously deserved it. He clearly and fluidly explained the moral principles behind each of the suggestions, encouraged us to debate them reasonably, and then led us to what seemed in hindsight the obviously correct answer. I had already read one of his books but I am planning to order more. Meanwhile according to my girlfriend other tables did everything short of come to blows.

Overall it was an amazing experience and many thanks to the Future of Life Institute for putting on the conference and inviting me. Some pictures (not taken by me) below:

The conference center

The panel on superintelligence.

We passed this store on the way to the conference. I thought it was kabbalistically relevant to AI risk.

I have arguments for the relevance of diversity in future conferences, not only in disciplines, but also in national, cultural, gender, and religious backgrounds.

1. Topics related to future of artificial intelligence affect all humanity.

2. Statistically speaking, people from different national, cultural, gender, etc. backgrounds tend to have different viewpoints on important moral, political, and philosophical issues.

3. The interests of humanity cannot be accurately represented by a subgroup.

4. Unless you have intimately lived in the experience of another language, culture, gender, etc., you don’t know what you don’t know.

5. Blind spots in science, politics, and philosophy based on Euro-centrism have had shocking and dire consequences throughout history. Scientists have particularly been drawn towards scientific racism with respect to intelligence. The mention of bio-determinism in this article obliquely acknowledges this often self-interested fascination with the idea of biologically based intellectual inferiority of some groups, even though the consensus of geneticists and other scholars in the field does not accept the idea of biological intellectual determinism.

6. This deep-rooted history of scientific racism, social Darwinism, and lack of self-reflection has had terrible effects on the development of technology, including its use in colonialism, genocide, forced sterilization, warfare, and oppression of people groups not represented in the majority scientific establishment. Unless these tendencies are rooted out, the development of AI could lead to similar results.

7. Creating limitations on the development of artificial intelligence is impossible without broad political and social support from multiple international actors.

8. A small group of elite intelligentsia that is mostly or entirely represented by Euro-centric or Asian-centric perspectives will not have broad-based social support. Political buy-in may be required for multiple nations, including China and Russia, but also possibly Iran, Israel, Turkey, South Africa, or a range of nations whose economic and scientific development over the next several decades is difficult to predict.

9. Lack of lived experience or intimate understanding of oppression, exclusion, or invasion of personal body space can lead to an improper calculation of the importance of these risks in developing AI.

10. Underlying biases will be coded into AI. (This is already happening in predictive policing involving algorithms used by local police departments, etc.)

11. Constant vigilance and affirmative efforts to increase diversity are necessary to overcome the ordinary and comfortable assumptions of your particular background and experience, even in intellectual environments.

12. “Nothing about us without us” is a principle derived from the disability rights movement. When decisions intimately involve a particular group of people, they must be represented in the decision-making process. Again, AI affects all humanity. It is wrong to make decisions for all humanity without diverse representation of its different interests.

This is not an attack on the race, gender, ability, perspective, etc., of any participants. People from European and Asian contexts, male and heterosexual identities, and English-language cultures can and must be represented. And, of course, you have to start somewhere. Economic and social inequality greatly reduce the pool of diverse participants with scientific, legal, and other relevant experience. However, without greater diversity at some point, future conferences will be limited in their future effects and legitimacy, and will have an increased probability of significant blind spots while making vital decisions.

There are many well-established ways to increase diversity, if organizers are interested in making concrete efforts in this direction. This can include outreach to affinity groups of scientists or other experts from minority populations, affirmative efforts to seek and find non-majority group participants, “snowballing” by beginning with a small diverse group and networking outward, incorporating diversity principles into the highest levels of group priorities, etc. Lack of diversity is a problem that cannot ever be completely overcome, but it can be improved with sustained effort.

We have more jobs today than we ever had in the past. This includes more jobs that don’t require a college education.

We have more automation today than we ever had in the past.

Arguing that automation decreases net employment is not supported by any evidence whatsoever. In fact, all available evidence (100%) points to the exact opposite conclusion.

The reason we sometimes see regional unemployment is not because automation decreases the total number of jobs, but because some people refuse to move to find work. However, calling this “technological unemployment” is highly suspect, as the cause is not that there isn’t work for these people, but that they’re unwilling to move in order to pursue economic opportunity. It is the same thing we saw with outsourcing.

We really don’t want to enable such people.

This of course makes sense from basic economic principles; human labor is a scarce resource. We can simply reallocate that resource and end up with more valuable stuff as a result. There’s always stuff that needs doing which isn’t being done.

Also, horses are always a terribly stupid example – horses aren’t people and don’t have jobs. Horses aren’t like people – they’re like equipment. And we replace equipment quite frequently.

—

I do have to admit it always amuses me how seriously people take some of these things, though.

Until they get together and vote in Trump. Whups.

We shouldn’t spend a lot of resources shielding people from economic reality, but at a certain point those people get together and demand their unreality at the voting booth.

Speaking purely from a Machiavellian viewpoint, they need to be met part of the way there and for long enough that that doesn’t happen.

From a more considerate viewpoint, they need to be given enough time to plan their careers, so that if they became a truck driver 5 years ago, they aren’t unemployable for the last 20 years of their life. Perhaps not enough to keep their dead region of America vibrant, though.

Indeed, people were unwilling to move halfway around the world to a foreign country where indoor plumbing was a luxury in order to make 1/10th their previous wage (and therefore end up unable to save enough to move back to their first world origins)… and besides the countries outsourced-to wouldn’t accept them anyway.

Yes, and there’s a reason that department is called “Human Resources”. To employers, people are like equipment too; only leased.

Oh … replace “human” with “god”, and “AI” with “human” and you get the recognizably typical outlook of mystic religious sects.

AI, trying to decipher the mysterious “will of humans”, I find that weirdly amusing.

Re. old white mill workers learning to code. I don’t think it’s as outré as it might sound. I’m reminded of something in Vernor Vinge somewhere (or it might have been Stross?), about the distributed solution of complex algorithms farmed out to a micropaid public in the form of pachinko-like pastimes (thereby leveraging random magic in random people’s brains).

And then of course there’s maker culture, which seems to have become unfashionable as a chattering class topic, but which looks like it’s carrying on a vigorous life outside the limelight.

And while it’s true that combining useful tasks with the Csikszentmihalyi-endorsed work modus of “making a game out of it” seems to have so far eluded developers (the closest experiments so far being a few lame attempts to make gaming “worthy” in learning terms, or meditative, or exercising, or whatever), it’s still early days.

There are developments in the gaming industry at the moment, where content creators get small payments from the developer for creating extra content for other users (e.g. a game I play at the moment, Warframe – top class third person multiplayer shooter, btw – has people making the game’s equivalent of variant armors via the Steam system). That’s one piece of the puzzle we’re watching do a species transition in realtime.

The only thing missing, to make this kind of virtual malarkey truly rewarding labour, in a way that would satisfy the whole human being (as traditionally), is the physical component – but that can be substituted for in other ways. Supposing the “bundle of gadgets” view of the mind is true, then these things are relatively compartmentalized, and being dumb on their own, easily satisfied (as the occasional bit of camping or hiking is somewhat of a substitute for our ancestral environment). We’re moving to a future both suitable for and inducing ADHD in a multitude of real and virtual environments, as opposed to lifetimes of ruminant monotoil in dark, satanic mills.

I wonder, too, if this kind of distributed play, combined with AI, could end up becoming something like Iain Banks’ Minds – solving the economy in good, old-fashioned socialist style, eventually supplanting the market. Several possible layers of irony there 🙂 In fact, with haptics and large halls dedicated to fun augmented reality LARP-ing, we’d have something like the Chinese Room 🙂

The education thing is just down to the fact that education is shit – partly because it’s all still based on the Bismarckian model of State indoctrination, currently being repurposed by the extremist Left-wing ideologues of today’s Humanities academy; partly because the State (every State, just some less ineptly than others) has for over half a century been inflating educational achievement to make the numbers look good, to buy votes. Working class people are plenty smart enough (to be brutal about it, it’s the dysfunctional underclass that’s truly dumb), and anyway, all you need to do is to knit all those random sparks of magic in people’s brains together into a cohesive, problem-solving whole, and reward appropriately.

Plus there’s crafts – there could easily be a two-tier system where at one level you have cheap robot-produced stuff that’s fully functional but has that slight uncanny valley effect that everything digital has (and probably will have for the foreseeable future – you can’t get as many bits even in 96khz/24bit audio recording as there is “grain” in analogue tape; people still generally value film+real sets over RED+CGI; the resin replica of Bastet you can buy from the museum will never be quite as satisfying as the precious original), and on another tier, people still paying good money for artisan bits and bobs, or even more closely-tailored (than robots with mass production constraints would make) maker-produced stuff. Even if you go up to the design level, you’ll probably have the same “can’t quite put my finger on it” difference.

Plus, computers always go wrong – this is the rock against which many fond, spergy hopes about the future of AI, robots, etc., are always going to crash, and it continually surprises me that so many of these exploratory discussions keep forgetting this most obvious fact that everybody on Earth now knows. The haphazard, rickety but robust chaos-eating products of millions of years of evolutionary R&D still have the advantage, and I don’t see that advantage being lost for quite some time yet. Still, it’s good to air these things now.

Scott- many of the comments here reminded me of your statement late in the report:

Many posts about horses and lumber-jacks and automation (did anybody say buggy-whip?). We should all be concerned about our own jobs being replaced by AI. Plumbers, HVAC techs and Electricians have a lot less to worry about than Professors, Managers and Analysts when it comes to AI. Sure, truck drivers will be replaced by drones so their kids will have to find a new way to get by. Our grand-kids will want running water, A/C and electricity (and whatever comes after that… batteries in the basement and panels on the roof). They will not be listening to an Accountant or Professor offer platitudes in person (who BTW pulls down 5x poverty rate, or so).

Just like popular music groups won’t get lots of people to live shows once digital music is widely available.

baconbacon- there will always be room for rockstars! and to make money these days they have to attract live audiences because automation has removed all the middle-men from the value chain. The now-unemployed middlemen were the ones screaming about Napster the loudest.

The art survives. And re-wiring a 100 year old house is an art… just so you know.

I’ve done a lot of wiring to my 90 year old house- in a post scarcity world I think I am just getting one of them super cheap new houses.

I can’t speak for everyone else ITT, of course, but I for one am interested in this stuff precisely because it is acutely apparent to me that most of the value I provide my employers as a white-collar schlub could in fact be automated with today’s technology, let alone future ditto. In fact, I started a thread several Open Threads past about the potential automatability of skilled trades vs. white-collar work. Not sure how or if I should let that guide my career choices, but it’s absolutely on my mind.

One way around robots stealing our lives is to restructure our communities. Already there are apps/sites focused on sharing within a community: you’re a masseuse and you massage someone in your community, and some professional chef cooks you dinner a week later when you’re in a pinch. We need communities where people want to rely on each other, and accept that we need to switch to a mostly service economy when it comes to people.

I know massage chairs exist, but the best ones still aren’t as good as a human. And is it really worth building a machine that is? Human engineering ability far exceeds our need to engineer.

I think this is a good intermediate step, but what happens when you can buy a massage robot from Amazon that is 100 times better at massaging people than a human masseuse? You ask “is it really worth building a machine that [is that good]”, but that’s the wrong question. If it’s theoretically possible to build such a machine, then someone will not only build it, but also market it, because he can make money that way – a prospect that will become increasingly important in an increasingly automated economy.

I have a feeling that my grandkids (if any) won’t even know what an “A/C” is. They’ll just expect every house to always exist at optimal temperature. They will never see a human A/C repairman, except maybe at some sort of an artisanal renaissance faire: “see, kids, this is how we humans used to keep our houses cool in the summer, before the drones were invented…”

I think there’s a way to fabricate ethics, in a sense. Using the idea of liberty and claim rights we can outline what people are permitted to do, what they are obligated to do, and what they must refrain from. It wouldn’t be easy, but I suspect a lot of preferred human action could be coded using this scheme. Probably not a cure-all for AI, it could serve as a decent starting point.

Yes – I would want my robot servant to be a deontologist.

I am indeed assuming that no such superhuman AI is coming in the near future. In my opinion, the automation technologies I see today have serious limitations and will continue to require humans for as long as I am comfortable extrapolating the future (a few decades).

I’m not denying the possibility of the creation of a superhuman AI, but I am not sure how much we could meaningfully prepare for it. In the event of a true hard take off, all our preparation may only keep this AI from turning us into paperclips for another 30-45 minutes.

There seems to be a general assumption that it’s important for the AI to (to at least some extent) behave according to our official, verbally stated morality, rather than that which can be inferred from our actions (including the action of making moral statements). It’s not obvious to me that this is actually desirable.

Well, that’s what we officially verbally state. Our actions may be different.

“Here we have this group of brilliant people who have been competing against each other for centuries, gradually refining their techniques. Did they come pretty close to doing as well as merely human minds could manage? Or did non-intellectual factors – politics, conformity, getting trapped at local maxima – cause them to ignore big parts of possibility-space?”

This is a beautiful encapsulation of the profound misunderstanding of intelligence at the heart of the “AI risk” movement. All those brilliant Go players did exactly as well as merely human minds could manage–because that’s how well they managed. Blaming their failure to do better on “non-intellectual factors” implicitly assumes that there’s some kind of pure intellectual essence in every human brain–call it “intelligence”, if you like, although “soul” would be more apt–that engages in problem-solving as a purely abstract, Platonic, intellectual activity, and can only be limited by its quality and quantity, or by its distraction by “non-intellectual factors”.

In reality, the brain does what the brain does–shooting associations around, mixing emotions, memories, distractions and ideas together in a big ball of neuron-firing. Sometimes all that firing generates useful Go moves or strategies, and sometimes it produces bad ones, or none at all. Some people’s brains, through a combination of prior aptitude and arduous training, seem to produce good Go moves and strategies more often than others’. But the gaps or flaws in what they produce aren’t a result of “non-intellectual factors”–they’re part and parcel of the individual and collective quirks of their brain activity.

Now, it turns out that the world’s best modern machine learning algorithms produce a different set of moves and strategies from the ones that the world’s best Go players produce, and that the former more often than not beat the latter. Of course, we expect the next generation of machine algorithms to produce moves and strategies that allow them to beat the current generation. Does that mean that the current generation are being distracted by “non-intellectual factors”? Of course not–they’re simply generating the moves and strategies that their design and inputs cause them to generate, as are their successors, not to mention their human predecessors. There’s no pure essence of problem-solving here–just data-processing machines doing what they’re programmed to do with the inputs they’re given.

Take away that pure essence of problem-solving–the soul, if you will–and the prospect of “hyper-intelligent AI” (if such a thing even has any meaning) starts to look a lot less scary. A huge, complicated multi-purpose problem-solver will still behave the way it’s programmed to behave, just as we do, and as Go programs do. And if we don’t horribly botch the implementation (a completely different risk that applies equally to much dumber technologies), there’s no more reason to expect it’ll destroy humanity, than there is to expect a really, really proficient Go algorithm to destroy humanity.

>There are lots of studies in psychology and neuroscience about what people’s senses do when presented with inadequate stimuli, like in a sensory deprivation tank. Usually they go haywire and hallucinate random things. I was reminded of this as I watched a bunch of geniuses debate generic platitudes. It was hilarious.

This reminds me of the movie Simon from 1980:

https://en.wikipedia.org/wiki/Simon_(1980_film)

https://www.youtube.com/watch?v=LOj5wptt-BU

Scott, what is the basis for your assertion that human beings don’t have utility functions?

Not Scott, but when we talk about having a utility function, we are usually speaking under the axioms of Von Neumann-Morgenstern utility theory: completeness, transitivity, continuity, and independence. I’ve seen various attacks on these w.r.t. human preference, but the Allais paradox, which attacks the independence axiom, is one of the more famous and important.

(Transitivity also looks weak to me: it is not very hard to arrange a situation where people will temporarily, at least, prefer A to B and B to C and C to A. These inconsistencies are usually resolved once pointed out, but a utility function that’s time-variant in unpredictable ways is not much of a utility function.)
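For readers who haven’t seen the Allais paradox worked through, here is a small sketch of why the commonly observed choices conflict with the independence axiom. The payoffs and probabilities are the standard textbook version of the paradox, not something stated in this thread, and the function names are my own. The point is that for any utility assignment whatsoever, the expected-utility gap between gambles 1A and 1B is identical to the gap between 2A and 2B, so an expected-utility maximizer who prefers 1A to 1B must also prefer 2A to 2B. Many people choose 1A and 2B.

```python
from fractions import Fraction as F
import random

# Standard Allais gambles, written as {payoff in $ millions: probability}.
G1A = {1: F(1)}
G1B = {1: F(89, 100), 5: F(10, 100), 0: F(1, 100)}
G2A = {1: F(11, 100), 0: F(89, 100)}
G2B = {5: F(10, 100), 0: F(90, 100)}


def expected_utility(gamble, u):
    """Expected utility of a gamble under utility assignment u."""
    return sum(p * u[x] for x, p in gamble.items())


def allais_gaps(u):
    """Return (EU(1A) - EU(1B), EU(2A) - EU(2B)) for utility assignment u."""
    gap1 = expected_utility(G1A, u) - expected_utility(G1B, u)
    gap2 = expected_utility(G2A, u) - expected_utility(G2B, u)
    return gap1, gap2


def gaps_always_match(trials=1000, seed=0):
    """Check on random utility assignments that the two gaps coincide."""
    rng = random.Random(seed)
    for _ in range(trials):
        u = {x: F(rng.randint(-1000, 1000)) for x in (0, 1, 5)}
        gap1, gap2 = allais_gaps(u)
        if gap1 != gap2:
            return False
    return True
```

Both gaps reduce algebraically to 0.11·u(1) − 0.10·u(5) − 0.01·u(0), which is why they can never have opposite signs. Exact fractions are used so the comparison isn’t muddied by floating-point rounding.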

Adding to what Nornagest said: because the VNM utility theorem is biconditional — an individual’s preferences can be characterized by a utility function if, and only if, said preferences satisfy the given axioms — if the axioms are violated, then we know that the individual has no utility function.

The continuity axiom has also been challenged (in both a descriptive and a normative sense).

Interesting. I think there’s a sort of necessity argument that human beings do have utility functions, even granted that they don’t have the VNM regularity properties.

After all, we do make decisions, and unless one is prepared to go full-tilt-boogie mysterian that has to be the outcome of some kind of weighting and scoring process, some kind of computation. Scott’s claim that humans have no utility functions seemed bizarre to me because it seemed tantamount to denying that such computation takes place, and … er, what else could be happening? But I see now that he meant a much more specific thing, denying formal regularity properties. OK then.

Speculating…I think what’s behind the observed regularity failures is that humans actually have a patchwork of situationally-triggered utility-functions-in-the-VNM-sense; there is local coherence, but the decision process as a whole is a messy, emergent kluge whose only justification is that it was just barely good enough to support our ancestors’ reproductive success.

People have very lumpy preferences. A personal anecdote: I have been looking at houses for several years now, knowing that we will eventually move and basically just monitoring the market. Of the hundreds of online listings I have perused, a handful have been visited, and of that handful, 2 very specifically elicited a response of “I have to own this house and live here, I am willing to sell other assets/give up my expensive hobbies for the next several years to make this happen”. There is very little gradient, with a bunch of “nopes”, a few “yes, if many other conditions are met” and a small number of “oh dear god, please yes” types.

Lumpiness doesn’t fit with a function very well, but it is how many people react in terms of car buying or college decisions, where one option is an “oh dear god yes” and lots of effort gets put into making that happen, with much less thought and effort put into fallback positions.

Perhaps this is just me admitting to certain political biases, but I often struggle taking seriously the threat of technological unemployment for the same reasons I struggle to take seriously the threat of climate change. I keep getting back to the following strain of logic…

1. A rather large portion of the tech/science community is strongly left-leaning/progressive

2. Said people have suddenly discovered a problem with the potential to completely wipe out civilization as we know it

3. Sure, you don’t really notice the problem now, but they assure us that once it starts it will be impossible to reverse and will get very bad very quickly without immediate action

4. The only proposed solutions involve implementing the same types of socialist economic policies that progressives have been constantly advocating for since the 1920s

Granted, the AI people do a better job on #4 than the climate change people, but I still feel like a whole lot of arguments about the seriousness of the threat of technological unemployment inevitably include “… and that’s why we need socialism!” as the final line.

A lot of people often express displeasure about how the climate debate has become so “politicized.” I suspect this is a large reason why. I would encourage those concerned about technological unemployment to really try to not emphasize the “and we solve this by nationalizing Google and instituting a giant UBI” part of your analysis – lest the same sort of partisan divide start to manifest itself here.

My perception at SSC is that there is not a shared feeling that we must preemptively implement socialist policies, but rather, that we should think through possible scenarios and have solutions ready.

Is this really so objectionable?

Not really, no. I just have a perception that a whole lot of people are leaping right to “and obviously this will require the abolition of property rights as we know them.”

And they may be right. But being too enthusiastic about that sort of thing paints a picture that you might not want to be painting…

For what it’s worth, I’m not seeing a lot of “and obviously this will require the abolition of property rights as we know them.” A lot of enthusiasm about UBI, yes, and a lot of handwaving about that proposal’s financial issues, but there’s a lot of daylight between that and xxxFullCommunism420xxx.

If we’re assuming that the UBI is the only source of income for the vast majority of the population, I think that’s de facto socialism – but I’m sure people could argue otherwise.

Sure, in the sense that it achieves socialist goals. But that’s one thing and the abolition of property rights is another. If it turns out there’s a way we can achieve the former without troubling with the latter (and without completely breaking the incentive structure that makes productive society work, which I see as pretty much the same thing but you may not), then that’s cool with me; my entire objection to socialism lies in the realm of means and incentives. We all want freedom and prosperity, right?

I’m just kinda skeptical that UBI can get us there in the short term, robots or no robots.

@Matt M

UBI is the opposite of the elimination of property. It is granting property to people (usually in the form of money, which turns property into easily tradeable tokens).

You have to keep in mind that there are (at least) two definitions of socialism. One is to eliminate property, which we generally call communism today. The other is to do wealth transfers, which doesn’t eliminate property and/or capitalism, but equalizes the buying power somewhat within the capitalist system. The latter is what most people who call themselves socialists today want.

That’s one of the things they want. But most of them also want a lot of political control over how things are done, for any of a variety of reasons. Bernie Sanders was not campaigning for wealth redistribution plus extensive deregulation.

They may speak positively of Denmark and similar societies, but that’s in part because they do not realize that those societies, although more redistributive than the U.S., have less government control of other sorts.

UBI is the opposite of the elimination of property. It is granting property to people (usually in the form of money, which turns property into easily tradeable tokens).

It is granting people ration cards to spend on consumption goods while the means of production are controlled by the state.

The extent to which it resembles our current system says more about how far from genuine market principles our current system has strayed than it does about anything else…

Matt M

UBI proposals generally assume that the means of production are controlled by the free market and that tax income is used to pay for the UBIs.

I think that you fail to understand what the most common proposals for a UBI actually involve.

You are straight up just making up definitions. Free market doesn’t mean whatever you feel like it meaning; it has an actual definition, and you can’t say “the free market controls the means of production, but then we take part of that production and do X with it”. Controlling what happens to the output is controlling the means of production, and so isn’t “the free market”.

Of course this is how fascists framed it as well “hey, you guys get to keep the factory, the government is just going to make sure you use it in socially appropriate ways”.

@baconbacon

OK, then make it ‘capitalism.’

Taxation still preserves the basic free market mechanism of supply/demand, which is not true if you have a single producer.

That was my point and your nitpick in no way addressed my actual argument.

I think that you fail to understand what the most common proposals for a UBI actually involve.

Most common proposals for UBI are operating in the economic reality of today where productive assets are ridiculously widely dispersed, available at relatively low cost to most people, and where labor alone is still a large source of productivity.

The situation becomes very different if you assume that all productive assets are held by a fraction of a percent of the public, and that all value-creating activities ultimately stem from these assets. A UBI in that environment is much closer to “the newly elected leader of the democratic peoples republic seizes control of de beers’ diamond mine and shares the wealth with the people while allowing de beers some nominal control over production and a decent share of the remaining profits because dear leader doesn’t know much about running a diamond mine” than it is to “the free market”

It is not a nitpick; you are attempting to win the argument at every step by framing the discussion and hiding costs. A UBI isn’t capitalism plus a safety net, it’s capitalism minus parts of capitalism plus a safety net. You (and basically every framer of the discussion) keep skipping the middle part as if it doesn’t exist.

If you don’t address the costs of your proposals you don’t have an argument, you have propaganda.

I also have no idea what this line is in reference to.

What part of capitalism do you imagine a UBI would remove, that we didn’t already do away with back when we invented taxes?

In what part of this argument about the future have we been discussing the status quo?

In terms of what the UBI gives up, philosophically it’s the idea of assistance being temporary and targeted (some programs in the US do this, but on a far smaller scale than a UBI would). It says “even if there was no poverty the government still is and should be in the business of redistribution, that the only reason not to have redistribution is perfect income equality.” It will, likely and over time, bind those on the net receiving end into what is practically a single-issue voting bloc, as SS has done for seniors. It will treat immigrants in one of two ways, either as second-class citizens who don’t get UBI or as not allowed into the country without proof of employment with earnings > X, and it will likely decrease the labor participation of the poor.

That is just if it is done really well, not if it is done the way it actually will be implemented.

Baconbacon I think you are being a little uncharitable, at first blush I assumed, as Aapje has now confirmed, that ‘free market’ was just intended to imply ‘the mechanism of our current economy’.

I agree with Aapje, I have never seen a suggestion for UBI that coupled it with communism, or any other major change to property rights, the common form I have seen is for the UBI to just replace our current welfare programs.

As to your response to John and deeper social issues, it is a slippery slope argument and people who are ‘ignoring’ it are hardly trying to sneak anything past anyone. Only somebody predisposed to model the world the same way you do would assume that a UBI would result in the outcomes you predict, it is perfectly reasonable that another person would simply never think of those concerns/not think they would happen. Your future forecasting is far from indisputable facts about UBI that people only fail to mention out of a desire to mislead.

I disagree; in a few posts he clearly states that the free market won’t/can’t take care of specific groups of people, which is a statement about idealized free markets. He then uses it to mean “our economy” in a different way, which is where the switch comes in, because he then implicitly damns “free market” with the problems in our current situation when he conflates the two, despite them being different things. This is a rhetorical trick (intentional or subconscious) that allows him (and others) to use the chain of reasoning “free markets don’t do X, our system is a free market system and it doesn’t do X, case in point”. This allows him to skirt issues: notice how he doesn’t address how the actual welfare system in the US was created, which is brought up in several posts, but states why he thinks it was created and moves on as if it is true because he says it.

This is exactly what SA means when he discusses motte and bailey tactics. “Of course when I said ‘free markets’ I meant ‘our current system’”, except when you move back he goes right on making statements of what free markets can and can’t do in a purely theoretical sense, and then when called on it he claims points for having riled someone up.

It is impossible to argue honestly with someone who gets to ex post define the terms he uses in response to the criticism. “oh your objection doesn’t hold because i meant meaning #2 this time, and next time I will be using meaning #1”- wait where was the refutation of the point?

Is this from another thread? In this thread Aapje used free market once before you replied to it, and then immediately said that it was a mistake/corrected it.

Also, misuse of ‘free market’ is so rampant you should probably lower your prior that people are doing it intentionally.

I don’t think this is generally accepted to be a philosophical prerequisite to capitalism. If it is, capitalism basically hasn’t existed in the western world in the past century, and it seems nonsensical to talk about whether a UBI that won’t exist for many years to come is or is not compatible with a narrowly-defined economic system that died a century ago.

now you’re getting it!

In a reply to this post (but not in this specific subsection) I wrote

Where I clearly define free market in the first line. The response was

This is a straight jump from one person clearly and explicitly talking about free markets with the definition as up front as possible, where the reply immediately conflates the US system and its flaws and alleged remedies with a free market. Followed by a few posts down in a reply to someone else

This is basically back and forth between the definitions within a few paragraphs.

It isn’t the misuse that bugs me, it’s the impossibility of arguing against someone when you clearly state X, and they immediately move on to Y.

You asked what would be given up that wasn’t already given up with accepting taxation. A fair number of proponents of welfare framed it as “getting people back on their feet so they can get back into the markets in a healthy way”. UBI separates us from that second portion, by tying it to existence, not need, it is no longer that capitalism/free markets are an ideal to get back toward but that capitalism needs a permanent structure around it.

It seems clear that some people here disagree with my premises (which is fine), but instead of merely debating those, I get accused of being disingenuous (this is not so fine).

My belief is that a true free market/pure capitalism is impossible due to (literally) natural limitations. So any attempt to achieve a free market by laissez faire will fail and will result in outcomes that are further away from the free market than a regulated system (note that this is not a claim that we currently have a system that maximizes the free market as much as is realistically possible).

My belief is that our current system has adopted the key elements of the free market to a decent extent, primarily by having buyers and sellers relatively freely choose how much to consume/produce and who to buy from/sell to. As such, I think it is fair to call our system (partly) capitalist.

My claim is that a UBI preserves the ability of consumers to decide, based on their preferences, how to spend their money and preserves the ability for producers to decide what to produce. As such, these key capitalist features remain when you implement a UBI. Of course, if you consider our current system insufficiently capitalist due to regulation, then a lack of movement to more laissez faire is objectionable in itself. You can also argue that it is even less of a free market and object to that*. However, my argument was on a more fundamental level: is a system based around a UBI necessarily radically different when it comes to the key features that make me (and a lot of other people) use the word ‘capitalism’ to describe (part of) our current system? My argument is that it doesn’t have to be.

Of course, it is correct that if you skim off 100% of the profit of producers, they become disincentivized to produce, no different from taxing 100% of profits. However, the proposals for UBIs tend to consist of redistributing only part of the GDP via this mechanism, not all of it. So there could still be differences in buying power, which makes successful producers more wealthy and thus better off.

In the theoretical situation where people are utterly unable to produce any value with their labor and in the absence of other reward systems than profits for producers, a UBI would presumably result in skimming off 100% of the profits, and at that extreme, capitalism no longer incentivizes producers to produce.

However, it is far from a given that widespread use of robot labor that will drive many people’s earning power below acceptable levels, will also drive the value of their labor below the levels that are sufficient to incentivize market behavior. Even if that is the case, I see other reward systems as a potentially fruitful avenue, given that humans are not economically rational.

I would also argue that in a situation where no one produces any value with their labor (everything is run by robots, including the entire production pipeline to make robots), capitalism as a way to incentivize people for their labor is not applicable anyway (since there is no labor necessary). If you still demand capitalism in such a situation, you have lost sight of what capitalism is supposed to do, IMO.

And to make my position clear: I am not arguing for a UBI per se. I am merely arguing for not dismissing it as an option.

* Of course, capitalism is merely a tool to achieve a goal and should not be a goal in itself, IMHO

That’s an interesting claim. I can understand the claim that a true free market would not be ideal, but why is it impossible? Could you explain?

I am using a definition of free market that requires the absence of barriers of entry, inequalities of bargaining power, information asymmetry, etc, etc. Basically, anything that enables raising the market price of a good or service over marginal cost.

The simple fact that resources are not unlimited and not of equal quality already causes these distortions. IMO, these distortions are self-reinforcing, which is why I don’t believe in laissez faire, because I believe that it will result in the system spiraling towards a situation where a small group of people own everything and have power over everyone.

Basically, I think that markets are unstable, not least because the goal of the actors is to create market inefficiencies that benefit them. They will make use of any existing distortions to create a more distorted market.

Hence I believe that unregulated markets cannot exist.

However, as I also don’t believe that humans are homo economicus, I believe that humans have some tendency to self-regulate (in ways that conflict with the free market), which is presumably what you depend on for your economic beliefs. However, I believe that this self-organizing ability is insufficient for a highly complex economy.

It sounds to me as though you are using “free market” to mean something more like “perfect competition.” They are not the same thing.

Information asymmetry, to take one of your points, is one of the reasons why a free market does not produce as good an outcome as could be produced by a perfectly wise central planner with unlimited ability to control people–why its outcome is not efficient in the usual sense. That doesn’t mean the market is impossible, merely that a market fitting the simple stylized model that economists sometimes start with is.

UBI is not about the abolition of property rights. It’s about raising taxes. In a sense, raising taxes is an incremental abolition of property rights, because the state takes some of the benefits of your property, but we already have taxes, we’re surviving them, if you can call this living.

Future automation UBI is consistent with capitalists being extremely absurdly rich and the rest of us being merely absurdly rich (by 2017 standards). It’s not straight-up commie, just redistributionist.

@suntzuanime

Agreed. Maybe a century from now everyone has at least two or three robot ~~slaves~~ servants, and an additive manufacturing machine, but really rich people own asteroids and small seastead states or something.

It’s perfectly consistent with the idea of the social market economy. Certain conservatives (and as a consequence US liberals, to the chagrin of actual socialists) like to call that socialism or even stealth communism, but it really isn’t (I seem to remember the Dutch PM or someone getting outraged when Bernie called their system socialist). Sweden’s model is basically this, and it has some of the best protected private property rights in the world, and the easiest to start businesses, at least according to the Heritage Foundation. 90% of resources are privately owned in Sweden according to Wiki, but they have a big welfare state and strong union rights at the same time.

Calling government doing things with wealth “socialism” or “communism” is just the right wing version of when extreme leftists call doing things with borders “fascism” or “nazism”.

Not sure about this part though:

“In a sense” is being used like “there is an argument” or “one might suggest”, I’m using it to raise a point I feel I should address but do not necessarily endorse.

Fair ’nuff.

If we’ve reached the point where the source of 99.9+% of all wealth is generated from AI, then the taxes that are collected to pay the UBI are coming exclusively from the AI owners.

I mean I guess there’s no way to hash this out without a debate on “taxation = theft” but it seems to me that if the board of directors of google is being forced, against their will, to perpetually fund the entire existence of everyone else in society, that doesn’t really sound like any form of capitalism I would recognize.

We already have a system where one group of people (workers and capital owners) is forced to fund the existence of another group of people (welfare/social security recipients). It’s arguably not unworkable.

The automation future is one in which 99.9% of all wealth is generated by capital. This isn’t necessarily all Google, there are a wide variety of corporations that have valuable capital. And even Google has a wide variety of stockholders: you’re not taxing the board of directors, you’re taxing the stockholders, which is a broader base.

@Matt M

They’d only be funding the baseline level of income, which is kind of what companies do with their wages, only now it is applied collectively. Given the existence of private property and a market (therefore capitalism), people would still be able to use that money over time to purchase their own capital with which to create wealth above that base level. No technology can remove the advantage of scarcity in land, and the different uses of it. Even if everything is so automated that human labor is useless, differences between applications of automated capital would lead to differences in wealth generation. Even if labor is reduced to telling a bunch of AGI robots to do shit on a piece of property, the difference in what you tell it to do and where would create opportunities for leverage.

Imagine everyone is on universal welfare, but then someone pools a certain amount of welfare to buy shares, or to buy capital and land directly, and have their robots build something unique other people would be interested in. What if you buy shares in a seasteading resort, make it rich, and then decide to enter the asteroid mining business?

AGI doing everything humans can do will lead to humans ceasing to be a means of labor, but human desires are still going to be acting on the AGI*. If we’ve designed it to be friendly, then every model does what we tell it to, automating the processes we want it to automate; and what we want, our desires, are going to matter as regards how much value the final result has.

*Liability is going to be YUGE.

Thank you Scott. I really enjoyed this post.

One thing popped out at me:

Say we do this and, at the end of the day, find that the underlying human value function is really that we’re just a bunch of dicks, and what progress we’ve made in the past has all been a fluke from the occasional groups of humans acting in non-dickish ways: the occasional lit matches in a sea of darkness, if you will. So we devise a superintelligence that runs everything now, perfectly and effectively, by our dickish values…

It’s somewhat likely that humans enjoy being dicks, but we really don’t enjoy other people being dicks to us. So the equilibrium in this case probably wouldn’t involve just letting people be dicks at each other. Maybe it would create something else for us to be dicks at, to get it out of our systems.

As far as I can tell, this is half of the function of Siri.

And 75+% the function of videogames?

Of course, the busybody moralists want to take that away from us too…

How can these things be called degrading?

I guess it must be awkward for the people designing responses, but it seems like an oddly petty thing to get upset over.

Sexual harassment is supposed to be unwanted sexual advances. A machine cannot “unwant” anything, any more than it can want anything. So there’s literally nothing you could do or say to a machine which would constitute sexual harassment.

The person anthropomorphizing SIRI isn’t the guy who says “SIRI suck my cock,” it’s the writer who gets (faux?) offended on SIRI’s behalf. It’s no different than writing the same thing on a notepad document and deleting it.

*Which particular* humans to watch?

And human values in what context?

What we view as “human values” today are distorted by operating in an environment where there are many other agents of comparable power. But a superintelligence unleashed should be able to gain a degree of control over the world equivalent to that of a Civ player. Civ players tend to rule as literal God-Kings.

The very best chess players have historically maxed out close to an Elo rating of 3000. The very best chess engines like Stockfish now perform at around 3300-3400. It has been theorized that truly optimal chess play sets in at around 3600.
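To put those rating gaps in perspective, here is a quick sketch using the standard logistic Elo expected-score formula, E = 1 / (1 + 10^((R_opponent − R_player) / 400)). The formula is the usual textbook one rather than anything stated above, the function name is mine, and the ratings plugged in are just the rough figures from this comment.

```python
def expected_score(player_rating, opponent_rating):
    """Expected score (win = 1, draw = 0.5, loss = 0) for the player
    under the standard logistic Elo model with a 400-point scale."""
    exponent = (opponent_rating - player_rating) / 400
    return 1 / (1 + 10 ** exponent)


# Rough figures from the comment above (approximate, for illustration only).
human_peak = 3000
engine = 3400
theoretical_optimum = 3600

engine_vs_human = expected_score(engine, human_peak)
optimum_vs_engine = expected_score(theoretical_optimum, engine)
```

A 400-point gap corresponds to an expected score of 10/11, or about 0.91 points per game for the stronger side, so on these numbers an engine would be expected to take roughly nine points out of ten from the best humans, while a hypothetical 3600-rated player would score around 0.76 against the engine.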

Go is vastly more complex than chess, so humans have been able to explore a much lesser percentage of its possibility-space due to cognitive constraints.

I noticed right away that AlphaGo would do more for Go than all the chess engines had done for chess. It’s an empirical fact that human grandmasters do not have much difficulty understanding what the top machines are doing when they play each other, and for any given game, a small number of queries to the engine logs is sufficient for the GMs to feel that the machines’ play makes sense. Human GMs play chess in a way that is mildly influenced by computer experience: the margin of draw is now known to be larger so that more positions are defensible than had previously been appreciated, certain 5-piece endings have been re-evaluated, and certain openings are no longer played because they are known to be bad, but a GM from the 60’s observing the play of today’s top players would find it quite recognizable (much less difference between 1967 and 2017 than between 1917 and 1967, and the difference between 1867 and 1917 is far larger). We know now that 6- and 7-piece pawnless endings are far beyond human ken but they play an absolutely negligible role in real games between either computers or humans.

On the other hand, AlphaGo plays a game with which the top human players are not familiar, and this was to be expected from the nature of its algorithm.

Reading point 8 I got Blindsight chills.

Clearly we just need to genetically engineer some vampires to explain the opaque reasoning of the AI!

I think you’re reading too much into the results of that IGM poll. For one, there is a world of difference between widespread technological unemployment and stagnant/reduced wages for a portion of the income distribution. Widespread technological unemployment is the big concern I hear from futurists (you mention it yourself in point 2), but wages adjusting to a new equilibrium is exactly the opposite of unemployment.

Two, stagnant median wages in the US may be less a matter of robots taking our jobs and more a matter of technology and globalization (which is facilitated by technology) leading to equalization between low-skilled third world labor and low-skilled first world labor. In other words, the drop in the wages of first-world low-skilled labor is in large part the result of (technology-enabled) competition from third-world labor, not robots. But once equalization is more or less complete, we might expect wages to rise across the globe as technology/capital (robots) increases worker marginal productivity. Furthermore, consider this alternative framing of wage growth in the face of automation: over the past fifty years, despite great increases in technology/automation, the global median wage has skyrocketed.

Funny you should mention WV truckers being retrained as coders — my husband used to work for a tech company in WV. They paid really badly and treated their workers like crap, which they could get away with because it was West Virginia and unemployment was so high. No one would quit because there weren’t any other jobs to be had; the feeling was that, as a high school graduate in WV, you were dang lucky to have that job and shouldn’t complain even if it didn’t actually pay the bills.

I’m not sure this is the technological utopia we all dream of, in other words. You can retrain truckers as coders, but if unemployment is high among those groups, and if their skills aren’t rare enough to be in high demand, they’re going to be paid at least slightly less than what they were as truckers.

Smart & Final is a discount grocery chain founded by Abraham Haas, father of Walter A. Haas of the Levi Strauss fortune and the Haas School of Business at UC Berkeley.

Uh oh, is there really any chance this is false? I mean, usually I believe my guess is probably pretty damn close, but by no means always. If that’s just me, I’ve suddenly got a lot of priors to reevaluate.

J. Storrs Hall suggests that the inability to perfectly model our own thought processes is fundamental to what we think of as free will.

Free will is the god of the gaps of human consciousness. It’s just a catchall for the stuff we can’t explain. This is blindingly obvious unless you are under the effects of motivated cognition.

Sure, but as I read Hall he goes further, suggesting that there is an advantage for minds that are structured that way, that they are more effective than minds that can perfectly model their own functioning.

If true, this would have consequences regarding hard AI takeoff.

I’ve been trying to integrate Hall’s thesis with Dennett’s Varieties of Free Will Worth Wanting, but it’s all really hard to get a grip on.

That panel though. White dude. White dude. White dude. White dude. White dude.

if you watch an NBA game it’s black dude black dude white dude black dude black dude

Obviously the conference was structurally racist and sexist. But do you feel that’s a problem? Is there a feminist or an Afrocentric approach to benevolent AI?

Warning: further making fun of people for their race and gender, without attempting to tie it in to substantive issues, will result in a ban.

None of the responses so far have been productive and further responses along this line will result in a ban.

On the “Superintelligence: Science or Fiction” panel, a bunch of smart famous people publicly agreed that the timespan between the advent of approximately-human-level* AI and superintelligence would be somewhere between a few days and a few years. This is good. But was there anything like a survey on when approximately-human-level AI would be reached? I’m always interested in updates on those predictions. Particularly with DeepMind aiming in a very practical and near-term sense at rat-level AI.

I was also overjoyed to see Fun Theory specifically mentioned on the panel.

*Obviously there won’t be such a thing as exactly human-level AI, it’s just a qualitative threshold point that’s easy to talk about, and the panelists all understand this.

The AI Impacts team (Katja Grace et al) have done a formal survey of hundreds of AI researchers about this kind of thing. They will publish their results soon.

Please tell us the results when they come out.

It is often stated that it might be difficult to infer our values because we are irrational or lack computational power, e.g. an AI observing a weak chess player may learn that he likes to lose because he made some losing moves. This is a fair attack on IRL but it looks soluble.

An issue that is more problematic because it strikes at our potential misunderstanding about morality is the following. Imagine an AI observes people through their daily routines and interactions with other people. It learns that people avoid stealing and doing other bad stuff. It hypothesises that the average human does not like stealing. However, it then finds out that most people commit small illegal acts, like pirating or going over the speed limit, when no one is looking. They even commit great atrocities when they are sure they will not be punished, like raping during war, when far from judicial powers.

Thus the AI learns the truer, more cynical values of humans. Be selfish as long as no one can punish you. If your actions are observable, adhere to social contracts. Use morality as a signal of willingness to cooperate and trustworthiness; do not consider it as an end in itself.

See, this is why one might desire some sort of editing power. I wouldn’t mind a computer observing my actions and deriving a system of morality from them, but I would want to see what moral rules it came up with, and edit them. You know, “Delete the part about treating people more kindly if they are more attractive, and where you decided it was moral to yell at my children provided I didn’t get a good night’s sleep the previous night.” Can we assume a computer would be capable of this? I remember Leah Libresco wrote a post some time ago suggesting that such a computer-human team would be able to come up with better moral rules than either of us would alone.

I agree that being able to edit would be handy. However, many modern methods do not allow this. You cannot look inside a deep neural network and tweak it a bit because you want to add an exception to the rule it found. Besides, vanilla inverse reinforcement learning is meant to solve the value problem for us. If we know which values to include and how, we might as well get rid of IRL and write the values ourselves.

Getting AI to do the moral philosophy for us/with us is a solution but this is not something you can work on today (or any time soon), so AI researchers are not too eager to discuss this approach.

Adversarial training methods are sort of an “edit what the net learns” trick, aren’t they?

ISTR an educational example in one paper: one net was trained to classify images, and another net was trained to try to find “mistakes” by adding small (in L2 norm) perturbations to training set data which would cause the classification to change. The first net can then be trained to avoid those mistakes. We know a priori that adding a faint static to an image of a bird doesn’t make it look like a couch instead, but if you naively train a classifier with a bunch of bird pictures and couch pictures then there end up being exploitable weaknesses which an adversary could use to change the output, and by using an adversarial training process we can eliminate that one category of weakness.
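A toy sketch of that idea, under heavy simplifying assumptions: instead of two neural nets, this uses a single linear classifier on made-up 2-D data, where the worst-case perturbation of a given L2 norm has a closed form, so no second "adversary" net is needed. All names (`worst_case_perturbation`, `train`, `robust_accuracy`) and the data are hypothetical; it is not the method from the paper being recalled.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy stand-in for "bird vs couch": two Gaussian blobs, labels -1 and +1.
n = 200
X = np.vstack([rng.normal(-1.0, 0.5, (n, 2)), rng.normal(1.0, 0.5, (n, 2))])
y = np.concatenate([-np.ones(n), np.ones(n)])


def worst_case_perturbation(w, y, eps):
    """Perturbation of L2 norm eps that most reduces the margin y * (w @ x + b).

    For a linear model this is a step of size eps against the weight vector,
    signed by the label. (If w is all zeros the perturbation is zero.)
    """
    direction = w / (np.linalg.norm(w) + 1e-12)
    return -eps * y[:, None] * direction[None, :]


def train(X, y, eps=0.0, steps=500, lr=0.1):
    """Logistic regression by gradient descent on adversarially shifted inputs.

    eps=0.0 gives ordinary training; eps>0 trains on worst-case inputs.
    """
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(steps):
        X_adv = X + worst_case_perturbation(w, y, eps)
        margins = y * (X_adv @ w + b)
        # Derivative of mean log(1 + exp(-margin)) with respect to the score.
        coeff = -y / (1.0 + np.exp(margins))
        w -= lr * (X_adv.T @ coeff) / len(y)
        b -= lr * coeff.mean()
    return w, b


def robust_accuracy(w, b, X, y, eps):
    """Accuracy when every point is shifted by its worst-case perturbation."""
    X_adv = X + worst_case_perturbation(w, y, eps)
    return float(np.mean(y * (X_adv @ w + b) > 0))


w_plain, b_plain = train(X, y, eps=0.0)
w_adv, b_adv = train(X, y, eps=0.5)

clean_acc = robust_accuracy(w_plain, b_plain, X, y, eps=0.0)
attacked_acc = robust_accuracy(w_plain, b_plain, X, y, eps=0.5)
print("plain model, clean inputs:   ", clean_acc)
print("plain model, attacked inputs:", attacked_acc)
print("adv-trained, attacked inputs:", robust_accuracy(w_adv, b_adv, X, y, eps=0.5))
```

On data this simple, adversarial training may change very little; the point is only the shape of the loop, where the model is repeatedly shown the perturbed inputs that hurt it most and then updated on them.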

Regarding the economic questions:

1. Median wages: whether they have grown isn’t a settled question, and framing it this way is very misleading. Individual median wages might have stagnated, but that isn’t the whole ball game. Median household income (inflation adjusted) is up 25% since the late 1960s, and median household size has dropped from ~3.3 to ~2.6 in that span. So instead of 3.3 people living on 40k a year, or 12k per person, 2.6 people are living on 50k a year, or 19k per person. That is a ~58% increase in income per person per household, which is a very different picture than stagnant wages. Some (though not all) median wage statistics also fail to count rising benefits (health insurance, vacation etc) and give a misleading picture on their own.

Now there are arguments against using household wages alone, and there are arguments against using individual wages/compensation; presenting either on its own is misleading.
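The per-person arithmetic in point 1 can be checked with a few lines. The inputs are the rounded, illustrative figures used in that point (40k and 50k household income, 3.3 and 2.6 people per household), not looked-up statistics, and the variable names are made up for this sketch.

```python
# Rounded illustrative figures from point 1 (assumptions, not official data).
income_then, size_then = 40_000, 3.3   # late 1960s
income_now, size_now = 50_000, 2.6     # recent

per_person_then = income_then / size_then
per_person_now = income_now / size_now

household_growth = income_now / income_then - 1
per_person_growth = per_person_now / per_person_then - 1

print(f"per person then: {per_person_then:,.0f}")
print(f"per person now:  {per_person_now:,.0f}")
print(f"household income growth:  {household_growth:.1%}")
print(f"per-person income growth: {per_person_growth:.1%}")
```

With these inputs the per-person increase comes out between 58% and 59%, in line with the rough figure quoted in point 1, while the household-level increase is 25%.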

2. Re Horses: The decline of the horse is either wildly overstated or wildly understated. First, the overstated case: the invention of the car put horses out to pasture (sorry), but it was also part of a trend that increased the overall number of animals in the employ of humans. Populations of domesticated dogs, cats, cattle, sheep, goats, pigs, chickens etc have increased dramatically (even adjusting for human population) since the introduction of the car. Implying that because the car knocked the horse out of employment it therefore decreased net employment is a fallacy of composition. It would be like looking at the end of WW2, seeing military employment plummet, and assuming that total employment must likewise drop (it did, but briefly).

The understated case is that some of the above animals live totally shit lives, arguably shittier than non existence, and the future for humans is as bleak as it is for caged chickens.

I think the danger is overstated. Employment rose during the post-WW2 period, hitting its peak around 2000 in terms of labor force participation. Household incomes rose over this period, and while some lament that it “Takes two incomes now”, I see very few of those same people living a 1960s lifestyle (their houses are larger, they have 2 cars, cable, high speed internet access at home, multiple smart phones in the family).

The major caveat is that since 2000 household wages have stagnated, although when you consider that major military action and a major financial crisis occurred during that period, I am confident that if we could edge past those two drags then household income growth would pick up again.

I understand that you cannot comment on individual people, but I’m really wondering if you feel like many minds were changed vis-a-vis superintelligence and its risks. On a group level, would you say that (some / many of) the skeptics changed their minds? Or maybe it was more common for skeptics to convince “believers” there is nothing to worry about? Were skeptics (initially) vocal about their opposition to this “alarmism” / “fearmongering” / “Luddism”? Did skeptics / believers mingle a lot, or did they tend to clump together? Or perhaps in other words: were there heated discussions between them, or did they tend to avoid each other? Did you hear any good arguments against the idea that AI might pose an existential risk?

On a slightly different note: what vibe did you get from AI and ML practitioners about the closeness of AGI? Major challenges?

Sorry about the barrage of questions. I’m just kind of sad I wasn’t able to attend myself, and I’m really curious about the effectiveness of conferences like this at convincing entrenched “experts” and “breaking the taboo” (which sounds really great)… I understand the importance of preserving anonymity, and hope my questions can be answered on a group level in a way that preserves that. Thank you very much if you take the time!

My impression was that among the people who had really thought about the issue, collective minds were changed in a way that didn’t require anyone’s individual mind to be changed – ie the people who were concerned about risk became more vocal about it and started feeling like they were the majority, and the people who weren’t concerned about risk became less vocal about it and started feeling like they weren’t.

Among people who hadn’t thought about the issue, I feel like they got the impression that the people who had thought about the issue were concerned about risk, and updated in that direction.

Just out of rank curiosity, was there a sense at the conference that Eliezer gets any credit for his role in getting the ball rolling in this area? It’s a good sign that he no longer has to be the one pushing these ideas, but it’s just jarring to me that I haven’t seen his name a single time in any reporting on this event. I’m not sure whether this is the type of situation where people are intentionally avoiding associating his name with the conference, or if his name is not being referenced for the same reason physicists don’t feel the need to cite Newton in every paper.

Bostrom basically wrote Superintelligence so that you could cite the ideas without getting into academic politics about whose they were.